Image-to-image translation transforms an input image into a new, visually coherent output, typically with deep learning models such as generative adversarial networks (GANs). It supports tasks such as style transfer, colorization, and object transfiguration. The sections below compare it with neural style transfer and outline where each technique fits best.

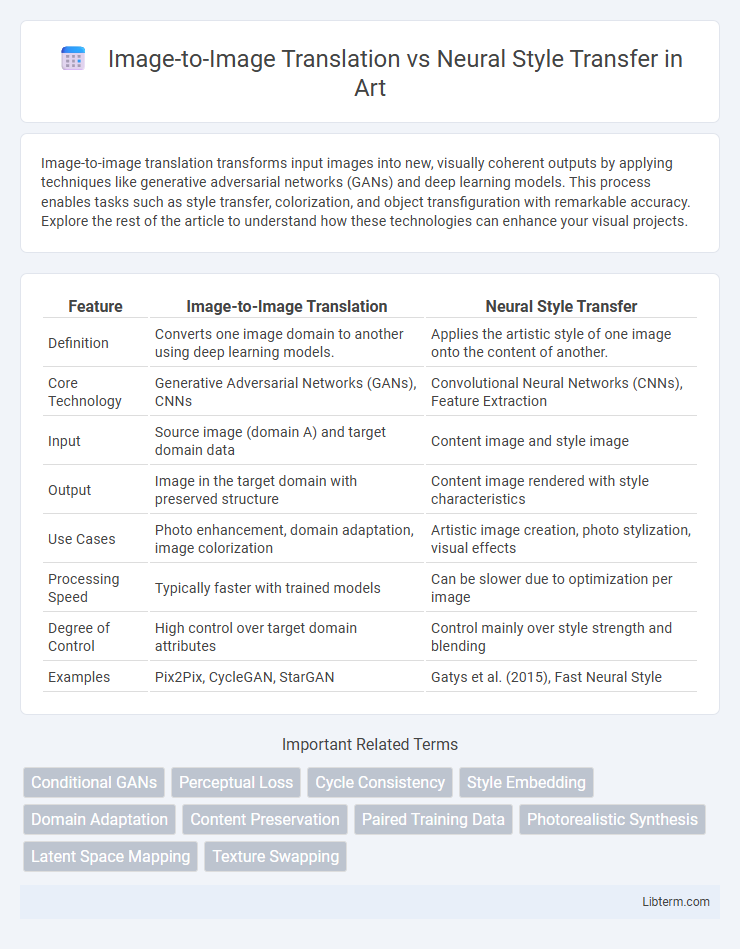

Table of Comparison

| Feature | Image-to-Image Translation | Neural Style Transfer |
|---|---|---|
| Definition | Converts one image domain to another using deep learning models. | Applies the artistic style of one image onto the content of another. |
| Core Technology | Generative Adversarial Networks (GANs), CNNs | Convolutional Neural Networks (CNNs), Feature Extraction |
| Input | Source image (domain A) and target domain data | Content image and style image |
| Output | Image in the target domain with preserved structure | Content image rendered with style characteristics |
| Use Cases | Photo enhancement, domain adaptation, image colorization | Artistic image creation, photo stylization, visual effects |
| Processing Speed | Typically faster with trained models | Can be slower due to optimization per image |
| Degree of Control | High control over target domain attributes | Control mainly over style strength and blending |
| Examples | Pix2Pix, CycleGAN, StarGAN | Gatys et al. (2015), Fast Neural Style |

Introduction to Image-to-Image Translation and Neural Style Transfer

Image-to-Image Translation involves converting images from one domain to another using deep learning models like GANs, enabling tasks such as photo enhancement, colorization, and semantic segmentation. Neural Style Transfer applies a convolutional neural network (CNN) to blend the style of one image with the content of another, producing artistic renditions that combine texture and form. Both techniques leverage sophisticated neural architectures but serve distinct purposes: Image-to-Image Translation emphasizes domain adaptation, while Neural Style Transfer focuses on artistic expression.

Core Concepts: Defining Image-to-Image Translation

Image-to-Image Translation involves converting an input image into a corresponding output image, preserving key structural elements while altering style, content, or domain-specific features through deep learning models such as Generative Adversarial Networks (GANs). It enables seamless transformation across various applications like converting sketches to photos, day to night scenes, or maps to satellite images by learning the mapping from one visual domain to another. Unlike Neural Style Transfer, which blends the style of one image with the content of another, Image-to-Image Translation focuses on producing realistic and contextually accurate outputs based on paired or unpaired training data.
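For the paired case, one widely used formulation is the Pix2Pix objective, which combines a conditional GAN loss with an L1 reconstruction term. The symbols are not defined in the text above and are introduced here for illustration: x is the input image, y the paired target image, z a noise input, G the generator, D the discriminator, and λ a weighting factor.

```latex
\begin{aligned}
\mathcal{L}_{\text{cGAN}}(G, D) &= \mathbb{E}_{x,y}\big[\log D(x, y)\big]
  + \mathbb{E}_{x,z}\big[\log\big(1 - D(x, G(x, z))\big)\big] \\
\mathcal{L}_{L1}(G) &= \mathbb{E}_{x,y,z}\big[\lVert y - G(x, z) \rVert_1\big] \\
G^{*} &= \arg\min_{G} \max_{D} \; \mathcal{L}_{\text{cGAN}}(G, D) + \lambda \, \mathcal{L}_{L1}(G)
\end{aligned}
```

The adversarial term pushes outputs toward realism, while the L1 term keeps each output close to its paired target, which is how the structure of the input is preserved.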

Understanding Neural Style Transfer

Neural Style Transfer is a deep learning technique that recomposes images by separating and recombining content and style representations using convolutional neural networks, enabling the transformation of a source image's visual style to match that of a target artwork. Unlike image-to-image translation, which often relies on paired datasets to learn mappings between different image domains, neural style transfer operates on single images and emphasizes artistic style synthesis without needing extensive training data. Key algorithms, such as Gatys et al.'s method, utilize feature extraction from pretrained networks like VGG19 to optimize stylized output, making it a versatile tool for creative applications.
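The separation of content and style can be sketched in a few lines. In Gatys-style methods, content is compared directly on feature maps, while style is compared through Gram matrices (channel-to-channel correlations) of those feature maps. The sketch below is a minimal pure-Python illustration, assuming the feature maps have already been extracted and flattened to a channels-by-positions layout; the function names and the simple normalization by position count are assumptions for this example, not the exact scaling of any published implementation.

```python
from typing import List

# Rows are channels, columns are flattened spatial positions.
Matrix = List[List[float]]


def gram_matrix(features: Matrix) -> Matrix:
    """Inner products between every pair of channels, averaged over positions."""
    n_positions = len(features[0])
    return [
        [sum(a * b for a, b in zip(row_i, row_j)) / n_positions for row_j in features]
        for row_i in features
    ]


def mean_squared_error(a: Matrix, b: Matrix) -> float:
    """Mean of squared element-wise differences between two equal-shaped matrices."""
    diffs = [(x - y) ** 2 for row_a, row_b in zip(a, b) for x, y in zip(row_a, row_b)]
    return sum(diffs) / len(diffs)


def content_loss(generated: Matrix, content: Matrix) -> float:
    """Content is compared position by position on the raw feature maps."""
    return mean_squared_error(generated, content)


def style_loss(generated: Matrix, style: Matrix) -> float:
    """Style is compared through Gram matrices, which ignore spatial layout."""
    return mean_squared_error(gram_matrix(generated), gram_matrix(style))


# Toy feature map and a copy with its spatial positions swapped.
original = [[1.0, 2.0], [3.0, 4.0]]
shuffled = [[2.0, 1.0], [4.0, 3.0]]

print(style_loss(shuffled, original))    # same channel statistics
print(content_loss(shuffled, original))  # different spatial arrangement
```

Swapping spatial positions leaves the style loss at zero while the content loss is nonzero, which shows why Gram matrices capture texture-like statistics rather than layout. A full method would minimize a weighted sum of both losses over several network layers.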

Key Differences Between Image-to-Image Translation and Neural Style Transfer

Image-to-Image Translation converts an input image into a corresponding image in a target domain, such as sketches to photos or day to night scenes, using a model trained on paired or unpaired datasets drawn from both domains. Neural Style Transfer combines the content of one image with the artistic style of another, adapting texture and color while leaving the underlying layout largely intact; in its optimization-based form it needs only one content image and one style image. In short, Image-to-Image Translation targets domain adaptation and task-specific transformations, whereas Neural Style Transfer targets artistic rendering and visual aesthetics.

Popular Algorithms and Techniques for Each Approach

Image-to-Image Translation is commonly implemented with GAN-based models such as Pix2Pix, CycleGAN, and UNIT. Pix2Pix uses a conditional Generative Adversarial Network (cGAN) trained on paired input-output images, while CycleGAN and UNIT learn mappings between image domains without requiring paired data. Neural Style Transfer primarily employs optimization-based methods like Gatys et al.'s approach, which uses a convolutional neural network (CNN) to blend content and style representations, and fast style transfer models such as Johnson et al.'s network, which apply a single feed-forward pass for real-time stylization. Both approaches build on the same deep learning foundations, with image-to-image translation focusing on domain adaptation and style transfer emphasizing creative texture synthesis.
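CycleGAN's ability to train on unpaired data rests on a cycle-consistency term: translating an image to the other domain and back should return something close to the original. The sketch below illustrates only that term, using toy callables in place of trained generators. The names `g`, `f`, `brighten`, and `darken`, and the flattened list-of-floats image type, are assumptions made for this example.

```python
from typing import Callable, List, Sequence

Image = List[float]                  # a flattened toy "image"
Mapping = Callable[[Image], Image]   # stands in for a trained generator


def l1_distance(a: Sequence[float], b: Sequence[float]) -> float:
    """Mean absolute difference between two equal-length images."""
    return sum(abs(x - y) for x, y in zip(a, b)) / len(a)


def cycle_consistency_loss(
    g: Mapping, f: Mapping, batch_x: List[Image], batch_y: List[Image]
) -> float:
    """L1 error of the X -> Y -> X round trip plus the Y -> X -> Y round trip.

    batch_x and batch_y are unpaired: no image in one batch needs a
    counterpart in the other.
    """
    forward = sum(l1_distance(f(g(x)), x) for x in batch_x) / len(batch_x)
    backward = sum(l1_distance(g(f(y)), y) for y in batch_y) / len(batch_y)
    return forward + backward


def brighten(image: Image) -> Image:   # toy X -> Y mapping
    return [p + 0.5 for p in image]


def darken(image: Image) -> Image:     # toy Y -> X mapping, exact inverse
    return [p - 0.5 for p in image]


def darken_too_much(image: Image) -> Image:  # toy Y -> X mapping, not an inverse
    return [p - 1.0 for p in image]


domain_x = [[0.25, 0.5]]
domain_y = [[1.0, 0.75]]

print(cycle_consistency_loss(brighten, darken, domain_x, domain_y))
print(cycle_consistency_loss(brighten, darken_too_much, domain_x, domain_y))
```

When the two mappings invert each other, the loss is zero; when they do not, the round-trip error is penalized. In a full model this term is added to the adversarial losses of both generators.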

Applications in Art, Photography, and Design

Image-to-Image Translation enables the transformation of images from one domain to another, such as converting sketches into realistic photos or turning day scenes into night, widely used in digital art creation, photo enhancement, and graphic design workflows. Neural Style Transfer applies the artistic style of one image onto the content of another, making it popular for producing unique artwork by blending famous painting styles with personal photographs in fine art and editorial design. Both techniques advance creative expression by automating complex image manipulation tasks, supporting artists, photographers, and designers in generating visually compelling content efficiently.

Advantages and Limitations of Image-to-Image Translation

Image-to-Image Translation excels at generating diverse and realistic output images by learning complex mappings between input and target domains, enabling applications such as photo enhancement, domain adaptation, and object transfiguration. Its limitations include a dependency on large training datasets (paired examples for supervised models, or sizable image collections from each domain for unpaired ones), significant computational cost for training, and a risk of overfitting, which reduces performance on unseen data. Unlike Neural Style Transfer, which primarily focuses on merging content and style, Image-to-Image Translation emphasizes transforming entire images to match distinct domain characteristics.

Strengths and Challenges of Neural Style Transfer

Neural Style Transfer excels at creating visually striking artwork by blending the content of one image with the style of another, making it well suited to artistic applications and customized aesthetics. Its main challenge is preserving fine content details while accurately transferring complex styles; failures often show up as artifacts or unnatural textures. Optimization-based methods are also computationally intensive because they optimize each output image individually, which makes real-time use and high-resolution output difficult, whereas feed-forward models reduce this cost at inference time.
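The cost of per-image optimization can be seen in a deliberately simplified sketch: the output starts as a copy of the content image and is updated by gradient descent on a weighted sum of two squared-error terms. The pixel-wise "style target" here is a toy stand-in (real style losses compare feature statistics from a pretrained CNN), and the function name, parameters, and default values are all hypothetical.

```python
from typing import List, Sequence


def stylize_by_optimization(
    content: Sequence[float],
    style_target: Sequence[float],
    alpha: float = 1.0,          # weight of the content term
    beta: float = 1.0,           # weight of the (toy) style term
    learning_rate: float = 0.1,
    steps: int = 200,
) -> List[float]:
    """Minimize alpha*(p - c)^2 + beta*(p - s)^2 for each pixel p by gradient descent."""
    image = list(content)  # initialize the output from the content image
    for _ in range(steps):
        # Gradient of the weighted loss with respect to each pixel.
        gradients = [
            2.0 * alpha * (p - c) + 2.0 * beta * (p - s)
            for p, c, s in zip(image, content, style_target)
        ]
        image = [p - learning_rate * g for p, g in zip(image, gradients)]
    return image


result = stylize_by_optimization(content=[0.0, 1.0], style_target=[1.0, 1.0])
print(result)
```

Every new image repeats the whole loop, and in a real method each step requires a forward and backward pass through a CNN. Feed-forward approaches move that cost into a one-time training phase. The `alpha` to `beta` ratio plays the role of the style-strength control: with equal weights, each pixel settles midway between its content and style targets.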

Choosing the Right Technique for Your Project

Image-to-Image Translation excels in converting images from one domain to another by learning complex mappings, making it ideal for applications like photo enhancement, object transfiguration, and domain adaptation. Neural Style Transfer specializes in blending content and artistic styles by optimizing image aesthetics, which suits projects aiming to create visually compelling artwork or stylized images. Selecting the right technique depends on whether the project prioritizes semantic transformation or artistic style manipulation, with factors such as input-output domain similarity and desired visual effect guiding the choice.
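As a rough aid, the guidance in this article can be encoded as a small rule-of-thumb helper. This is an illustrative sketch, not a definitive selection procedure: the function name, its parameters, and the order of the checks are assumptions, and real projects will weigh additional factors such as compute budget and output resolution.

```python
def recommend_technique(
    has_paired_examples: bool,
    has_target_domain_images: bool,
    has_single_style_image: bool,
    needs_real_time: bool = False,
) -> str:
    """Map the data a project has available to a family of techniques."""
    if has_paired_examples:
        # Aligned input/output pairs allow supervised translation.
        return "paired image-to-image translation (Pix2Pix-style)"
    if has_target_domain_images:
        # A collection from each domain, without alignment, is enough here.
        return "unpaired image-to-image translation (CycleGAN-style)"
    if has_single_style_image:
        if needs_real_time:
            return "feed-forward style transfer (Johnson et al.-style)"
        return "optimization-based style transfer (Gatys et al.-style)"
    return "no clear match; gather more data or refine the goal"


print(recommend_technique(False, True, False))
print(recommend_technique(False, False, True, needs_real_time=True))
```

The checks run from the most data-demanding option to the least, reflecting the distinction between semantic transformation across domains and artistic style manipulation.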

Future Trends in Image Transformation Technologies

Future trends in image transformation technologies emphasize the integration of Image-to-Image Translation and Neural Style Transfer through advanced generative adversarial networks (GANs) and diffusion models, enhancing realism and control in visual outputs. Emerging methods leverage multimodal learning and real-time processing to enable dynamic, personalized transformations across diverse applications such as virtual reality, gaming, and digital art. Continuous improvements in computational efficiency and AI interpretability promise broader accessibility and intuitive user experiences in image editing and creative synthesis.

Image-to-Image Translation Infographic

libterm.com
