Conditional probability measures the likelihood of an event occurring given that another event has already happened, providing a crucial tool for updating probabilities in light of new information. It plays a fundamental role in fields such as statistics, machine learning, and decision-making, where understanding dependencies between events is essential. The rest of this article compares conditional probability with posterior probability and shows where each one applies.

Table of Comparison

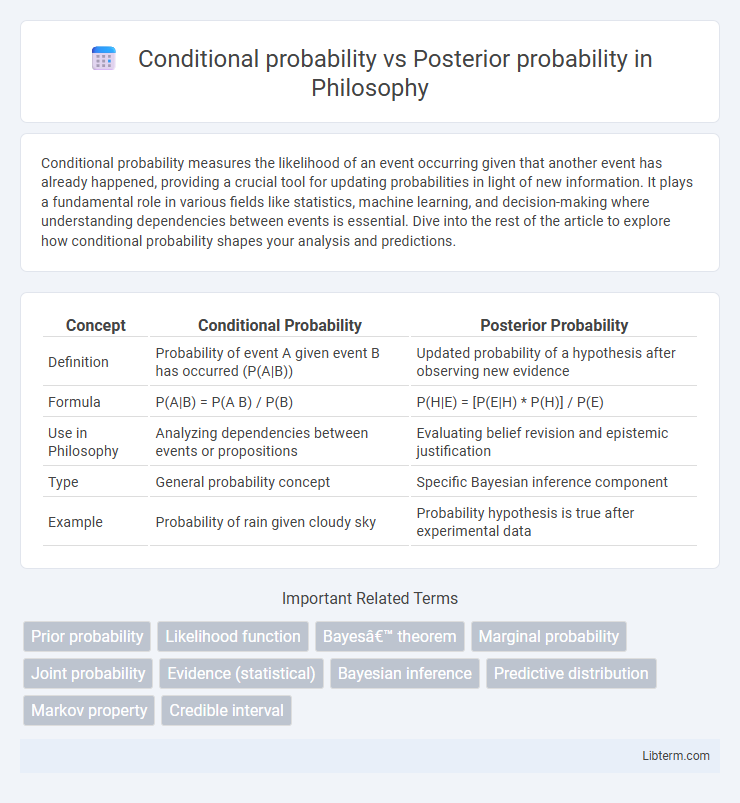

| Concept | Conditional Probability | Posterior Probability |
|---|---|---|
| Definition | Probability of event A given that event B has occurred, P(A\|B) | Updated probability of a hypothesis after observing new evidence, P(H\|E) |
| Formula | P(A\|B) = P(A ∩ B) / P(B) | P(H\|E) = [P(E\|H) * P(H)] / P(E) |
| Use in Philosophy | Analyzing dependencies between events or propositions | Evaluating belief revision and epistemic justification |
| Type | General probability concept | Specific Bayesian inference component |
| Example | Probability of rain given a cloudy sky | Probability that a hypothesis is true given experimental data |

Introduction to Conditional and Posterior Probability

Conditional probability measures the likelihood of an event occurring given that another event has already occurred, expressed as P(A|B). Posterior probability refers to the updated probability of a hypothesis or event after considering new evidence, calculated using Bayes' theorem as P(H|E). Understanding these concepts is fundamental in fields like statistics, machine learning, and decision-making under uncertainty.

Defining Conditional Probability

Conditional probability quantifies the likelihood of an event occurring given that another related event has already happened. It is defined as P(A|B) = P(A ∩ B) / P(B), where P(A ∩ B) is the probability that both A and B occur and P(B) > 0. This concept forms the foundation for calculating posterior probability, which updates the probability of a hypothesis based on new evidence using Bayes' theorem.
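
As a minimal sketch of this definition, the snippet below enumerates a small sample space and computes P(A|B) as P(A ∩ B) / P(B). The two-dice setup and the names `prob`, `conditional`, `sum_is_eight`, and `first_is_even` are illustrative assumptions, not part of the original text.

```python
from fractions import Fraction
from itertools import product

# Illustrative sample space: ordered outcomes of two fair six-sided dice.
outcomes = list(product(range(1, 7), repeat=2))


def prob(event):
    """Probability of an event (a predicate on outcomes), all outcomes equally likely."""
    favorable = sum(1 for o in outcomes if event(o))
    return Fraction(favorable, len(outcomes))


def conditional(event_a, event_b):
    """P(A|B) = P(A ∩ B) / P(B); undefined when P(B) = 0."""
    p_b = prob(event_b)
    if p_b == 0:
        raise ValueError("P(B) must be positive")
    p_a_and_b = prob(lambda o: event_a(o) and event_b(o))
    return p_a_and_b / p_b


def sum_is_eight(o):
    return o[0] + o[1] == 8


def first_is_even(o):
    return o[0] % 2 == 0


p_a = prob(sum_is_eight)                               # unconditional P(A)
p_a_given_b = conditional(sum_is_eight, first_is_even)  # P(A|B)
print(p_a, p_a_given_b)
```

Under these assumptions, knowing that the first die is even raises the probability of a total of eight from 5/36 to 1/6, which is the sense in which conditioning updates a probability.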

Understanding Posterior Probability

Posterior probability represents the updated likelihood of an event occurring after new evidence is considered, refining prior beliefs with observed data. It is calculated using Bayes' theorem, which combines the prior probability and the likelihood of the observed evidence given the event. Understanding posterior probability is crucial in fields like machine learning and statistical inference, as it quantifies how new information impacts the probability of hypotheses.

Mathematical Formulation: Conditional vs Posterior

Conditional probability, written P(A|B), quantifies the likelihood of event A given that event B has occurred: P(A|B) = P(A ∩ B) / P(B), where P(B) > 0. Posterior probability, written P(θ|D), is the probability of a hypothesis θ after observing data D, given by Bayes' theorem: P(θ|D) = [P(D|θ) * P(θ)] / P(D). A posterior is itself a conditional probability; the key difference is that it is built explicitly from a prior P(θ) and a likelihood P(D|θ), whereas the general definition of P(A|B) relates any two events and does not require a prior distribution over hypotheses.
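
The posterior formula can be sketched for a hypothesis that takes only a few discrete values. The example below is an assumption made for illustration: a coin whose bias is one of three candidate values, a uniform prior, and an observed sequence of three heads and one tail. The function name `posterior` is hypothetical.

```python
from fractions import Fraction


def posterior(prior, likelihood):
    """Return the posterior for each hypothesis value, given prior and likelihood dicts."""
    # P(D) is the sum over all hypothesis values of P(D|value) * P(value).
    evidence = sum(likelihood[t] * prior[t] for t in prior)
    if evidence == 0:
        raise ValueError("P(D) must be positive")
    return {t: likelihood[t] * prior[t] / evidence for t in prior}


# Candidate values for the probability of heads (illustrative).
thetas = [Fraction(1, 4), Fraction(1, 2), Fraction(3, 4)]

# Uniform prior: each candidate is equally plausible before seeing data.
prior = {t: Fraction(1, 3) for t in thetas}

# Likelihood of one specific sequence with three heads and one tail.
likelihood = {t: t**3 * (1 - t) for t in thetas}

post = posterior(prior, likelihood)
for t in thetas:
    print(f"theta={t}: prior={prior[t]}, posterior={post[t]}")
```

With this toy data the posterior shifts weight toward the largest candidate bias, and the three posterior values still sum to one because dividing by P(D) normalizes them.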

Key Differences Between Conditional and Posterior Probability

Conditional probability measures the likelihood of an event given that another event has occurred, expressed as P(A|B), focusing on the probability of A under the condition of B. Posterior probability, derived from Bayes' theorem, updates the probability of a hypothesis based on new evidence, computed as P(H|E) by combining prior probability and the likelihood of the evidence. The key difference lies in conditional probability's general assessment of dependency between events, while posterior probability specifically incorporates prior beliefs and evidence to update the probability of a hypothesis.

Applications of Conditional Probability

Conditional probability is widely applied in various fields such as medical diagnostics, where it helps calculate the likelihood of a disease given specific symptoms or test results. In risk assessment and finance, it is used to update the probability of an event based on new information, improving decision-making under uncertainty. Machine learning models often rely on conditional probability to determine class membership probabilities based on observed features, enhancing prediction accuracy.
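
In practice, a class-membership probability of this kind is often estimated from labeled counts. The sketch below uses a tiny invented dataset of (feature, label) pairs; the data, the labels, and the function name `estimate_conditional` are assumptions made purely for illustration.

```python
from collections import Counter

# Toy labeled observations (feature, label); invented for illustration only.
observations = [
    ("has_link", "spam"), ("has_link", "spam"), ("has_link", "ham"),
    ("no_link", "ham"), ("no_link", "ham"), ("no_link", "spam"),
    ("has_link", "spam"), ("no_link", "ham"),
]


def estimate_conditional(pairs, label, feature):
    """Estimate P(label | feature) as count(feature and label) / count(feature)."""
    feature_counts = Counter(f for f, _ in pairs)
    joint_counts = Counter(pairs)
    if feature_counts[feature] == 0:
        raise ValueError("feature never observed; the estimate is undefined")
    return joint_counts[(feature, label)] / feature_counts[feature]


# Overall share of spam, ignoring the feature.
p_spam = sum(1 for _, lab in observations if lab == "spam") / len(observations)

# Share of spam among messages that contain a link.
p_spam_given_link = estimate_conditional(observations, "spam", "has_link")

print(p_spam, p_spam_given_link)
```

Real classifiers combine many features and usually smooth the counts, so this is only the simplest count-based version of the idea.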

Real-World Uses of Posterior Probability

Posterior probability, derived from Bayes' theorem, updates the likelihood of an event based on new evidence, making it crucial in fields like medical diagnosis where it refines disease probability after test results. In finance, posterior probability aids in risk assessment and decision-making under uncertainty by incorporating recent market data. Unlike conditional probability, which simply measures the chance of an event given another, posterior probability explicitly integrates prior knowledge with evidence for dynamic real-world applications.
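
The dynamic character of posterior updating can be sketched with two successive test results, where the posterior after the first test serves as the prior for the second. The prevalence, sensitivity, and false-positive rate below are hypothetical numbers, and the sketch assumes the two test results are conditionally independent given the true disease status.

```python
def update(prior, p_evidence_given_h, p_evidence_given_not_h):
    """One Bayes update for a binary hypothesis H after observing the evidence."""
    numerator = p_evidence_given_h * prior
    evidence = numerator + p_evidence_given_not_h * (1 - prior)
    return numerator / evidence


# Hypothetical values chosen for illustration, not clinical data.
prevalence = 0.01            # P(disease) before any test
sensitivity = 0.95           # P(positive | disease)
false_positive_rate = 0.05   # P(positive | no disease)

# First positive result: the prior is the prevalence.
after_first = update(prevalence, sensitivity, false_positive_rate)

# Second positive result: the previous posterior becomes the new prior.
after_second = update(after_first, sensitivity, false_positive_rate)

print(f"after one positive test:  {after_first:.3f}")
print(f"after two positive tests: {after_second:.3f}")
```

Under these assumed numbers, a single positive result leaves the disease probability well below one half, and only the second positive result pushes it above.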

Relationship with Bayes’ Theorem

Conditional probability measures the likelihood of an event given that another event has occurred, expressed as P(A|B). Posterior probability represents the updated probability of a hypothesis after observing new data, calculated using Bayes' Theorem as P(H|D) = [P(D|H) * P(H)] / P(D). Bayes' Theorem links conditional and posterior probabilities by updating prior beliefs (P(H)) with observed evidence (P(D|H)) to yield the posterior probability (P(H|D)).
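
The link can be made explicit with a short derivation that starts from the definition of conditional probability applied in both directions, assuming P(H) > 0 and P(D) > 0:

$$
\begin{aligned}
P(H \mid D) &= \frac{P(H \cap D)}{P(D)}, \qquad P(D \mid H) = \frac{P(H \cap D)}{P(H)} \\
\Rightarrow \quad P(H \cap D) &= P(D \mid H)\, P(H) \\
\Rightarrow \quad P(H \mid D) &= \frac{P(D \mid H)\, P(H)}{P(D)}, \qquad
P(D) = P(D \mid H)\, P(H) + P(D \mid \neg H)\, P(\neg H)
\end{aligned}
$$

The last expression for P(D) is the law of total probability over H and its complement, which is what makes the posterior computable from the prior and the two likelihoods alone.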

Common Misconceptions and Pitfalls

Conditional probability represents the likelihood of an event given that another event has occurred, often denoted as P(A|B), while posterior probability specifically refers to the updated probability of a hypothesis after observing new evidence, commonly used in Bayesian inference. A common misconception is treating posterior probability and conditional probability as interchangeable, ignoring the role of prior probabilities in posterior calculation. Pitfalls include neglecting base rates or assuming independence, which can lead to incorrect posterior estimates and flawed decision-making.
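
The base-rate pitfall can be illustrated by holding a test's accuracy fixed and varying only the prior. The numbers below are hypothetical and the function name `posterior_positive` is an assumption made for this sketch.

```python
def posterior_positive(base_rate, sensitivity, false_positive_rate):
    """P(H | positive) for a binary hypothesis H, via Bayes' theorem."""
    p_positive = sensitivity * base_rate + false_positive_rate * (1 - base_rate)
    return sensitivity * base_rate / p_positive


# Hypothetical test characteristics, held fixed across all base rates.
sensitivity = 0.9           # P(positive | H)
false_positive_rate = 0.1   # P(positive | not H)

for base_rate in (0.001, 0.01, 0.1, 0.5):
    post = posterior_positive(base_rate, sensitivity, false_positive_rate)
    print(f"base rate {base_rate:>5}: P(H | positive) = {post:.4f}")
```

In this sketch P(positive | H) is 0.9 in every row, yet P(H | positive) equals 0.9 only when the base rate is 0.5 and falls to 0.5 at a base rate of 0.1, which is why reading the likelihood as if it were the posterior can be badly misleading.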

Summary: Choosing the Right Probability Approach

Conditional probability calculates the likelihood of an event given that another event has occurred, focusing on the relationship between known events. Posterior probability updates the probability of a hypothesis based on new evidence using Bayes' theorem. Selecting the right probability approach depends on whether the problem requires assessing the chance under known conditions (conditional probability) or revising beliefs with incoming data (posterior probability).

Conditional probability Infographic

libterm.com
