Conditional probability measures the likelihood of an event given that another event is known to have occurred. It is essential for understanding how events influence each other in fields like statistics, machine learning, and risk assessment. This article compares conditional probability with prior probability and shows how the two combine in Bayesian inference.

Table of Comparison

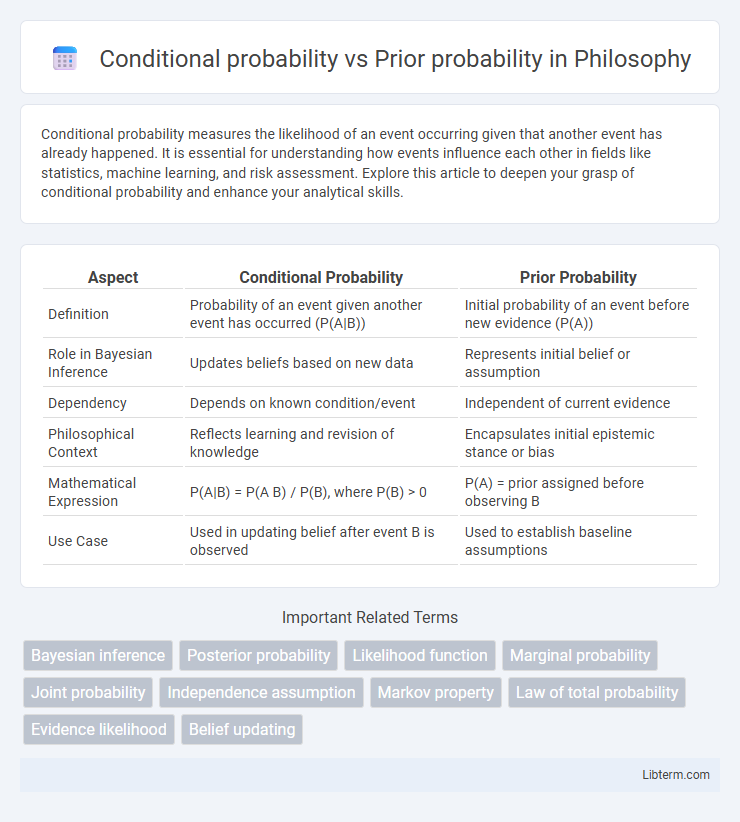

| Aspect | Conditional Probability | Prior Probability |
|---|---|---|
| Definition | Probability of an event given that another event has occurred, P(A\|B) | Initial probability of an event before new evidence, P(A) |
| Role in Bayesian Inference | Updates beliefs based on new data | Represents initial belief or assumption |
| Dependency | Depends on a known condition or event | Independent of current evidence |
| Philosophical Context | Reflects learning and revision of knowledge | Encapsulates initial epistemic stance or bias |
| Mathematical Expression | P(A\|B) = P(A ∩ B) / P(B), where P(B) > 0 | P(A), assigned before observing B |
| Use Case | Updating a belief after event B is observed | Establishing baseline assumptions |

Introduction to Conditional and Prior Probability

Conditional probability measures the likelihood of an event occurring given that another event has already happened, denoted as P(A|B). Prior probability represents the initial estimation of the probability of an event before any new evidence is considered, denoted as P(A). Understanding the distinction between conditional and prior probability is crucial for applications in Bayesian inference, where prior beliefs are updated with new data to produce posterior probabilities.

Defining Conditional Probability

Conditional probability quantifies the likelihood of event A given that a related event B is known to have occurred, written P(A|B). Conditioning on B restricts attention to the outcomes in which B occurs and asks what share of them also belong to A. This contrasts with the prior probability P(A), which reflects the likelihood of A without that additional information. In Bayesian inference, conditional probabilities are the mechanism by which prior beliefs are updated on new evidence.

Understanding Prior Probability

Prior probability represents the initial likelihood of an event before new evidence is considered, serving as a baseline in Bayesian analysis. It quantifies pre-existing knowledge or assumptions about an event's occurrence, independent of current data. Understanding prior probability is essential for correctly updating beliefs through Bayes' theorem when new information becomes available.

Key Differences Between Conditional and Prior Probability

Conditional probability measures the likelihood of an event given that another event has occurred, represented as P(A|B), while prior probability is the initial probability of an event before any new evidence is considered, denoted as P(A). The key difference is dependency on information: a conditional probability depends on the event being conditioned on, whereas a prior probability is based solely on existing knowledge or assumptions. In Bayesian inference, the prior is the starting point, and conditioning on new data is what updates it.

Mathematical Representation of Both Probabilities

Conditional probability, denoted P(A|B), is the probability of event A given that event B has occurred. It is calculated as P(A|B) = P(A ∩ B) / P(B), where P(A ∩ B) is the joint probability of both A and B, and P(B) > 0. Prior probability, denoted P(A), is the probability of event A before any additional information is considered; it serves as the baseline in Bayesian inference. The key mathematical distinction is that conditional probability incorporates the evidence by dividing by P(B), which renormalizes over the outcomes in which B occurs, while the prior does not depend on that evidence.
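As a small illustration of the formula, the sketch below enumerates one roll of a fair six-sided die. The die and the choice of events (A: the roll is even, B: the roll is greater than 3) are assumptions made for this example, not part of the definitions above.

```python
from fractions import Fraction

# Sample space: one roll of a fair six-sided die (illustrative assumption).
outcomes = set(range(1, 7))
A = {x for x in outcomes if x % 2 == 0}  # event A: the roll is even
B = {x for x in outcomes if x > 3}       # event B: the roll is greater than 3


def prob(event):
    """Probability of an event when all outcomes are equally likely."""
    return Fraction(len(event), len(outcomes))


def conditional(a, b):
    """P(a | b) = P(a and b) / P(b); undefined when P(b) = 0."""
    if prob(b) == 0:
        raise ValueError("P(B) must be greater than 0")
    return prob(a & b) / prob(b)


prior_A = prob(A)                   # P(A) before knowing anything about B
cond_A_given_B = conditional(A, B)  # P(A|B) after learning that B occurred
print(prior_A, cond_A_given_B)
```

Here the prior P(A) is 1/2, while conditioning on B narrows the sample space to {4, 5, 6} and raises the probability of an even roll to 2/3.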

Real-world Applications in Data Science

Conditional probability quantifies the likelihood of an event given that another event has occurred, crucial for predictive modeling and Bayesian inference in data science. Prior probability represents initial beliefs about event probabilities before new data, serving as the foundation for updating models in Bayesian statistics. Applications include spam filtering, where prior probabilities estimate overall spam frequency, and conditional probabilities adjust predictions based on message content features.
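The spam-filtering case can be sketched with a toy, made-up corpus. The messages, the helper names (`p_word_given`, `p_spam_given_word`), and the single-word feature are all hypothetical simplifications; a real filter would use many features and smoothed estimates.

```python
from fractions import Fraction

# Made-up toy corpus of (message text, is_spam). Not real data.
messages = [
    ("win money now", True),
    ("win a free prize", True),
    ("meeting at noon", False),
    ("lunch money owed", False),
    ("project update", False),
]

spam = [text for text, is_spam in messages if is_spam]
ham = [text for text, is_spam in messages if not is_spam]

# Prior: overall spam frequency, before looking at message content.
prior_spam = Fraction(len(spam), len(messages))


def p_word_given(word, texts):
    """Estimate P(word appears | class) as a simple frequency."""
    hits = sum(word in text.split() for text in texts)
    return Fraction(hits, len(texts))


def p_spam_given_word(word):
    """P(spam | word) via Bayes' theorem from the prior and the likelihoods."""
    numerator = p_word_given(word, spam) * prior_spam
    evidence = numerator + p_word_given(word, ham) * (1 - prior_spam)
    if evidence == 0:
        raise ValueError("word never appears, so P(word) = 0")
    return numerator / evidence


print(prior_spam)                  # prior P(spam)
print(p_spam_given_word("money"))  # updated belief after seeing "money"
print(p_spam_given_word("win"))    # updated belief after seeing "win"
```

On this toy corpus the prior is 2/5. Seeing "money" moves the estimate to 1/2, and seeing "win", which appears only in the spam messages, moves it to 1. The jump to certainty is an artifact of the tiny sample, which is why practical filters smooth their frequency estimates.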

Conditional Probability in Bayesian Inference

Conditional probability plays a central role in Bayesian inference, where it appears in two distinct forms. The likelihood is the conditional probability of the observed data given a hypothesis or model, and it quantifies how probable the data is under each candidate hypothesis. The posterior is the conditional probability of a hypothesis given the data. Bayes' theorem combines the likelihood with the prior probability to produce the posterior, refining predictions and decision-making in uncertain environments.
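In the notation used throughout this article, Bayes' theorem can be written as follows. Treating A as the hypothesis and B as the observed evidence is a labeling choice made here for illustration; the second identity is the law of total probability, which expands the denominator.

```latex
P(A \mid B) = \frac{P(B \mid A)\, P(A)}{P(B)},
\qquad
P(B) = P(B \mid A)\, P(A) + P(B \mid \neg A)\, P(\neg A)
```

Here P(A) is the prior, P(B | A) is the likelihood, and P(A | B) is the posterior.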

Role of Prior Probability in Bayesian Analysis

Prior probability represents the initial belief about an event before any new data is observed, serving as a fundamental input in Bayesian analysis. It quantifies the baseline likelihood of hypotheses and influences the posterior probability by weighting new evidence via Bayes' theorem. This integration allows Bayesian analysis to update beliefs systematically, balancing existing knowledge with observed data to improve decision-making accuracy.
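To make the prior's influence concrete, the sketch below holds the evidence fixed and varies only the prior. The function name `posterior` and all numeric values are hypothetical, chosen for illustration.

```python
from fractions import Fraction


def posterior(prior, p_e_given_h, p_e_given_not_h):
    """P(H | E) from Bayes' theorem for a binary hypothesis H."""
    numerator = p_e_given_h * prior
    evidence = numerator + p_e_given_not_h * (1 - prior)
    return numerator / evidence


# Same evidence strength in both cases (illustrative values):
# the evidence is 9 times more likely when H is true than when it is false.
p_e_given_h = Fraction(9, 10)
p_e_given_not_h = Fraction(1, 10)

even_prior = posterior(Fraction(1, 2), p_e_given_h, p_e_given_not_h)
skeptical_prior = posterior(Fraction(1, 100), p_e_given_h, p_e_given_not_h)
print(even_prior, skeptical_prior)
```

With an even prior of 1/2 the posterior is 9/10, but with a skeptical prior of 1/100 the same evidence yields only 1/12. The evidence shifts both beliefs upward, yet where the belief ends up depends heavily on where it started.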

Common Misconceptions and Pitfalls

Conditional probability often gets confused with prior probability, leading to significant misunderstandings in probabilistic reasoning and decision-making. A common misconception is treating the prior probability, which represents initial beliefs before new evidence, as if it already accounts for observed data, whereas conditional probability explicitly incorporates new information to update beliefs. Misinterpreting these probabilities can cause errors such as ignoring base rates or assuming independence when dependencies exist, skewing the outcomes of Bayesian inference and predictive modeling.
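One way to check for these pitfalls is to work from an explicit joint distribution. The joint probabilities below are invented for illustration; the sketch shows that P(A|B) generally differs from both P(A) and P(B|A), and that independence is something to test, not assume.

```python
from fractions import Fraction as F

# Hypothetical joint distribution over (A occurs, B occurs); sums to 1.
joint = {
    (True, True): F(3, 20),
    (True, False): F(1, 20),
    (False, True): F(5, 20),
    (False, False): F(11, 20),
}

# Marginals, obtained by summing the joint over the other event.
p_a = sum(p for (a, _), p in joint.items() if a)
p_b = sum(p for (_, b), p in joint.items() if b)
p_ab = joint[(True, True)]

p_a_given_b = p_ab / p_b  # conditional probability of A given B
p_b_given_a = p_ab / p_a  # the reverse conditional, a different quantity

# A and B are independent only if P(A and B) equals P(A) * P(B).
independent = p_ab == p_a * p_b

print(p_a, p_a_given_b, p_b_given_a, independent)
```

In this example the prior P(A) is 1/5, P(A|B) is 3/8, and P(B|A) is 3/4. Substituting any one of these for another, or assuming independence (which fails here), would give a wrong answer.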

Summary and Practical Implications

Conditional probability measures the likelihood of an event given that another event has occurred, while prior probability represents the initial likelihood of an event before any additional information is known. Understanding the difference is crucial for accurate decision-making in fields like medical diagnosis, where prior probabilities reflect disease prevalence and conditional probabilities account for test results. Practical applications include Bayesian inference, which updates prior beliefs based on new evidence, improving predictions and risk assessments.
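The medical-diagnosis case can be sketched as repeated updating, where the posterior after one test becomes the prior for the next. The prevalence, sensitivity, and specificity below are hypothetical numbers, and the second update assumes the two test results are conditionally independent given the patient's true status.

```python
from fractions import Fraction


def update(prior, sensitivity, specificity):
    """Posterior probability of disease after one positive test result."""
    true_positive = sensitivity * prior
    false_positive = (1 - specificity) * (1 - prior)
    return true_positive / (true_positive + false_positive)


# Hypothetical values for illustration only.
prevalence = Fraction(1, 100)    # prior: 1% of the population has the disease
sensitivity = Fraction(95, 100)  # P(positive | disease)
specificity = Fraction(90, 100)  # P(negative | no disease)

after_one = update(prevalence, sensitivity, specificity)
# The first posterior serves as the prior for the second positive test
# (assumes the two results are conditionally independent).
after_two = update(after_one, sensitivity, specificity)

print(float(after_one), float(after_two))
```

Under these assumptions, one positive result raises the probability of disease from 1% to about 8.8% (19/217), and a second positive raises it to about 47.7% (361/757). The low prevalence keeps the first posterior small even though the test is fairly accurate.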


libterm.com
