In everyday usage, likelihood describes how probable an outcome is given known data or conditions. In statistics it has a narrower meaning: the probability of the observed data under a specific hypothesis or parameter value. In both senses it plays a central role in statistics and decision-making, guiding predictions in fields such as finance, science, and everyday problem-solving.

Table of Comparison

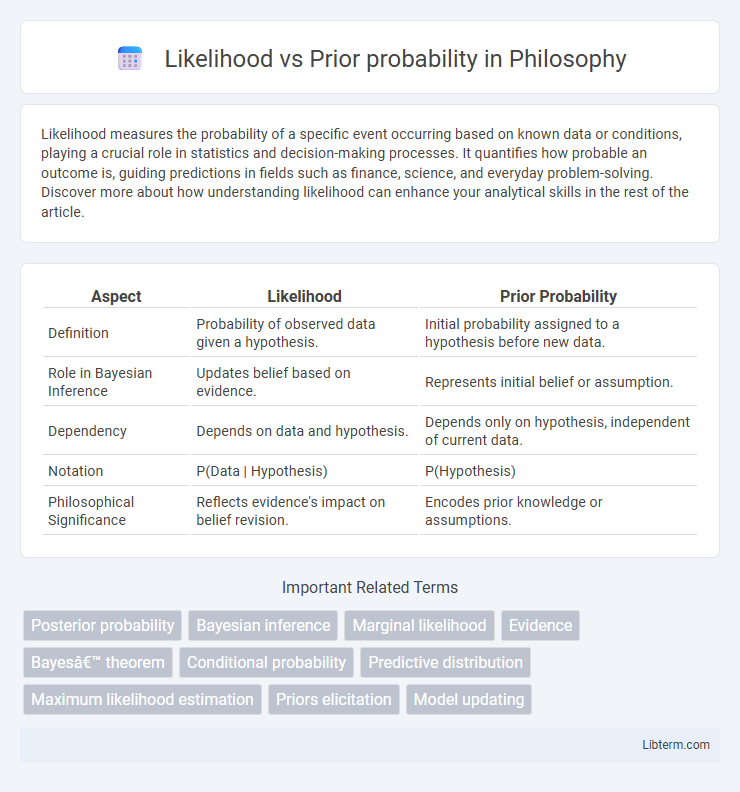

| Aspect | Likelihood | Prior Probability |
|---|---|---|
| Definition | Probability of observed data given a hypothesis. | Initial probability assigned to a hypothesis before new data. |
| Role in Bayesian Inference | Updates belief based on evidence. | Represents initial belief or assumption. |
| Dependency | Depends on data and hypothesis. | Depends only on hypothesis, independent of current data. |
| Notation | P(Data \| Hypothesis) | P(Hypothesis) |
| Philosophical Significance | Reflects evidence's impact on belief revision. | Encodes prior knowledge or assumptions. |

Understanding Likelihood and Prior Probability

Likelihood measures how probable the observed data is given a specific hypothesis or parameter, playing a crucial role in updating beliefs in Bayesian inference. Prior probability represents the initial degree of belief in a hypothesis before new data is considered, reflecting existing knowledge or assumptions. Understanding their distinct roles is essential for correctly applying Bayesian methods to statistical modeling and decision-making.

Defining Likelihood in Statistics

Likelihood in statistics quantifies the probability of observing the given data under a specific model or parameter value, serving as a fundamental concept in parameter estimation. Unlike prior probability, which represents initial beliefs before data observation, likelihood functions measure how plausible different parameter values are based on the observed evidence. This distinction enables methods like maximum likelihood estimation to identify parameters that maximize data plausibility within statistical models.
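
As a rough illustration of maximum likelihood estimation, the sketch below searches a grid of candidate success probabilities for the one that makes a set of coin-flip results most plausible. The data (7 successes in 10 trials), the Bernoulli model, and the function names are assumptions chosen for the example, not part of the discussion above.

```python
import math

def bernoulli_log_likelihood(p, successes, trials):
    """Log-likelihood of success probability p given the observed counts."""
    failures = trials - successes
    return successes * math.log(p) + failures * math.log(1.0 - p)

def grid_mle(successes, trials, steps=999):
    """Return the grid value of p in (0, 1) with the highest log-likelihood."""
    # Candidate values 0.001, 0.002, ..., 0.999 when steps is 999.
    grid = [(i + 1) / (steps + 1) for i in range(steps)]
    return max(grid, key=lambda p: bernoulli_log_likelihood(p, successes, trials))

# Hypothetical data: 7 successes in 10 trials.
p_hat = grid_mle(successes=7, trials=10)
print(p_hat)
```

For this simple model the maximizer also has a closed form, the observed success rate `successes / trials`, so the grid search should land on the same value. The grid is used here only to make the idea of "trying parameter values and keeping the most plausible one" visible.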

What is Prior Probability?

Prior probability represents the initial belief or knowledge about an event's occurrence before new data is observed. It quantifies the expected frequency or probability of an outcome based on historical information or subjective judgment. Prior probability forms the foundation for Bayesian inference: it is the starting point that evidence, through the likelihood, updates into the posterior probability of each hypothesis.

Differences Between Likelihood and Prior Probability

Likelihood measures the probability of observing the given data under different parameter values, reflecting how well the model explains the data. Prior probability represents the initial beliefs about the parameters before seeing any data, drawn from previous knowledge or assumptions. The key difference is that likelihood depends on the observed data, while prior probability is independent of the current data and encodes existing knowledge.

Role of Likelihood in Bayesian Inference

Likelihood quantifies how probable the observed data is given specific parameter values, directly influencing the posterior distribution in Bayesian inference. Unlike prior probability, which encodes initial beliefs about parameters, likelihood carries the empirical evidence that updates those beliefs. The interplay between likelihood and prior determines the posterior, enabling refined parameter estimation and predictive modeling.
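
The relationship between the three quantities is usually written as Bayes' theorem. In the block below, H stands for the hypothesis and D for the data, matching the P(Data | Hypothesis) and P(Hypothesis) notation in the comparison table. The sum in the denominator assumes a finite set of competing hypotheses H_i, which is a simplification made for this illustration.

```latex
P(H \mid D) = \frac{P(D \mid H)\, P(H)}{P(D)},
\qquad
P(D) = \sum_{i} P(D \mid H_i)\, P(H_i)
```

Here P(D | H) is the likelihood, P(H) is the prior, and P(H | D) is the posterior. The denominator P(D) only rescales the result so that the posterior probabilities sum to 1.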

Importance of Prior Probability in Bayesian Analysis

Prior probability represents the initial belief about a parameter before observing data and fundamentally shapes Bayesian inference by influencing posterior estimates. It provides a baseline that integrates existing knowledge or assumptions, enabling more robust decision-making especially when data is scarce or noisy. The combination of prior probability with the likelihood function yields the posterior distribution, which refines uncertainty and improves prediction accuracy in Bayesian analysis.
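
To make the "scarce data" point concrete, here is a minimal sketch that assumes a Beta prior on a success rate with binomial data, a standard pairing in which the posterior mean has a simple closed form. The Beta(2, 8) prior and both data sets are hypothetical values picked for illustration.

```python
def beta_posterior_mean(alpha, beta, successes, failures):
    """Posterior mean of a success rate under a Beta(alpha, beta) prior."""
    return (alpha + successes) / (alpha + beta + successes + failures)

# Hypothetical prior: Beta(2, 8), whose mean is 2 / (2 + 8) = 0.2.
prior_alpha, prior_beta = 2.0, 8.0

# Both data sets have the same observed success rate of 0.75.
scarce = beta_posterior_mean(prior_alpha, prior_beta, successes=3, failures=1)
plentiful = beta_posterior_mean(prior_alpha, prior_beta, successes=300, failures=100)

print(f"scarce data:    {scarce:.3f}")     # 5 / 14, still pulled toward the prior
print(f"plentiful data: {plentiful:.3f}")  # 302 / 410, close to the observed rate
```

With only four observations the estimate stays much nearer the prior mean of 0.2, while with four hundred observations the data dominates and the estimate approaches 0.75.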

Examples Illustrating Likelihood vs Prior Probability

In a medical diagnosis context, the prior probability might represent the general prevalence of a disease in the population, such as a 1% chance of having a particular illness before any testing. The likelihood, on the other hand, is the probability of observing specific test results given a patient has or does not have the disease, like a 90% chance of a positive test if the disease is present. These examples clarify how prior probability sets the baseline belief, while likelihood measures how well new evidence supports different hypotheses.
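
The numbers in this example can be combined with Bayes' theorem, as in the sketch below. The 1% prevalence and 90% positive rate come from the example above. The 5% false-positive rate is an assumption added here, since the example does not state how often the test is positive in healthy patients.

```python
def posterior_disease_given_positive(prevalence, sensitivity, false_positive_rate):
    """P(disease | positive test) via Bayes' theorem for two hypotheses."""
    # Prior times likelihood for each hypothesis.
    positive_and_disease = sensitivity * prevalence
    positive_and_healthy = false_positive_rate * (1.0 - prevalence)
    # Normalize by the total probability of a positive test.
    return positive_and_disease / (positive_and_disease + positive_and_healthy)

posterior = posterior_disease_given_positive(
    prevalence=0.01,           # prior from the example above
    sensitivity=0.90,          # likelihood of a positive test if diseased
    false_positive_rate=0.05,  # assumed value, not given in the example
)
print(f"{posterior:.3f}")  # 0.154
```

Under these assumptions a positive result raises the probability of disease from 1% to roughly 15%, which shows how a low prior can keep the posterior modest even when the test is fairly accurate.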

How Likelihood and Prior Interact in Bayesian Updating

Likelihood measures the probability of observed data given a specific hypothesis, while prior probability represents initial beliefs about the hypothesis before seeing data. In Bayesian updating, the likelihood function modifies the prior probability to produce the posterior probability, reflecting updated beliefs after data observation. This interaction mathematically combines evidence and prior knowledge to refine predictions and decision-making under uncertainty.
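
The updating step can be sketched for a small set of competing hypotheses. The example below assumes two hypothetical coins, a fair one and one biased toward heads, with equal prior weight, and updates the belief after each flip. All names and numbers are illustrative.

```python
def update(prior, likelihoods):
    """One Bayesian update: multiply prior by likelihood, then normalize."""
    unnormalized = {h: prior[h] * likelihoods[h] for h in prior}
    evidence = sum(unnormalized.values())
    return {h: weight / evidence for h, weight in unnormalized.items()}

# Hypothetical hypotheses: probability of heads under each coin.
heads_prob = {"fair": 0.5, "biased": 0.8}

# Equal prior belief in both hypotheses before any flips.
belief = {"fair": 0.5, "biased": 0.5}

for flip in "HHTH":  # assumed sequence of observed flips
    likelihoods = {
        h: (p if flip == "H" else 1.0 - p) for h, p in heads_prob.items()
    }
    # The posterior after this flip becomes the prior for the next one.
    belief = update(belief, likelihoods)

print(belief)
```

Because each posterior is reused as the next prior, processing the flips one at a time gives the same final belief as a single update on all four flips together.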

Common Misconceptions About Likelihood and Priors

Likelihood is often mistaken for prior probability, but it represents the probability of observed data given a specific parameter, not the initial belief about that parameter. Priors express subjective or empirical knowledge before data is observed, whereas likelihood functions update this belief based on new evidence. A related misconception treats the likelihood function as a probability distribution over parameters. It is not: viewed as a function of the parameters with the data held fixed, it need not sum or integrate to 1, and only its relative values indicate how well different parameters explain the data.
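
A small numerical check can make this distinction tangible. The sketch below assumes a binomial model with 10 trials and 7 observed successes (hypothetical values). It compares summing the model over all possible data outcomes for a fixed parameter with integrating the likelihood over the parameter for fixed data.

```python
import math

def binomial_pmf(k, n, theta):
    """Probability of k successes in n trials with success rate theta."""
    return math.comb(n, k) * theta**k * (1.0 - theta) ** (n - k)

n, k_observed, theta_fixed = 10, 7, 0.6  # hypothetical values

# Fixed parameter, varying data: a proper distribution, so the total is 1.
total_over_data = sum(binomial_pmf(k, n, theta_fixed) for k in range(n + 1))

# Fixed data, varying parameter: the likelihood function.
# Midpoint-rule integral over theta in (0, 1).
steps = 10_000
area_over_theta = sum(
    binomial_pmf(k_observed, n, (i + 0.5) / steps) for i in range(steps)
) / steps

print(f"sum over data outcomes: {total_over_data:.4f}")
print(f"integral over theta:    {area_over_theta:.4f}")
```

The first total is 1, while the integral over the parameter comes out near 1/11 for this model, so the likelihood function alone cannot be read as a probability distribution over the parameter.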

Practical Applications of Likelihood and Prior Probability

Likelihood quantifies how well a statistical model explains observed data, commonly used in maximum likelihood estimation for parameter fitting in machine learning and bioinformatics. Prior probability represents initial beliefs about parameters before observing data, essential in Bayesian inference for decision-making under uncertainty in fields like medical diagnostics and autonomous systems. Combining likelihood and prior probability enables posterior distribution calculation, improving predictions and model robustness in real-world applications such as risk assessment and natural language processing.

libterm.com
