Parallel computing speeds up processing by dividing a task into smaller sub-tasks that run simultaneously on multiple processors or cores. For work that splits cleanly, this shortens run time for data analysis and other compute-heavy problems. This article compares parallel execution with asynchronous execution, which overlaps waiting rather than dividing computation, and explains when to use each.

Table of Comparison

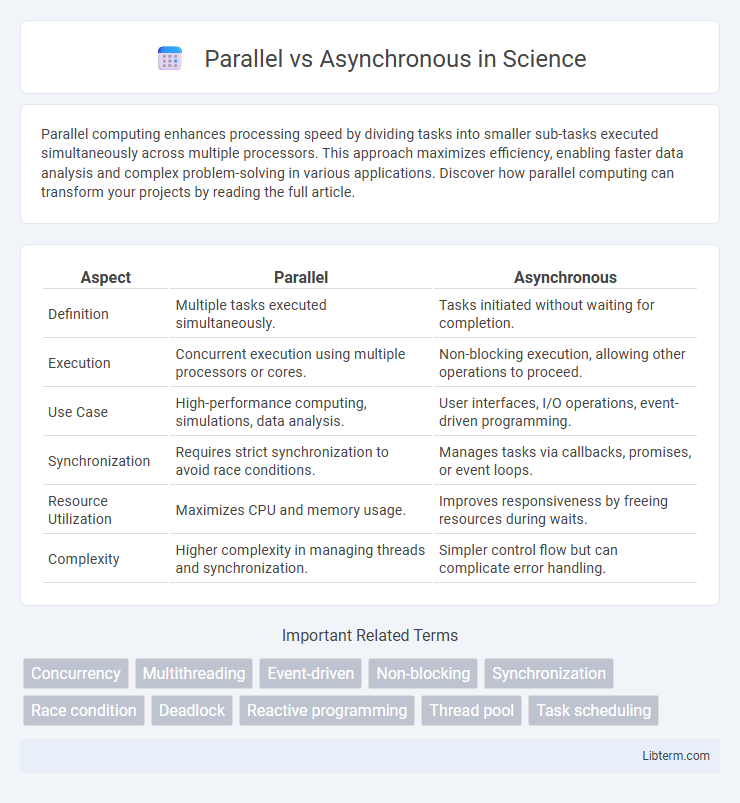

| Aspect | Parallel | Asynchronous |
|---|---|---|
| Definition | Multiple tasks execute at the same time. | Tasks are started without waiting for earlier ones to finish. |
| Execution | Simultaneous execution on multiple processors or cores. | Non-blocking execution; other operations proceed while a task waits. |
| Use case | High-performance computing, simulations, data analysis. | User interfaces, I/O operations, event-driven programming. |
| Synchronization | Requires careful synchronization of shared data to avoid race conditions. | Coordinates tasks via callbacks, promises, or event loops. |
| Resource utilization | Keeps multiple CPU cores busy to increase throughput. | Improves responsiveness by not tying up threads during waits. |
| Complexity | Harder to manage threads, processes, and synchronization. | Simpler control flow, but error handling can be harder to follow. |

Introduction to Parallel and Asynchronous Concepts

Parallel computing executes multiple tasks simultaneously by dividing a problem into subproblems that run on separate processors or cores, increasing computational speed. Asynchronous programming, in contrast, lets a task start an operation and continue without waiting for it to finish, which keeps applications responsive while I/O or user interactions are pending. The two are distinct: asynchronous code can run entirely on a single thread, and parallel code can be fully synchronous. Understanding both concepts helps developers choose the right model for performance and resource utilization in modern software.

Defining Parallel Processing

Parallel processing divides a computational task into smaller sub-tasks that execute simultaneously across multiple processors or cores, which can substantially reduce overall execution time when the sub-tasks are independent. It relies on hardware-level parallelism to speed up demanding applications such as scientific simulations, image processing, and large-scale data analysis. By contrast, asynchronous processing lets operations proceed without waiting for others to complete; it improves resource utilization but does not by itself shorten computation time, because it does not require simultaneous execution.
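As a minimal sketch of dividing a CPU-bound task into sub-tasks and combining the results, the Python example below uses the standard library's `concurrent.futures.ProcessPoolExecutor`. The function names are hypothetical and the workload (summing squares) is only a stand-in. It uses processes rather than threads because, in the standard CPython build, the global interpreter lock prevents threads from executing Python bytecode on several cores at once.

```python
from concurrent.futures import ProcessPoolExecutor


def sum_of_squares(bounds: tuple[int, int]) -> int:
    """CPU-bound sub-task: sum i*i over the half-open range [start, stop)."""
    start, stop = bounds
    return sum(i * i for i in range(start, stop))


def split_range(n: int, parts: int) -> list[tuple[int, int]]:
    """Divide [0, n) into at most `parts` contiguous, non-overlapping chunks."""
    step = max(1, -(-n // parts))  # ceiling division, so every value lands in a chunk
    return [(lo, min(lo + step, n)) for lo in range(0, n, step)]


def parallel_sum_of_squares(n: int, workers: int = 4) -> int:
    chunks = split_range(n, workers)
    # Each chunk runs in its own worker process, so chunks can execute on different cores at once.
    with ProcessPoolExecutor(max_workers=workers) as pool:
        # Combine step: the partial sums are independent, so adding them gives the full result.
        return sum(pool.map(sum_of_squares, chunks))


if __name__ == "__main__":
    print(parallel_sum_of_squares(1_000_000))
```

The speedup depends on the number of free cores and on the work per chunk being large enough to outweigh the cost of starting processes and passing data between them.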

Understanding Asynchronous Operations

Asynchronous operations let tasks run independently of the main program flow, improving responsiveness because they do not block the executing thread. Unlike parallel processing, which divides work across multiple processors to run simultaneously, asynchronous operations use callbacks, promises, or async/await to keep doing useful work while an operation's latency elapses. This approach is essential in web development and other I/O-bound workloads, where waiting time can be overlapped instead of holding a thread idle.
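A minimal `asyncio` sketch of non-blocking waits, assuming simulated I/O: `fake_request` is a hypothetical stand-in that uses `asyncio.sleep` in place of a real network call. Because each coroutine yields to the event loop while it waits, three 0.2-second waits overlap instead of adding up.

```python
import asyncio
import time


async def fake_request(name: str, delay: float) -> str:
    """Hypothetical network call; asyncio.sleep yields to the event loop while 'waiting'."""
    await asyncio.sleep(delay)
    return f"{name} done"


async def main() -> tuple[list[str], float]:
    start = time.perf_counter()
    # All three coroutines are scheduled together; none blocks the others while it waits.
    results = await asyncio.gather(
        fake_request("a", 0.2),
        fake_request("b", 0.2),
        fake_request("c", 0.2),
    )
    return results, time.perf_counter() - start


if __name__ == "__main__":
    results, elapsed = asyncio.run(main())
    print(results, f"{elapsed:.2f}s")  # typically about 0.2 s, not 0.6 s
```

Replacing `await asyncio.sleep(delay)` with the blocking `time.sleep(delay)` would freeze the event loop during each wait, and the total would grow to about 0.6 seconds.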

Key Differences Between Parallel and Asynchronous

Parallel execution runs multiple tasks at the same time on multiple processors or cores, using the available hardware to finish work faster. Asynchronous execution lets tasks start without waiting for others to complete, keeping an application responsive by handling operations in a non-blocking manner. The key difference: parallelism depends on multiple processing units executing simultaneously, whereas asynchrony is about scheduling tasks so that waiting does not block the main thread, which is possible even on a single core.
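To illustrate that asynchrony does not require parallelism, this sketch (hypothetical task names, simulated delays) runs two coroutines on one event loop and records the order of events and the thread each step ran on. The shorter wait finishes first even though it started second, and every step runs on the same thread.

```python
import asyncio
import threading


async def task(name: str, delay: float, log: list[tuple[str, int]]) -> None:
    log.append((f"{name} start", threading.get_ident()))
    await asyncio.sleep(delay)  # non-blocking wait: the event loop runs other tasks meanwhile
    log.append((f"{name} end", threading.get_ident()))


async def run_two_tasks() -> list[tuple[str, int]]:
    log: list[tuple[str, int]] = []
    await asyncio.gather(task("slow", 0.05, log), task("fast", 0.01, log))
    return log


if __name__ == "__main__":
    log = asyncio.run(run_two_tasks())
    print([event for event, _ in log])
    # ['slow start', 'fast start', 'fast end', 'slow end']
    print({tid for _, tid in log} == {threading.get_ident()})
    # True: every step ran on the main thread
```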

Use Cases for Parallel Processing

Parallel processing excels where many computations can run at once, such as large-scale scientific simulations, video rendering, and real-time data analysis in finance. It improves performance by dividing complex computations across multiple processors or cores, and it works best for computationally intensive tasks with minimal communication between sub-tasks. The automotive industry uses it in autonomous driving systems, and healthcare uses it in genome sequencing, to process very large data sets efficiently.
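Monte Carlo simulation is a typical example of work with minimal inter-task communication. In this hedged sketch, each worker process counts how many random points fall inside a quarter circle, and only one integer per worker is sent back. Seeding each worker with its index is a simplification for reproducibility, not a recommended scheme for independent random streams; the function names are hypothetical.

```python
import random
from concurrent.futures import ProcessPoolExecutor


def count_hits(args: tuple[int, int]) -> int:
    """Independent sub-task: count random points in the unit square inside the quarter circle."""
    seed, samples = args
    rng = random.Random(seed)  # per-worker generator, so processes share no state
    hits = 0
    for _ in range(samples):
        x, y = rng.random(), rng.random()
        if x * x + y * y <= 1.0:
            hits += 1
    return hits


def estimate_pi(samples_per_worker: int = 250_000, workers: int = 4) -> float:
    jobs = [(seed, samples_per_worker) for seed in range(workers)]
    with ProcessPoolExecutor(max_workers=workers) as pool:
        # The only communication is one integer returned per worker.
        total_hits = sum(pool.map(count_hits, jobs))
    # Fraction of hits approximates pi/4, the quarter circle's share of the unit square.
    return 4.0 * total_hits / (samples_per_worker * workers)


if __name__ == "__main__":
    print(estimate_pi())
```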

When to Choose Asynchronous Approach

Choose an asynchronous approach for I/O-bound operations, such as network requests or file system access, so that waiting on them does not block other work. It suits applications that need high responsiveness, like user interfaces or real-time data processing, where blocking on a slow operation would make the application unresponsive. Asynchronous methods also improve scalability, because many pending operations can be in progress without dedicating a thread to each.
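A common pattern for many network requests is to issue them asynchronously while capping how many are in flight. The sketch below assumes hypothetical URLs and simulates each request with `asyncio.sleep`; `asyncio.Semaphore` limits concurrency, and the `stats` dictionary exists only to show the cap being respected.

```python
import asyncio


async def fetch(url: str, limit: asyncio.Semaphore, stats: dict[str, int]) -> str:
    """Hypothetical network request, simulated with asyncio.sleep."""
    async with limit:  # at most `max_concurrency` requests are in flight at once
        stats["in_flight"] += 1
        stats["peak"] = max(stats["peak"], stats["in_flight"])
        await asyncio.sleep(0.05)  # placeholder for real I/O
        stats["in_flight"] -= 1
        return f"response from {url}"


async def fetch_all(urls: list[str], max_concurrency: int = 3) -> tuple[list[str], int]:
    limit = asyncio.Semaphore(max_concurrency)
    stats = {"in_flight": 0, "peak": 0}
    responses = await asyncio.gather(*(fetch(u, limit, stats) for u in urls))
    return responses, stats["peak"]


if __name__ == "__main__":
    urls = [f"https://example.com/{i}" for i in range(10)]
    responses, peak = asyncio.run(fetch_all(urls))
    print(len(responses), "responses, peak concurrency", peak)
```

The unsynchronized updates to `stats` are safe here only because all coroutines run on a single event-loop thread and no `await` occurs between reading and writing a counter.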

Performance Comparison: Speed and Efficiency

Parallel processing executes multiple tasks simultaneously by dividing workloads across multiple cores or processors, which can substantially reduce execution time for large data sets. Asynchronous execution improves efficiency by letting tasks proceed without waiting for others to complete, reducing idle time, especially in I/O-bound operations. In short, parallelism pays off for CPU-intensive work by running computations on several cores at once, while asynchrony is more effective at hiding latency and preserving responsiveness in tasks that wait on external resources.
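The speed gains from parallelism can be estimated with Amdahl's law, a standard bound that the article does not itself cite: if a fraction p of the runtime can be parallelized across n workers, the speedup is at most S(n) = 1 / ((1 - p) + p / n). A small Python helper (hypothetical name) makes the arithmetic concrete.

```python
def amdahl_speedup(parallel_fraction: float, workers: int) -> float:
    """Upper bound on speedup when only `parallel_fraction` of the runtime splits across `workers`."""
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must be between 0 and 1")
    if workers < 1:
        raise ValueError("workers must be at least 1")
    serial_fraction = 1.0 - parallel_fraction  # this part always runs on one core
    return 1.0 / (serial_fraction + parallel_fraction / workers)


if __name__ == "__main__":
    for n in (2, 4, 8, 64):
        print(n, round(amdahl_speedup(0.9, n), 2))
```

With p = 0.9, four workers give at most about 3.08x and 64 workers about 8.77x; the serial 10% caps the speedup below 10x regardless of core count. Asynchronous execution is not described by this formula, since its benefit comes from overlapping waits rather than splitting computation.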

Challenges in Implementing Parallel and Asynchronous Models

Implementing parallel and asynchronous models presents challenges such as managing data consistency, avoiding race conditions, and ensuring thread safety in concurrent environments. Debugging and testing become complex due to non-deterministic execution orders and potential deadlocks or livelocks. Efficient resource allocation and balancing workloads to minimize latency and maximize throughput require careful design and optimization to prevent performance bottlenecks.
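A race condition arises when threads perform read-modify-write operations on shared data without coordination. This Python sketch, with hypothetical class and function names, guards a shared counter with `threading.Lock` so that concurrent increments are never lost.

```python
import threading


class Counter:
    """Shared mutable state guarded by a lock."""

    def __init__(self) -> None:
        self.value = 0
        self._lock = threading.Lock()

    def increment(self) -> None:
        # `self.value += 1` is a read-modify-write; without the lock, two threads
        # can read the same old value and one of the updates is lost.
        with self._lock:
            self.value += 1


def hammer(counter: Counter, times: int) -> None:
    for _ in range(times):
        counter.increment()


def run(threads: int = 8, times: int = 10_000) -> int:
    counter = Counter()
    workers = [threading.Thread(target=hammer, args=(counter, times)) for _ in range(threads)]
    for t in workers:
        t.start()
    for t in workers:
        t.join()  # wait for every thread before reading the result
    return counter.value


if __name__ == "__main__":
    print(run())
```

Removing the `with self._lock:` line may or may not lose updates on any particular run, which is exactly the non-determinism that makes such bugs hard to reproduce and test.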

Best Practices for Developers

For parallel and asynchronous programming, manage thread safety and resource sharing carefully to avoid race conditions and deadlocks. Use established scheduling libraries suited to the workload, such as Java's ForkJoinPool for CPU-bound divide-and-conquer tasks or Python's asyncio for I/O-bound operations, to keep applications responsive. Monitor performance metrics and handle exceptions explicitly to keep concurrent systems stable and scalable.
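For the exception-handling advice, here is a minimal `asyncio` sketch in which the `job` coroutine and its failure cases are hypothetical. `asyncio.wait_for` bounds how long each task may take, and `asyncio.gather(..., return_exceptions=True)` collects failures alongside successful results instead of raising only the first error to the caller.

```python
import asyncio


async def job(n: int) -> int:
    """Hypothetical unit of async work: n == 3 fails and n == 4 hangs, to show both failure modes."""
    if n == 3:
        raise ValueError(f"job {n} failed")
    if n == 4:
        await asyncio.sleep(10)  # simulates a stuck dependency
    else:
        await asyncio.sleep(0.01)
    return n * 10


async def run_jobs(timeout: float = 0.5) -> list[object]:
    # wait_for cancels a job that exceeds `timeout` and raises asyncio.TimeoutError instead.
    guarded = [asyncio.wait_for(job(n), timeout) for n in range(5)]
    # return_exceptions=True returns failures as values, so successful results are not lost.
    return await asyncio.gather(*guarded, return_exceptions=True)


if __name__ == "__main__":
    for outcome in asyncio.run(run_jobs()):
        print(repr(outcome))
```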

Conclusion: Choosing the Right Approach

Choosing between parallel and asynchronous approaches depends largely on task requirements and system constraints. Parallel processing excels in CPU-intensive operations by dividing workloads across multiple processors, enhancing throughput and reducing execution time. Asynchronous programming suits I/O-bound tasks, enabling efficient resource utilization and maintaining application responsiveness without blocking threads.
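The two approaches can also be combined. This sketch assumes a hypothetical pipeline in which inputs arrive via I/O (simulated with `asyncio.sleep`) and each input then needs CPU-bound processing: the waits overlap on the `asyncio` event loop, while `loop.run_in_executor` hands the computation to a `ProcessPoolExecutor` so it runs in parallel without blocking the loop.

```python
import asyncio
from concurrent.futures import ProcessPoolExecutor


def crunch(n: int) -> int:
    """CPU-bound work; runs in a worker process so it does not stall the event loop."""
    return sum(i * i for i in range(n))


async def fetch_input(delay: float) -> int:
    """Hypothetical I/O step (e.g. a network read), simulated with asyncio.sleep."""
    await asyncio.sleep(delay)
    return 100_000


async def pipeline(items: int = 4) -> list[int]:
    loop = asyncio.get_running_loop()
    with ProcessPoolExecutor() as pool:
        # Asynchronous: all I/O waits overlap on the event loop.
        sizes = await asyncio.gather(*(fetch_input(0.05) for _ in range(items)))
        # Parallel: CPU-bound work is spread across worker processes.
        return await asyncio.gather(*(loop.run_in_executor(pool, crunch, n) for n in sizes))


if __name__ == "__main__":
    print(asyncio.run(pipeline()))
```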

libterm.com