Marginal probability measures the likelihood of an event regardless of the outcomes of other variables and is a foundational concept in probability theory and statistics. It gives the overall chance of an event by summing or integrating the joint probabilities over all possible values of the other variables. Explore the rest of this article to deepen your grasp of marginal probability and its applications in data analysis.

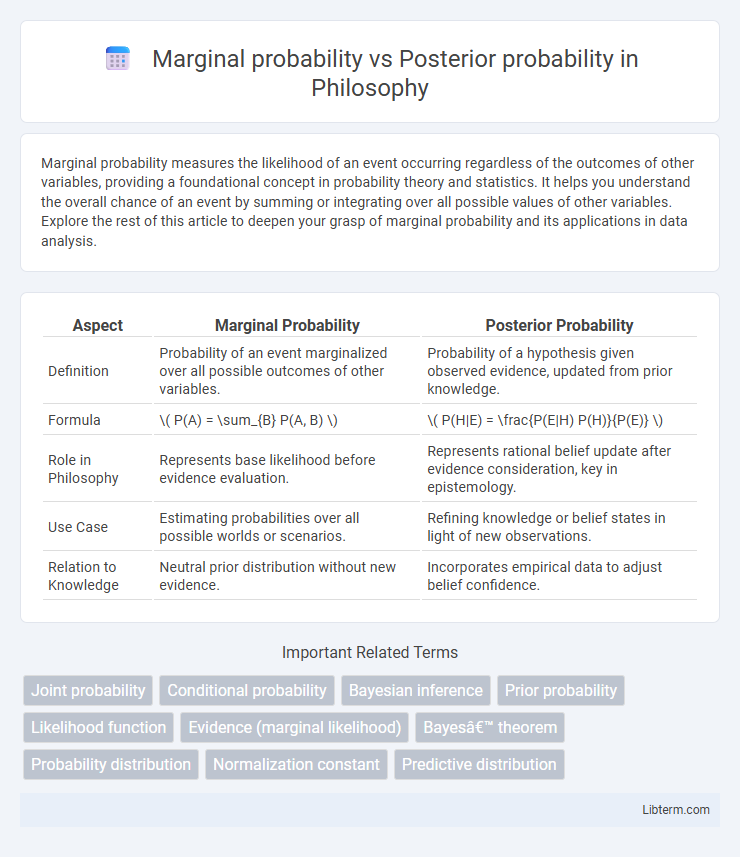

Table of Comparison

| Aspect | Marginal Probability | Posterior Probability |
|---|---|---|
| Definition | Probability of an event marginalized over all possible outcomes of other variables. | Probability of a hypothesis given observed evidence, updated from prior knowledge. |
| Formula | \( P(A) = \sum_{B} P(A, B) \) | \( P(H \mid E) = \frac{P(E \mid H)\, P(H)}{P(E)} \) |
| Role in Philosophy | Represents the base probability before any evidence is evaluated. | Represents a rational belief update after evidence is considered; key in epistemology. |
| Use Case | Estimating probabilities over all possible worlds or scenarios. | Refining knowledge or belief states in light of new observations. |
| Relation to Knowledge | Unconditional; does not incorporate new evidence. | Incorporates empirical data to adjust belief confidence. |

Introduction to Probability in Statistics

Marginal probability refers to the likelihood of an event occurring regardless of the outcomes of other variables, calculated by summing or integrating over the joint probabilities. Posterior probability is the updated probability of an event given new evidence or data, derived using Bayes' theorem by combining prior probability with the likelihood of the observed data. Both concepts are fundamental in statistics for interpreting uncertain information and making probabilistic inferences from data.

Understanding Marginal Probability

Marginal probability represents the likelihood of an event regardless of the values of other variables. It is calculated by summing or integrating the joint probabilities over all possible outcomes of those variables. It is a building block for conditional and posterior probabilities. A conditional probability divides a joint probability by a marginal, \( P(A \mid B) = P(A, B) / P(B) \), and the marginal probability of the evidence is the denominator of Bayes' theorem. Understanding marginal probability is therefore essential for interpreting joint distributions and for computing posterior probabilities.
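To make the summation concrete, here is a minimal Python sketch that stores a small joint distribution as a dictionary and marginalizes it. It then divides a joint entry by a marginal to get a conditional probability. The weather/traffic variables and their probabilities are hypothetical. `marginal` and `conditional` are illustrative helper names, not a library API. Exact `Fraction` arithmetic keeps the results free of rounding error.

```python
from collections import defaultdict
from fractions import Fraction

# Hypothetical joint distribution P(Weather, Traffic); the four entries sum to 1.
joint = {
    ("rain", "heavy"): Fraction(3, 10),
    ("rain", "light"): Fraction(1, 10),
    ("dry", "heavy"): Fraction(2, 10),
    ("dry", "light"): Fraction(4, 10),
}

def marginal(joint, axis):
    """Marginal of the variable at position `axis`: sum the joint over the other variable."""
    totals = defaultdict(Fraction)  # Fraction() starts each total at 0
    for outcome, p in joint.items():
        totals[outcome[axis]] += p
    return dict(totals)

def conditional(joint, weather, traffic):
    """P(Traffic = traffic | Weather = weather) = P(weather, traffic) / P(weather)."""
    return joint[(weather, traffic)] / marginal(joint, 0)[weather]

print(marginal(joint, 0))                   # {'rain': Fraction(2, 5), 'dry': Fraction(3, 5)}
print(marginal(joint, 1))                   # {'heavy': Fraction(1, 2), 'light': Fraction(1, 2)}
print(conditional(joint, "rain", "heavy"))  # 3/4
```

Each marginal sums to one, as it must, because every joint entry is counted exactly once.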

Understanding Posterior Probability

Posterior probability quantifies the probability of a hypothesis given observed data, updating prior beliefs with evidence through Bayes' theorem. It contrasts with marginal probability, which gives the overall probability of an event by summing over all possible outcomes of the other variables, without conditioning on a specific hypothesis. Understanding posterior probability is essential in Bayesian inference, where decisions rest on probabilities that have been updated in light of the data.

Mathematical Definitions and Notation

Marginal probability, denoted \( P(X) \), is the probability of an event \( X \) irrespective of other variables. It is typically obtained by summing or integrating over joint probabilities: \( P(X) = \sum_{Y} P(X, Y) \) for discrete variables, or \( P(X) = \int P(X, Y)\, dY \) for continuous ones. Posterior probability, written \( P(\theta \mid X) \), represents the updated probability of a parameter \( \theta \) given observed data \( X \). It is computed using Bayes' theorem: \( P(\theta \mid X) = \frac{P(X \mid \theta)\, P(\theta)}{P(X)} \). Here \( P(X) = \int P(X \mid \theta)\, P(\theta)\, d\theta \) is the marginal likelihood, which becomes a sum when \( \theta \) is discrete. Because \( P(X) \) does not depend on \( \theta \), it acts as the normalization constant in Bayesian inference. It ensures that the posterior probability sums or integrates to one over the parameter space.
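The normalization role of the marginal likelihood can be checked numerically. The Python sketch below uses a grid approximation, a simple numerical technique chosen here for illustration rather than one the article prescribes. It applies the approximation to a hypothetical coin with unknown heads-probability \( \theta \). The data (7 heads in 10 flips), the binomial model, and the uniform prior over grid points are all assumptions. The sketch computes the evidence as the sum of likelihood times prior, then divides by it.

```python
from math import comb

# Assumption: hypothetical data of 7 heads in 10 flips, binomial likelihood.
heads, flips = 7, 10

# Discretize theta on a grid and place a uniform prior over the grid points.
grid = [i / 100 for i in range(101)]
prior = [1 / len(grid)] * len(grid)

# Likelihood P(X | theta) at each grid point.
likelihood = [comb(flips, heads) * t**heads * (1 - t) ** (flips - heads) for t in grid]

# Marginal likelihood P(X): sum of likelihood x prior over the parameter grid.
evidence = sum(lik * p for lik, p in zip(likelihood, prior))

# Posterior P(theta | X) = P(X | theta) P(theta) / P(X).
posterior = [lik * p / evidence for lik, p in zip(likelihood, prior)]

# Grid point with the highest posterior probability.
mode = grid[max(range(len(grid)), key=posterior.__getitem__)]
print(round(sum(posterior), 10), mode)  # 1.0 0.7
```

Dividing by `evidence` is what makes the posterior values sum to one. A finer grid brings the sum closer to the integral form of \( P(X) \).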

Key Differences: Marginal vs Posterior Probability

Marginal probability represents the likelihood of an event without conditioning on the values of other variables. It is calculated by summing or integrating the joint probabilities over all of their possible outcomes. Posterior probability, central to Bayesian inference, updates the probability of a hypothesis based on observed evidence using Bayes' theorem. The key difference is that marginal probability is unconditional, whereas posterior probability is conditioned on new data. That conditioning makes the posterior crucial for decision-making under uncertainty.

The Role of Bayes’ Theorem

Marginal probability quantifies the likelihood of an event regardless of the values of other variables, by summing or integrating over all of their possible outcomes. Posterior probability is the updated probability of a hypothesis given new evidence, calculated directly using Bayes' Theorem. Bayes' Theorem links the prior probability and the likelihood to the posterior, with the marginal probability of the evidence serving as the normalizing denominator. This allows refined probabilistic inference in Bayesian statistics.
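The interplay of prior, likelihood, and marginal can be seen in a small discrete case. The Python sketch below applies Bayes' theorem to a finite set of hypotheses. The three-coin setup and the `bayes_posterior` helper are hypothetical illustrations, not a library API.

```python
from fractions import Fraction

def bayes_posterior(priors, likelihoods):
    """Return (P(H | E) for each hypothesis H, P(E)), given P(H) and P(E | H).

    The evidence P(E) is the marginal probability of the observation,
    obtained by the law of total probability: sum over H of P(E | H) P(H).
    """
    evidence = sum(likelihoods[h] * priors[h] for h in priors)
    if evidence == 0:
        raise ValueError("the evidence has zero probability under every hypothesis")
    posterior = {h: likelihoods[h] * priors[h] / evidence for h in priors}
    return posterior, evidence

# Hypothetical example: a bag holds three coins with heads-probabilities
# 1/4, 1/2 and 3/4; one coin is drawn at random and a single flip lands heads.
priors = {"p=1/4": Fraction(1, 3), "p=1/2": Fraction(1, 3), "p=3/4": Fraction(1, 3)}
likelihood_heads = {"p=1/4": Fraction(1, 4), "p=1/2": Fraction(1, 2), "p=3/4": Fraction(3, 4)}

posterior, evidence = bayes_posterior(priors, likelihood_heads)
print(evidence)   # 1/2
print(posterior)  # {'p=1/4': Fraction(1, 6), 'p=1/2': Fraction(1, 3), 'p=3/4': Fraction(1, 2)}
```

The three posteriors sum to one because each numerator is divided by the same marginal probability of the evidence.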

Examples Illustrating Marginal Probability

Marginal probability is the probability of an event without conditioning on other variables. For example, the probability of drawing a red card from a standard 52-card deck is 26/52, or 0.5: the sum over the two red suits, 13/52 + 13/52. In a disease-testing scenario, the marginal probability of testing positive is the sum of the probabilities of testing positive under each health condition, weighted by that condition's probability in the population. This differs from posterior probability, which updates probabilities in light of new evidence, such as the probability of having the disease given a positive test result.
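Both marginal calculations can be written out with exact fractions. In this Python sketch the deck is a standard 52-card deck. The test figures (5% prevalence, 90% sensitivity, 85% specificity) are assumed for illustration and borrowed from the diabetes example in the next section.

```python
from fractions import Fraction

# Red card: marginalize over the suit variable of a standard 52-card deck.
suit_color = {"hearts": "red", "diamonds": "red", "clubs": "black", "spades": "black"}
p_suit = {suit: Fraction(13, 52) for suit in suit_color}  # 13 cards per suit
p_red = sum(p for suit, p in p_suit.items() if suit_color[suit] == "red")
print(p_red)  # 1/2

# Positive test (assumed figures): law of total probability over health status.
prevalence = Fraction(5, 100)    # P(disease)
sensitivity = Fraction(90, 100)  # P(positive | disease)
specificity = Fraction(85, 100)  # P(negative | no disease)

p_positive = sensitivity * prevalence + (1 - specificity) * (1 - prevalence)
print(p_positive, float(p_positive))  # 3/16 0.1875
```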

Examples Illustrating Posterior Probability

Posterior probability quantifies the probability of a hypothesis given observed data. An example is the probability of having a disease after receiving a positive test result, where the prior probability reflects the disease prevalence. Suppose a medical test for diabetes has a sensitivity of 90% and a specificity of 85%, and the prevalence is 5%. The posterior probability of actually having diabetes after a positive test can then be computed with Bayes' theorem. The calculation shows how posterior probability updates prior beliefs with new evidence. By contrast, marginal probability is the overall probability of the observed data (here, a positive test) across all hypotheses.
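The diabetes example can be worked through in a few lines. This Python sketch plugs the stated figures into Bayes' theorem. `posterior_given_positive` is a hypothetical helper name. Exact fractions are used so that rounding does not affect the result.

```python
from fractions import Fraction

def posterior_given_positive(prevalence, sensitivity, specificity):
    """P(disease | positive test) via Bayes' theorem."""
    true_positive = sensitivity * prevalence               # P(+ | D) P(D)
    false_positive = (1 - specificity) * (1 - prevalence)  # P(+ | not D) P(not D)
    p_positive = true_positive + false_positive            # marginal P(+)
    return true_positive / p_positive

post = posterior_given_positive(Fraction(5, 100), Fraction(90, 100), Fraction(85, 100))
print(post, float(post))  # 6/25 0.24
```

The result, 6/25 or 24%, is far below the test's 90% sensitivity. With only 5% prevalence, false positives from the healthy 95% of the population (0.1425) outnumber true positives (0.045).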

Applications in Real-World Scenarios

Marginal probability plays a critical role in fields like risk assessment and epidemiology by providing the likelihood of an event occurring regardless of other variables, enabling baseline predictions in uncertain environments. Posterior probability is extensively applied in machine learning and medical diagnostics to update the probability of a hypothesis based on new evidence, improving decision-making accuracy in dynamic contexts. Bayesian networks leverage both marginal and posterior probabilities to model complex dependencies and refine predictions in artificial intelligence and finance.
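A Bayesian network can answer both kinds of query. The simplest exact method is inference by enumeration, sketched here in Python on a hypothetical three-node network in which Rain and Sprinkler both influence WetGrass. All probabilities are invented for illustration. The marginal P(WetGrass) sums the joint over every configuration. The posterior P(Rain | WetGrass) keeps only the configurations with rain and divides by that marginal.

```python
from fractions import Fraction
from itertools import product

# Hypothetical network: Rain -> WetGrass <- Sprinkler (Rain and Sprinkler independent).
p_rain = Fraction(1, 5)
p_sprinkler = Fraction(1, 10)
p_wet_given = {  # P(WetGrass = True | Rain, Sprinkler)
    (True, True): Fraction(99, 100),
    (True, False): Fraction(4, 5),
    (False, True): Fraction(9, 10),
    (False, False): Fraction(0),
}

def bernoulli(p, value):
    """P(X = value) for a Boolean root node with P(X = True) = p."""
    return p if value else 1 - p

def joint_wet(rain, sprinkler):
    """P(Rain = rain, Sprinkler = sprinkler, WetGrass = True), by the chain rule."""
    return bernoulli(p_rain, rain) * bernoulli(p_sprinkler, sprinkler) * p_wet_given[(rain, sprinkler)]

# Marginal: sum the joint over every configuration of the other variables.
p_wet = sum(joint_wet(r, s) for r, s in product([True, False], repeat=2))

# Posterior: keep the configurations with Rain = True, then normalize by the marginal.
p_rain_given_wet = sum(joint_wet(True, s) for s in [True, False]) / p_wet

print(p_wet, float(p_wet))  # 1179/5000 0.2358
print(p_rain_given_wet)     # 91/131
```

Observing wet grass raises the probability of rain from the prior 1/5 to 91/131, about 0.69. Enumeration grows exponentially with the number of variables, so practical tools rely on methods such as variable elimination or sampling. The sketch only shows where the marginal and the posterior enter.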

Conclusion: Choosing the Right Probability Approach

Marginal probability gives the likelihood of an event from the overall distribution, without conditioning on specific evidence, which makes it useful for general predictions. Posterior probability updates this likelihood by incorporating new evidence, yielding estimates conditioned on what has actually been observed. The choice between them depends on whether you need a broad, unconditional estimate or a probability conditioned on observed data.

Marginal probability Infographic

libterm.com
