Prior probability represents the initial assessment of an event's likelihood before new evidence is considered, forming the foundation of Bayesian inference. It helps quantify uncertainty based on existing knowledge, guiding decision-making processes in fields like statistics, machine learning, and risk analysis.

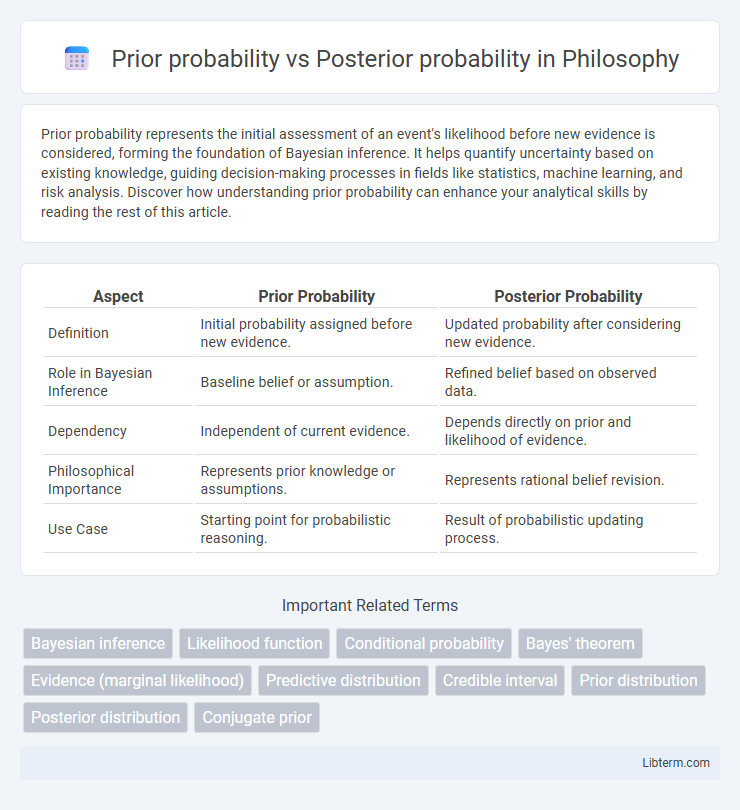

Table of Comparison

| Aspect | Prior Probability | Posterior Probability |
|---|---|---|
| Definition | Initial probability assigned before new evidence. | Updated probability after considering new evidence. |
| Role in Bayesian Inference | Baseline belief or assumption. | Refined belief based on observed data. |
| Dependency | Independent of current evidence. | Depends directly on prior and likelihood of evidence. |
| Philosophical Importance | Represents prior knowledge or assumptions. | Represents rational belief revision. |
| Use Case | Starting point for probabilistic reasoning. | Result of probabilistic updating process. |

Introduction to Probability in Statistics

Prior probability refers to the initial estimation of the likelihood of an event based on existing knowledge before new data is taken into account. Posterior probability is the revised probability of an event occurring after updating the prior probability with new evidence using Bayes' theorem. Understanding the distinction between prior and posterior probabilities is fundamental in statistical inference and decision-making under uncertainty.

Defining Prior Probability

Prior probability quantifies the initial belief about an event or hypothesis before new evidence is taken into account, often represented as P(H) in Bayesian statistics. It serves as the baseline probability derived from existing knowledge, historical data, or expert judgment. Accurately defining prior probability is essential for Bayesian inference, as it influences the calculation of posterior probability by combining prior information with observed evidence.

Understanding Posterior Probability

Posterior probability represents the likelihood of an event or hypothesis after considering new evidence, updated from the prior probability using Bayes' theorem. It combines prior knowledge with observed data to provide a refined probability estimate, crucial in fields like machine learning, medical diagnosis, and statistical inference. Understanding posterior probability enables more accurate decision-making by quantifying how evidence influences the certainty of a hypothesis.

Key Differences Between Prior and Posterior Probability

Prior probability represents the initial belief about an event's likelihood before observing any new data, based solely on existing knowledge or assumptions. Posterior probability updates this belief by incorporating new evidence through Bayes' theorem, reflecting the probability of the event after considering the data. The key difference lies in prior being the starting estimation and posterior being the refined probability adjusted for observed information.

Role of Bayes’ Theorem

Prior probability represents the initial estimate of an event's likelihood before considering new evidence. Posterior probability updates this estimate by integrating observed data through Bayes' theorem, which multiplies the prior by the likelihood of the evidence under the hypothesis and divides the result by the overall probability of that evidence. Bayes' theorem is therefore the formula that carries a belief from prior to posterior, which makes it central to statistical inference and decision-making under uncertainty.
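Written out, the theorem takes the following standard form. P(H) is the prior as defined earlier; the symbol E for the evidence and the expansion of P(E) over H and not-H are notation introduced here for illustration.

```latex
P(H \mid E) = \frac{P(E \mid H)\, P(H)}{P(E)},
\qquad
P(E) = P(E \mid H)\, P(H) + P(E \mid \neg H)\, P(\neg H)
```

Here P(H | E) is the posterior, P(E | H) is the likelihood of the evidence if the hypothesis is true, and P(E) normalizes the result so that it remains a valid probability.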

Examples Illustrating Prior vs Posterior Probabilities

Prior probability represents the initial assessment of an event's likelihood before new evidence is introduced, such as estimating a 30% chance of rain based on seasonal data. Posterior probability updates this estimate by incorporating new evidence: for example, the rain probability might rise to 70% after observing dark clouds and high humidity. In medical testing, the prior probability might be the general prevalence of a disease in a population, while the posterior probability is the chance that the disease is present after a positive test result, recalculated using Bayes' theorem.
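The medical-testing case can be sketched in a few lines of Python. The numbers below (1% prevalence, 95% sensitivity, 5% false-positive rate) are hypothetical values chosen for illustration, and the function name `posterior` is not taken from any library.

```python
def posterior(prior: float, sensitivity: float, false_positive_rate: float) -> float:
    """Return P(disease | positive test) using Bayes' theorem."""
    # Overall probability of a positive test, over sick and healthy people.
    evidence = sensitivity * prior + false_positive_rate * (1.0 - prior)
    return sensitivity * prior / evidence


# Hypothetical inputs: the prior is the disease prevalence in the population.
p_disease_given_positive = posterior(
    prior=0.01, sensitivity=0.95, false_positive_rate=0.05
)
print(f"{p_disease_given_positive:.3f}")  # prints 0.161
```

Under these assumed figures, a positive result raises the probability of disease from 1% to roughly 16%, which shows how strongly a low prior can temper even a fairly accurate test.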

Importance in Bayesian Inference

Prior probability represents the initial belief about an event before observing new data, serving as a foundational component in Bayesian inference. Posterior probability updates this belief by incorporating observed evidence through Bayes' theorem, providing a refined estimate. Because the posterior from one update can serve as the prior for the next, the interplay between the two supports continual learning and decision-making under uncertainty.
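One way to picture this repeated updating is a loop in which each posterior is fed back in as the next prior. The sketch below reuses the 30% rain prior from the earlier example; the likelihood pairs are made-up values, and it assumes the observations are conditionally independent given the hypothesis.

```python
def update(prior: float, p_evidence_if_true: float, p_evidence_if_false: float) -> float:
    """One Bayesian update for a yes/no hypothesis."""
    numerator = p_evidence_if_true * prior
    return numerator / (numerator + p_evidence_if_false * (1.0 - prior))


belief = 0.30  # prior probability of rain
# Hypothetical (P(evidence | rain), P(evidence | no rain)) pairs,
# e.g. dark clouds first, then high humidity.
observations = [(0.8, 0.4), (0.7, 0.3)]

history = [belief]
for p_if_rain, p_if_dry in observations:
    belief = update(belief, p_if_rain, p_if_dry)  # posterior becomes the next prior
    history.append(belief)

print([round(b, 3) for b in history])
```

With these assumed likelihoods the belief moves from 0.30 to about 0.46 and then to about 0.67, so each piece of evidence shifts the estimate without discarding what was learned before.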

Applications in Real-World Scenarios

Prior probability represents the initial belief about an event's likelihood before new evidence is considered, while posterior probability updates this belief after incorporating the new data using Bayes' theorem. In medical diagnostics, prior probabilities are based on disease prevalence, and posterior probabilities refine diagnosis accuracy by integrating test results. Similarly, in spam email filtering, prior probabilities estimate the baseline frequency of spam, and posterior probabilities adjust classification based on email content features.
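The spam-filtering case can be illustrated with a minimal naive Bayes sketch. The prior, the word probabilities, and the function name `spam_posterior` are all hypothetical; a real filter would estimate these values from labeled mail and handle unseen words with smoothing rather than skipping them.

```python
import math


def spam_posterior(words, prior_spam, p_word_spam, p_word_ham):
    """Return P(spam | words), treating words as independent given the class."""
    log_spam = math.log(prior_spam)
    log_ham = math.log(1.0 - prior_spam)
    for word in words:
        # Simplification: words missing from either table are ignored.
        if word in p_word_spam and word in p_word_ham:
            log_spam += math.log(p_word_spam[word])
            log_ham += math.log(p_word_ham[word])
    # Normalize in a numerically stable way.
    largest = max(log_spam, log_ham)
    spam_score = math.exp(log_spam - largest)
    ham_score = math.exp(log_ham - largest)
    return spam_score / (spam_score + ham_score)


# Hypothetical per-class word probabilities.
P_WORD_SPAM = {"free": 0.30, "meeting": 0.02}
P_WORD_HAM = {"free": 0.03, "meeting": 0.20}
PRIOR_SPAM = 0.4  # assumed baseline share of spam

p_free = spam_posterior(["free"], PRIOR_SPAM, P_WORD_SPAM, P_WORD_HAM)
p_meeting = spam_posterior(["meeting"], PRIOR_SPAM, P_WORD_SPAM, P_WORD_HAM)
print(round(p_free, 3), round(p_meeting, 3))
```

With no recognized words the function simply returns the prior, which mirrors the role the baseline spam frequency plays before any content is examined.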

Common Misconceptions and Challenges

Prior probability represents the initial belief about an event before new evidence is introduced, while posterior probability updates this belief by incorporating the new data using Bayes' theorem. A common misconception is that a prior is always arbitrary; in practice it is often grounded in historical data or well-established knowledge, and priors based on expert judgment are also legitimate when stated openly. Challenges include choosing priors that do not unduly bias the result, especially when data are limited and the prior dominates the posterior, and the computational difficulty of estimating the posterior for complex models.
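The effect of prior choice with limited data can be sketched using a Beta prior on a success rate, a standard conjugate setup that is introduced here purely for illustration. The two priors and the observed counts are hypothetical.

```python
def posterior_mean(a: float, b: float, successes: int, trials: int) -> float:
    """Posterior mean of a rate under a Beta(a, b) prior and binomial data."""
    return (a + successes) / (a + b + trials)


# Two hypothetical priors: one flat, one that expects a low rate.
priors = {"flat Beta(1, 1)": (1, 1), "sceptical Beta(2, 8)": (2, 8)}

gaps = {}
for successes, trials in [(7, 10), (700, 1000)]:
    means = {
        name: posterior_mean(a, b, successes, trials)
        for name, (a, b) in priors.items()
    }
    # Disagreement between the two posteriors for this amount of data.
    gaps[trials] = max(means.values()) - min(means.values())
    print(trials, {name: round(m, 3) for name, m in means.items()})
```

With 10 trials the two posterior means differ by more than 0.2, while with 1000 trials the difference falls below 0.01: the more data there is, the less the choice of prior matters.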

Summary and Key Takeaways

Prior probability represents the initial belief about an event before new evidence is considered, while posterior probability updates this belief after incorporating the new data through Bayes' theorem. The key takeaways are that the prior sets the baseline assumptions and the posterior refines them by combining prior knowledge with empirical evidence. These concepts are central to Bayesian inference and support better-informed decision-making under uncertainty.
