Bayes' theorem provides a mathematical framework for updating the probability of a hypothesis in light of new evidence, which makes it central to decision-making under uncertainty. It computes the conditional probability of the hypothesis given the evidence by combining prior knowledge with observed data, and it underpins predictions in fields like machine learning, medicine, and statistics. This article explains how Bayes' theorem relates to prior probability and how the two work together in Bayesian reasoning.

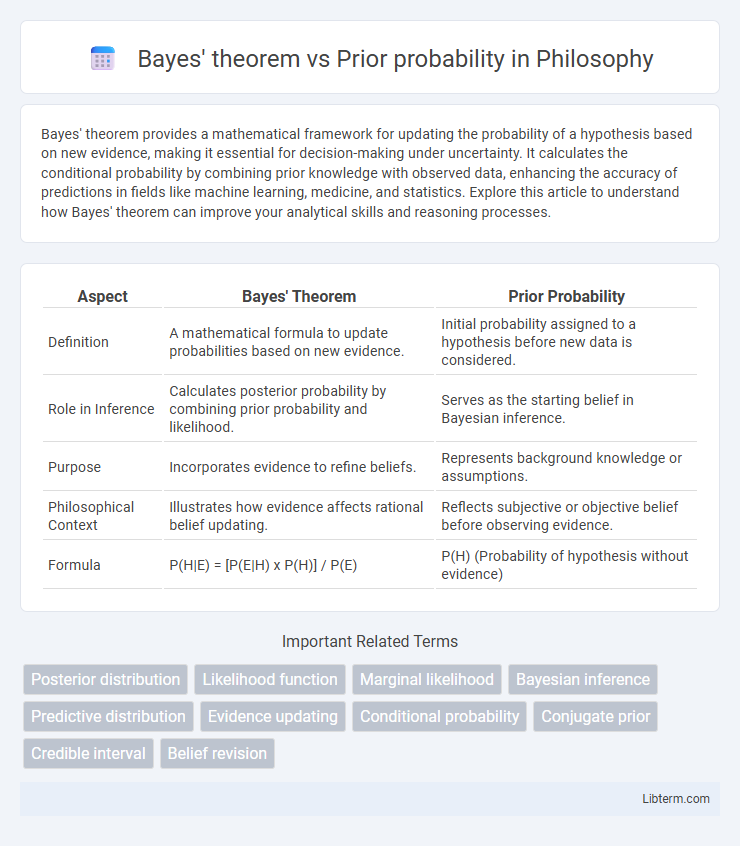

Table of Comparison

| Aspect | Bayes' Theorem | Prior Probability |
|---|---|---|
| Definition | A mathematical formula to update probabilities based on new evidence. | Initial probability assigned to a hypothesis before new data is considered. |
| Role in Inference | Calculates posterior probability by combining prior probability and likelihood. | Serves as the starting belief in Bayesian inference. |
| Purpose | Incorporates evidence to refine beliefs. | Represents background knowledge or assumptions. |
| Philosophical Context | Illustrates how evidence affects rational belief updating. | Reflects subjective or objective belief before observing evidence. |
| Formula | P(H\|E) = [P(E\|H) × P(H)] / P(E) | P(H) (Probability of hypothesis without evidence) |

Understanding Bayes' Theorem: A Brief Overview

Bayes' Theorem calculates the probability of an event based on prior knowledge of conditions related to the event, using prior probability as a key input. It updates the probability estimate for a hypothesis as new evidence or data becomes available, reflecting how initial beliefs are adjusted. Prior probability represents the initial assessment before considering current evidence, making Bayes' Theorem crucial for refining predictions in statistical inference and machine learning.

Defining Prior Probability in Bayesian Analysis

Prior probability in Bayesian analysis represents the initial belief about an event or parameter before new evidence is considered, serving as a fundamental component in the updating process. Bayes' theorem mathematically combines this prior probability with the likelihood of observed data to produce the posterior probability, reflecting updated beliefs. Accurately defining the prior is crucial for reliable Bayesian inference, especially in fields like machine learning, medical diagnosis, and risk assessment.

Core Differences Between Bayes’ Theorem and Prior Probability

Bayes' theorem is a formula in probability theory that updates the probability of a hypothesis in light of new evidence, whereas prior probability is the degree of belief in that hypothesis before the evidence is considered. The core difference is one of rule versus input: the theorem is the calculation that combines a prior with a likelihood to produce a posterior, and the prior is one of the quantities it operates on. A prior is fixed only for the duration of a single update; it may be subjective or based on objective data such as historical frequencies, and the posterior from one update can serve as the prior for the next.

Role of Prior Probability in the Bayesian Framework

Prior probability represents initial beliefs about an event or hypothesis before new evidence is considered in the Bayesian framework. It serves as the foundational probability that Bayes' theorem updates with observed data to yield the posterior probability. The role of prior probability is crucial for incorporating existing knowledge and guiding the inference process in Bayesian analysis.

How Bayes’ Theorem Utilizes Prior Probability

Bayes' theorem takes the prior probability as its starting point and updates the probability of an event in light of new evidence. The prior is the assessment of the event's chance before the current data is considered; the theorem multiplies it by the likelihood of that data and normalizes the result. Because each posterior can be reused as the next prior, probabilities can be adjusted repeatedly as information arrives, combining historical knowledge with fresh data in statistical inference and machine learning models.
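
The repeated updating described here can be sketched in a few lines of Python. This is a minimal illustration, not a reference implementation: the `update` helper, the two coin hypotheses, and their probabilities are all assumptions invented for the example.

```python
def update(prior, likelihoods, observation):
    """Apply one Bayesian update over a discrete set of hypotheses.

    prior:       dict mapping hypothesis -> P(H)
    likelihoods: dict mapping hypothesis -> {observation: P(E | H)}
    """
    # Numerator of Bayes' theorem for each hypothesis: P(E | H) * P(H).
    unnormalized = {h: prior[h] * likelihoods[h][observation] for h in prior}
    # Denominator P(E): sum of the numerators over all hypotheses.
    evidence = sum(unnormalized.values())
    return {h: p / evidence for h, p in unnormalized.items()}


# Hypothetical setup: a coin is either fair or biased toward heads.
prior = {"fair": 0.5, "biased": 0.5}
likelihoods = {
    "fair": {"H": 0.5, "T": 0.5},
    "biased": {"H": 0.8, "T": 0.2},
}

belief = prior
for flip in "HHTH":
    # The posterior from the previous flip becomes the prior for this one.
    belief = update(belief, likelihoods, flip)

print(belief)
```

After three heads and one tail the belief shifts toward the biased coin, but only moderately, because the single tail counts against it.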

Mathematical Representation: Bayes’ Theorem vs Prior Probability

Bayes' theorem expresses the posterior probability as P(A|B) = [P(B|A) * P(A)] / P(B), where A plays the role of the hypothesis H and B the role of the evidence E in the comparison table above. Prior probability, denoted P(A), is the probability of A before the current data is considered. The theorem quantifies how the prior P(A) is reweighted by the likelihood P(B|A), the probability of the observed data if A is true, and normalized by the marginal probability of the evidence, P(B), to yield the posterior.
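
In practice P(B) is rarely known directly. For a hypothesis that is either true or false, it can be expanded with the law of total probability. This is a standard identity rather than something stated in the article, and the symbol ¬A ("A is false") is introduced here for illustration:

```latex
P(A \mid B) = \frac{P(B \mid A)\, P(A)}{P(B)},
\qquad
P(B) = P(B \mid A)\, P(A) + P(B \mid \neg A)\, P(\neg A)
```

Written this way, the posterior depends only on the prior P(A) and the two likelihoods P(B|A) and P(B|¬A), since P(¬A) = 1 − P(A).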

Practical Applications: From Theory to Real-World Problems

Bayes' theorem enhances decision-making in practical applications by updating prior probability with new evidence, leading to more accurate predictions in fields such as medical diagnosis, spam filtering, and risk assessment. Prior probability represents initial beliefs before data observation, serving as a critical baseline that Bayesian inference refines through likelihood functions. This dynamic integration of prior knowledge and observed data enables robust solutions across real-world problems by quantifying uncertainty and improving inferential accuracy.
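
As one illustration of the spam-filtering case, the sketch below scores a message with a naive Bayes model, which is an assumption of this example: it treats words as independent given the class. The prior `P_SPAM` and the per-word likelihoods are hypothetical numbers, not estimates from real mail.

```python
import math

# Hypothetical values for illustration; a real filter would estimate them from labeled mail.
P_SPAM = 0.4
WORD_GIVEN_SPAM = {"free": 0.30, "meeting": 0.02, "winner": 0.20}
WORD_GIVEN_HAM = {"free": 0.05, "meeting": 0.25, "winner": 0.01}


def spam_probability(words, p_spam=P_SPAM):
    """Return P(spam | words) under the naive independence assumption."""
    # Start from the log prior for each class.
    log_spam = math.log(p_spam)
    log_ham = math.log(1.0 - p_spam)
    for word in words:
        if word in WORD_GIVEN_SPAM:  # skip words with no likelihood estimate
            log_spam += math.log(WORD_GIVEN_SPAM[word])
            log_ham += math.log(WORD_GIVEN_HAM[word])
    # Normalize in log space so long messages do not underflow.
    largest = max(log_spam, log_ham)
    spam = math.exp(log_spam - largest)
    ham = math.exp(log_ham - largest)
    return spam / (spam + ham)


print(spam_probability(["free", "winner"]))
print(spam_probability(["meeting"]))
```

With no recognized words the function returns the prior unchanged, which shows the prior acting as the baseline that evidence then moves.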

Challenges in Estimating Accurate Priors

Estimating accurate priors is difficult when data is limited or biased, because a poorly chosen prior can skew the posterior and make inferences less reliable, particularly when there is too little evidence to outweigh it. In many real-world scenarios priors are subjective or rest on incomplete information, which makes them hard to quantify precisely. Techniques such as hierarchical modeling and empirical Bayes methods, which estimate the prior from the data itself, can reduce this uncertainty.
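
One simple way to see how much a prior matters is a sensitivity check: run the same data through two different priors and compare the posteriors. The sketch below does this with a Beta prior on a success rate and binomial data. The conjugate Beta-binomial model, both priors, and the counts are assumptions chosen for illustration; it is neither a hierarchical model nor an empirical Bayes estimate.

```python
def posterior_mean(alpha, beta, successes, trials):
    """Posterior mean of a success rate under a Beta(alpha, beta) prior.

    With binomial data the posterior is Beta(alpha + successes,
    beta + trials - successes), whose mean is computed here.
    """
    return (alpha + successes) / (alpha + beta + trials)


# Two hypothetical priors: one uninformative, one expecting a low rate.
priors = {"uniform Beta(1, 1)": (1, 1), "skeptical Beta(2, 18)": (2, 18)}

for label, (a, b) in priors.items():
    small = posterior_mean(a, b, successes=3, trials=5)
    large = posterior_mean(a, b, successes=300, trials=500)
    print(f"{label}: n=5 -> {small:.3f}, n=500 -> {large:.3f}")
```

With 5 trials the two priors give posterior means of about 0.57 and 0.20. With 500 trials at the same observed rate they give about 0.60 and 0.58, so the choice of prior matters most when data is scarce.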

Case Studies: Bayes’ Theorem and Prior Probability in Action

Case studies demonstrate Bayes' theorem's power in updating prior probabilities with new evidence to improve decision-making accuracy. For example, in medical diagnostics, prior probability of a disease is adjusted by likelihood ratios from test results to calculate a more precise posterior probability. These applications show how Bayes' theorem refines initial assumptions (priors) by integrating real-world data, enhancing predictions in diverse fields like finance, machine learning, and epidemiology.
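
The diagnostic example can be worked through in the odds form of Bayes' theorem, where posterior odds equal prior odds multiplied by the likelihood ratio. The function name and the test characteristics below (1% prevalence, 95% sensitivity, 90% specificity) are hypothetical and not taken from any real assay.

```python
def posterior_from_likelihood_ratio(prior, sensitivity, specificity):
    """Return P(disease | positive test) via the odds form of Bayes' theorem."""
    # Convert the prior probability to odds.
    prior_odds = prior / (1.0 - prior)
    # Positive likelihood ratio: P(+ | disease) / P(+ | no disease).
    positive_lr = sensitivity / (1.0 - specificity)
    posterior_odds = prior_odds * positive_lr
    # Convert the posterior odds back to a probability.
    return posterior_odds / (1.0 + posterior_odds)


# Hypothetical inputs for illustration only.
p = posterior_from_likelihood_ratio(prior=0.01, sensitivity=0.95, specificity=0.90)
print(f"P(disease | positive) = {p:.4f}")
```

Under these assumed numbers a positive result raises the probability of disease from 1% to only about 8.8%, because false positives among the healthy majority outnumber true positives.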

Future Directions in Bayesian Inference and Prior Estimation

Future directions in Bayesian inference emphasize developing adaptive methods for prior estimation that dynamically incorporate data-driven insights and domain-specific knowledge. Advances in hierarchical models and machine learning integration aim to refine prior selection, enhancing model flexibility and predictive accuracy. Emerging research explores automated and robust prior specification techniques to facilitate scalable Bayesian approaches in complex, high-dimensional settings.

Bayes' theorem Infographic

libterm.com
