Posterior probability is the probability of an event or hypothesis after new evidence has been taken into account, calculated with Bayes' theorem. It is the mechanism by which predictions and decisions are updated as data are observed. The rest of the article compares it with prior probability and shows how evidence turns one into the other.

Table of Comparison

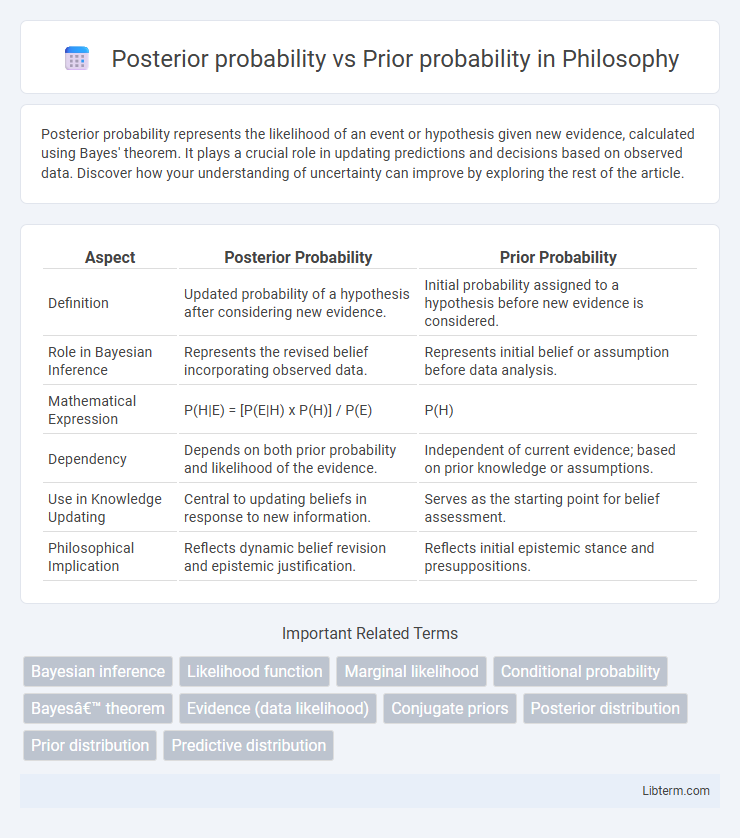

| Aspect | Posterior Probability | Prior Probability |
|---|---|---|
| Definition | Updated probability of a hypothesis after considering new evidence. | Initial probability assigned to a hypothesis before new evidence is considered. |
| Role in Bayesian Inference | Represents the revised belief incorporating observed data. | Represents the initial belief or assumption before data analysis. |
| Mathematical Expression | P(H \| E) = [P(E \| H) × P(H)] / P(E) | P(H) |
| Dependency | Depends on both the prior probability and the likelihood of the evidence. | Independent of current evidence; based on prior knowledge or assumptions. |
| Use in Knowledge Updating | Central to updating beliefs in response to new information. | Serves as the starting point for belief assessment. |
| Philosophical Implication | Reflects dynamic belief revision and epistemic justification. | Reflects the initial epistemic stance and presuppositions. |

Introduction to Probability in Statistics

Posterior probability is the updated probability of an event after new evidence has been considered, obtained with Bayes' theorem. Prior probability is the belief about the event before any new data are observed and serves as the baseline in Bayesian analysis. The relationship between the two is what allows probability theory to support decisions that change as information accumulates.

Defining Prior Probability

Prior probability quantifies the belief about an event before new evidence is introduced, based on existing knowledge, historical data, or explicit assumptions. It is one of the two inputs to Bayesian inference; the other is the likelihood of the observed data. It fixes the starting point that observed data then update. Choosing the prior carefully matters because the posterior inherits its influence, especially when data are scarce.

Understanding Posterior Probability

Posterior probability quantifies the probability of an event or hypothesis after new evidence has been considered, updating the initial belief expressed by the prior probability. It is computed with Bayes' theorem, which multiplies the prior by the likelihood of the observed data and divides by the overall probability of that data. Because it reflects all the information available so far, the posterior is the quantity Bayesian methods use for prediction and decision-making.
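The following is a minimal sketch that assumes a single hypothesis H, which is either true or false. It writes the update as a small Python function. The function name `posterior` and its parameters are illustrative and do not come from any library. The evidence P(E) is computed from the two conditional probabilities using the law of total probability.

```python
def posterior(prior_h: float, p_e_given_h: float, p_e_given_not_h: float) -> float:
    """Return P(H | E) for a binary hypothesis H (illustrative helper)."""
    if not 0.0 <= prior_h <= 1.0:
        raise ValueError("prior_h must lie in [0, 1]")
    # P(E) by the law of total probability over H and not-H.
    p_e = p_e_given_h * prior_h + p_e_given_not_h * (1.0 - prior_h)
    if p_e == 0.0:
        raise ValueError("P(E) is zero: the evidence is impossible under this model")
    # Bayes' theorem: P(H | E) = P(E | H) * P(H) / P(E)
    return p_e_given_h * prior_h / p_e


# Hypothetical numbers: prior 0.3, evidence 4x as likely under H as under not-H.
print(round(posterior(0.3, 0.8, 0.2), 4))  # 0.6316
```

With a prior of 0.3 and evidence that is four times as likely under H as under not-H, the posterior rises to about 0.63.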

Key Differences Between Prior and Posterior Probabilities

Prior probability represents the initial belief about an event before any new evidence is observed, based on existing knowledge or historical data. Posterior probability revises this belief by incorporating new evidence through Bayes' theorem. The key difference is conditioning. The prior does not depend on the current evidence. The posterior is conditioned on that evidence and changes whenever new data arrive.

The Role of Bayesian Inference

Bayesian inference combines the prior probability of a hypothesis with the likelihood of the observed data to compute its posterior probability. Repeating this step as evidence accumulates updates beliefs systematically. This is why the approach is used in machine learning, medical diagnosis, and decision-making under uncertainty more generally.
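The following sketch shows the combination step over a discrete set of hypotheses. It assumes three hypothetical coin biases with equal prior weight and 7 heads observed in 10 flips. Each prior weight is multiplied by the binomial likelihood of the data, and the results are normalized so they sum to one. The function `bayes_update` and all the numbers are illustrative only.

```python
from math import comb


def bayes_update(prior: dict[float, float], heads: int, flips: int) -> dict[float, float]:
    """Posterior over discrete coin biases after observing `heads` in `flips`."""
    # Unnormalized posterior: binomial likelihood P(data | p) times prior P(p).
    unnormalized = {
        p: comb(flips, heads) * p**heads * (1 - p) ** (flips - heads) * weight
        for p, weight in prior.items()
    }
    evidence = sum(unnormalized.values())  # P(data), the normalizing constant
    return {p: u / evidence for p, u in unnormalized.items()}


# Hypothetical hypotheses and data: three possible biases, 7 heads in 10 flips.
prior = {0.25: 1 / 3, 0.5: 1 / 3, 0.75: 1 / 3}
post = bayes_update(prior, heads=7, flips=10)
for p, prob in post.items():
    print(f"bias {p}: prior {prior[p]:.3f} -> posterior {prob:.3f}")
```

Most of the posterior mass moves to the bias 0.75, the hypothesis that best explains the data. The bias 0.25 becomes very unlikely.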

Real-World Examples: Prior vs Posterior

In real-world applications such as spam filtering, the prior probability is the initial probability that an email is spam, based on historical data. The posterior probability updates this after analyzing the content of a specific new message. Medical diagnosis uses the prevalence of a disease in a population as the prior probability. The posterior probability refines the probability of the diagnosis once the patient's symptoms and test results are evaluated. In machine learning, Bayesian models encode assumptions as prior probabilities and compute posterior probabilities that support more accurate decisions as more data become available.
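The following minimal sketch applies this to the medical-diagnosis case. It uses hypothetical numbers: 1% prevalence as the prior, 90% sensitivity, and 95% specificity. It shows how a test result turns the prevalence into a posterior. The function `disease_posterior` is an illustrative helper and is not part of any clinical library.

```python
def disease_posterior(prevalence: float, sensitivity: float, specificity: float,
                      test_positive: bool) -> float:
    """P(disease | test result); all inputs are illustrative assumptions."""
    if test_positive:
        p_result_given_d = sensitivity          # true positive rate
        p_result_given_not_d = 1 - specificity  # false positive rate
    else:
        p_result_given_d = 1 - sensitivity      # false negative rate
        p_result_given_not_d = specificity      # true negative rate
    joint_d = p_result_given_d * prevalence     # P(result and disease)
    evidence = joint_d + p_result_given_not_d * (1 - prevalence)  # P(result)
    return joint_d / evidence


# Hypothetical numbers: 1% prevalence, 90% sensitivity, 95% specificity.
print(f"{disease_posterior(0.01, 0.90, 0.95, True):.3f}")   # 0.154
print(f"{disease_posterior(0.01, 0.90, 0.95, False):.4f}")  # 0.0011
```

Even after a positive test, the posterior stays near 15% because the disease is rare. Most positive results come from the much larger healthy group.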

How Data Updates Affect Probabilities

Each time new data are observed, Bayesian inference multiplies the current prior probability by the likelihood of those data and normalizes the result to obtain the posterior probability. That posterior then serves as the prior for the next batch of data. Beliefs are therefore refined continuously as evidence accumulates, and predictions and decisions track the evolving information.
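One common way to implement this repeated updating is the conjugate Beta-Binomial model. It assumes the quantity of interest is a success probability with a Beta prior and that the data are binomially distributed. In this model each update simply adds the observed counts to the Beta parameters. The `BetaBelief` class and the batch counts below are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class BetaBelief:
    """Beta(alpha, beta) belief about an unknown success probability."""
    alpha: float
    beta: float

    def update(self, successes: int, failures: int) -> "BetaBelief":
        # Conjugacy: Beta prior + binomial likelihood -> Beta posterior.
        return BetaBelief(self.alpha + successes, self.beta + failures)

    @property
    def mean(self) -> float:
        return self.alpha / (self.alpha + self.beta)


belief = BetaBelief(1, 1)  # uniform prior
for s, f in [(3, 1), (2, 4), (6, 2)]:  # hypothetical batches of (successes, failures)
    belief = belief.update(s, f)  # the previous posterior acts as the new prior
    print(f"Beta({belief.alpha}, {belief.beta}), mean={belief.mean:.3f}")

# A single update with all the data gives the same posterior.
assert belief == BetaBelief(1, 1).update(11, 7)
```

The final assertion checks that processing the data in three batches gives the same posterior as processing it all at once. This property is what makes incremental updating safe in this model.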

Mathematical Formulation: Bayes’ Theorem

Bayes' theorem expresses the relationship between the posterior probability, the prior probability, and the likelihood as

$$
P(\theta \mid X) = \frac{P(X \mid \theta)\, P(\theta)}{P(X)}
$$

where $P(\theta \mid X)$ is the posterior probability, $P(\theta)$ is the prior probability, $P(X \mid \theta)$ is the likelihood, and $P(X)$ is the marginal likelihood, or evidence. The prior $P(\theta)$ represents the initial belief about the parameter $\theta$ before the data $X$ are observed. The posterior $P(\theta \mid X)$ updates this belief using the likelihood function $P(X \mid \theta)$. This theorem is the foundation of Bayesian inference and allows probabilities to be updated as new data are observed.
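Write θ for the parameter. The evidence in the denominator is usually not known directly. Under the standard law of total probability, it is obtained by summing or integrating the numerator over all parameter values. This is why the posterior is often stated only up to proportionality:

$$
P(X) = \sum_{\theta} P(X \mid \theta)\, P(\theta) \quad \text{(discrete } \theta\text{)}, \qquad
P(X) = \int P(X \mid \theta)\, P(\theta)\, d\theta \quad \text{(continuous } \theta\text{)}
$$

$$
P(\theta \mid X) \propto P(X \mid \theta)\, P(\theta)
$$

Because $P(X)$ does not depend on $\theta$, it acts only as a normalizing constant that makes the posterior sum or integrate to one.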

Common Misconceptions and Pitfalls

Posterior probability is the updated probability of an event given new evidence, while prior probability represents the initial belief before the data are observed. A common misconception is that the prior becomes irrelevant once data are available. In fact the prior can strongly influence the posterior, especially when the data are limited or noisy. A related pitfall is a poorly chosen prior, which biases the posterior and can mislead decision-making and inference.
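The influence of the prior can be illustrated with a hypothetical Beta-Binomial setup. The sketch compares a weak Beta(1, 1) prior with a strong Beta(50, 50) prior centered on 0.5, given data with an 80% success rate. The helper `beta_posterior_mean` and all the numbers are assumptions for illustration.

```python
def beta_posterior_mean(alpha: float, beta: float, successes: int, trials: int) -> float:
    """Posterior mean of a Beta(alpha, beta) prior after binomial data (illustrative)."""
    return (alpha + successes) / (alpha + beta + trials)


# Hypothetical priors: one nearly uninformative, one concentrated around 0.5.
priors = {"weak Beta(1, 1)": (1, 1), "strong Beta(50, 50)": (50, 50)}
for trials in (10, 1000):
    successes = trials * 4 // 5  # hypothetical data with an 80% success rate
    for name, (a, b) in priors.items():
        m = beta_posterior_mean(a, b, successes, trials)
        print(f"n={trials:5d}  {name:20s}  posterior mean={m:.3f}")
```

With 10 observations, the strong prior holds the posterior mean near 0.53 while the weak prior gives 0.75. With 1,000 observations, the two estimates (about 0.77 and 0.80) are much closer. The prior therefore matters most when data are scarce.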

Practical Applications in Research and Industry

Posterior probability, updated as new data arrive, is central to adaptive systems that must make decisions in real time, such as personalized medicine and machine learning models. Prior probability encodes initial beliefs or historical data and guides early-stage research design and risk assessment in fields such as finance and engineering. Feeding each posterior back in as the prior for the next round of data allows models to be refined continuously. This can improve predictive accuracy in both scientific investigations and industrial processes.

libterm.com