Orthogonality is a fundamental concept in mathematics and computer science that refers to independence, or non-interference, between components: each can vary or change without affecting the others. In system design and programming, this principle supports modular architectures, simplifies debugging, and improves maintainability, because a change in one part does not ripple into others. In linear algebra and statistics, orthogonality means that vectors or variables are perpendicular, and it is easiest to understand in contrast with collinearity, where vectors or variables lie along the same line.

Table of Comparison

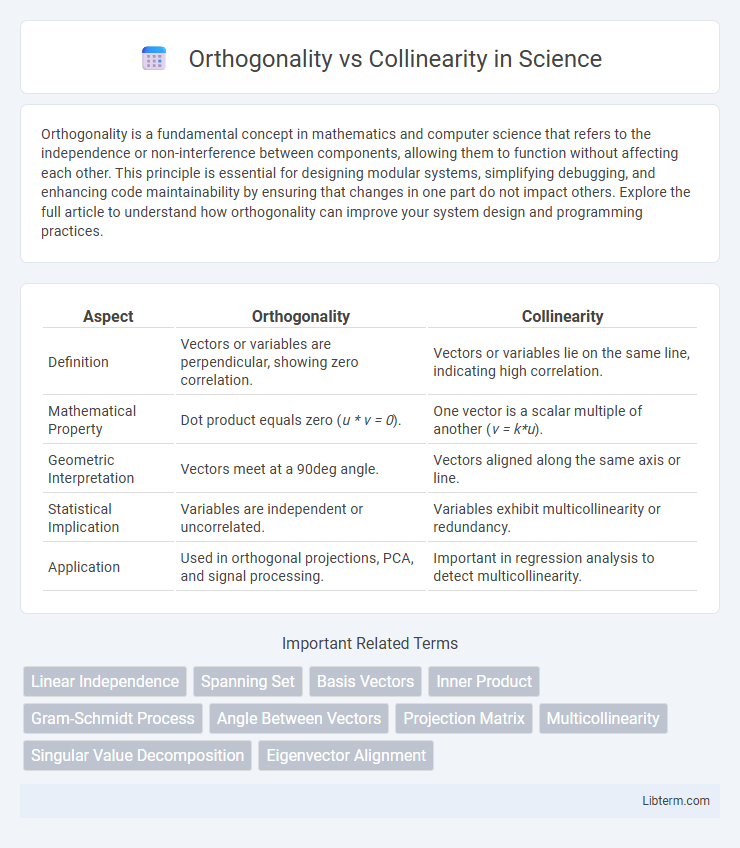

| Aspect | Orthogonality | Collinearity |
|---|---|---|
| Definition | Vectors or variables are perpendicular; for mean-centered variables, this means zero correlation. | Vectors or variables lie on the same line, indicating strong correlation. |
| Mathematical Property | Dot product equals zero (u · v = 0). | One vector is a scalar multiple of the other (v = k·u for some scalar k). |
| Geometric Interpretation | Vectors meet at a 90° angle. | Vectors are aligned along the same line (an angle of 0° or 180°). |
| Statistical Implication | Variables are uncorrelated (which does not by itself imply independence). | Variables exhibit multicollinearity or redundancy. |
| Application | Used in orthogonal projections, PCA, and signal processing. | A key concern in regression analysis, where multicollinearity must be detected. |

Understanding Orthogonality in Data Analysis

In data analysis, two variables (viewed as vectors of observations) are orthogonal when their dot product equals zero. For mean-centered variables this is equivalent to zero correlation, although uncorrelated variables are not necessarily statistically independent. Orthogonal predictors carry no linear redundancy, so they introduce no multicollinearity, which makes regression models more stable and easier to interpret. Unlike collinear variables, which are linearly dependent, orthogonal variables let each predictor's effect on the dependent variable be isolated: with mean-centered orthogonal predictors, each coefficient estimate is the same whether or not the other predictors are included in the model.
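To make the link between orthogonality and correlation concrete, here is a minimal sketch in plain Python with no libraries assumed. The helper names `dot`, `center`, and `pearson` are illustrative, not from any particular package. It computes Pearson correlation as the cosine of the angle between mean-centered vectors, which shows that orthogonality implies zero correlation only after centering.

```python
import math


def dot(u, v):
    """Dot (inner) product of two equal-length sequences."""
    return sum(a * b for a, b in zip(u, v))


def center(v):
    """Subtract the mean so the values sum to zero."""
    m = sum(v) / len(v)
    return [a - m for a in v]


def pearson(u, v):
    """Pearson correlation: the cosine of the angle between the centered vectors."""
    cu, cv = center(u), center(v)
    return dot(cu, cv) / math.sqrt(dot(cu, cu) * dot(cv, cv))


# Mean-centered and orthogonal: the correlation is zero.
x = [1.0, -1.0, 1.0, -1.0]
y = [1.0, 1.0, -1.0, -1.0]
print(dot(x, y), pearson(x, y))  # 0.0 0.0

# Orthogonal as raw vectors, yet perfectly negatively correlated once centered.
a = [1.0, 0.0]
b = [0.0, 1.0]
print(dot(a, b), pearson(a, b))  # 0.0 -1.0
```

The second pair shows why orthogonality of raw, uncentered vectors should not be read as zero correlation.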

Defining Collinearity in Statistics

Collinearity in statistics is a linear relationship between two predictor variables in a regression model, such that one can be expressed as a near-perfect linear function of the other. It makes individual regression coefficients hard to estimate accurately, because it inflates their standard errors and makes the estimates unstable. Multicollinearity extends the idea to three or more predictors, where one predictor is nearly a linear combination of the others; understanding collinearity is the basis for diagnosing multicollinearity and for interpreting coefficients reliably in multiple regression.

Mathematical Foundations: Orthogonality vs Collinearity

In mathematics, two vectors are orthogonal when their inner (dot) product is zero, which corresponds to perpendicularity in an inner product space. Two vectors are collinear when they lie along the same line through the origin, that is, when one is a scalar multiple of the other, which makes them linearly dependent. Nonzero orthogonal vectors are always linearly independent (and, for mean-centered data, uncorrelated), whereas collinear vectors are directly proportional and therefore redundant.
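The two definitions can be stated compactly. The block below assumes nonzero vectors u and v in ℝⁿ with the standard dot product (other inner products behave the same way), where θ is the angle between them; the collinearity test is the equality case of the Cauchy-Schwarz inequality.

```latex
\begin{aligned}
\mathbf{u}\cdot\mathbf{v} &= \sum_{i=1}^{n} u_i v_i = \lVert\mathbf{u}\rVert\,\lVert\mathbf{v}\rVert\cos\theta \\
\text{orthogonal:}\quad \mathbf{u}\cdot\mathbf{v} &= 0 \iff \theta = 90^\circ \\
\text{collinear:}\quad \mathbf{v} &= k\,\mathbf{u} \text{ for some scalar } k
  \iff \lvert\mathbf{u}\cdot\mathbf{v}\rvert = \lVert\mathbf{u}\rVert\,\lVert\mathbf{v}\rVert
  \iff \theta \in \{0^\circ, 180^\circ\}
\end{aligned}
```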

Visualizing Orthogonality and Collinearity

Orthogonality is visualized as vectors or lines that meet at a right angle (90 degrees). Collinearity is visualized as points or vectors lying on the same straight line, which signals dependency or alignment. Plots on coordinate axes make these spatial relationships easy to see in vector analysis and multivariate statistics; for example, a scatterplot of two highly collinear variables shows points clustered tightly along a line.
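The geometric picture can also be checked numerically by computing the angle between two vectors from cos θ = (u · v) / (‖u‖ ‖v‖). This is a minimal sketch in plain Python; `angle_deg` is a hypothetical helper name, not a library function.

```python
import math


def angle_deg(u, v):
    """Angle between two nonzero vectors, in degrees."""
    uv = sum(a * b for a, b in zip(u, v))
    uu = sum(a * a for a in u)
    vv = sum(b * b for b in v)
    if uu == 0 or vv == 0:
        raise ValueError("angle is undefined for a zero vector")
    cos_theta = uv / math.sqrt(uu * vv)
    # Clamp to [-1, 1] to guard against floating-point round-off before acos.
    cos_theta = max(-1.0, min(1.0, cos_theta))
    return math.degrees(math.acos(cos_theta))


print(angle_deg([3, 0], [0, 2]))    # ~90: orthogonal
print(angle_deg([1, 2], [2, 4]))    # ~0: collinear, same direction
print(angle_deg([1, 2], [-3, -6]))  # ~180: collinear, opposite direction
```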

Impact on Linear Regression Models

In linear regression, orthogonal (and, when mean-centered, uncorrelated) predictors yield stable coefficient estimates that are easy to interpret. Collinearity, where predictors are highly correlated, inflates the variance of the coefficient estimates, which makes inference about individual parameters unreliable; in-sample predictions may still be accurate, but they become less trustworthy when new data do not follow the same correlation pattern among predictors. Diagnosing collinearity with variance inflation factor (VIF) analysis and addressing it with methods such as principal component regression make the model more robust.
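The variance inflation can be seen directly in the design matrix. Under the usual ordinary least squares assumptions, Var(β̂) = σ²(XᵀX)⁻¹, so the diagonal of (XᵀX)⁻¹ gives the factor multiplying σ² for each coefficient. The sketch below assumes two mean-centered predictors and a model without an intercept; the data and the helper name `coefficient_variance_factors` are hypothetical.

```python
def coefficient_variance_factors(x1, x2):
    """Diagonal of (X^T X)^{-1} for a two-column design matrix X = [x1, x2].

    Under the usual OLS assumptions, Var(beta_hat) = sigma^2 * (X^T X)^{-1},
    so each entry is the factor that multiplies sigma^2 for that coefficient.
    """
    a = sum(p * p for p in x1)              # x1 . x1
    b = sum(p * q for p, q in zip(x1, x2))  # x1 . x2
    d = sum(q * q for q in x2)              # x2 . x2
    det = a * d - b * b
    if det == 0:
        raise ValueError("predictors are perfectly collinear; X^T X is singular")
    # The inverse of [[a, b], [b, d]] is [[d, -b], [-b, a]] / det.
    return d / det, a / det


x1 = [1.0, -1.0, 1.0, -1.0]
orthogonal_x2 = [1.0, 1.0, -1.0, -1.0]  # x1 . x2 == 0
collinear_x2 = [0.9, -1.1, 1.1, -0.9]   # hypothetical, nearly equal to x1

print(coefficient_variance_factors(x1, orthogonal_x2))  # (0.25, 0.25)
print(coefficient_variance_factors(x1, collinear_x2))   # roughly (25.25, 25.0)
```

The near-collinear pair inflates the first coefficient's variance factor about 101-fold relative to the orthogonal design, which matches the VIF of 1/(1 - r^2) for this pair of predictors.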

Detection Techniques for Collinearity Issues

The main technique for detecting collinearity is the variance inflation factor (VIF), which quantifies how much the variance of an estimated regression coefficient is increased by the predictor's correlation with the other predictors. Condition indices and eigenvalue analysis identify dependencies among predictors by examining the eigenvalues of their correlation matrix: eigenvalues close to zero, and hence large condition indices, signal near-linear dependencies. Pairwise correlation matrices and scatterplot matrices reveal high correlations between pairs of independent variables, numerically and visually respectively, although pairwise checks can miss multicollinearity that involves three or more variables.
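VIF can be computed without fitting a separate regression for each predictor: VIF_j equals the j-th diagonal entry of the inverse of the predictors' correlation matrix. The dependency-free sketch below uses hypothetical helper names and made-up data; in practice a numerical library is the usual choice (statsmodels, for example, provides a `variance_inflation_factor` function).

```python
import math


def corr_matrix(columns):
    """Pearson correlation matrix of a list of equal-length numeric columns."""
    centered = []
    for col in columns:
        m = sum(col) / len(col)
        centered.append([v - m for v in col])
    norms = [math.sqrt(sum(v * v for v in c)) for c in centered]
    k = len(columns)
    return [
        [
            sum(p * q for p, q in zip(centered[i], centered[j])) / (norms[i] * norms[j])
            for j in range(k)
        ]
        for i in range(k)
    ]


def invert(matrix):
    """Invert a square matrix by Gauss-Jordan elimination with partial pivoting."""
    n = len(matrix)
    aug = [list(row) + [1.0 if i == j else 0.0 for j in range(n)]
           for i, row in enumerate(matrix)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        if abs(aug[pivot][col]) < 1e-12:
            raise ValueError("matrix is singular: predictors are perfectly collinear")
        aug[col], aug[pivot] = aug[pivot], aug[col]
        p = aug[col][col]
        aug[col] = [v / p for v in aug[col]]
        for r in range(n):
            if r != col:
                f = aug[r][col]
                aug[r] = [rv - f * cv for rv, cv in zip(aug[r], aug[col])]
    return [row[n:] for row in aug]


def vif(columns):
    """VIF_j is the j-th diagonal entry of the inverse correlation matrix."""
    inv = invert(corr_matrix(columns))
    return [inv[j][j] for j in range(len(columns))]


# Mutually orthogonal, mean-centered columns: every VIF is 1.
print(vif([[1.0, -1.0, 1.0, -1.0],
           [1.0, 1.0, -1.0, -1.0],
           [1.0, -1.0, -1.0, 1.0]]))  # [1.0, 1.0, 1.0]

# Hypothetical data in which x2 is nearly 2 * x1: their VIFs are very large.
x1 = [1.0, 2.0, 3.0, 4.0, 5.0]
x2 = [2.1, 3.9, 6.2, 7.8, 10.1]
x3 = [3.0, 1.0, 4.0, 1.0, 5.0]
print(vif([x1, x2, x3]))
```

A commonly cited rule of thumb treats VIF values above 5 or 10 as a sign of problematic collinearity.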

Consequences of Ignoring Orthogonality

When predictors are far from orthogonal, the resulting multicollinearity inflates the variances of the estimated regression coefficients and reduces their precision. The estimates become unstable, often changing substantially when observations or variables are added or removed, which makes it difficult to determine the true effect of each predictor on the response variable. Left unaddressed, this undermines model interpretability and weakens hypothesis tests and confidence intervals for individual coefficients.
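The variance inflation has a standard closed form. For ordinary least squares with an intercept and homoscedastic errors with variance σ², the sampling variance of the j-th slope is given below, where x̄_j is the mean of predictor j and R_j² is the R² obtained by regressing predictor j on the other predictors.

```latex
\operatorname{Var}(\hat{\beta}_j)
  = \frac{\sigma^2}{\sum_{i=1}^{n} (x_{ij} - \bar{x}_j)^2}
    \cdot \frac{1}{1 - R_j^2},
\qquad
\mathrm{VIF}_j = \frac{1}{1 - R_j^2}
```

The second factor is the VIF: it equals 1 when predictor j is uncorrelated with the others and grows without bound as R_j² approaches 1.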

Practical Examples: Orthogonality and Collinearity in Practice

Orthogonality appears in practice in experimental design: in a factorial experiment, factors such as temperature and pressure are set independently of one another, so their effects on a chemical reaction can be estimated separately, without confounding. Collinearity is common in observational data, especially in econometrics, where explanatory variables such as income and education level are often highly correlated, which makes it hard to estimate the separate effect of each on an outcome such as spending behavior. Understanding the distinction helps practitioners design better experiments and improve statistical models, addressing multicollinearity through variable selection or dimensionality reduction.
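In a two-level factorial experiment, the coded factor columns are orthogonal by construction, which is what allows each effect to be estimated separately. The sketch below assumes the common -1/+1 coding for low/high settings; the factor names and the `full_factorial` helper are illustrative, not taken from a specific experiment or library.

```python
from itertools import combinations, product


def full_factorial(k):
    """Runs of a two-level full factorial design for k factors, coded -1 (low) and +1 (high)."""
    return list(product([-1, 1], repeat=k))


def dot(u, v):
    """Dot product of two equal-length sequences."""
    return sum(a * b for a, b in zip(u, v))


runs = full_factorial(2)
temperature = [run[0] for run in runs]
pressure = [run[1] for run in runs]
interaction = [t * p for t, p in zip(temperature, pressure)]

print(runs)  # [(-1, -1), (-1, 1), (1, -1), (1, 1)]

# Every pair of columns (main effects and their interaction) is orthogonal.
columns = [temperature, pressure, interaction]
print([dot(u, v) for u, v in combinations(columns, 2)])  # [0, 0, 0]
```

The same check passes for any number of factors in a full two-level factorial; for example, the three columns of `full_factorial(3)` are also pairwise orthogonal.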

Remedies and Solutions for Collinearity

Collinearity in regression analysis can be addressed by removing or combining highly correlated predictor variables, which reduces redundancy and improves model stability. Dimensionality reduction techniques such as principal component analysis (PCA) transform correlated variables into orthogonal, uncorrelated components, so a regression on those components has no multicollinearity, although its coefficients no longer correspond directly to the original variables. Regularization methods such as ridge and lasso regression penalize coefficient size and shrink coefficients toward zero, which stabilizes the estimates under collinearity; lasso can also set some coefficients exactly to zero, effectively choosing among correlated predictors.
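A two-variable sketch of the PCA remedy in plain Python: rotating the centered data onto its principal axes yields component scores whose dot product is zero. The closed-form rotation angle used here is specific to the 2 × 2 case (an assumption made to avoid a linear-algebra library), and both the data and the `pca_2d` helper are hypothetical.

```python
import math


def pca_2d(x, y):
    """Rotate two variables onto their principal axes (2-D PCA).

    Returns the scores on the first and second principal components.
    """
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cx = [v - mx for v in x]
    cy = [v - my for v in y]
    sxx = sum(a * a for a in cx)
    syy = sum(b * b for b in cy)
    sxy = sum(a * b for a, b in zip(cx, cy))
    # Rotation angle that diagonalizes the scatter matrix [[sxx, sxy], [sxy, syy]].
    theta = 0.5 * math.atan2(2.0 * sxy, sxx - syy)
    c, s = math.cos(theta), math.sin(theta)
    pc1 = [c * a + s * b for a, b in zip(cx, cy)]
    pc2 = [-s * a + c * b for a, b in zip(cx, cy)]
    return pc1, pc2


x1 = [1.0, 2.0, 3.0, 4.0, 5.0]
x2 = [2.1, 3.9, 6.2, 7.8, 10.1]  # hypothetical, nearly 2 * x1
pc1, pc2 = pca_2d(x1, x2)
print(sum(a * b for a, b in zip(pc1, pc2)))  # ~0: the components are orthogonal
```

Regressing the response on `pc1` alone would be a simple form of principal component regression; the trade-off is that the component's coefficient no longer maps directly onto either original variable.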

Key Differences and Practical Implications

Orthogonal vectors or variables are perpendicular and, when mean-centered, uncorrelated; as predictors they introduce no multicollinearity, which simplifies regression models and makes coefficients easier to interpret. Collinear (or highly correlated) variables produce unstable coefficient estimates and inflated standard errors that complicate model inference. In practice, orthogonal predictors make a model more robust, while collinearity calls for remedies such as variable selection, dimensionality reduction, or regularization.

libterm.com
