A file system cache improves your computer's performance by temporarily keeping frequently accessed file data in fast main memory (RAM), which shortens read and write times. Serving data from memory instead of the disk speeds up applications and makes the whole system more responsive. The rest of this article explains how a file system cache works and how it compares with a distributed cache.

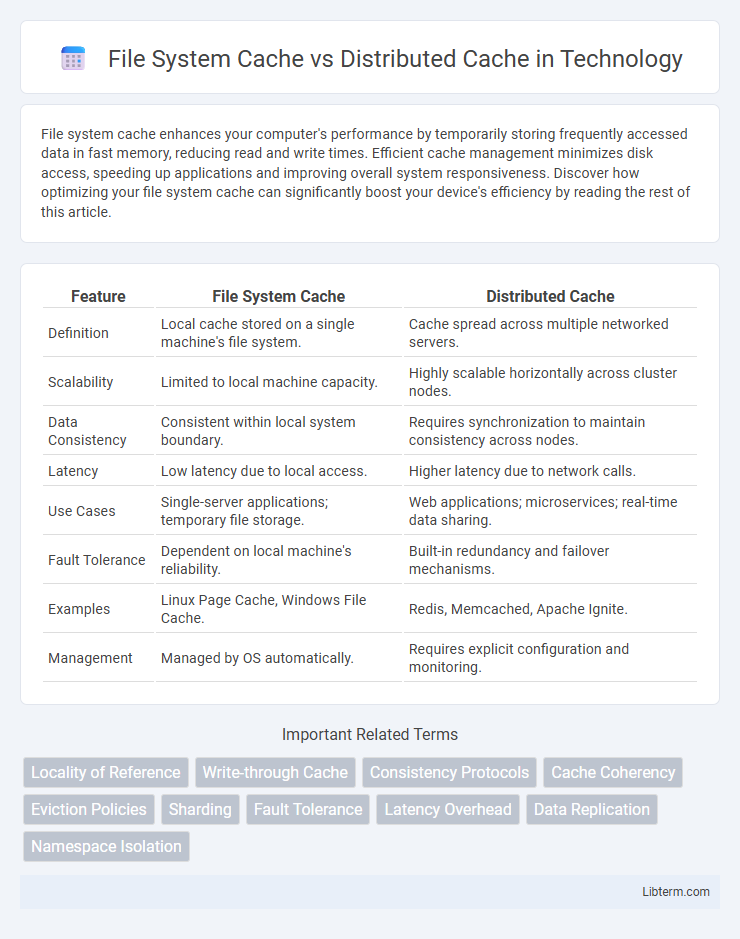

Comparison Table

| Feature | File System Cache | Distributed Cache |
|---|---|---|
| Definition | Cache of file data held in a single machine's main memory (RAM) and managed by the operating system. | In-memory cache spread across multiple networked servers. |
| Scalability | Limited to the memory of one machine. | Scales horizontally by adding cluster nodes. |
| Data Consistency | Consistent for all processes on the same machine. | Requires replication or invalidation to keep copies consistent across nodes. |
| Latency | Low, because access is local. | Higher, because each access involves a network round trip. |
| Use Cases | Single-server applications; temporary file storage. | Web applications; microservices; real-time data sharing between servers. |
| Fault Tolerance | Tied to the reliability of the local machine. | Replication and failover in some products (for example, Redis with Sentinel or Cluster); Memcached has no built-in replication. |
| Examples | Linux page cache, Windows file cache. | Redis, Memcached, Apache Ignite. |
| Management | Managed automatically by the OS. | Requires explicit configuration and monitoring. |

Introduction to File System Cache and Distributed Cache

File System Cache temporarily stores frequently accessed data blocks from the storage device in memory to reduce disk I/O latency, enhancing system performance by accelerating file read and write operations. Distributed Cache, in contrast, is a memory-based storage system that spans multiple networked servers to provide high-speed data access and scalability for large-scale applications. Both caching mechanisms optimize data retrieval but differ in architecture, scope, and use cases within modern computing environments.

Core Concepts and Definitions

File System Cache stores frequently accessed disk data in local memory to reduce read/write latency and improve application performance by minimizing physical disk operations. Distributed Cache is a networked memory store that enables multiple servers to share cached data, enhancing scalability, fault tolerance, and data availability in distributed computing environments. Both caches optimize data retrieval but serve different architectures: File System Cache operates within a single machine, while Distributed Cache supports coordinated caching across multiple nodes.

How File System Cache Works

A File System Cache keeps recently used file data blocks and file system metadata in memory to reduce disk I/O latency. When an application reads a file, the operating system first checks the cache: on a hit, the data is returned straight from memory; on a miss, it is read from disk and kept in the cache for later requests. Writes usually update the cached copy first and are flushed to disk later (write-back), which makes them fast but means that unflushed data exists only in memory for a while. When memory runs short, the OS evicts pages that have not been used recently. All of this happens locally on a single machine and mainly benefits applications that access the same files repeatedly.
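
To make the hit, miss, write-back, and eviction behavior concrete, here is a toy model in Python. It is a sketch under simplifying assumptions, not how any real kernel is implemented: the "disk" is a plain dictionary, `PageCache` is a hypothetical class, and plain LRU eviction stands in for the more elaborate replacement policies real operating systems use. Writes are only marked dirty in memory and reach the "disk" when a page is evicted or `flush()` is called.

```python
from collections import OrderedDict


class PageCache:
    """Toy model of an OS file system cache: a fixed number of in-memory
    page slots in front of a slower "disk", evicted in LRU order."""

    def __init__(self, disk, capacity):
        self.disk = disk              # dict: page number -> bytes (stands in for the device)
        self.capacity = capacity      # maximum number of cached pages
        self.pages = OrderedDict()    # page number -> [data, dirty flag], least recently used first
        self.disk_reads = 0
        self.disk_writes = 0

    def read(self, page_no):
        if page_no in self.pages:                 # cache hit: no disk I/O
            self.pages.move_to_end(page_no)
            return self.pages[page_no][0]
        self.disk_reads += 1                      # cache miss: fetch from disk, then keep it
        data = self.disk[page_no]
        self._insert(page_no, data, dirty=False)
        return data

    def write(self, page_no, data):
        # Write-back: update the page in memory and mark it dirty; the disk
        # is only written when the page is evicted or flush() is called.
        self._insert(page_no, data, dirty=True)

    def flush(self):
        # Write every dirty page to disk and mark it clean.
        for page_no, entry in self.pages.items():
            if entry[1]:
                self.disk[page_no] = entry[0]
                self.disk_writes += 1
                entry[1] = False

    def _insert(self, page_no, data, dirty):
        if page_no in self.pages:                 # update an already cached page
            entry = self.pages[page_no]
            entry[0] = data
            entry[1] = entry[1] or dirty
            self.pages.move_to_end(page_no)
            return
        if len(self.pages) >= self.capacity:      # cache full: evict least recently used page
            old_no, (old_data, old_dirty) = self.pages.popitem(last=False)
            if old_dirty:                         # write back dirty data before discarding it
                self.disk[old_no] = old_data
                self.disk_writes += 1
        self.pages[page_no] = [data, dirty]
```

For example, with `capacity=2`, reading page 0 twice costs a single disk read, and a write to a cached page leaves the disk unchanged until `flush()` runs or the page is evicted.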

How Distributed Cache Works

A Distributed Cache stores data in the memory of multiple networked servers. Compared with a file system cache, which is limited to one machine's memory, it offers far more total capacity and lets every application server share the same cached data; compared with querying a database or other backing store, it usually returns data faster. Keys are partitioned across nodes, typically by hashing, so each node holds only part of the data and the load is spread out. Some systems also replicate entries to additional nodes so that a copy survives when a node fails. Redis supports replication and automatic failover (through Redis Sentinel or Redis Cluster), whereas Memcached has no built-in replication: when a Memcached node fails, its entries are lost and the affected requests become cache misses until the data is reloaded.
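
A common way for clients to partition keys across cache nodes is consistent hashing, which many Memcached client libraries support; Redis Cluster instead assigns keys to 16384 fixed hash slots. The sketch below illustrates consistent hashing only. `HashRing`, the node names, and the choice of 100 virtual points per node are hypothetical choices for illustration, not any particular library's API.

```python
import bisect
import hashlib


def _hash(key):
    # Stable 64-bit hash (Python's built-in hash() is randomized per process).
    return int.from_bytes(hashlib.sha256(key.encode()).digest()[:8], "big")


class HashRing:
    """Consistent-hash ring that maps cache keys to cache node names."""

    def __init__(self, nodes, vnodes=100):
        self.vnodes = vnodes          # virtual points per node, for a smoother key spread
        self._ring = []               # sorted list of (point, node)
        for node in nodes:
            self.add(node)

    def add(self, node):
        for i in range(self.vnodes):
            bisect.insort(self._ring, (_hash(f"{node}#{i}"), node))

    def remove(self, node):
        # Drop the node's points; keys owned by other nodes keep their placement.
        self._ring = [(p, n) for p, n in self._ring if n != node]

    def node_for(self, key):
        if not self._ring:
            raise LookupError("no cache nodes available")
        # Walk clockwise to the first point at or after the key's hash, wrapping around.
        idx = bisect.bisect_left(self._ring, (_hash(key), ""))
        if idx == len(self._ring):
            idx = 0
        return self._ring[idx][1]
```

The design choice that matters is what happens on failure: when a node is removed, only the keys it owned move to other nodes, whereas a naive `hash(key) % node_count` scheme would remap most keys and empty most of the cache at once.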

Performance Comparison: File System vs Distributed Cache

A file system cache holds frequently accessed data in local memory, offering low latency and high throughput for single-node applications, but it cannot be shared or scaled across servers. A distributed cache, such as Redis or Memcached, stores data in memory across a cluster so that every server can read the same cached data; each access costs a network round trip, but that is typically much cheaper than fetching the data from a database or recomputing it. Performance therefore depends on the use case: a file system cache excels at local workloads with no network overhead, while a distributed cache provides predictable, low-latency shared access in multi-node environments.
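
A standard way to reason about cache performance is the effective (average) access time: hits pay the cache's cost and misses pay the cost of the slower layer behind it. The Python sketch below computes it; the function name is hypothetical, and the latency values are placeholders chosen only to show the arithmetic, not measurements of any real system.

```python
def effective_access_time(hit_ratio, hit_cost, miss_cost):
    """Average cost of one access to a cache in front of slower storage.

    hit_ratio: fraction of accesses served by the cache (0.0 to 1.0)
    hit_cost:  cost of a cache hit (any time unit)
    miss_cost: full cost of a miss, i.e. fetching from the slower layer, same unit
    """
    if not 0.0 <= hit_ratio <= 1.0:
        raise ValueError("hit_ratio must be between 0 and 1")
    return hit_ratio * hit_cost + (1.0 - hit_ratio) * miss_cost


# Placeholder numbers in microseconds, chosen only to illustrate the arithmetic.
for ratio in (0.90, 0.99):
    print(ratio, effective_access_time(ratio, hit_cost=1.0, miss_cost=5000.0))
```

With these placeholder values, raising the hit ratio from 90% to 99% cuts the average from about 501 to about 51 units, because the miss path dominates. This is why a slower but shared cache can still pay off: if one server's miss fills the cache for every other server, the cluster-wide hit ratio can rise even though each hit costs a network round trip.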

Scalability and Availability Considerations

A File System Cache provides low-latency access to frequently used data stored locally, but its capacity is limited by a single node's hardware, and that node is a single point of failure: if it goes down, the cache goes with it. Distributed Cache systems such as Redis or Memcached scale by partitioning data across multiple nodes, and systems that support replication (such as Redis) can also keep copies on other nodes to improve fault tolerance and availability. With adequate capacity and replication, they can absorb growing workloads and individual node failures with little or no service disruption, which makes them well suited to large-scale, mission-critical applications.
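
The availability benefit of replication can be illustrated with a simplified in-process model: each key is written to several nodes, and a read falls back to the next copy when a node is down. This is a sketch under loud assumptions: `Node` and `ReplicatedCache` are hypothetical classes, placement uses a simple hash-modulo scheme for brevity, and writes are applied synchronously, whereas Redis, for example, replicates asynchronously by default.

```python
import hashlib


class Node:
    """In-memory stand-in for one cache server; `up` simulates a crash."""

    def __init__(self, name):
        self.name = name
        self.data = {}
        self.up = True


class ReplicatedCache:
    """Stores each key on `replicas` consecutive nodes; reads fall back
    to the next copy if a node is down."""

    def __init__(self, nodes, replicas=2):
        self.nodes = nodes
        self.replicas = min(replicas, len(nodes))

    def _owners(self, key):
        # Pick a starting node from the key's hash, then take the next nodes in order.
        start = int(hashlib.sha256(key.encode()).hexdigest(), 16) % len(self.nodes)
        return [self.nodes[(start + i) % len(self.nodes)] for i in range(self.replicas)]

    def set(self, key, value):
        # Simplification: write synchronously to every owner that is currently up.
        for node in self._owners(key):
            if node.up:
                node.data[key] = value

    def get(self, key):
        for node in self._owners(key):
            if node.up and key in node.data:
                return node.data[key]
        return None   # cache miss: the caller reloads from the source of truth
```

With `replicas=2`, the value survives the failure of any one of its two owner nodes; only when both are down does the read turn into a miss.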

Use Cases and Suitability

File System Cache excels in scenarios requiring fast local access to frequently used data, such as single-server applications and development environments, due to its low latency and simplicity. Distributed Cache is ideal for large-scale, multi-node systems needing high availability, fault tolerance, and scalability, making it suitable for cloud-native applications and microservices architectures. Choosing between them depends on factors like data consistency requirements, infrastructure complexity, and performance demands.

Data Consistency and Reliability

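A File System Cache is local to one machine, so all processes on that machine see the same cached data. Its main reliability risk is durability: with write-back caching, data that an application has written but the OS has not yet flushed to disk can be lost if the machine crashes or loses power, unless the application explicitly forces it to storage (for example, with fsync on POSIX systems). A Distributed Cache, such as Redis or Memcached, spreads cached data across multiple nodes, and Redis can replicate it to replica nodes and fail over automatically, which improves availability. However, Redis replication is asynchronous by default, so a replica may briefly return stale data and a failover can lose the most recent writes. Distributed caches therefore usually offer eventual rather than strong consistency, and applications generally treat them as a fast copy of data whose durable source of truth lives elsewhere, such as in a database.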
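
On the file system cache side, an application that must not lose a write on a crash has to push the data past the cache explicitly. A minimal Python sketch using the standard `os.fsync` call (the helper name `durable_write` is hypothetical):

```python
import os


def durable_write(path, data):
    """Write bytes and ask the OS to force them from its file cache to storage."""
    with open(path, "wb") as f:
        f.write(data)           # goes into Python's buffer, not yet to the disk
        f.flush()               # hand Python's buffer to the OS (now in its file cache)
        os.fsync(f.fileno())    # block until the OS reports the data written to the device
```

Calling `fsync` on every write gives up much of the speed the cache provides, so applications typically reserve it for data that must survive a crash. On POSIX systems, making a newly created file's directory entry durable may also require syncing the containing directory.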

Pros and Cons of Each Approach

File System Cache offers fast local access by keeping frequently used data in memory on the same machine, resulting in low latency and no network overhead, but it cannot scale beyond one server or keep data consistent across multiple servers. Distributed Cache provides high scalability and lets a whole cluster share the same cached data, with fault tolerance where replication is supported, at the cost of network latency and more complex cache management. File System Cache suits single-node applications needing quick retrieval, whereas Distributed Cache excels in multi-node environments that require a shared, scalable cache.
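
The consistency drawback of purely local caching shows up as soon as several servers each keep a private copy of the same data. The Python sketch below models this with hypothetical `Database` and `AppServer` classes (plain dictionaries stand in for the database and the caches): with private caches, one server's update leaves another server serving a stale value, while a single shared cache, standing in for a distributed cache, keeps both servers in agreement.

```python
class Database:
    """Source of truth shared by all application servers."""

    def __init__(self):
        self.rows = {}


class AppServer:
    """Application server that caches reads either privately or in a shared cache."""

    def __init__(self, db, shared_cache=None):
        self.db = db
        self.local_cache = {}              # private to this server
        self.shared_cache = shared_cache   # one dict shared by all servers, if given

    def _cache(self):
        return self.shared_cache if self.shared_cache is not None else self.local_cache

    def read(self, key):
        cache = self._cache()
        if key not in cache:               # miss: load from the database and keep a copy
            cache[key] = self.db.rows[key]
        return cache[key]

    def write(self, key, value):
        self.db.rows[key] = value
        self._cache()[key] = value         # with a private cache, other servers never see this


db = Database()
db.rows["price"] = 10
s1, s2 = AppServer(db), AppServer(db)
s1.read("price"), s2.read("price")
s1.write("price", 12)
print(s2.read("price"))  # still the old cached value: s2's private copy was never updated
```

Real systems address this with invalidation messages, expiry times, or a shared cache; the sketch only shows why the problem arises.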

Choosing the Right Caching Solution

File System Cache keeps frequently accessed data in a single server's memory, providing fast reads for applications of limited scale with little administrative overhead. Distributed Cache spans multiple servers, improving scalability and fault tolerance for high-throughput applications that need to share data across a network in near real time. Selecting between File System Cache and Distributed Cache depends on factors like data consistency requirements, application scale, latency sensitivity, and infrastructure complexity.

libterm.com