Accurate estimation is crucial for project success, ensuring resources are allocated effectively and timelines are realistic. Leveraging data-driven techniques and expert insights enhances the precision of your estimates, minimizing risks and cost overruns. Explore the rest of this article to discover proven strategies that will improve your estimation process.

Table of Comparison

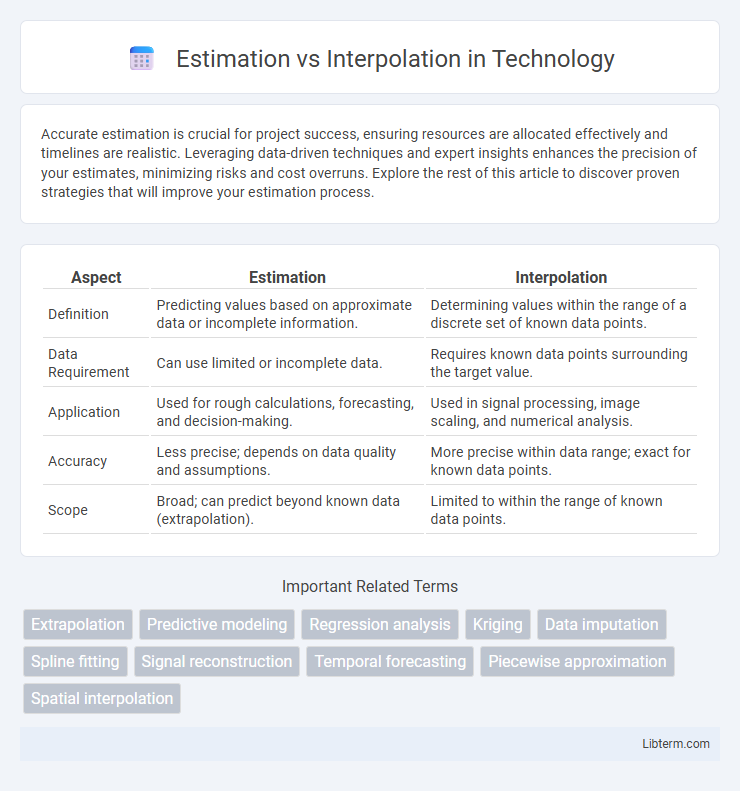

| Aspect | Estimation | Interpolation |

|---|---|---|

| Definition | Predicting values based on approximate data or incomplete information. | Determining values within the range of a discrete set of known data points. |

| Data Requirement | Can use limited or incomplete data. | Requires known data points surrounding the target value. |

| Application | Used for rough calculations, forecasting, and decision-making. | Used in signal processing, image scaling, and numerical analysis. |

| Accuracy | Less precise; depends on data quality and assumptions. | More precise within data range; exact for known data points. |

| Scope | Broad; can predict beyond known data (extrapolation). | Limited to within the range of known data points. |

Introduction to Estimation and Interpolation

Estimation involves predicting unknown values by analyzing known data points and using statistical or mathematical models to infer results beyond or within the range of observations. Interpolation specifically calculates intermediate values within the range of a discrete set of known data points, ensuring a smooth transition between them using methods such as linear, polynomial, or spline interpolation. Both techniques are essential in data analysis, signal processing, and scientific computing for reconstructing missing information and refining datasets.

Defining Estimation: Concepts and Methods

Estimation involves predicting unknown values based on observed data using statistical models or algorithms that account for underlying data patterns and variability, often applied in contexts with incomplete or noisy information. Common methods include point estimation, where a single value approximates the parameter of interest, and interval estimation, which provides a range of plausible values reflecting uncertainty. Techniques such as maximum likelihood estimation, Bayesian estimation, and least squares play essential roles in generating accurate and reliable estimates across fields like signal processing, econometrics, and machine learning.

Understanding Interpolation: Key Principles

Interpolation involves estimating unknown values by constructing new data points within the range of a discrete set of known data points. Key principles include maintaining smoothness, ensuring continuity, and minimizing error by fitting functions such as linear, polynomial, or spline curves through existing data. Accurate interpolation relies on the spatial distribution of known points and the appropriate selection of methods to capture underlying data patterns effectively.

Core Differences Between Estimation and Interpolation

Estimation involves predicting values for unknown data points based on a model or trend, often extrapolating beyond observed data, while interpolation specifically calculates values within the range of a discrete set of known data points. Estimation may rely on probabilistic methods and can handle uncertainty, whereas interpolation assumes data continuity and uses mathematical functions such as linear, polynomial, or spline interpolation to fill gaps. Core differences include that interpolation is confined to existing data boundaries and typically yields more precise results, whereas estimation encompasses broader predictions with potential extrapolation and varying degrees of accuracy.

Applications of Estimation in Real-World Scenarios

Estimation plays a critical role in real-world applications such as weather forecasting, where incomplete data is used to predict future conditions, and in financial modeling to approximate market trends and risks. In engineering, estimation techniques assist in project cost assessments and load calculations when exact measurements are unavailable. Unlike interpolation, which fills known data gaps within a range, estimation extends beyond observed data to provide actionable insights in uncertain environments.

Practical Uses of Interpolation in Data Analysis

Interpolation is widely used in data analysis for estimating values within the range of a discrete set of known data points, allowing for the generation of continuous datasets from sparse observations. Practical applications include filling missing data, smoothing noisy data, and enhancing visualization accuracy in fields such as meteorology, finance, and engineering. Techniques like linear interpolation, spline interpolation, and polynomial interpolation enable analysts to predict intermediate values with higher precision, improving decision-making and model reliability.

Types of Estimation Techniques

Estimation techniques primarily include point estimation, interval estimation, and Bayesian estimation, each serving distinct purposes in statistical analysis and data prediction. Point estimation provides a single best guess of a parameter, while interval estimation offers a range within which the parameter likely falls, often expressed with confidence intervals. Bayesian estimation incorporates prior knowledge through probability distributions, refining estimates as more data becomes available for more accurate decision-making.

Common Interpolation Methods Explained

Common interpolation methods include linear, polynomial, and spline interpolation, each designed to estimate unknown values within the range of a discrete set of known data points. Linear interpolation connects two adjacent points with a straight line, providing a simple and fast estimation, while polynomial interpolation fits a single polynomial curve through all data points, allowing for smoother but potentially oscillatory results. Spline interpolation, especially cubic splines, employs piecewise polynomials ensuring smoothness at data points and better handling of data variability, making it widely used in graphics and scientific computing.

Advantages and Limitations of Estimation and Interpolation

Estimation provides approximate values based on limited data, enabling quick decision-making with minimal computational resources, but its accuracy heavily depends on the quality and representativeness of the input data. Interpolation generates precise values within the range of known data points, offering high reliability for continuous data, yet it fails to predict values outside the sampled data range and may introduce errors if the underlying function is complex. Both techniques balance trade-offs between accuracy, computational effort, and applicability depending on the data structure and analysis requirements.

Choosing Between Estimation and Interpolation: Best Practices

Choosing between estimation and interpolation depends on the data availability and desired accuracy; interpolation is preferred when data points are dense and continuous, offering precise predictions within the existing data range. Estimation is suitable for sparse or incomplete data, providing broader approximations beyond known values. Best practices involve evaluating data quality, understanding model assumptions, and aligning methods with specific analytic goals to ensure reliable results.

Estimation Infographic

libterm.com

libterm.com