Distributed computing enables multiple interconnected computers to work together, processing complex tasks more efficiently than a single machine. This approach enhances scalability, fault tolerance, and resource sharing across diverse systems.

Table of Comparison

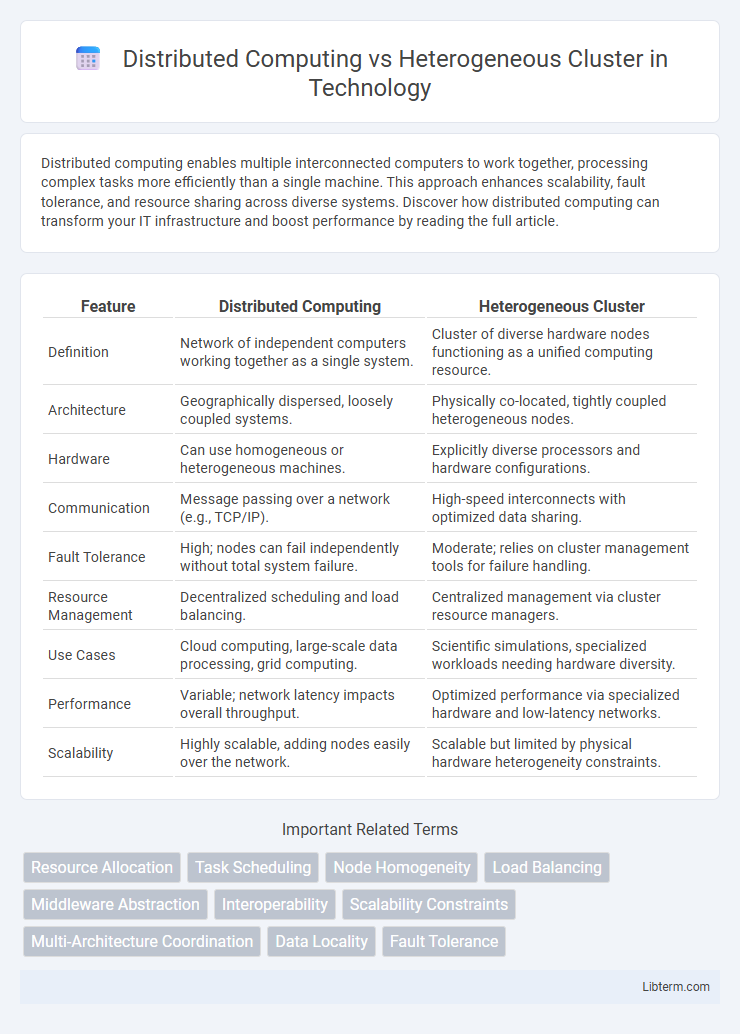

| Feature | Distributed Computing | Heterogeneous Cluster |
|---|---|---|
| Definition | Network of independent computers working together as a single system. | Cluster of diverse hardware nodes functioning as a unified computing resource. |
| Architecture | Geographically dispersed, loosely coupled systems. | Physically co-located, tightly coupled heterogeneous nodes. |
| Hardware | Can use homogeneous or heterogeneous machines. | Explicitly diverse processors and hardware configurations. |
| Communication | Message passing over a network (e.g., TCP/IP). | High-speed interconnects with optimized data sharing. |
| Fault Tolerance | High; nodes can fail independently without total system failure. | Moderate; relies on cluster management tools for failure handling. |
| Resource Management | Decentralized scheduling and load balancing. | Centralized management via cluster resource managers. |
| Use Cases | Cloud computing, large-scale data processing, grid computing. | Scientific simulations, specialized workloads needing hardware diversity. |
| Performance | Variable; network latency impacts overall throughput. | Optimized performance via specialized hardware and low-latency networks. |
| Scalability | Highly scalable, adding nodes easily over the network. | Scalable but limited by physical hardware heterogeneity constraints. |

Introduction to Distributed Computing and Heterogeneous Clusters

Distributed computing enables multiple independent computers to work together as a single system, enhancing processing power and fault tolerance by distributing tasks across networked nodes. Heterogeneous clusters consist of diverse hardware and software configurations, combining different types of processors, memory architectures, and operating systems to optimize performance for specific workloads. This approach leverages the strengths of varied computing resources while managing compatibility and communication challenges inherent in mixed environments.

Core Concepts: Distributed vs. Heterogeneous Systems

Distributed computing involves multiple interconnected computers working together to perform tasks, emphasizing resource sharing, scalability, and fault tolerance across a network. A heterogeneous cluster consists of diverse hardware or software configurations within a single system, optimizing performance by matching varied computing resources to specific workloads. The core difference is one of emphasis: distributed systems prioritize networked collaboration and fault tolerance, while heterogeneous clusters focus on integrating diverse components for efficient, specialized processing.

Architecture Differences: A Comparative Overview

Distributed computing architecture involves multiple interconnected nodes that collaborate to perform tasks by sharing resources and workload, often across geographically dispersed locations, ensuring scalability and fault tolerance. In contrast, heterogeneous cluster architecture consists of physically co-located diverse hardware systems unified under a single management framework, optimizing performance by leveraging varied computing capabilities such as CPUs, GPUs, and specialized accelerators. The core architectural difference lies in distributed computing's emphasis on networked coordination across multiple systems versus heterogeneous clusters' integration of different hardware types within a single system environment.

Scalability and Flexibility Analysis

Distributed computing systems achieve high scalability by partitioning tasks across multiple nodes, enabling parallel processing and efficient resource utilization. Heterogeneous clusters enhance flexibility by integrating diverse hardware architectures, such as CPUs, GPUs, and FPGAs, optimizing performance for varied workloads. Scalability in heterogeneous clusters depends on effective workload distribution and resource management to exploit the unique capabilities of each component.
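The partitioning idea can be illustrated with a minimal sketch. It assumes that local threads stand in for networked nodes, and the names `partition` and `run_partitioned` are hypothetical helpers, not part of any particular framework.

```python
from concurrent.futures import ThreadPoolExecutor


def partition(items, num_nodes):
    """Split items into num_nodes contiguous chunks whose sizes differ by at most one."""
    base, extra = divmod(len(items), num_nodes)
    chunks, start = [], 0
    for i in range(num_nodes):
        size = base + (1 if i < extra else 0)  # the first `extra` chunks get one more item
        chunks.append(items[start:start + size])
        start += size
    return chunks


def run_partitioned(items, num_nodes, work):
    """Apply `work` to each chunk in parallel and return the partial results in order.

    Assumption: each thread plays the role of one node; a real system would
    send the chunks over the network instead.
    """
    chunks = partition(items, num_nodes)
    with ThreadPoolExecutor(max_workers=num_nodes) as pool:
        return list(pool.map(work, chunks))


if __name__ == "__main__":
    data = list(range(10))
    print(partition(data, 3))                  # [[0, 1, 2, 3], [4, 5, 6], [7, 8, 9]]
    print(sum(run_partitioned(data, 3, sum)))  # 45
```

Equal-sized chunks suit nodes of similar speed; on a heterogeneous cluster the chunk sizes would normally be weighted by each node's capability.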

Performance Optimization: Key Considerations

Performance optimization in distributed computing relies on efficient resource allocation, minimizing network latency, and balancing workload across multiple nodes to maximize throughput and reduce execution time. Heterogeneous clusters require specialized scheduling algorithms that account for varied hardware capabilities, memory architectures, and processing speeds to achieve optimal performance. Key considerations include data locality, communication overhead, and adaptive load balancing to fully leverage the computational power of diverse cluster components.
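One simple way to account for varied processing speeds is a greedy earliest-finish-time heuristic, sketched below. This is an illustrative technique chosen for brevity, not the scheduler of any specific cluster manager. The task sizes, node names, and speeds are made-up values, and the model ignores data transfer cost.

```python
def schedule(tasks, speeds):
    """Greedy earliest-finish-time scheduling.

    tasks:  dict mapping task name -> amount of work (arbitrary units)
    speeds: dict mapping node name -> work units processed per second
    Returns (assignment, finish_times).
    """
    finish = {node: 0.0 for node in speeds}
    assignment = {node: [] for node in speeds}
    # Place the largest tasks first so small ones can fill the remaining gaps.
    for name, work in sorted(tasks.items(), key=lambda kv: kv[1], reverse=True):
        # Pick the node on which this task would complete soonest.
        best = min(speeds, key=lambda n: finish[n] + work / speeds[n])
        finish[best] += work / speeds[best]
        assignment[best].append(name)
    return assignment, finish


if __name__ == "__main__":
    tasks = {"a": 8, "b": 4, "c": 3, "d": 2}   # hypothetical workloads
    speeds = {"cpu": 1.0, "gpu": 4.0}          # hypothetical relative speeds
    assignment, finish = schedule(tasks, speeds)
    print(assignment)            # {'cpu': ['c'], 'gpu': ['a', 'b', 'd']}
    print(max(finish.values()))  # 3.5
```

A production scheduler would also weigh data locality and communication overhead, which this sketch deliberately leaves out.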

Resource Management and Allocation Strategies

Distributed computing coordinates multiple interconnected computers to share workloads, and it requires dynamic resource management algorithms to optimize CPU, memory, and network usage across nodes that are often, though not necessarily, homogeneous. In contrast, heterogeneous clusters consist of diverse hardware architectures, so resource allocation strategies must account for varying processing capabilities, power consumption, and compatibility, often through workload profiling and adaptive scheduling. Both kinds of system rely on load balancing, fault tolerance, and scalability techniques, but heterogeneous clusters demand more sophisticated methods to maximize overall performance and efficiency.
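Workload profiling and adaptive allocation can be sketched as follows, under the assumption that each node's throughput is tracked with an exponential moving average and that work is then divided in proportion to those estimates. The function names, the smoothing factor `alpha`, and the throughput figures are all hypothetical.

```python
def update_profile(profile, node, observed, alpha=0.5):
    """Blend a new throughput measurement into the node's running estimate."""
    previous = profile.get(node)
    if previous is None:
        profile[node] = observed
    else:
        profile[node] = alpha * observed + (1 - alpha) * previous
    return profile


def proportional_shares(total_items, profile):
    """Divide total_items among nodes in proportion to their profiled throughput."""
    total_rate = sum(profile.values())
    raw = {node: total_items * rate / total_rate for node, rate in profile.items()}
    shares = {node: int(value) for node, value in raw.items()}
    # Hand out items lost to rounding, largest fractional remainder first.
    leftover = total_items - sum(shares.values())
    by_remainder = sorted(raw, key=lambda n: raw[n] - shares[n], reverse=True)
    for node in by_remainder[:leftover]:
        shares[node] += 1
    return shares


if __name__ == "__main__":
    profile = {"cpu": 100.0, "gpu": 300.0}   # items per second, made-up numbers
    update_profile(profile, "gpu", 500.0)    # the GPU measured faster than estimated
    print(profile)                           # {'cpu': 100.0, 'gpu': 400.0}
    print(proportional_shares(1000, profile))  # {'cpu': 200, 'gpu': 800}
```

Re-running `update_profile` after each batch lets the allocation adapt as node performance drifts.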

Fault Tolerance and Reliability Comparison

Distributed computing systems enhance fault tolerance by replicating data across multiple nodes, ensuring system reliability even when individual components fail. Heterogeneous clusters, composed of varied hardware and software configurations, face challenges in achieving uniform fault tolerance due to inconsistencies in performance and compatibility. Despite these challenges, advanced load balancing and redundancy strategies in heterogeneous clusters can increase system reliability by efficiently managing node failures and resource variability.
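The effect of replication on reliability can be shown with a small in-memory sketch. The `ReplicatedStore` class and its placement rule are assumptions made for illustration; real systems add consistency protocols, failure detection, and repair of stale replicas.

```python
class ReplicatedStore:
    """Toy key-value store that keeps each key on `replication_factor` nodes."""

    def __init__(self, nodes, replication_factor=2):
        self.order = list(nodes)
        self.data = {name: {} for name in self.order}
        self.alive = set(self.order)
        self.rf = replication_factor

    def replicas_for(self, key):
        """Deterministically pick rf consecutive nodes for this key."""
        start = sum(key.encode("utf-8")) % len(self.order)
        return [self.order[(start + i) % len(self.order)] for i in range(self.rf)]

    def put(self, key, value):
        targets = [n for n in self.replicas_for(key) if n in self.alive]
        if not targets:
            raise RuntimeError("no live replica available for write")
        for node in targets:
            self.data[node][key] = value

    def get(self, key):
        # Fall through the replica list until a live copy is found.
        for node in self.replicas_for(key):
            if node in self.alive and key in self.data[node]:
                return self.data[node][key]
        raise KeyError(key)

    def fail(self, node):
        """Simulate a node crash."""
        self.alive.discard(node)


if __name__ == "__main__":
    store = ReplicatedStore(["n1", "n2", "n3"], replication_factor=2)
    store.put("job-42", "done")
    store.fail(store.replicas_for("job-42")[0])  # lose the first replica
    print(store.get("job-42"))                   # still readable from the second
```

With a replication factor of two, any single node can fail without losing a key; losing both replicas of a key makes it unavailable.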

Use Cases and Industry Applications

Distributed computing enables large-scale data processing across multiple networked computers, ideal for industries like finance for real-time fraud detection and scientific research requiring high-performance simulations. Heterogeneous clusters combine diverse hardware types, such as CPUs, GPUs, and TPUs, optimizing workloads in AI training, multimedia rendering, and biotech drug discovery where specialized processing boosts efficiency. Both technologies drive innovation in cloud services, big data analytics, and complex computational tasks, but heterogeneous clusters particularly excel in environments demanding tailored hardware acceleration.

Challenges and Limitations in Both Approaches

Distributed computing faces challenges such as network latency, data consistency, and fault tolerance, which complicate task synchronization across geographically dispersed nodes. Heterogeneous clusters struggle with hardware and software compatibility, leading to inefficient resource utilization and complex workload scheduling. Both approaches exhibit scalability limitations due to overhead in managing diverse components and maintaining system reliability under dynamic workloads.
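A toy cost model makes the scalability limit concrete. It assumes, purely for illustration, that compute time divides evenly across nodes while coordination overhead grows linearly with node count; real overhead curves depend on the workload and the interconnect.

```python
def estimated_time(nodes, work, overhead_per_node):
    """Assumed model: time = work / nodes + overhead_per_node * nodes."""
    if nodes < 1:
        raise ValueError("nodes must be at least 1")
    return work / nodes + overhead_per_node * nodes


def best_node_count(work, overhead_per_node, max_nodes):
    """Search 1..max_nodes for the node count with the lowest estimated time."""
    return min(
        range(1, max_nodes + 1),
        key=lambda n: estimated_time(n, work, overhead_per_node),
    )


if __name__ == "__main__":
    work, overhead = 100.0, 1.0  # hypothetical seconds of work and per-node overhead
    for n in (1, 5, 10, 20, 40):
        print(n, estimated_time(n, work, overhead))
    print(best_node_count(work, overhead, 64))  # 10
```

Under this model, adding nodes beyond the optimum increases total time, because the overhead term outgrows the savings from parallelism.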

Future Trends and Evolving Technologies

Future trends in distributed computing emphasize greater scalability and AI-driven resource management that optimizes workload distribution across heterogeneous clusters. Emerging technologies such as edge computing and blockchain may extend heterogeneous clusters to more diverse architectures: edge computing can reduce latency by moving computation closer to data sources, while blockchain-style consensus is being explored as a way to improve fault tolerance. Advances in hardware accelerators and software frameworks are improving interoperability and dynamic resource allocation within heterogeneous environments.


libterm.com
