Distribution shift occurs when the statistical properties of data change between training and deployment, causing prediction errors in machine learning models. Understanding different types of distribution shifts, such as covariate or label shift, is crucial for maintaining model accuracy and robustness. Explore the rest of the article to learn how to detect, adapt to, and mitigate distribution shifts effectively in your projects.

Table of Comparison

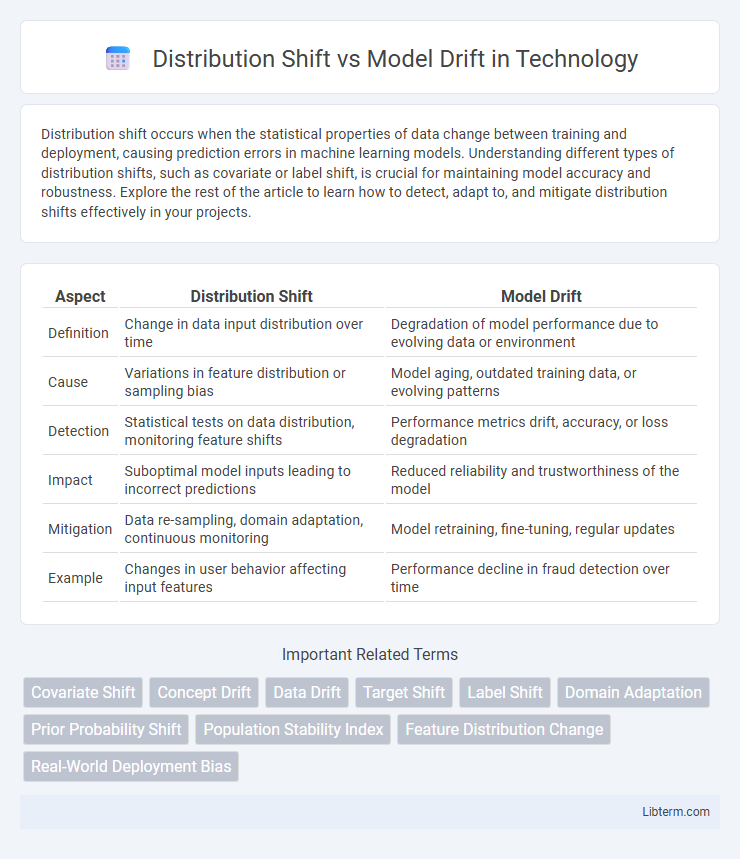

| Aspect | Distribution Shift | Model Drift |

|---|---|---|

| Definition | Change in data input distribution over time | Degradation of model performance due to evolving data or environment |

| Cause | Variations in feature distribution or sampling bias | Model aging, outdated training data, or evolving patterns |

| Detection | Statistical tests on data distribution, monitoring feature shifts | Performance metrics drift, accuracy, or loss degradation |

| Impact | Suboptimal model inputs leading to incorrect predictions | Reduced reliability and trustworthiness of the model |

| Mitigation | Data re-sampling, domain adaptation, continuous monitoring | Model retraining, fine-tuning, regular updates |

| Example | Changes in user behavior affecting input features | Performance decline in fraud detection over time |

Understanding Distribution Shift and Model Drift

Distribution shift refers to changes in the input data's statistical properties compared to the training phase, impacting a model's accuracy when encountering new, unseen data. Model drift occurs when the predictive performance of a deployed model degrades over time due to evolving data distributions or external factors. Understanding these concepts is essential for maintaining reliable machine learning systems by implementing monitoring and retraining strategies.

Key Differences Between Distribution Shift and Model Drift

Distribution shift occurs when the statistical properties of input data change over time, affecting model performance by altering feature distributions. Model drift refers to the degradation of a model's predictive accuracy due to changes in the underlying relationship between input features and target variables. Key differences include distribution shift impacting input data directly, while model drift involves changes in model behavior or output accuracy despite stable input distributions.

Causes of Distribution Shift in Machine Learning

Distribution shift in machine learning occurs when the statistical properties of the input data change between training and deployment phases, leading to model performance degradation. Common causes include changes in data collection methods, evolving user behavior, sensor malfunctions, or environmental variations that alter the feature distribution. Understanding these causes is critical for developing robust models that can adapt to real-world dynamics and maintain reliable predictions.

Common Triggers of Model Drift

Model drift commonly occurs due to changes in data distribution, evolving user behavior, or shifts in external factors such as market trends and regulatory environments. These triggers cause the model's performance to degrade over time by making the training data less representative of new, incoming data. Regular monitoring and retraining using updated datasets help mitigate the impact of model drift.

Impact of Distribution Shift on Model Performance

Distribution shift occurs when the statistical properties of input data change between training and deployment, leading to model drift that negatively affects predictive accuracy. This shift often reduces model reliability, causing increased error rates and biased outputs as the model encounters unfamiliar data patterns. Continuous monitoring and adaptation strategies are essential to mitigate the impact of distribution shift on long-term model performance.

Detecting Model Drift in Production Environments

Detecting model drift in production environments involves monitoring changes in data patterns and model performance metrics to identify when the model no longer aligns with the current input distribution. Techniques such as statistical tests comparing feature distributions, performance degradation alerts, and adaptive retraining schedules are essential to maintaining model accuracy over time. Implementing automated drift detection tools like Population Stability Index (PSI) and tracking metrics such as prediction confidence can help promptly flag model drift and prevent performance loss.

Strategies for Handling Distribution Shift

Strategies for handling distribution shift include continuous monitoring of input data distributions and implementing robust retraining pipelines to adapt models to new data patterns. Employing domain adaptation techniques and leveraging transfer learning frameworks can improve model generalization under evolving conditions. Data augmentation and synthetic data generation further enhance model resilience by simulating diverse data scenarios, mitigating the impact of distributional changes.

Techniques to Mitigate Model Drift

Techniques to mitigate model drift include continuous monitoring of model performance using metrics like accuracy, precision, and recall to detect deviations from baseline behavior. Implementing automated retraining pipelines with up-to-date data helps maintain model relevance in changing environments. Employing adaptive learning methods, such as online learning or incremental updates, allows models to evolve with new data patterns and reduces performance degradation over time.

Monitoring and Maintenance Best Practices

Effective monitoring of distribution shift involves continuously tracking input data characteristics to detect changes that impact model performance, using tools like data validation pipelines and drift detection algorithms. Model drift monitoring requires evaluating model outputs against ground truth over time through performance metrics such as accuracy, precision, and recall to identify degradation. Best practices include implementing automated alerts for significant deviations, scheduled retraining based on detected drift, and maintaining comprehensive logs for auditing and troubleshooting.

Future Directions: Robustness Against Distribution Shift and Drift

Future research in robustness against distribution shift and model drift emphasizes adaptive algorithms capable of continuous learning from evolving data streams. Techniques such as meta-learning, domain adaptation, and reinforcement learning are increasingly explored to maintain model performance amidst changing environments. Developing explainable detection mechanisms and uncertainty quantification methods enhances proactive handling of distributional changes and drift in real-time applications.

Distribution Shift Infographic

libterm.com

libterm.com