Parametric texture synthesis generates realistic textures in two steps. It first measures a fixed set of statistics on an example image, then produces new images that share those statistics. This makes it useful for creating seamless patterns in computer graphics and design. Because the texture is described by explicit parameters, the approach offers direct control over texture characteristics. The rest of this article explains how parametric methods work and how they compare with neural style transfer.

Table of Comparison

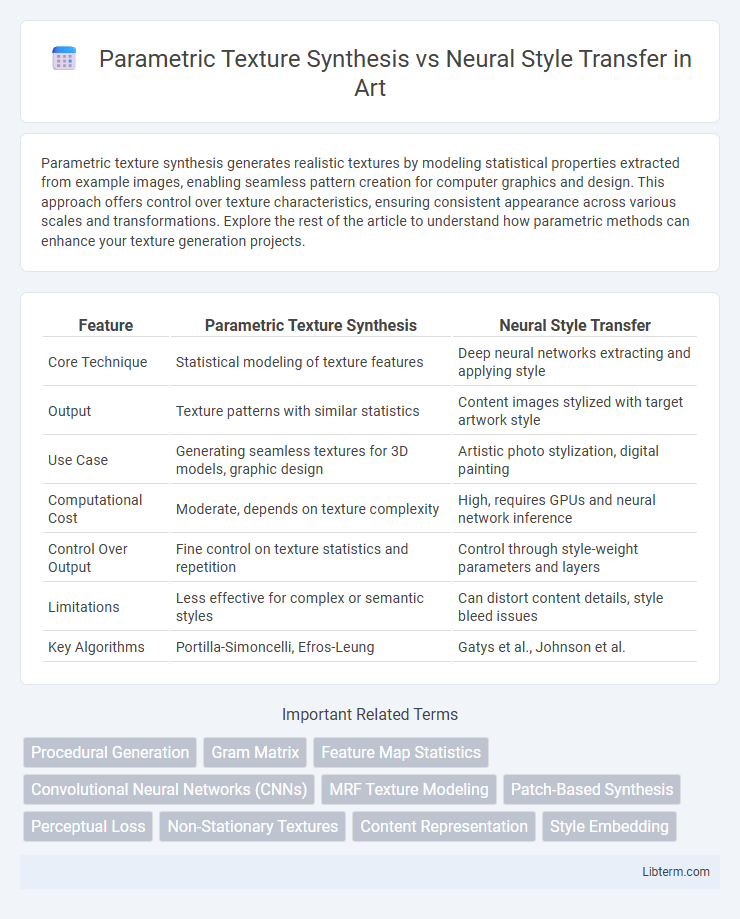

| Feature | Parametric Texture Synthesis | Neural Style Transfer |
|---|---|---|
| Core Technique | Statistical modeling of texture features | Deep convolutional networks that extract style from one image and apply it to another |
| Output | New texture images whose statistics match the exemplar | Content images rendered in the style of a target artwork |
| Use Case | Generating seamless textures for 3D models and graphic design | Artistic photo stylization, digital painting |
| Computational Cost | Moderate, depending on the model and texture complexity | High; typically needs a GPU for network inference (and, for optimization-based methods, repeated backpropagation) |
| Control Over Output | Fine control over texture statistics and repetition | Control through content/style loss weights and the choice of network layers |
| Limitations | Less effective for complex, structured, or semantic styles | Can distort content details; style can bleed into unwanted regions |
| Key Algorithms | Heeger-Bergen, Portilla-Simoncelli (Efros-Leung, often listed alongside them, is a non-parametric sampling method) | Gatys et al., Johnson et al. |

Introduction to Texture Synthesis and Style Transfer

Texture synthesis generates large textures from small samples by modeling the statistical properties of the original texture, so that the result looks visually consistent with it. Neural style transfer uses deep convolutional neural networks to combine the content of one image with the texture, or style, of another. Parametric texture synthesis recreates texture appearance from an explicitly defined statistical model. Neural style transfer instead relies on feature representations learned by a network pretrained for image classification, which allows more flexible and complex style reproduction. Both approaches generate texture, but they differ fundamentally: one uses handcrafted statistical parameters, the other uses learned features.

Understanding Parametric Texture Synthesis

Parametric texture synthesis generates new images whose statistics match those measured on a sample texture. It uses models such as Portilla-Simoncelli, which computes joint statistics of wavelet (steerable pyramid) coefficients. The texture is summarized by an explicit set of estimated parameters. Synthesis then adjusts an image, usually starting from random noise, until its statistics match, so that the result is perceptually similar to the original. Neural style transfer uses deep convolutional neural networks to merge content and style. Parametric synthesis, by contrast, focuses purely on characterizing texture: there is no separate content image whose structure must be preserved.
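The following is a minimal sketch that assumes grey-level images stored as NumPy arrays. Histogram matching is the basic operation in Heeger and Bergen's pyramid-based synthesis. That method repeatedly forces the histograms of an image and of its pyramid subbands to match those of the exemplar. The sketch below matches only the pixel histogram, the simplest (first-order) statistic. It therefore reproduces the exemplar's grey-level distribution but none of its spatial structure. The function names `match_histogram` and `synthesize_first_order` are hypothetical and do not come from any library.

```python
import numpy as np


def match_histogram(source: np.ndarray, target: np.ndarray) -> np.ndarray:
    """Remap the values of `source` so its histogram matches `target`.

    The match is exact when both arrays have the same number of pixels;
    otherwise the sorted target values are linearly resampled.
    """
    flat = source.ravel()
    # Rank of every source pixel (0 = darkest).
    ranks = np.argsort(np.argsort(flat, kind="stable"), kind="stable")
    target_sorted = np.sort(target.ravel())
    if target_sorted.size != flat.size:
        # Resample the sorted target values to the source size.
        positions = np.linspace(0, target_sorted.size - 1, flat.size)
        target_sorted = np.interp(positions, np.arange(target_sorted.size), target_sorted)
    # The k-th darkest source pixel receives the k-th darkest target value.
    return target_sorted[ranks].reshape(source.shape)


def synthesize_first_order(exemplar: np.ndarray, shape, seed: int = 0) -> np.ndarray:
    """Toy parametric synthesis: white noise forced onto the exemplar's
    grey-level histogram (first-order statistics only)."""
    rng = np.random.default_rng(seed)
    noise = rng.standard_normal(shape)
    return match_histogram(noise, exemplar)


# Usage with a stand-in exemplar (a real texture image would be loaded instead).
rng = np.random.default_rng(1)
exemplar = rng.uniform(0.0, 1.0, (32, 32)) ** 2
texture = synthesize_first_order(exemplar, (64, 64), seed=3)
```

A full parametric model would alternate this step with matching the statistics of filter responses at several scales and orientations. Portilla-Simoncelli goes further and also constrains correlations between those responses.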

Fundamentals of Neural Style Transfer

Neural style transfer relies on convolutional neural networks (CNNs) to extract the content and style representations of images. In the original formulation by Gatys et al., it blends them by iteratively optimizing the output image against a loss function that balances content preservation against style replication. It uses feature maps from a pretrained network, typically VGG19. Deeper layers capture high-level semantic content, while correlations between feature maps across several layers capture texture patterns. The result keeps the original content while adopting a complex artistic style. Classical parametric texture synthesis matches handcrafted statistical summaries of textures. Neural style transfer instead encodes style as correlations between learned feature responses, allowing more flexible and semantically rich style application.
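For reference, the objective of Gatys et al. can be written as follows; this is our transcription of the commonly cited formulation. F^l and P^l are the layer-l feature maps of the generated image x and the content image p. Each has N_l channels and M_l spatial positions. A^l is the Gram matrix of the style image a at layer l, and w_l weights each style layer. The weights α and β set the balance between content and style.

```latex
\begin{aligned}
\mathcal{L}_{\text{content}}(p, x, l) &= \tfrac{1}{2} \sum_{i,j} \bigl(F^{l}_{ij} - P^{l}_{ij}\bigr)^{2} \\
G^{l}_{ij} &= \sum_{k} F^{l}_{ik} F^{l}_{jk} \\
E_{l} &= \frac{1}{4 N_{l}^{2} M_{l}^{2}} \sum_{i,j} \bigl(G^{l}_{ij} - A^{l}_{ij}\bigr)^{2} \\
\mathcal{L}_{\text{style}}(a, x) &= \sum_{l} w_{l} E_{l} \\
\mathcal{L}_{\text{total}}(p, a, x) &= \alpha \, \mathcal{L}_{\text{content}}(p, x) + \beta \, \mathcal{L}_{\text{style}}(a, x)
\end{aligned}
```

Increasing β relative to α pushes the result toward the style image, while increasing α preserves more of the original content.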

Core Algorithms and Techniques Compared

Parametric texture synthesis relies on statistical models to generate textures that match the feature statistics of a sample image. Examples include Markov random field (MRF) models and the steerable-pyramid statistics used by Portilla-Simoncelli. Neural style transfer employs deep convolutional neural networks. It uses Gram matrices of their feature maps to capture the style of one image and transfer it to a content image. The core distinction is that parametric methods rely on explicit, handcrafted statistical representations of texture. Neural style transfer uses correlations between learned deep features instead, which supports more flexible and semantically rich stylization.
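The Gram-matrix style statistic can be sketched in a few lines of NumPy. This minimal illustration assumes feature maps shaped (channels, height, width). In a real system the features would come from several layers of a pretrained network such as VGG19, which is not loaded here. Random arrays stand in for them, and the helper names are hypothetical. The per-layer normalization follows the formulation of Gatys et al.

```python
import numpy as np


def gram_matrix(features: np.ndarray) -> np.ndarray:
    """Gram matrix of a (channels, height, width) feature map:
    G[i, j] = sum over spatial positions k of F[i, k] * F[j, k]."""
    channels = features.shape[0]
    flat = features.reshape(channels, -1)  # N_l x M_l
    return flat @ flat.T                   # N_l x N_l


def layer_style_loss(gen_features: np.ndarray, style_features: np.ndarray) -> float:
    """Per-layer style term E_l, normalized by 4 * N_l^2 * M_l^2."""
    n_l = gen_features.shape[0]     # number of channels
    m_l = gen_features[0].size      # number of spatial positions
    diff = gram_matrix(gen_features) - gram_matrix(style_features)
    return float(np.sum(diff ** 2) / (4.0 * n_l ** 2 * m_l ** 2))


def style_loss(gen_layers, style_layers, weights) -> float:
    """Weighted sum of per-layer style terms over several layers."""
    return sum(w * layer_style_loss(g, s)
               for g, s, w in zip(gen_layers, style_layers, weights))


# Usage with stand-in features (real ones would come from a pretrained CNN).
rng = np.random.default_rng(0)
style = rng.standard_normal((8, 16, 16))
generated = rng.standard_normal((8, 16, 16))
print(layer_style_loss(generated, style))  # positive: the statistics differ
print(layer_style_loss(style, style))      # 0.0: identical statistics
```

The Gram matrix sums over spatial positions, so shuffling those positions identically in every channel leaves it unchanged. Like the statistics in parametric synthesis, it describes which features occur together, not where they occur.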

Advantages of Parametric Texture Synthesis

Parametric texture synthesis offers precise control over texture properties. The texture is described by a compact statistical model estimated from a single exemplar rather than from a large training dataset. Results are reproducible given the same parameters and random seed. The method is well suited to generating seamless, detailed textures. Simple parametric models are also computationally cheaper than neural style transfer, which can introduce artifacts and is computationally intensive. As a result, fast parametric models can be used in interactive and real-time settings in computer graphics, game development, and virtual reality.
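One concrete example of a fast parametric model with seamless output is random phase noise, described by Galerne, Gousseau and Morel. It keeps the Fourier magnitude (the power spectrum) of the exemplar and replaces the phase with that of white noise. The output comes from an inverse FFT, so it is periodic and tiles without seams. The same seed always reproduces the same texture. This is one technique among many and not necessarily the one the article has in mind. It captures only second-order statistics, so it suits noise-like micro-textures such as sand or plaster rather than structured ones. The function name is hypothetical. The published method also preprocesses the exemplar to reduce artifacts caused by its non-periodic borders, a step this sketch omits.

```python
import numpy as np


def random_phase_noise(exemplar: np.ndarray, seed: int = 0) -> np.ndarray:
    """Random phase noise for a grey-level exemplar (minimal sketch).

    Keeps the exemplar's Fourier magnitude and swaps in the phase of a
    white-noise image. The output has the exemplar's size and is periodic,
    so np.tile(output, ...) produces a seamless larger texture.
    """
    mean = exemplar.mean()
    magnitude = np.abs(np.fft.fft2(exemplar - mean))
    rng = np.random.default_rng(seed)
    # The DFT phase of a real image is odd-symmetric, so the product below
    # stays Hermitian and its inverse FFT is real up to rounding error.
    noise_phase = np.angle(np.fft.fft2(rng.standard_normal(exemplar.shape)))
    synthesized = np.fft.ifft2(magnitude * np.exp(1j * noise_phase))
    # Drop the negligible imaginary residue and restore the mean grey level.
    return synthesized.real + mean


# Usage with a stand-in exemplar; tile the result to cover a larger surface.
rng = np.random.default_rng(5)
sample = rng.uniform(0.0, 1.0, (32, 48))
texture = random_phase_noise(sample, seed=1)
tiled = np.tile(texture, (3, 3))
```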

Strengths of Neural Style Transfer

Neural style transfer excels at capturing complex artistic styles. It uses deep convolutional neural networks to render the content of one image in the style of another. Its outputs can be detailed and keep the semantic layout of the source content while applying the new style. Style is described by learned features rather than a fixed set of handcrafted statistics, so it handles a wider variety of styles than parametric texture synthesis. Its ability to transfer abstract patterns and intricate brushstrokes makes it particularly effective for creative applications that call for nuanced, expressive visual transformations.

Performance and Computational Requirements

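Parametric texture synthesis typically runs faster and needs fewer computational resources, because it only has to match a fixed set of statistics. The simplest spectral models need little more than a few Fourier transforms, although richer models such as Portilla-Simoncelli still iterate. Neural style transfer demands significantly more computation. The optimization-based method of Gatys et al. runs many forward and backward passes through a deep network for each output image. Feed-forward approaches such as Johnson et al. move most of that cost into a training phase and then stylize an image with a single forward pass. For real-time texture generation, parametric methods are therefore often the more practical choice. Neural style transfer achieves complex artistic effects at the cost of more processing time or a separate training step.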

Use Cases and Creative Applications

Parametric texture synthesis is well suited to generating detailed, high-resolution textures for 3D modeling, game environments, and virtual reality, because it reproduces the statistical properties of sample textures at larger output sizes. Neural style transfer is commonly used in digital art, photo editing, and social media content creation to fuse artistic styles with original images. Feed-forward models make this fast enough for interactive use. Both techniques help creative industries automate complex visual effects and open up more personalized and innovative design workflows.

Limitations and Challenges Faced

Parametric texture synthesis struggles to capture global structure and complex patterns, because it only matches a fixed set of statistics. Highly regular textures such as brick walls or woven fabric often come out with broken or blurred structure. Neural style transfer has difficulty preserving content fidelity while transferring intricate styles and frequently produces artifacts. Its optimization-based variants are also computationally expensive. Both methods can struggle to scale to high resolutions and to generalize across diverse image domains, which limits their practical applications.
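A small experiment makes the limitation concrete. A model that matches only first-order statistics (the pixel histogram) cannot tell a striped image apart from the same pixels shuffled at random. Models such as Portilla-Simoncelli add joint statistics across scales and orientations, which recovers much more structure. Even so, any fixed set of summary statistics leaves some spatial arrangements unconstrained. The example is illustrative only, and `neighbour_correlation` is a hypothetical helper.

```python
import numpy as np


def neighbour_correlation(image: np.ndarray) -> float:
    """Correlation between each pixel and its right-hand neighbour,
    a simple measure of local spatial structure."""
    left = image[:, :-1].ravel()
    right = image[:, 1:].ravel()
    return float(np.corrcoef(left, right)[0, 1])


# A strongly structured "texture": vertical stripes, 8 pixels dark, 8 bright.
x = np.arange(64)
stripes = np.tile(((x // 8) % 2).astype(float), (64, 1))

# Shuffling the pixels preserves every first-order statistic ...
rng = np.random.default_rng(0)
shuffled = rng.permutation(stripes.ravel()).reshape(stripes.shape)
same_histogram = np.array_equal(np.sort(stripes.ravel()), np.sort(shuffled.ravel()))
print("identical histograms:", same_histogram)

# ... but destroys the spatial structure.
print("stripes neighbour correlation:", round(neighbour_correlation(stripes), 3))
print("shuffled neighbour correlation:", round(neighbour_correlation(shuffled), 3))
```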

Future Trends in Texture Synthesis and Style Transfer

Recent work in parametric texture synthesis and neural style transfer emphasizes real-time adaptability and higher fidelity in preserving structural and semantic details. Generative adversarial networks (GANs) and transformer architectures are being used to increase texture diversity and to represent style at multiple scales. A likely direction is hybrid models that combine the explicit control of parametric methods with deep learning, improving customization and efficiency in gaming, virtual reality, and digital art.

Parametric Texture Synthesis Infographic

libterm.com
