Standardization improves consistency and quality across products, services, and processes, which can raise efficiency and customer satisfaction. It simplifies communication and helps organizations comply with industry regulations and international standards. The rest of this article explains how standardization can benefit your business and improve overall performance.

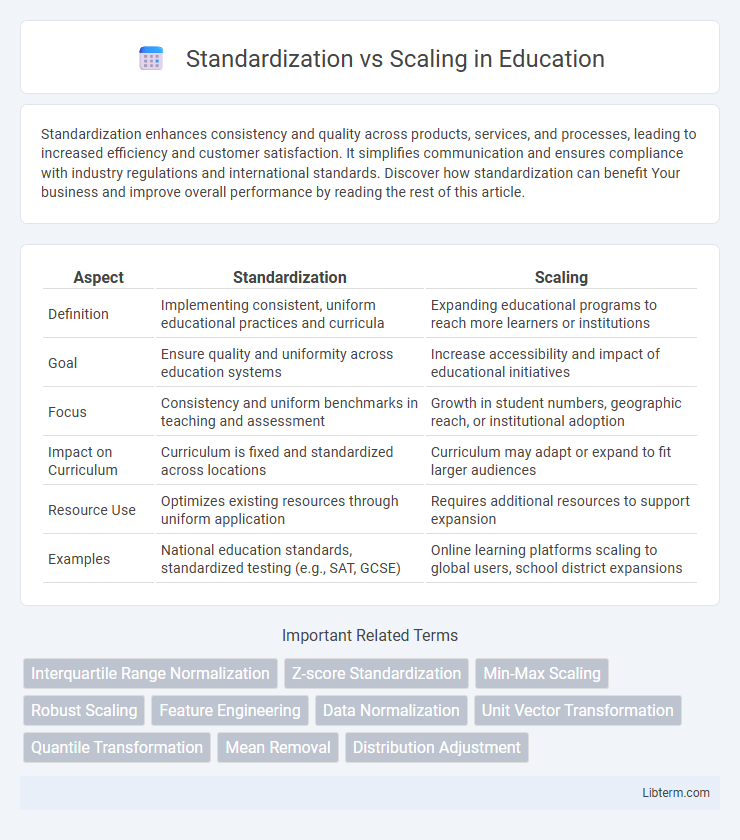

Table of Comparison

| Aspect | Standardization | Scaling |
|---|---|---|
| Definition | Implementing consistent, uniform educational practices and curricula | Expanding educational programs to reach more learners or institutions |
| Goal | Ensure quality and uniformity across education systems | Increase accessibility and impact of educational initiatives |
| Focus | Consistency and uniform benchmarks in teaching and assessment | Growth in student numbers, geographic reach, or institutional adoption |
| Impact on Curriculum | Curriculum is fixed and standardized across locations | Curriculum may adapt or expand to fit larger audiences |
| Resource Use | Optimizes existing resources through uniform application | Requires additional resources to support expansion |
| Examples | National education standards, standardized testing (e.g., SAT, GCSE) | Online learning platforms scaling to global users, school district expansions |

Introduction to Standardization and Scaling

Standardization rescales each feature to a mean of zero and a standard deviation of one, which helps algorithms such as SVM and K-means by keeping any single feature from dominating. Scaling changes the range of the data, most often through min-max scaling, which maps each feature onto a fixed interval and can speed up convergence in models such as neural networks. The right choice depends on how sensitive the algorithm is to the data's distribution and to feature magnitude.

Defining Standardization

Standardization transforms data to have a mean of zero and a standard deviation of one, putting features on a comparable scale without changing the shape of their distributions. This is important for algorithms such as support vector machines and principal component analysis, where comparable features improve model performance. Unlike min-max scaling, which maps data onto a specified range, standardization does not bound the values: it changes each feature's mean and variance but preserves the shape of its distribution and the ordering of its values.
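
As a minimal sketch in plain Python (standard library only), the function below applies the z-score formula (x - mean) / standard deviation to a single feature. The name `standardize` and the sample heights are illustrative assumptions. It uses the population standard deviation (`statistics.pstdev`), the same convention scikit-learn's `StandardScaler` uses, and it maps a constant feature to zeros instead of dividing by zero, which is a choice made for this sketch.

```python
from statistics import mean, pstdev

def standardize(values):
    """Return z-scores (x - mean) / std using the population standard deviation."""
    mu = mean(values)
    sigma = pstdev(values)
    if sigma == 0:
        # Constant feature: no spread to divide by, so map every value to 0.
        return [0.0 for _ in values]
    return [(x - mu) / sigma for x in values]

heights_cm = [150.0, 160.0, 170.0, 180.0, 190.0]  # illustrative data
z = standardize(heights_cm)
print([round(v, 3) for v in z])  # [-1.414, -0.707, 0.0, 0.707, 1.414]
print(mean(z), pstdev(z))        # mean ~0, standard deviation ~1
```

Because the transform is linear, the standardized values keep their original order and even spacing; only the location and the unit of measurement change.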

Understanding Scaling

Scaling transforms data by adjusting its range without altering the shape of its distribution, often using min-max normalization to fit values within a fixed interval such as [0,1]. This preserves the relative distances between data points within each feature, making it useful for algorithms sensitive to magnitude differences, such as K-Nearest Neighbors or Support Vector Machines. Effective scaling improves model performance by keeping any single feature from dominating during training, particularly with gradient-based optimization techniques.
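
A minimal min-max sketch, again in plain Python. `min_max_scale` and its `new_min`/`new_max` parameters are hypothetical names chosen for illustration, and mapping a constant feature to the lower bound is an assumption made here to avoid dividing by zero.

```python
def min_max_scale(values, new_min=0.0, new_max=1.0):
    """Linearly map values from [min(values), max(values)] onto [new_min, new_max]."""
    lo, hi = min(values), max(values)
    if hi == lo:
        # All values identical: place them at the lower bound of the target range.
        return [new_min for _ in values]
    span = hi - lo
    return [new_min + (x - lo) / span * (new_max - new_min) for x in values]

prices = [10.0, 20.0, 40.0, 50.0]  # illustrative data
scaled = min_max_scale(prices)
print(scaled)  # [0.0, 0.25, 0.75, 1.0]

# Ratio of gaps between consecutive points, before and after scaling.
raw_ratio = (prices[2] - prices[1]) / (prices[1] - prices[0])
scaled_ratio = (scaled[2] - scaled[1]) / (scaled[1] - scaled[0])
print(raw_ratio, scaled_ratio)  # 2.0 2.0
```

The gap between 20 and 40 is twice the gap between 10 and 20 both before and after scaling, which is what preserving relative distances means for a single feature.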

Key Differences Between Standardization and Scaling

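Standardization transforms data to have a mean of zero and a standard deviation of one. It does not make the data normally distributed, but it is the usual choice for methods that expect centered features with similar variance. Scaling maps data onto a specific range, often 0 to 1; like standardization, it preserves the shape of the original distribution, and it can improve convergence in gradient-based methods. The key differences are whether the output is bounded (min-max scaling produces a fixed range, standardization does not), how outliers affect the result (a single extreme value sets the min-max range and compresses every other value, while standardized values stay unbounded, although outliers still shift the mean and standard deviation), and which machine learning models each method suits.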
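
To make the outlier point concrete, the sketch below applies both transforms to a small, made-up list of incomes (in thousands) that contains one extreme value. The helper names `min_max` and `z_score` are illustrative, and the data is invented purely for demonstration.

```python
from statistics import mean, pstdev

def min_max(values):
    """Map values linearly onto [0, 1] using the observed minimum and maximum."""
    lo, hi = min(values), max(values)
    return [(x - lo) / (hi - lo) for x in values]

def z_score(values):
    """Center on the mean and divide by the population standard deviation."""
    mu, sigma = mean(values), pstdev(values)
    return [(x - mu) / sigma for x in values]

# Illustrative incomes in thousands; the last value is a deliberate outlier.
incomes = [30.0, 32.0, 35.0, 38.0, 40.0, 400.0]
mm = min_max(incomes)
zs = z_score(incomes)
for x, a, b in zip(incomes, mm, zs):
    print(f"{x:6.0f}  min-max={a:.3f}  z={b:+.3f}")
```

The five typical incomes all fall below 0.03 after min-max scaling because the outlier alone defines the top of the range. The z-scores are not confined to a fixed interval, but the outlier still inflates the mean and standard deviation, so standardization is not immune to outliers either.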

Importance in Data Preprocessing

Standardization and scaling are key data preprocessing techniques: by putting numerical features on a similar scale, they keep models from favoring variables simply because those variables have larger magnitudes. Standardization gives each feature a mean of zero and a standard deviation of one, which suits models that expect centered inputs with similar variance (it does not make the data normally distributed), while scaling typically maps features onto a fixed range such as [0,1], which benefits distance-based models such as k-nearest neighbors. Applied properly, these methods help gradient descent converge and make model coefficients easier to compare across features, leading to more robust and reliable machine learning results.
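
The bias toward large-magnitude features shows up directly in a nearest-neighbor search. The sketch below uses invented (age, income) records and the hypothetical helpers `standardize_columns` and `nearest`; it finds the record closest to the first one by Euclidean distance (`math.dist`, Python 3.8+), once on the raw data and once after standardizing each column.

```python
import math
from statistics import mean, pstdev

# Invented records: (age in years, annual income in dollars).
records = [
    (25.0, 50_000.0),
    (60.0, 51_000.0),
    (26.0, 58_000.0),
    (40.0, 20_000.0),
    (35.0, 90_000.0),
    (45.0, 70_000.0),
]

def standardize_columns(rows):
    """Z-score each column independently and return the transformed rows."""
    stats = [(mean(col), pstdev(col)) for col in zip(*rows)]
    return [[(x - m) / s for x, (m, s) in zip(row, stats)] for row in rows]

def nearest(rows, query_index):
    """Index of the row closest (Euclidean) to rows[query_index], excluding itself."""
    query = rows[query_index]
    others = [i for i in range(len(rows)) if i != query_index]
    return min(others, key=lambda i: math.dist(query, rows[i]))

raw_neighbor = nearest(records, 0)
scaled_neighbor = nearest(standardize_columns(records), 0)
print("raw:", records[raw_neighbor])              # raw: (60.0, 51000.0)
print("standardized:", records[scaled_neighbor])  # standardized: (26.0, 58000.0)
```

On the raw data the income column dominates, so a 60-year-old with a similar income counts as the nearest neighbor of a 25-year-old. After standardization both columns contribute, and the 26-year-old becomes the nearest neighbor.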

When to Use Standardization

Standardization is essential when features have different units or scales, enabling algorithms such as Support Vector Machines or Principal Component Analysis to perform effectively. It centers the data by removing the mean and scales it to unit variance, so that no feature dominates the model simply because of its units. Standardization is often preferred when features are roughly Gaussian or when the model relies on distance metrics.
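
When features come in different units, the mean and standard deviation are usually estimated on the training data and then reused on new data, which keeps the test set from leaking into preprocessing. `ColumnStandardizer` below is a hypothetical, minimal class that mimics this fit/transform pattern (the same pattern scikit-learn's `StandardScaler` follows). The temperature and pressure readings are made up, and falling back to a divisor of 1 for zero-variance columns is an assumption of this sketch.

```python
from statistics import mean, pstdev

class ColumnStandardizer:
    """Hypothetical minimal standardizer: fit() learns per-column stats, transform() applies them."""

    def fit(self, rows):
        columns = list(zip(*rows))
        self.means_ = [mean(col) for col in columns]
        # pstdev is 0.0 for a constant column; fall back to 1.0 to avoid dividing by zero.
        self.stds_ = [pstdev(col) or 1.0 for col in columns]
        return self

    def transform(self, rows):
        return [
            [(x - m) / s for x, m, s in zip(row, self.means_, self.stds_)]
            for row in rows
        ]

# Two features in different units: temperature (deg C) and pressure (Pa). Illustrative data.
train = [[20.0, 101_000.0], [25.0, 101_500.0], [30.0, 102_000.0]]
test = [[35.0, 100_500.0]]

scaler = ColumnStandardizer().fit(train)  # statistics come from the training rows only
print(scaler.transform(train))
print(scaler.transform(test))
```

The test row lands at roughly +2.45 and -2.45 standard deviations: standardized values are measured relative to the training statistics and are not restricted to any fixed range.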

When to Use Scaling

Use scaling when your model relies on distance-based algorithms such as k-nearest neighbors and support vector machines, or when you train neural networks with gradient descent. Scaling keeps features with different units or ranges from disproportionately influencing the model by mapping them onto a consistent scale, typically between 0 and 1 (z-score standardization is the common alternative). It matters most when feature magnitudes differ widely, where it helps prevent convergence problems and improves model performance and training stability.
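
The convergence claim can be checked on a toy least-squares problem. The sketch below uses plain batch gradient descent with no intercept; all names and data are illustrative. One feature is on a unit scale and the other on a scale of hundreds. Because the two columns are orthogonal, the curvature of the loss along each feature is simply the mean of its squared values, 1 and 10,000 on the raw data, so the learning rate must stay below 2 / 10,000 to avoid diverging, and progress on the first feature is very slow. After standardization both curvatures equal 1, so a much larger step is stable.

```python
from statistics import mean, pstdev

def standardize_columns(rows):
    """Z-score each column with its own mean and population standard deviation."""
    stats = [(mean(col), pstdev(col)) for col in zip(*rows)]
    return [[(x - m) / s for x, (m, s) in zip(row, stats)] for row in rows]

def residuals(w, rows, targets):
    """Prediction errors (w . x - y) for every row."""
    return [sum(wj * xj for wj, xj in zip(w, x)) - y for x, y in zip(rows, targets)]

def gradient_descent(rows, targets, lr, steps):
    """Batch gradient descent on (1 / (2n)) * sum((w . x - y) ** 2), no intercept.

    Returns the final weights and the final loss.
    """
    n, d = len(rows), len(rows[0])
    w = [0.0] * d
    for _ in range(steps):
        r = residuals(w, rows, targets)
        grad = [sum(ri * x[j] for ri, x in zip(r, rows)) / n for j in range(d)]
        w = [wj - lr * gj for wj, gj in zip(w, grad)]
    r = residuals(w, rows, targets)
    return w, sum(ri * ri for ri in r) / (2 * n)

# Illustrative data: feature 1 is on a unit scale, feature 2 on a scale of hundreds.
raw = [[1.0, 100.0], [-1.0, 100.0], [1.0, -100.0], [-1.0, -100.0]]
targets = [3.0 * x1 + 0.5 * x2 for x1, x2 in raw]

# Raw features: curvature along feature 2 is 10,000, so lr must stay below 2e-4.
w_raw, loss_raw = gradient_descent(raw, targets, lr=1e-4, steps=1000)

# Standardized features: both curvatures are 1, so lr = 0.5 is comfortably stable.
w_std, loss_std = gradient_descent(standardize_columns(raw), targets, lr=0.5, steps=1000)

print("raw:", w_raw, loss_raw)
print("standardized:", w_std, loss_std)
```

After 1,000 steps the raw run has moved the first weight only from 0 to just under 0.3 (its target is 3), while the standardized run has converged. Its weights, 3 and 50, are expressed in standardized units, since 50 is 0.5 times the second feature's standard deviation of 100.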

Impact on Machine Learning Models

Standardization transforms features to have zero mean and unit variance, which can improve convergence and stability by keeping every input on a comparable footing during training. Scaling maps features onto a specific range, often [0,1], so that features with larger magnitudes do not dominate, which helps distance-based algorithms such as SVM and k-NN. The choice between the two can noticeably affect a model's accuracy, training speed, and robustness, especially with gradient-based optimizers and algorithms that are sensitive to feature distributions.

Common Pitfalls and Misconceptions

A common standardization pitfall is assuming that every feature needs the same treatment, which can distort a model when feature distributions differ significantly. With scaling, a frequent mistake is applying min-max scaling without checking for outliers: one extreme value sets the range and squeezes the remaining values into a narrow band. Another misconception is treating standardization and normalization as interchangeable, even though they have distinct effects on model performance and data interpretation.

Best Practices for Implementation

Standardization ensures consistent quality and uniformity across processes by defining clear protocols and metrics, which makes scaling easier. Scaling requires adapting resources and infrastructure to handle increased demand while maintaining operational efficiency and service quality. Best practices for implementation include using automation tools, continuously monitoring performance metrics, and keeping frameworks flexible enough to allow iterative improvements during both the standardization and scaling phases.

Standardization Infographic

libterm.com