Specificity is a diagnostic test's ability to correctly identify people who do not have a condition, while sensitivity is its ability to correctly identify people who do. This article compares the two measures, shows how each is calculated, and explains how they guide the choice between screening and confirmatory tests.

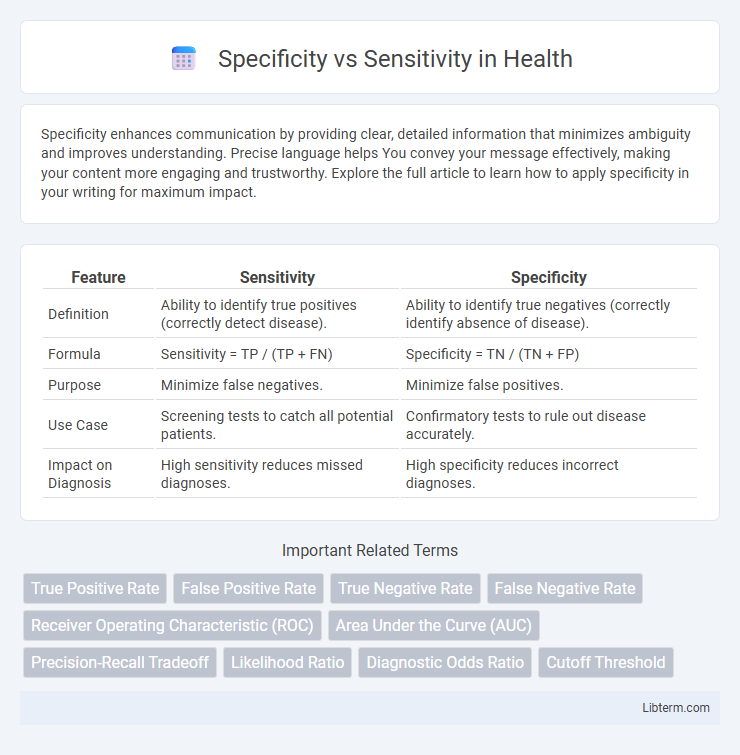

Table of Comparison

| Feature | Sensitivity | Specificity |
|---|---|---|
| Definition | Ability to identify true positives (correctly detect disease). | Ability to identify true negatives (correctly identify absence of disease). |
| Formula | Sensitivity = TP / (TP + FN) | Specificity = TN / (TN + FP) |
| Purpose | Minimize false negatives. | Minimize false positives. |
| Use Case | Screening tests, to catch as many potential patients as possible. | Confirmatory tests, to verify that a positive result reflects real disease. |
| Impact on Diagnosis | High sensitivity reduces missed diagnoses. | High specificity reduces incorrect diagnoses. |

Understanding Specificity and Sensitivity

Specificity measures a test's ability to correctly identify true negatives, indicating how well it excludes individuals without the condition. Sensitivity reflects the test's ability to detect true positives, highlighting its effectiveness in identifying individuals with the condition. Accurate interpretation of both specificity and sensitivity is crucial for evaluating diagnostic test performance and minimizing false results.

Definitions: Sensitivity vs Specificity

Sensitivity measures a diagnostic test's ability to correctly identify true positive cases, indicating how well it detects individuals with the condition. Specificity evaluates the test's capacity to correctly identify true negative cases, reflecting accuracy in ruling out individuals without the condition. High sensitivity reduces false negatives, while high specificity decreases false positives, both crucial for effective diagnostic accuracy.

Importance in Diagnostic Testing

Specificity measures a diagnostic test's ability to correctly identify true negatives, reducing false positives, while sensitivity assesses its capacity to detect true positives, minimizing false negatives. High sensitivity is crucial for screening diseases to ensure cases are not missed, whereas high specificity is vital for confirming diagnoses to avoid unnecessary treatments. Balancing specificity and sensitivity enhances diagnostic accuracy, improving patient outcomes and guiding effective clinical decisions.

The Role in Disease Detection

Sensitivity measures a diagnostic test's ability to correctly identify patients with a disease, maximizing true positive rates to avoid missed cases. Specificity evaluates the test's capacity to correctly identify disease-free individuals, reducing false positives and unnecessary treatments. Balancing sensitivity and specificity is crucial in disease detection to ensure accurate diagnosis and effective patient management.

Calculating Sensitivity and Specificity

Sensitivity measures the proportion of true positives correctly identified by a test, calculated as true positives divided by the sum of true positives and false negatives. Specificity quantifies the proportion of true negatives accurately detected, computed by dividing true negatives by the sum of true negatives and false positives. Accurate calculation of these metrics is crucial for evaluating diagnostic tests, with sensitivity emphasizing detection of actual cases and specificity focusing on exclusion of non-cases.
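
The two formulas above can be sketched in a few lines of Python. This is a minimal illustration, not a validated clinical tool: the function names and the counts (90 true positives, 10 false negatives, 160 true negatives, 40 false positives) are hypothetical and chosen only to make the arithmetic easy to follow.

```python
def sensitivity(tp: int, fn: int) -> float:
    """True positive rate: TP / (TP + FN)."""
    if tp + fn == 0:
        raise ValueError("no condition-positive cases: sensitivity is undefined")
    return tp / (tp + fn)


def specificity(tn: int, fp: int) -> float:
    """True negative rate: TN / (TN + FP)."""
    if tn + fp == 0:
        raise ValueError("no condition-negative cases: specificity is undefined")
    return tn / (tn + fp)


# Hypothetical counts, invented for illustration only.
tp, fn, tn, fp = 90, 10, 160, 40

print(f"sensitivity = {sensitivity(tp, fn):.2f}")  # 90 / (90 + 10) = 0.90
print(f"specificity = {specificity(tn, fp):.2f}")  # 160 / (160 + 40) = 0.80
```

In this made-up example the test catches 90% of people with the condition but wrongly flags 20% of people without it.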

Clinical Implications of Sensitivity

High sensitivity means a test identifies most patients who have a disease, which minimizes false negatives and supports early detection in conditions such as cancer or infectious diseases. By capturing nearly all true cases, a sensitive test supports timely intervention and reduces the risk of untreated progression. For this reason, highly sensitive tests are preferred in screening protocols, where a missed diagnosis could have severe consequences.

Clinical Implications of Specificity

High specificity in diagnostic tests minimizes false-positive results, which helps avoid unnecessary treatment and patient anxiety. When a diagnosis leads to risky or costly interventions, a highly specific test helps ensure that only true cases proceed to further evaluation or therapy. Because a highly specific test rarely flags people without the disease, a positive result is strong evidence that the disease is present, which supports clinical decision-making and reduces the burden of overdiagnosis.

Trade-offs: Sensitivity versus Specificity

Balancing sensitivity and specificity involves trade-offs where increasing sensitivity reduces false negatives but may raise false positives, while enhancing specificity decreases false positives but can increase false negatives. In medical diagnostics, high sensitivity is crucial for screening serious conditions to avoid missed cases, whereas high specificity is vital for confirmatory tests to minimize unnecessary treatments. Optimal threshold settings depend on disease prevalence, test purpose, and the clinical consequences of false positive and false negative results.
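
The trade-off can be made concrete with a small sketch. It assumes a test that produces a continuous score and calls a result positive when the score reaches a cutoff; the scores, disease labels, and the helper name `rates_at_threshold` are all hypothetical.

```python
def rates_at_threshold(scores, has_disease, threshold):
    """Return (sensitivity, specificity) when score >= threshold counts as positive."""
    tp = fn = tn = fp = 0
    for score, diseased in zip(scores, has_disease):
        predicted_positive = score >= threshold
        if diseased and predicted_positive:
            tp += 1
        elif diseased:
            fn += 1
        elif predicted_positive:
            fp += 1
        else:
            tn += 1
    return tp / (tp + fn), tn / (tn + fp)


# Hypothetical test scores and true disease status, invented for illustration.
scores = [0.1, 0.2, 0.3, 0.4, 0.5, 0.6, 0.7, 0.8, 0.9, 0.95]
has_disease = [False, False, False, True, False, True, False, True, True, True]

for threshold in (0.35, 0.55, 0.75):
    sens, spec = rates_at_threshold(scores, has_disease, threshold)
    print(f"threshold {threshold:.2f}: sensitivity {sens:.1f}, specificity {spec:.1f}")
# threshold 0.35: sensitivity 1.0, specificity 0.6
# threshold 0.55: sensitivity 0.8, specificity 0.8
# threshold 0.75: sensitivity 0.6, specificity 1.0
```

Lowering the cutoff catches every diseased case at the cost of more false positives. Raising it removes the false positives but misses cases.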

Real-World Examples and Case Studies

Specificity measures a test's ability to correctly identify true negatives, while sensitivity quantifies how well it detects true positives. In HIV screening, for instance, highly sensitive tests minimize missed infections, whereas highly specific tests reduce false positives. A tuberculosis diagnostic test in low-prevalence areas requires high specificity to avoid unnecessary treatments, whereas cancer screenings prioritize sensitivity to ensure early detection. COVID-19 testing illustrates the trade-off: PCR tests offer high sensitivity, while rapid antigen tests are generally less sensitive but remain highly specific.
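
Why low prevalence calls for high specificity can be seen by computing the positive predictive value (PPV), the probability that a positive result is a true positive. PPV is not defined in the text above; it is introduced here only to illustrate the point, using Bayes' rule. The prevalence, sensitivity, and specificity figures are hypothetical.

```python
def positive_predictive_value(sens: float, spec: float, prevalence: float) -> float:
    """P(disease | positive test), from Bayes' rule."""
    true_positive_rate = sens * prevalence               # diseased and flagged
    false_positive_rate = (1 - spec) * (1 - prevalence)  # healthy but flagged
    return true_positive_rate / (true_positive_rate + false_positive_rate)


# Hypothetical setting: 1% of the tested population has the disease.
prevalence = 0.01

for spec in (0.95, 0.999):
    ppv = positive_predictive_value(sens=0.95, spec=spec, prevalence=prevalence)
    print(f"specificity {spec}: PPV = {ppv:.2f}")
# specificity 0.95: PPV = 0.16
# specificity 0.999: PPV = 0.91
```

Under these assumed numbers, a test with 95% specificity yields mostly false alarms among its positives, while raising specificity to 99.9% makes most positives genuine.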

Optimizing Test Performance: Balancing Both

Optimizing test performance requires balancing specificity and sensitivity to minimize false positives and false negatives respectively, ensuring accurate detection and diagnosis. High sensitivity improves the identification of true positives, essential in critical conditions, while high specificity reduces false alarms, preventing unnecessary interventions. Achieving an optimal trade-off involves adjusting thresholds and leveraging receiver operating characteristic (ROC) curves to enhance diagnostic precision tailored to clinical or screening needs.
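
As a rough sketch of threshold selection with an ROC curve: an ROC curve plots sensitivity against 1 - specificity across all candidate cutoffs. The example below lists those points and picks the cutoff that maximizes Youden's J statistic (sensitivity + specificity - 1). Youden's J is only one possible criterion, chosen here for illustration; it weights both error types equally, which may not suit a given clinical setting. The data and function names are hypothetical.

```python
def roc_points(scores, labels):
    """Return (threshold, sensitivity, specificity) for each distinct score used as a cutoff."""
    positives = sum(labels)
    negatives = len(labels) - positives
    points = []
    for threshold in sorted(set(scores)):
        # A score at or above the cutoff counts as a positive result.
        tp = sum(1 for s, y in zip(scores, labels) if y and s >= threshold)
        tn = sum(1 for s, y in zip(scores, labels) if not y and s < threshold)
        points.append((threshold, tp / positives, tn / negatives))
    return points


def best_by_youden(points):
    """Pick the point with the largest J = sensitivity + specificity - 1."""
    return max(points, key=lambda p: p[1] + p[2] - 1)


# Hypothetical scores and true disease status, invented for illustration.
scores = [0.1, 0.2, 0.3, 0.4, 0.5, 0.6, 0.7, 0.8, 0.9, 0.95]
labels = [False, False, False, True, False, True, True, True, True, True]

threshold, sens, spec = best_by_youden(roc_points(scores, labels))
print(f"best cutoff {threshold}: sensitivity {sens:.2f}, specificity {spec:.2f}")
# best cutoff 0.6: sensitivity 0.83, specificity 1.00
```

A screening program might instead accept a lower cutoff than the one chosen here, trading some specificity for fewer missed cases.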

libterm.com
