Replication ensures your data remains consistent and highly available across multiple systems, minimizing downtime and data loss risks. This process is critical for disaster recovery and maintaining seamless business operations in dynamic IT environments. Explore the rest of the article to learn how replication strategies can safeguard your digital assets effectively.

Table of Comparison

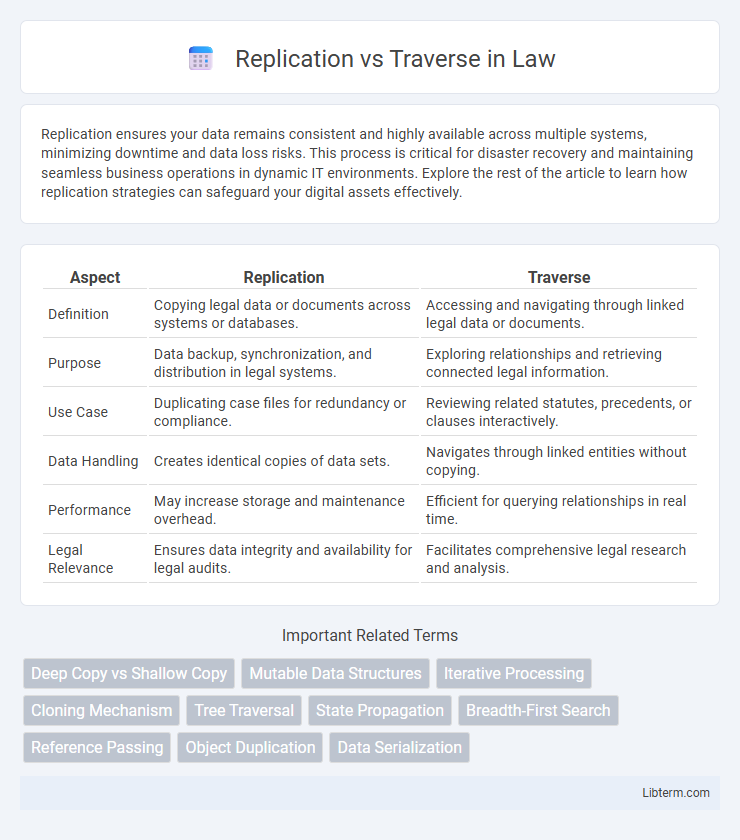

| Aspect | Replication | Traverse |

|---|---|---|

| Definition | Copying legal data or documents across systems or databases. | Accessing and navigating through linked legal data or documents. |

| Purpose | Data backup, synchronization, and distribution in legal systems. | Exploring relationships and retrieving connected legal information. |

| Use Case | Duplicating case files for redundancy or compliance. | Reviewing related statutes, precedents, or clauses interactively. |

| Data Handling | Creates identical copies of data sets. | Navigates through linked entities without copying. |

| Performance | May increase storage and maintenance overhead. | Efficient for querying relationships in real time. |

| Legal Relevance | Ensures data integrity and availability for legal audits. | Facilitates comprehensive legal research and analysis. |

Introduction to Replication and Traverse

Replication involves creating exact copies of data across multiple nodes to ensure consistency, fault tolerance, and high availability in distributed systems. Traverse refers to systematically navigating through data structures or networks to access or analyze stored information efficiently. Understanding replication methods and traversal algorithms is essential for optimizing data retrieval and maintaining system resilience in large-scale computing environments.

Defining Replication in Computing

Replication in computing refers to the process of copying and maintaining database objects, like data or files, across multiple servers or locations to enhance data availability, fault tolerance, and load balancing. It enables consistent and synchronized data updates, ensuring that changes made in one node are propagated to others to prevent data loss or discrepancies. Common replication methods include master-slave, master-master, and multi-master architectures, each tailored to specific performance and consistency requirements.

Understanding Traverse in Data Structures

Traverse in data structures refers to the systematic process of visiting each element within a structure, such as arrays, linked lists, trees, or graphs, to access or process data. Unlike replication, which involves copying data to create duplicates, traversal focuses on sequentially accessing elements to perform operations like searching, updating, or printing. Efficient traversal algorithms, including depth-first and breadth-first strategies for trees and graphs, are fundamental for optimizing data manipulation and retrieval tasks.

Key Differences Between Replication and Traverse

Replication involves copying data from one source to another to ensure consistency and redundancy, while traverse refers to the process of systematically accessing elements within a data structure such as trees or graphs. Replication emphasizes data duplication for backup and synchronization across systems, whereas traverse prioritizes data retrieval and navigation within a single dataset. Key differences include replication's focus on data availability and fault tolerance versus traverse's role in data processing and algorithm implementation.

Common Use Cases for Replication

Replication is commonly used in database systems for backup, disaster recovery, and load balancing to ensure data availability and fault tolerance. It enables real-time data synchronization across multiple servers, supporting read scalability and high availability in distributed applications. Traversal, on the other hand, is primarily used in graph databases and data structures to explore relationships and navigate through connected nodes efficiently.

Typical Applications for Traverse

Traverse is commonly used in graph databases and social network analysis, where exploring relationships between nodes is essential for recommendations, fraud detection, and network optimizations. It excels in scenarios involving pathfinding, such as routing algorithms and hierarchy traversal in organizational charts or supply chains. Replication, in contrast, primarily supports data availability and consistency across distributed systems but is not designed for path exploration or complex relationship queries.

Performance Impacts: Replication vs Traverse

Replication creates multiple data copies, increasing storage requirements and write latency but significantly enhancing read performance by reducing data access time. Traverse operations involve searching through data structures or networks, which can lead to higher CPU and memory usage, especially in complex queries or large datasets, impacting overall system responsiveness. Balancing replication's overhead with traverse efficiency is crucial for optimizing database and distributed system performance.

Challenges and Limitations of Each Approach

Replication faces challenges such as increased storage costs and potential consistency issues due to data redundancy across multiple nodes. Traverse operations encounter limitations in latency and scalability, especially when navigating deep or complex graph structures with numerous connected entities. Both approaches struggle with balancing performance and resource consumption, impacting their suitability for different data architectures.

Best Practices for Choosing Replication or Traverse

Choosing between replication and traverse depends on data consistency requirements and latency tolerance. Replication ensures high availability and quicker read access by duplicating data across nodes, making it ideal for read-heavy workloads with stringent consistency. Traverse suits use cases needing real-time data traversal without duplication, minimizing storage costs but potentially increasing read latency and complexity.

Conclusion: Selecting the Right Method for Your Needs

Replication excels in scenarios requiring data consistency and high availability across distributed systems, making it ideal for disaster recovery and load balancing. Traverse is better suited for exploring complex data relationships and executing graph-based queries efficiently, especially in recommendation engines and network analysis. Choosing the right method depends on your system's goals: replication for synchronizing data copies and traverse for navigating interconnected data structures.

Replication Infographic

libterm.com

libterm.com