Orthonormal describes a set of vectors that is both orthogonal and normalized: every vector has length one and is perpendicular to every other vector in the set. The concept is fundamental in linear algebra, computer graphics, and signal processing, where it simplifies calculations and helps maintain numerical stability. The sections below cover orthonormal sets, bases, and matrices, the Gram-Schmidt process, and common applications and pitfalls.

Table of Comparison

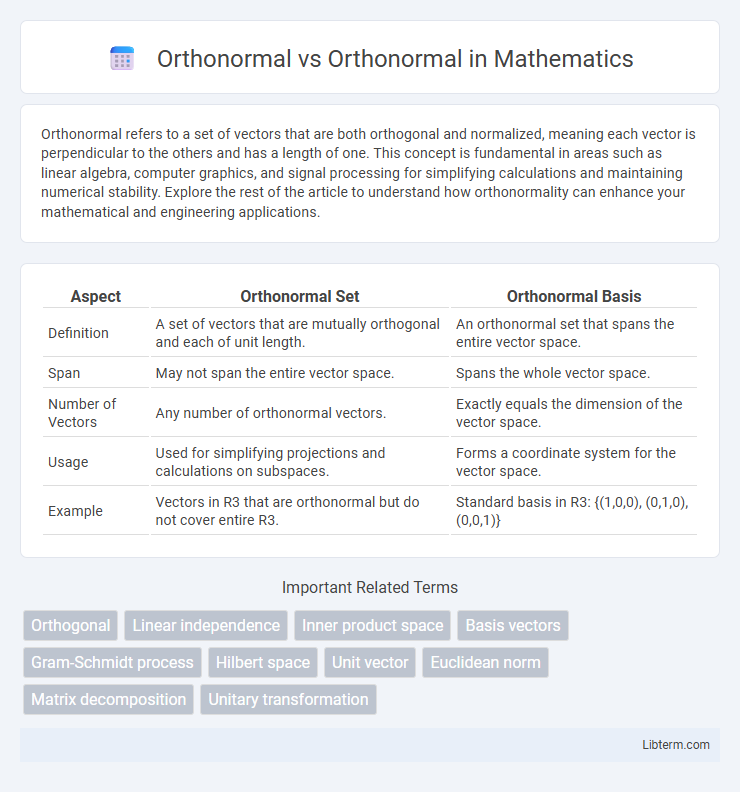

| Aspect | Orthonormal Set | Orthonormal Basis |
|---|---|---|
| Definition | A set of vectors that are mutually orthogonal and each of unit length. | An orthonormal set that spans the entire vector space. |
| Span | May span only a subspace. | Spans the whole vector space. |
| Number of Vectors | At most the dimension of the vector space (in finite dimensions). | Exactly the dimension of the vector space (in finite dimensions). |
| Usage | Simplifies projections and calculations on subspaces. | Forms a coordinate system for the vector space. |
| Example | {(1,0,0), (0,1,0)} in R3: orthonormal, but spans only the xy-plane. | Standard basis in R3: {(1,0,0), (0,1,0), (0,0,1)} |

Defining Orthonormal: A Mathematical Foundation

Vectors in an inner product space are orthonormal when they satisfy two properties: each has unit length (norm equal to one), and any two distinct vectors are perpendicular, meaning their inner product is zero. An orthonormal set is automatically linearly independent, and it forms an orthonormal basis when it also spans the space, which simplifies computations such as projections and decompositions. These properties underpin applications in signal processing, quantum mechanics, and computer graphics, where they provide a stable and efficient framework for vector representation.
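In symbols, the two conditions combine into one statement. This is a sketch assuming vectors \(v_1, \dots, v_k\) and an inner product written \(\langle \cdot, \cdot \rangle\); both pieces of notation are introduced here for illustration:

\[
\langle v_i, v_j \rangle = \delta_{ij} =
\begin{cases}
1 & \text{if } i = j, \\
0 & \text{if } i \neq j.
\end{cases}
\]

The case \(i = j\) is the unit-length condition, and the case \(i \neq j\) is the orthogonality condition.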

The Importance of Orthonormality in Linear Algebra

Orthonormality requires vectors to be both mutually orthogonal and of unit length, which simplifies many operations in linear algebra. Projection coefficients reduce to inner products, a square matrix with orthonormal columns has its transpose as its inverse, and transformations built from such matrices preserve lengths, so they do not amplify rounding errors. For these reasons, projections, changes of basis, and eigenvalue algorithms are commonly built on orthonormal bases, which improves the accuracy and efficiency of algorithms in signal processing, computer graphics, and machine learning.
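As a worked illustration of the simplification for projections, suppose a subspace \(U\) has a basis stored as the columns of a matrix \(A\) (the symbols \(U\), \(A\), and \(u_i\) are introduced here and are not part of the text above). For a general basis, the orthogonal projection of \(v\) onto \(U\) is

\[
\operatorname{proj}_U(v) = A \,(A^T A)^{-1} A^T v .
\]

If the columns \(u_1, \dots, u_k\) of \(A\) are orthonormal, then \(A^T A = I\) and the inverse disappears:

\[
\operatorname{proj}_U(v) = A A^T v = \sum_{i=1}^{k} \langle v, u_i \rangle \, u_i .
\]

No linear system has to be solved; each coefficient is a single inner product.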

Orthonormal Vectors: Properties and Examples

A set of vectors is orthonormal when its members are both orthogonal and normalized: each vector has length one and is perpendicular to every other vector in the set. In terms of the dot product, any two different vectors have dot product zero, and each vector's dot product with itself is one. These properties simplify calculations in linear algebra and in applications such as signal processing and machine learning. The standard basis vectors of Euclidean space are the usual example: (1,0,0), (0,1,0), and (0,0,1) form an orthonormal basis of three-dimensional space.
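The two dot-product properties can be turned directly into a check. The following is a minimal sketch in plain Python; the helper names `dot` and `is_orthonormal` and the tolerance `tol` are assumptions made for this example, not part of any library.

```python
import math

def dot(u, v):
    """Euclidean dot product of two equal-length sequences."""
    return sum(a * b for a, b in zip(u, v))

def is_orthonormal(vectors, tol=1e-9):
    """Return True if <v_i, v_j> is 1 when i == j and 0 otherwise (within tol)."""
    for i, u in enumerate(vectors):
        for j, v in enumerate(vectors):
            expected = 1.0 if i == j else 0.0
            if not math.isclose(dot(u, v), expected, abs_tol=tol):
                return False
    return True

standard_basis = [(1, 0, 0), (0, 1, 0), (0, 0, 1)]
orthogonal_only = [(2, 0, 0), (0, 3, 0)]  # perpendicular, but not unit length

print(is_orthonormal(standard_basis))   # True
print(is_orthonormal(orthogonal_only))  # False
```

A tolerance is used because vectors produced by floating-point computation rarely satisfy the conditions exactly.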

Orthonormal Bases: Building Blocks of Vector Spaces

Orthonormal bases consist of vectors that are both orthogonal and normalized, and that together span the space. Every vector in the space can be written as a unique linear combination of the basis vectors, and the coefficient of each basis vector is simply the inner product of the vector with that basis vector. Coordinates taken in an orthonormal basis also preserve lengths and angles, which simplifies tasks such as projection, transformation, and diagonalization. For these reasons orthonormal bases are fundamental in quantum mechanics, signal processing, and computer graphics, where they offer computational efficiency and numerical stability.
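To make the expansion concrete, here is a small sketch in plain Python. The basis `e1`, `e2` (the standard basis of the plane rotated by 45 degrees) and the vector `v` are arbitrary choices for illustration.

```python
import math

def dot(u, v):
    """Euclidean dot product of two equal-length sequences."""
    return sum(a * b for a, b in zip(u, v))

s = 1 / math.sqrt(2)
# An orthonormal basis of R^2: the standard basis rotated by 45 degrees.
e1 = (s, s)
e2 = (-s, s)

v = (3.0, 1.0)

# With an orthonormal basis, each coordinate is a single inner product.
c1 = dot(v, e1)
c2 = dot(v, e2)

# Rebuild v from its coordinates: v = c1*e1 + c2*e2.
rebuilt = tuple(c1 * a + c2 * b for a, b in zip(e1, e2))

print(rebuilt)                        # matches v up to rounding
print(dot(v, v), c1 ** 2 + c2 ** 2)   # squared length is the same in both coordinate systems
```

With a basis that is not orthonormal, finding `c1` and `c2` would require solving a linear system instead.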

Orthonormal Matrices: Structure and Applications

A square matrix whose columns form an orthonormal set is usually called an orthogonal matrix (the name orthonormal matrix is also seen); its rows then form an orthonormal set as well. Such a matrix satisfies \(Q^T Q = I\), so its inverse is its transpose, and it preserves vector norms and angles under transformation. These properties give stability and efficiency in computations such as QR decomposition and eigenvalue algorithms. Applications include signal processing, where such transforms preserve signal energy, computer graphics, where they represent 3D rotations and reflections, and quantum mechanics, where their complex counterparts, unitary matrices, preserve total probability.
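The property \(Q^T Q = I\) can be checked numerically on a 2D rotation. This is a sketch in plain Python with matrices stored as lists of rows; the helpers `matmul` and `transpose`, the angle `theta`, and the test vector `x` are assumptions made for this example.

```python
import math

def matmul(A, B):
    """Multiply two matrices given as lists of rows."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)] for row in A]

def transpose(A):
    """Swap rows and columns of a matrix given as a list of rows."""
    return [list(col) for col in zip(*A)]

theta = math.pi / 6  # arbitrary rotation angle chosen for illustration
Q = [[math.cos(theta), -math.sin(theta)],
     [math.sin(theta),  math.cos(theta)]]

QtQ = matmul(transpose(Q), Q)  # the 2x2 identity, up to rounding

x = [3.0, 4.0]
Qx = [sum(q * xi for q, xi in zip(row, x)) for row in Q]

print(QtQ)
print(math.hypot(*x), math.hypot(*Qx))  # the two lengths agree up to rounding
```

Because the inverse of `Q` is its transpose, undoing the rotation costs a transpose rather than a matrix inversion.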

Comparing Orthonormal and Orthogonal Concepts

Orthonormal vectors are orthogonal vectors that additionally have unit length. Orthogonal vectors are defined only by a zero dot product, which indicates perpendicularity and places no restriction on length. Any orthogonal set of nonzero vectors can be made orthonormal by dividing each vector by its norm. The distinction matters in applications like signal processing, machine learning, and linear algebra, where normalization affects the computational stability and interpretability of vector representations.
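The step from orthogonal to orthonormal is a rescaling of each vector. A minimal sketch in plain Python follows; the function name `normalize` and the sample vectors are illustrative choices.

```python
import math

def normalize(v):
    """Scale v to unit length; the zero vector cannot be normalized."""
    length = math.sqrt(sum(a * a for a in v))
    if length == 0:
        raise ValueError("cannot normalize the zero vector")
    return tuple(a / length for a in v)

orthogonal = [(1.0, 1.0), (1.0, -1.0)]  # dot product 0, but each has length sqrt(2)
orthonormal = [normalize(v) for v in orthogonal]

for v in orthonormal:
    print(v, math.sqrt(sum(a * a for a in v)))  # each length is 1 up to rounding
```

Rescaling does not change directions, so the vectors remain perpendicular after normalization.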

Orthonormal Transformations in Data Science

Orthonormal transformations in data science are linear maps that preserve vector lengths and angles; they are represented by matrices whose columns form an orthonormal basis. They are central to dimensionality reduction techniques such as Principal Component Analysis (PCA), where the principal directions form an orthonormal set. Rotating data onto all principal components is an isometry, whereas keeping only the leading components is an orthogonal projection that discards the variance along the dropped directions. Because the directions are orthogonal and of unit norm, the transformed coordinates are cheap to compute and easier to interpret in high-dimensional data analysis.
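The length-preserving property follows in one line from \(Q^T Q = I\). Here \(Q\) is assumed to be a real matrix with orthonormal columns, and \(x\), \(y\) are arbitrary vectors (symbols introduced here for illustration):

\[
\|Qx\|^2 = (Qx)^T (Qx) = x^T Q^T Q \, x = x^T x = \|x\|^2 .
\]

The same computation with two vectors gives \(\langle Qx, Qy \rangle = x^T Q^T Q \, y = \langle x, y \rangle\), so angles are preserved as well.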

Orthonormalization Process: Gram-Schmidt Method

The Gram-Schmidt method transforms a set of linearly independent vectors into an orthonormal set that spans the same subspace. At each step it subtracts from the current vector its projections onto all previously orthonormalized vectors, then normalizes the remainder to unit length. The algorithm is a standard way to construct the QR decomposition, although in floating-point arithmetic the classical form can lose orthogonality, so the modified Gram-Schmidt variant or Householder reflections are generally preferred when numerical stability matters.
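The procedure can be sketched in a few lines of plain Python. Note that this sketch uses the modified Gram-Schmidt ordering, in which each projection is computed from the running remainder rather than from the original vector; in exact arithmetic it gives the same result as the classical form described above. The function name `gram_schmidt`, the tolerance `tol`, and the input vectors are assumptions made for this example.

```python
import math

def dot(u, v):
    """Euclidean dot product of two equal-length sequences."""
    return sum(a * b for a, b in zip(u, v))

def gram_schmidt(vectors, tol=1e-12):
    """Orthonormalize linearly independent vectors (modified Gram-Schmidt)."""
    basis = []
    for v in vectors:
        w = list(v)
        for q in basis:
            # Remove the component of the running remainder w along q.
            coeff = dot(w, q)
            w = [wi - coeff * qi for wi, qi in zip(w, q)]
        norm = math.sqrt(dot(w, w))
        if norm < tol:
            raise ValueError("input vectors are linearly dependent")
        basis.append([wi / norm for wi in w])
    return basis

Q = gram_schmidt([(1.0, 1.0, 0.0), (1.0, 0.0, 1.0), (0.0, 1.0, 1.0)])
for q in Q:
    print([round(c, 4) for c in q])
```

The dependence check guards against dividing by a near-zero norm, which is what happens when an input vector already lies in the span of the earlier ones.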

Real-World Applications of Orthonormal Sets

Orthonormal sets play a pivotal role in signal processing, where expanding a signal in an orthonormal basis gives a representation without redundancy and allows exact reconstruction from the coefficients. In machine learning, dimensionality reduction techniques like Principal Component Analysis (PCA) use an orthonormal basis of principal directions, which yields uncorrelated components (not necessarily statistically independent ones) and improves computational stability. These applications rely on orthonormality to preserve accuracy, support data compression, and simplify computations across engineering and scientific domains.
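As a small illustration of representation and reconstruction, the sketch below expands a four-sample signal in the four-point Haar basis, chosen here only because it is a compact orthonormal set; the sample values in `signal` are made up for the example.

```python
import math

def dot(u, v):
    """Euclidean dot product of two equal-length sequences."""
    return sum(a * b for a, b in zip(u, v))

r = 1 / math.sqrt(2)
# Four-sample Haar basis: a small orthonormal basis of R^4 (illustrative choice).
haar = [
    (0.5, 0.5, 0.5, 0.5),
    (0.5, 0.5, -0.5, -0.5),
    (r, -r, 0.0, 0.0),
    (0.0, 0.0, r, -r),
]

signal = (4.0, 2.0, 5.0, 5.0)  # made-up sample values

# Analysis: one inner product per basis vector.
coeffs = [dot(signal, h) for h in haar]

# Synthesis: the weighted sum of basis vectors recovers the signal.
reconstructed = [sum(c * h[k] for c, h in zip(coeffs, haar)) for k in range(4)]

print(coeffs)
print(reconstructed)  # matches signal up to rounding
```

The last coefficient is zero because the final two samples are equal, which hints at why such expansions are useful for compression: small coefficients can be dropped with little effect on the reconstruction.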

Common Pitfalls: Misconceptions about Orthonormality

A common pitfall is to confuse orthonormal vectors with vectors that are merely orthogonal or merely normalized; orthonormal vectors must satisfy both conditions at once. A related mistake is to assume that any set of orthogonal vectors is automatically orthonormal without checking unit length, which leads to errors in basis transformations and projections. Before applying formulas that rely on orthonormality in linear algebra or functional analysis, verify that the vectors are unit vectors and mutually orthogonal.
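The projection error mentioned above is easy to reproduce. In this plain-Python sketch, the vectors `v` and `u` are arbitrary illustrative values; `u` is deliberately not of unit length.

```python
def dot(u, v):
    """Euclidean dot product of two equal-length sequences."""
    return sum(a * b for a, b in zip(u, v))

v = (3.0, 4.0)
u = (2.0, 0.0)  # points along the x-axis but has length 2, not 1

# Shortcut that is only valid when u is a unit vector.
wrong = tuple(dot(v, u) * a for a in u)

# General formula: divide by <u, u> to account for the length of u.
right = tuple(dot(v, u) / dot(u, u) * a for a in u)

print(wrong)  # (12.0, 0.0)
print(right)  # (3.0, 0.0)
```

The shortcut overstates the projection by a factor of the squared length of `u`, here 4.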

libterm.com