Bayesian inference is a statistical method that updates the probability of a hypothesis as new evidence becomes available, combining prior knowledge with observed data. It is widely used in machine learning, data analysis, and scientific research to quantify uncertainty in predictions. The rest of this article compares Bayesian inference with posterior probability, the quantity it produces.

Table of Comparison

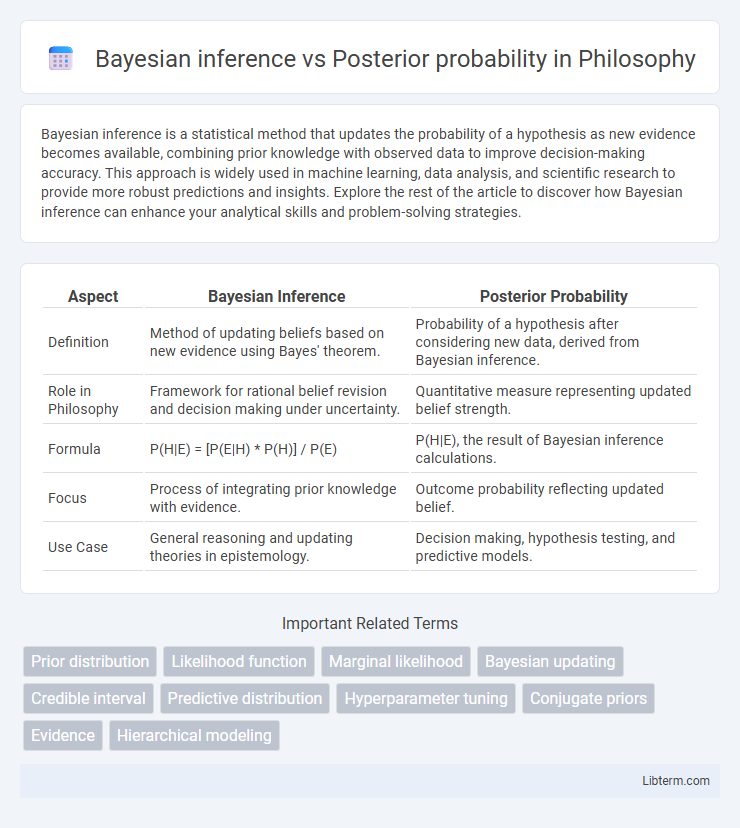

| Aspect | Bayesian Inference | Posterior Probability |
|---|---|---|
| Definition | Method of updating beliefs based on new evidence using Bayes' theorem. | Probability of a hypothesis after considering new data, derived from Bayesian inference. |
| Role in Philosophy | Framework for rational belief revision and decision making under uncertainty. | Quantitative measure representing updated belief strength. |
| Formula | \( P(H \mid E) = \frac{P(E \mid H) P(H)}{P(E)} \) | \( P(H \mid E) \), the result of Bayesian inference calculations. |
| Focus | Process of integrating prior knowledge with evidence. | Outcome probability reflecting updated belief. |
| Use Case | General reasoning and updating theories in epistemology. | Decision making, hypothesis testing, and predictive models. |

Introduction to Bayesian Inference

Bayesian inference is a statistical method that updates the probability estimate for a hypothesis as new evidence or data becomes available. It relies on Bayes' theorem, which combines prior probability with the likelihood of observed data to calculate the posterior probability. Posterior probability represents the updated belief about the hypothesis after considering the evidence, forming the core output of Bayesian inference.
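As a minimal sketch of this update for a single yes/no hypothesis, the function below applies Bayes' theorem directly. The function name, argument names, and the numbers are hypothetical and chosen only for illustration.

```python
def posterior(prior: float, likelihood: float, likelihood_if_false: float) -> float:
    """Return P(H|E) for a binary hypothesis H using Bayes' theorem."""
    # P(E) from the law of total probability over H and not-H.
    evidence = likelihood * prior + likelihood_if_false * (1.0 - prior)
    return likelihood * prior / evidence


# Illustrative inputs: P(H) = 0.3, P(E|H) = 0.8, P(E|not H) = 0.2.
p = posterior(prior=0.3, likelihood=0.8, likelihood_if_false=0.2)
print(round(p, 4))  # 0.6316
```

Observing evidence that is four times more probable under the hypothesis than under its negation raises the belief from 0.3 to about 0.63.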

Defining Posterior Probability

Posterior probability quantifies the probability of a hypothesis given observed data, calculated using Bayes' theorem by updating prior beliefs with new evidence. (In Bayesian terminology, "likelihood" refers to a different quantity: the probability of the data given the hypothesis.) Bayesian inference relies on posterior probability as a core component to make probabilistic predictions and decisions under uncertainty. This process integrates prior distributions and likelihood functions to generate the posterior distribution, which refines knowledge about parameters after data observation.

Key Concepts in Bayesian Analysis

Bayesian inference revolves around updating prior beliefs with observed data to form a posterior probability distribution, which quantifies uncertainty about parameters after considering new evidence. The key concepts in Bayesian analysis include the prior distribution, likelihood function, and posterior distribution, linking observed data with prior knowledge through Bayes' theorem. Posterior probability serves as the foundation for decision-making and prediction in Bayesian frameworks by providing a comprehensive summary of parameter uncertainty.
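The three ingredients named above can be illustrated with a Beta prior and a binomial likelihood, a standard conjugate pair in which the posterior is again a Beta distribution. This is a sketch under that assumption; the `Beta` class, the `update` helper, and the counts are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Beta:
    """Beta(alpha, beta) distribution over a success probability."""
    alpha: float
    beta: float

    @property
    def mean(self) -> float:
        return self.alpha / (self.alpha + self.beta)


def update(prior: Beta, successes: int, failures: int) -> Beta:
    # Conjugacy: a binomial likelihood turns Beta(a, b) into
    # Beta(a + successes, b + failures).
    return Beta(prior.alpha + successes, prior.beta + failures)


prior = Beta(2.0, 2.0)                        # prior distribution
post = update(prior, successes=7, failures=3)  # likelihood: 7 of 10 trials succeed
print(post, post.mean)                         # posterior is Beta(9, 5), mean 9/14
```

The posterior mean (9/14, about 0.64) sits between the prior mean (0.5) and the observed frequency (0.7), which is the sense in which the posterior links prior knowledge with the data.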

Bayesian Inference: Process and Principles

Bayesian inference is a statistical method that updates the probability of a hypothesis based on new evidence by applying Bayes' theorem. This process involves combining prior probability, which reflects initial beliefs before data observation, with the likelihood of observed data to compute the posterior probability. The core principle is that updating is iterative: the posterior from one round of evidence serves as the prior for the next, so the estimate tends to sharpen as more relevant data becomes available.
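Repeated updating can be sketched with a small discrete example in which each posterior is fed back in as the next prior. The three candidate coin biases, the flip sequence, and the `bayes_update` helper are hypothetical, chosen only to show the mechanics.

```python
from typing import Dict


def bayes_update(prior: Dict[float, float], heads: bool) -> Dict[float, float]:
    """One Bayes step over discrete hypotheses about P(heads)."""
    # Multiply each prior weight by the likelihood of the observed flip.
    unnormalized = {h: p * (h if heads else 1.0 - h) for h, p in prior.items()}
    total = sum(unnormalized.values())  # marginal probability of the flip
    return {h: w / total for h, w in unnormalized.items()}


# Start with equal belief in three candidate biases.
belief = {0.25: 1 / 3, 0.5: 1 / 3, 0.75: 1 / 3}
for flip in [True, True, False, True]:  # heads, heads, tails, heads
    belief = bayes_update(belief, flip)  # posterior becomes the next prior

for bias, prob in belief.items():
    print(f"P(bias={bias}) = {prob:.3f}")
```

After three heads and one tail, most of the probability mass has moved to the 0.75 hypothesis, though the other two are not ruled out.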

Deriving Posterior Probability in Bayesian Framework

Deriving posterior probability in the Bayesian framework involves updating prior beliefs with new evidence using Bayes' theorem, which mathematically expresses the posterior as the product of the likelihood function and the prior probability divided by the marginal likelihood. This process quantifies uncertainty by combining the prior distribution, representing initial knowledge, with the likelihood of observed data under different hypotheses. Bayesian inference thus provides a principled method to compute posterior distributions, enabling probabilistic reasoning and decision-making under uncertainty.
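The derivation can be written out in a few lines from the product rule of probability. Here \( H \) and \( E \) denote the hypothesis and the evidence, and the set \( \{H_i\} \) is assumed to be mutually exclusive and exhaustive; that indexing is introduced for illustration.

```latex
\begin{align}
P(H, E) &= P(E \mid H)\, P(H) = P(H \mid E)\, P(E) \\
P(H \mid E) &= \frac{P(E \mid H)\, P(H)}{P(E)}, \qquad P(E) > 0 \\
P(E) &= \sum_{i} P(E \mid H_i)\, P(H_i)
\end{align}
```

The first line factors the joint probability in two ways, the second solves for the posterior, and the third expands the marginal likelihood over the competing hypotheses.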

Bayesian Inference vs Posterior Probability: Core Differences

Bayesian inference is a comprehensive statistical framework that updates the probability of a hypothesis based on observed data and prior beliefs, while posterior probability is the specific updated probability distribution of the hypothesis after considering the evidence. Bayesian inference encompasses prior probability, likelihood of observed data, and posterior probability to make probabilistic predictions or decisions. The core difference lies in Bayesian inference being the entire process of updating beliefs, whereas posterior probability represents the final outcome of this process.

Mathematical Foundations: Equations and Definitions

Bayesian inference relies on Bayes' theorem, expressed mathematically as \( P(\theta|D) = \frac{P(D|\theta)P(\theta)}{P(D)} \), where \( \theta \) represents parameters and \( D \) the observed data. Posterior probability, \( P(\theta|D) \), quantifies the updated belief about the parameters after observing data, combining the prior probability \( P(\theta) \) and the likelihood \( P(D|\theta) \). The normalization factor \( P(D) = \int P(D|\theta)P(\theta)d\theta \), known as the marginal likelihood or evidence, ensures the posterior distribution integrates to one over all parameter values (or sums to one when \( \theta \) is discrete).
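When the integral for \( P(D) \) has no convenient closed form, it can be approximated numerically. The sketch below uses a midpoint-rule grid over \( \theta \in [0, 1] \) with a uniform prior and a binomial likelihood; the model, the grid size, and the `grid_posterior` helper are assumptions made for illustration.

```python
from math import comb


def grid_posterior(k: int, n: int, num_points: int = 1000):
    """Approximate P(theta|D) on a grid for k successes in n trials."""
    width = 1.0 / num_points
    thetas = [(i + 0.5) * width for i in range(num_points)]  # cell midpoints
    prior = [1.0 for _ in thetas]  # uniform prior density on [0, 1]
    likelihood = [comb(n, k) * t**k * (1 - t) ** (n - k) for t in thetas]
    # P(D) = integral of P(D|theta) P(theta) d(theta), midpoint rule.
    evidence = sum(l * p for l, p in zip(likelihood, prior)) * width
    posterior = [l * p / evidence for l, p in zip(likelihood, prior)]
    return thetas, posterior, evidence, width


thetas, post, evidence, width = grid_posterior(k=7, n=10)
print(evidence)           # close to 1/(n + 1) = 1/11 under a uniform prior
print(sum(post) * width)  # posterior density integrates to about 1
```

Dividing by the evidence is what turns the product of likelihood and prior into a proper density; without it the curve has the right shape but the wrong area.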

Applications of Bayesian Inference

Bayesian inference is widely applied in machine learning for updating model parameters using observed data, enhancing prediction accuracy in fields like natural language processing and computer vision. It plays a critical role in medical diagnostics by incorporating prior knowledge and patient data to improve disease probability estimations. Bayesian methods also optimize decision-making in finance and risk management by quantifying uncertainty and refining posterior probability distributions for asset returns and default risks.

Practical Examples of Posterior Probability

Posterior probability quantifies the probability of a hypothesis given new evidence, as demonstrated in medical diagnostics where test results update the probability of disease presence. Bayesian inference applies this concept by combining prior beliefs with observed data to refine predictions, such as estimating the probability of a customer purchasing a product after analyzing browsing history. Another practical example is spam email filtering, where posterior probabilities estimate how probable it is that an email is spam based on word frequencies and previous occurrences.
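The spam-filtering case can be sketched as a tiny naive Bayes classifier, which assumes words occur independently given the class. The training messages, the add-one smoothing, and the function names below are all hypothetical; a real filter would be trained on far more data.

```python
from collections import Counter
from math import exp, log

# Hypothetical toy training data.
spam_docs = ["win money now", "free money offer", "win free prize"]
ham_docs = ["meeting schedule today", "project money report", "lunch today"]


def word_counts(docs):
    return Counter(word for doc in docs for word in doc.split())


spam_counts, ham_counts = word_counts(spam_docs), word_counts(ham_docs)
vocab = set(spam_counts) | set(ham_counts)


def log_likelihood(words, counts):
    """log P(words | class) with add-one (Laplace) smoothing."""
    total = sum(counts.values())
    # Words outside the training vocabulary are ignored.
    return sum(log((counts[w] + 1) / (total + len(vocab))) for w in words if w in vocab)


def spam_posterior(message: str, prior_spam: float = 0.5) -> float:
    """Return P(spam | message) by Bayes' theorem."""
    words = message.split()
    log_spam = log(prior_spam) + log_likelihood(words, spam_counts)
    log_ham = log(1.0 - prior_spam) + log_likelihood(words, ham_counts)
    # Normalize in log space to avoid underflow on long messages.
    m = max(log_spam, log_ham)
    return exp(log_spam - m) / (exp(log_spam - m) + exp(log_ham - m))


print(spam_posterior("win free money"))  # above 0.5: leans spam
print(spam_posterior("meeting today"))   # below 0.5: leans not spam
```

The prior `prior_spam` plays the role of "previous occurrences" in the text: raising it shifts every message's posterior toward spam before any words are examined.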

Conclusion: Integrating Bayesian Inference and Posterior Probability

Bayesian inference and posterior probability work together: the former is the procedure for updating beliefs on new evidence, and the latter is the result, so that probability estimates reflect both prior knowledge and observed data. Bayesian inference provides the mathematical framework to calculate posterior probability, which quantifies the probability of hypotheses after incorporating prior knowledge and current data. This combination supports well-calibrated predictions and statistical analysis across fields such as machine learning, medical diagnostics, and financial modeling.

libterm.com
