Concurrent processing lets multiple tasks make progress during overlapping time periods, which increases efficiency and reduces wait times in computing and programming environments. On multicore hardware, concurrent tasks can also execute in parallel, improving resource utilization and application performance. The rest of this article explains how concurrent and asynchronous systems differ and how each can improve your workflows.

Comparison Table

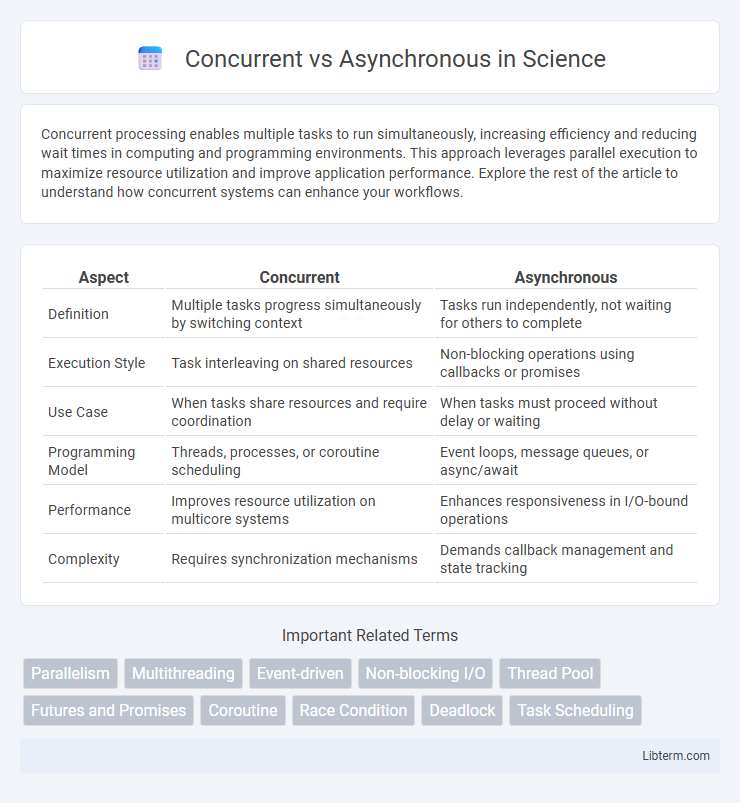

| Aspect | Concurrent | Asynchronous |
|---|---|---|
| Definition | Multiple tasks make progress in overlapping time periods, by context switching or by running in parallel | An operation is started and the caller continues with other work instead of blocking until it completes |
| Execution Style | Tasks interleaved on shared resources, or run in parallel on multiple cores | Non-blocking operations completed through callbacks, promises, or async/await |
| Use Case | When tasks share resources and require coordination | When work should not stall while waiting on I/O or other slow operations |
| Programming Model | Threads, processes, or coroutine scheduling | Event loops, message queues, or async/await |
| Performance | Improves resource utilization, especially on multicore systems | Improves responsiveness and throughput in I/O-bound operations |
| Complexity | Requires synchronization mechanisms such as locks or semaphores | Requires careful callback management, error propagation, and state tracking |

Understanding Concurrency and Asynchrony

Concurrency means that multiple tasks make progress during overlapping time periods. The tasks may interleave on a single core or run in parallel on several cores through threads or processes. Asynchrony lets a program start an operation and continue with other work instead of blocking until that operation completes, which makes it well suited to I/O-bound work such as network and disk access. The two ideas overlap: asynchronous code is one way to achieve concurrency, often on a single thread. Understanding both is essential for designing responsive applications that maximize system throughput and minimize latency.
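Concurrency without parallelism can be illustrated with a minimal sketch, assuming Python 3.9+ and the standard `asyncio` module. Two coroutines share one thread and make progress in turns, because each `await asyncio.sleep(0)` hands control back to the event loop. The names `worker` and `run_interleaved` are illustrative only.

```python
import asyncio

async def worker(name: str, steps: int, log: list[str]) -> None:
    """Record each step, then yield control so the other task can run."""
    for i in range(steps):
        log.append(f"{name}{i}")
        await asyncio.sleep(0)  # suspend here; the event loop resumes another task

async def run_interleaved() -> list[str]:
    log: list[str] = []
    # Both workers run on the same thread; gather schedules them as tasks in order.
    await asyncio.gather(worker("A", 2, log), worker("B", 2, log))
    return log

if __name__ == "__main__":
    print(asyncio.run(run_interleaved()))  # ['A0', 'B0', 'A1', 'B1']
```

The alternating log shows that the two tasks overlapped in time even though only one of them was executing at any instant.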

Core Differences Between Concurrent and Asynchronous Programming

Concurrent programming structures a program as multiple tasks that progress in overlapping time periods, and it improves CPU utilization by switching between tasks or running them in parallel. Asynchronous programming centers on non-blocking operations. The program starts a slow operation, such as a network request, and keeps executing other work while it waits for that operation to finish. The core difference is one of scope. Concurrency concerns how many tasks a system manages at once, whereas asynchrony concerns how a program handles the timing and results of operations that do not complete immediately.
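The following sketch isolates the asynchronous pattern and assumes Python 3.9+ with `asyncio`. `asyncio.create_task` starts a simulated slow operation, the caller keeps running, and the result is collected later with `await`. The `fetch` coroutine is a hypothetical stand-in for a real network call, and `asyncio.sleep` only simulates its latency.

```python
import asyncio

async def fetch(log: list[str]) -> str:
    """Hypothetical slow operation; asyncio.sleep simulates network latency."""
    log.append("fetch started")
    await asyncio.sleep(0.05)
    log.append("fetch finished")
    return "payload"

async def main() -> tuple[str, list[str]]:
    log: list[str] = []
    task = asyncio.create_task(fetch(log))  # schedule the operation, but do not wait yet
    log.append("caller continues")          # runs before fetch has even started
    result = await task                     # suspend only when the result is needed
    return result, log

if __name__ == "__main__":
    result, log = asyncio.run(main())
    print(result, log)
```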

Real-World Examples: Concurrent vs Asynchronous Operations

Concurrent operations handle multiple tasks by interleaving their execution, as in a web server that serves many client requests at the same time. Asynchronous operations let a system start a task and continue other work without waiting for it to finish, as in a user interface that stays responsive while data loads in the background. Other examples include a database engine that executes many queries concurrently and an email client that fetches messages asynchronously so that it never blocks the user.
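A server that handles many client requests at once can be sketched with a thread pool from Python's standard `concurrent.futures` module (Python 3.9+ for the type hints). `handle_request` is a hypothetical handler, and its `time.sleep` stands in for blocking work such as a database query. Because the pool has five threads, the five requests wait in overlapping time periods rather than one after another.

```python
import time
from concurrent.futures import ThreadPoolExecutor

def handle_request(request_id: int) -> str:
    """Hypothetical request handler; time.sleep simulates a blocking query."""
    time.sleep(0.1)
    return f"response {request_id}"

def serve(request_ids: list[int], workers: int = 5) -> list[str]:
    # Each request runs on a pool thread; map() returns results in input order.
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(handle_request, request_ids))

if __name__ == "__main__":
    start = time.perf_counter()
    responses = serve([1, 2, 3, 4, 5])
    elapsed = time.perf_counter() - start
    # Roughly 0.1 s rather than 0.5 s, because the blocking waits overlap.
    print(responses, f"{elapsed:.2f}s")
```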

Pros and Cons of Concurrent Programming

Concurrent programming lets multiple tasks make progress at once and, on multicore hardware, keeps several CPU cores busy, which improves application responsiveness and resource utilization. However, it adds complexity to the management of shared resources. It also raises the risk of race conditions, deadlocks, and subtle bugs that are difficult to reproduce and debug. Proper synchronization mechanisms and careful design are essential to mitigate these risks and realize the performance benefits of concurrency.
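A race condition and its standard fix can be shown with Python's `threading` module. Incrementing a shared integer is a read-modify-write sequence, so without coordination two threads can read the same value and one update is lost. Holding a `threading.Lock` around the update makes it atomic with respect to the other threads. `SafeCounter` and `run_threads` are illustrative names, not library types.

```python
import threading

class SafeCounter:
    """Illustrative shared counter protected by a lock."""

    def __init__(self) -> None:
        self.value = 0
        self._lock = threading.Lock()

    def increment(self) -> None:
        # The lock serializes the read-modify-write so no update is lost.
        with self._lock:
            self.value += 1

def run_threads(num_threads: int, increments: int) -> int:
    counter = SafeCounter()

    def work() -> None:
        for _ in range(increments):
            counter.increment()

    threads = [threading.Thread(target=work) for _ in range(num_threads)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()  # wait for every thread before reading the result
    return counter.value

if __name__ == "__main__":
    print(run_threads(4, 100_000))  # 400000
```

If you remove the `with self._lock:` line, the total can come out lower than expected. Whether it does on a given run depends on thread scheduling, which is why bugs of this kind are hard to reproduce.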

Pros and Cons of Asynchronous Programming

Asynchronous programming enables non-blocking operations, which improves responsiveness and resource utilization because tasks proceed concurrently instead of each one waiting for the previous one to complete. However, it complicates code structure. Callbacks, promises, or async/await constructs must be handled carefully to avoid problems such as deeply nested callbacks ("callback hell") and race conditions on shared state. Debugging and error handling are also harder than in synchronous code, which can increase development time and maintenance costs.
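Error handling is one of the harder parts of asynchronous code. The following sketch assumes Python 3.9+ `asyncio`. When `return_exceptions=True` is set, `asyncio.gather` returns each raised exception as a value in the results list instead of propagating the first failure, so one failing operation does not hide the results of the others. `fetch_item` is a hypothetical operation that fails for negative IDs.

```python
import asyncio

async def fetch_item(item_id: int) -> str:
    """Hypothetical async operation that fails for invalid IDs."""
    await asyncio.sleep(0.01)  # simulated I/O latency
    if item_id < 0:
        raise ValueError(f"invalid id: {item_id}")
    return f"item {item_id}"

async def fetch_all(ids: list[int]) -> tuple[list[str], list[Exception]]:
    results = await asyncio.gather(*(fetch_item(i) for i in ids), return_exceptions=True)
    # Separate successes from failures instead of letting one error abort everything.
    ok = [r for r in results if not isinstance(r, Exception)]
    errors = [r for r in results if isinstance(r, Exception)]
    return ok, errors

if __name__ == "__main__":
    ok, errors = asyncio.run(fetch_all([1, -2, 3]))
    print(ok)      # ['item 1', 'item 3']
    print(errors)  # [ValueError('invalid id: -2')]
```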

Performance Implications: Which is Faster?

Concurrent execution lets multiple tasks progress at once, improving resource utilization and responsiveness, especially on multicore processors. Asynchronous programming improves performance by avoiding blocking operations, so the program does useful work instead of idling while it waits. Which approach is faster depends on the workload. CPU-bound tasks benefit most from parallel execution across cores. I/O-bound operations gain the most from asynchrony, because their running time is dominated by waiting on networks or disks.
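The I/O-bound case can be measured with a small Python 3.9+ sketch. Ten simulated I/O waits of 0.1 s each finish in roughly 0.1 s when awaited together with `asyncio.gather`, compared with about 1 s in a sequential loop. `asyncio.sleep` stands in for real network or disk latency. The CPU-bound case behaves differently in standard CPython builds. There, the global interpreter lock prevents threads from executing Python bytecode in parallel, so CPU-heavy work is usually spread across cores with processes, for example through `concurrent.futures.ProcessPoolExecutor`.

```python
import asyncio
import time

async def simulated_io(delay: float) -> float:
    """Stand-in for a network or disk wait; the event loop is free meanwhile."""
    await asyncio.sleep(delay)
    return delay

async def sequential(n: int, delay: float) -> float:
    start = time.perf_counter()
    for _ in range(n):
        await simulated_io(delay)  # each wait starts only after the previous one ends
    return time.perf_counter() - start

async def overlapped(n: int, delay: float) -> float:
    start = time.perf_counter()
    await asyncio.gather(*(simulated_io(delay) for _ in range(n)))  # all waits overlap
    return time.perf_counter() - start

if __name__ == "__main__":
    print(f"sequential: {asyncio.run(sequential(10, 0.1)):.2f}s")  # about 1.0 s
    print(f"overlapped: {asyncio.run(overlapped(10, 0.1)):.2f}s")  # about 0.1 s
```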

Common Use Cases for Concurrency

Concurrency is commonly employed in web servers to handle multiple client requests simultaneously, improving responsiveness and throughput. It is also widely used in real-time systems such as gaming and simulation where multiple processes need to operate in overlapping time frames. Furthermore, concurrent programming is essential in user interface applications to maintain smooth interaction while performing background tasks like data loading or processing.
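The user-interface case can be sketched with a background thread (Python 3.9+). The main thread stands in for a UI loop and keeps handling frames while a worker thread loads data. The worker hands its result back through a thread-safe `queue.Queue`. `load_data` and `ui_loop` are hypothetical placeholders. Real GUI toolkits provide their own mechanisms for passing results back to the UI thread.

```python
import queue
import threading
import time

def load_data(results: queue.Queue) -> None:
    """Hypothetical slow loader that runs on a worker thread."""
    time.sleep(0.2)          # simulated disk or network delay
    results.put([1, 2, 3])   # queue.Queue is thread-safe, so no extra lock is needed

def ui_loop() -> tuple[int, list[int]]:
    results: queue.Queue = queue.Queue()
    # daemon=True so a stuck loader cannot keep the process alive at exit
    threading.Thread(target=load_data, args=(results,), daemon=True).start()
    frames = 0
    while True:
        frames += 1  # placeholder for handling input and redrawing one frame
        try:
            data = results.get_nowait()  # poll for the result without blocking
            return frames, data
        except queue.Empty:
            time.sleep(0.01)  # wait roughly one frame, then keep the UI running

if __name__ == "__main__":
    frames, data = ui_loop()
    print(f"UI handled {frames} frames while loading {data}")
```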

Common Use Cases for Asynchronous Workflows

Asynchronous workflows are commonly used in web applications to handle user requests without waiting for long-running processes, improving responsiveness and scalability. They enable background processing in systems like email delivery, data fetching, and task scheduling where immediate results are not required. Cloud services and microservices architectures frequently leverage asynchronous messaging to decouple components and ensure efficient resource utilization.
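Asynchronous messaging between decoupled components can be sketched in-process with `asyncio.Queue` (Python 3.9+). A producer enqueues jobs and moves on immediately, while a separate consumer processes them at its own pace. The email-delivery framing and `send_email` are hypothetical. Production systems typically use an external message broker, but the decoupling principle is the same.

```python
import asyncio

async def send_email(address: str) -> str:
    """Hypothetical delivery step; asyncio.sleep simulates SMTP latency."""
    await asyncio.sleep(0.01)
    return address

async def consumer(jobs: asyncio.Queue, delivered: list[str]) -> None:
    while True:
        address = await jobs.get()  # wait for the next job without blocking the loop
        try:
            delivered.append(await send_email(address))
        finally:
            jobs.task_done()        # mark the job complete so jobs.join() can return

async def main(addresses: list[str]) -> list[str]:
    jobs: asyncio.Queue = asyncio.Queue()
    delivered: list[str] = []
    worker = asyncio.create_task(consumer(jobs, delivered))
    for address in addresses:
        jobs.put_nowait(address)    # the producer enqueues and moves on immediately
    await jobs.join()               # wait until every queued job has been processed
    worker.cancel()                 # stop the now-idle consumer
    try:
        await worker
    except asyncio.CancelledError:
        pass
    return delivered

if __name__ == "__main__":
    print(asyncio.run(main(["a@example.com", "b@example.com"])))
```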

Choosing the Right Approach: Factors to Consider

Choosing between concurrent and asynchronous programming depends on the nature of the task, system resources, and desired responsiveness. Concurrent programming suits CPU-bound tasks requiring parallel execution, while asynchronous approaches excel in I/O-bound operations by enabling non-blocking workflows. Consider factors such as CPU availability, latency sensitivity, and complexity of task coordination to optimize performance and resource utilization.
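Real programs often mix the two models. A common case arises when async code must call a blocking function. `asyncio.to_thread`, added in Python 3.9, runs that function in a worker thread so the event loop can keep serving other tasks. In the sketch below, `blocking_lookup` is a hypothetical stand-in for a library call that has no async API, and `heartbeat` represents other work the loop continues to do.

```python
import asyncio
import time

def blocking_lookup(key: str) -> str:
    """Hypothetical blocking call, e.g. a library with no async API."""
    time.sleep(0.1)
    return key.upper()

async def heartbeat(beats: list[int]) -> None:
    # Keeps ticking on the event loop while the blocking call runs in another thread.
    for i in range(5):
        beats.append(i)
        await asyncio.sleep(0.01)

async def main() -> tuple[str, list[int]]:
    beats: list[int] = []
    result, _ = await asyncio.gather(
        asyncio.to_thread(blocking_lookup, "config"),  # runs in a worker thread
        heartbeat(beats),
    )
    return result, beats

if __name__ == "__main__":
    print(asyncio.run(main()))
```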

Best Practices for Implementing Concurrency and Asynchrony

Implement concurrency by breaking work into independent units that can run in parallel to maximize CPU utilization. Use threads or processes, and prevent race conditions with synchronization primitives such as locks or semaphores. Implement asynchrony with non-blocking I/O, callbacks, promises, or async/await patterns, so that slow operations do not block the main execution thread and the application stays responsive. In both cases, choose the model that matches the nature of the task, ensure thread safety, and manage resources such as threads and connections carefully. Test rigorously to catch deadlocks and race conditions.
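Two of these practices are bounding how much work runs at once and never waiting indefinitely. Both can be sketched with `asyncio.Semaphore` and `asyncio.wait_for` (Python 3.9+). `limited_fetch` is hypothetical, and the limit of 3 concurrent operations and the 1-second timeout are arbitrary illustrative values.

```python
import asyncio

async def limited_fetch(i: int, sem: asyncio.Semaphore, stats: dict[str, int]) -> int:
    """Hypothetical I/O call that records how many calls are in flight."""
    async with sem:  # at most `limit` coroutines get past this point at once
        stats["in_flight"] += 1
        stats["peak"] = max(stats["peak"], stats["in_flight"])
        try:
            await asyncio.sleep(0.02)  # simulated I/O latency
            return i
        finally:
            stats["in_flight"] -= 1

async def run_all(n: int, limit: int = 3, timeout: float = 1.0) -> tuple[list[int], int]:
    sem = asyncio.Semaphore(limit)
    stats = {"in_flight": 0, "peak": 0}
    calls = [limited_fetch(i, sem, stats) for i in range(n)]
    # If the batch exceeds the timeout, wait_for cancels it and raises asyncio.TimeoutError.
    results = await asyncio.wait_for(asyncio.gather(*calls), timeout)
    return results, stats["peak"]

if __name__ == "__main__":
    results, peak = asyncio.run(run_all(10))
    print(results, "peak in flight:", peak)  # peak in flight: 3
```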

Concurrent Infographic

libterm.com