In-memory cache stores data directly in the system's RAM, enabling very fast data retrieval and lower latency than traditional disk-based storage. This technology improves the performance of applications that need quick access to frequently used information, supporting real-time data processing and a better user experience. The sections below explain how implementing an in-memory cache can improve your system's speed and efficiency, and how it compares with a local cache.

Comparison Table

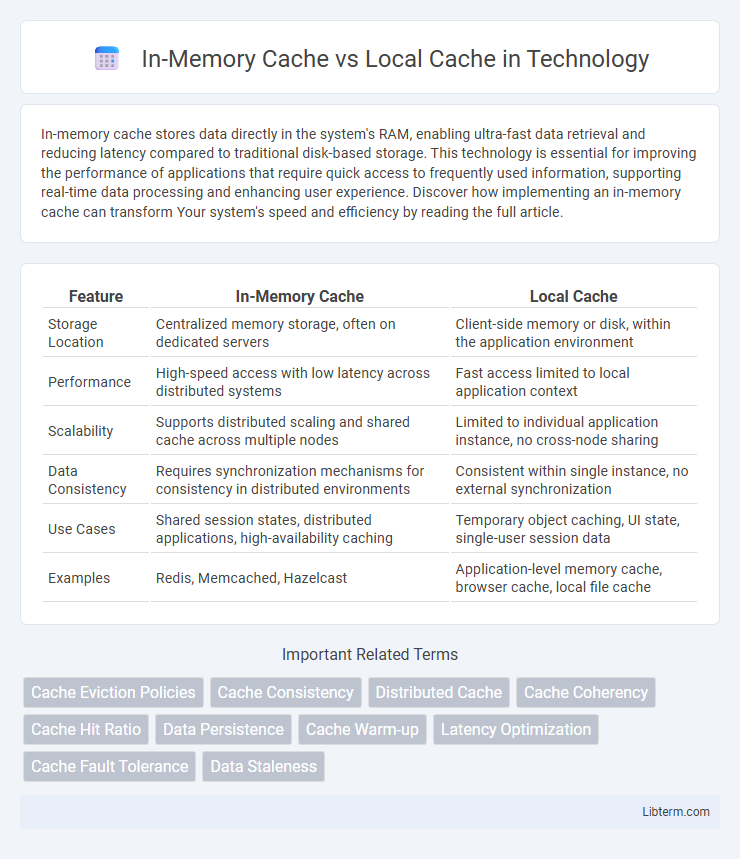

| Feature | In-Memory Cache | Local Cache |
|---|---|---|
| Storage Location | Centralized memory storage, often on dedicated servers | Client-side memory or disk, within the application environment |
| Performance | High-speed access with low latency across distributed systems | Fast access limited to local application context |
| Scalability | Supports distributed scaling and shared cache across multiple nodes | Limited to individual application instance, no cross-node sharing |
| Data Consistency | Requires synchronization mechanisms for consistency in distributed environments | Consistent within single instance, no external synchronization |
| Use Cases | Shared session states, distributed applications, high-availability caching | Temporary object caching, UI state, single-user session data |
| Examples | Redis, Memcached, Hazelcast | Application-level memory cache, browser cache, local file cache |

Introduction to In-Memory Cache and Local Cache

In this comparison, an in-memory cache is a shared cache that holds data in RAM, typically on dedicated servers such as Redis or Memcached, so that many application instances can read frequently accessed information with low latency. A local cache stores data on the user's device or inside a single application instance, improving performance by avoiding repeated fetches from remote servers. Both strategies speed up data retrieval but differ in scope: an in-memory cache is shared across instances, while a local cache serves only the process or user that owns it.

How In-Memory Cache Works

In-memory cache stores data directly within the system's RAM, enabling ultra-fast data retrieval by eliminating disk I/O latency. This caching method holds frequently accessed data objects in memory, ensuring quick response times and reducing backend server load. Its volatile nature requires strategies like replication or persistence to prevent data loss during power failures or system crashes.
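Applications commonly read from a shared in-memory cache with the cache-aside pattern: check the cache first, and on a miss load the data from the backing store, then write it into the cache so later requests skip the slow path. The sketch below assumes a dict-backed `SharedCache` class standing in for a networked cache server such as Redis, and a hypothetical `load_user` callback in place of a database query; neither is a real library API.

```python
from typing import Callable, Optional


class SharedCache:
    """Hypothetical stand-in for a networked cache server; here just a dict in RAM."""

    def __init__(self) -> None:
        self._data: dict[str, str] = {}

    def get(self, key: str) -> Optional[str]:
        return self._data.get(key)

    def set(self, key: str, value: str) -> None:
        self._data[key] = value


def get_user(cache: SharedCache, user_id: int, load_user: Callable[[int], str]) -> str:
    """Cache-aside read: try the cache, fall back to the backing store, then populate the cache."""
    key = f"user:{user_id}"
    cached = cache.get(key)
    if cached is not None:
        return cached  # hit: no backend work
    value = load_user(user_id)  # miss: slow path, e.g. a database query
    cache.set(key, value)
    return value


if __name__ == "__main__":
    backend_reads: list[int] = []

    def load_user(user_id: int) -> str:
        # Hypothetical backend lookup that records each call.
        backend_reads.append(user_id)
        return f"user-{user_id}"

    cache = SharedCache()
    get_user(cache, 7, load_user)  # miss: loads from the backing store
    get_user(cache, 7, load_user)  # hit: served from the cache
    print(len(backend_reads))  # 1
```

In a real deployment the cache write usually carries an expiry, and because the data lives only in RAM, losing the cache server means reads fall through to the backing store until the cache warms up again.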

How Local Cache Functions

Local cache functions by storing data directly within the application's memory space, enabling rapid data retrieval without network latency. It leverages fast access to frequently used objects or query results, minimizing computation and database load. This approach enhances performance in single-instance or tightly coupled systems but has limitations in scalability and data synchronization across distributed environments.

Performance Comparison: In-Memory vs Local Cache

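How in-memory and local caches compare on speed depends on where the local cache lives. If the local cache is stored on disk, a RAM-based in-memory cache retrieves data faster because it avoids disk I/O latency, while the disk-based local cache uses less memory and suits less frequently accessed data. If the local cache lives in the application's own memory, it usually answers faster than a shared in-memory cache such as Redis, because it skips the network round trip; the shared cache's advantage is that every instance benefits from the same cached entries, which raises hit rates in high-throughput systems. Actual speedups depend on workload, hit rate, and network latency, so benchmark with representative traffic rather than relying on a single headline figure.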
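The trade-off between lookup cost and hit rate can be estimated with a weighted average: every read pays the cache lookup, and misses additionally pay the backend fetch, so average latency = lookup + (1 - hit_rate) × backend. The function below computes that estimate; the latencies and hit rates in the usage example are illustrative placeholders chosen for the sketch, not measurements.

```python
def average_read_latency_ms(hit_rate: float, lookup_ms: float, backend_ms: float) -> float:
    """Estimate mean read latency for a cache in front of a slower backend.

    Every read pays the cache lookup; a miss (probability 1 - hit_rate)
    additionally pays the backend fetch.
    """
    if not 0.0 <= hit_rate <= 1.0:
        raise ValueError("hit_rate must be between 0 and 1")
    return lookup_ms + (1.0 - hit_rate) * backend_ms


if __name__ == "__main__":
    # Placeholder numbers: an in-process lookup vs. a lookup that crosses
    # the network, both in front of a 20 ms database query. The shared cache
    # is assumed to have a higher hit rate because all instances populate it.
    local = average_read_latency_ms(hit_rate=0.80, lookup_ms=0.001, backend_ms=20.0)
    shared = average_read_latency_ms(hit_rate=0.95, lookup_ms=0.5, backend_ms=20.0)
    print(f"local:  {local:.3f} ms")
    print(f"shared: {shared:.3f} ms")
```

With these placeholder inputs the shared cache averages about 1.5 ms against about 4 ms for the local one, because its higher hit rate outweighs the network hop; with equal hit rates, the in-process cache would come out ahead.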

Scalability Considerations

In-memory cache scales better for large distributed systems because it provides fast data access across multiple nodes, whereas a local cache is confined to a single application instance, which limits its effectiveness as demand grows. Systems such as Redis and Memcached spread keys across several servers (Redis through Redis Cluster, Memcached through client-side key distribution), so capacity grows by adding nodes and every application instance reads the same shared copy of each key. A local cache still offers low-latency access, but each instance holds its own copy of the data, so adding instances duplicates cached entries instead of pooling capacity, and keeping those copies coherent becomes harder in complex environments.
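One technique for spreading keys across cache nodes is consistent hashing, where adding or removing a node remaps only a fraction of the keys instead of nearly all of them. Many Memcached client libraries offer it (often under the name ketama), while Redis Cluster instead assigns keys to fixed hash slots. The sketch below is a minimal hash ring with virtual nodes; the node names such as `cache-a` are hypothetical, and SHA-256 is used only to spread keys evenly, not for security.

```python
import bisect
import hashlib


def _position(value: str) -> int:
    """Map a string to a position on the ring."""
    return int.from_bytes(hashlib.sha256(value.encode("utf-8")).digest()[:8], "big")


class HashRing:
    """Minimal consistent-hash ring with virtual nodes."""

    def __init__(self, nodes: list[str], vnodes: int = 100) -> None:
        self._vnodes = vnodes
        self._ring: list[tuple[int, str]] = []  # sorted (position, node) pairs
        for node in nodes:
            self.add_node(node)

    def add_node(self, node: str) -> None:
        # Each physical node appears at many points to even out the key spread.
        for i in range(self._vnodes):
            bisect.insort(self._ring, (_position(f"{node}#{i}"), node))

    def remove_node(self, node: str) -> None:
        self._ring = [entry for entry in self._ring if entry[1] != node]

    def node_for(self, key: str) -> str:
        if not self._ring:
            raise LookupError("ring has no nodes")
        # First virtual node at or after the key's position, wrapping around the ring.
        index = bisect.bisect(self._ring, (_position(key), ""))
        return self._ring[index % len(self._ring)][1]


if __name__ == "__main__":
    ring = HashRing(["cache-a", "cache-b", "cache-c"])
    keys = [f"session:{i}" for i in range(1000)]
    before = {k: ring.node_for(k) for k in keys}
    ring.add_node("cache-d")
    moved = sum(1 for k in keys if ring.node_for(k) != before[k])
    print(f"{moved} of {len(keys)} keys moved")
```

When the fourth node joins, only the keys that now fall on its arcs move, around one quarter in expectation, and every moved key lands on the new node; with naive `hash(key) % node_count` placement, most keys would change servers.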

Data Consistency and Reliability

An in-memory cache keeps a single shared copy of each entry in RAM that multiple processes or servers access, so an update written by one instance is visible to the others. A local cache resides within a single application instance; it offers faster access but can serve stale data, because an update made elsewhere does not reach it unless its entries expire or are explicitly invalidated. In distributed environments, shared caches such as Redis or Memcached therefore make consistency easier to manage, while a local cache suits isolated workloads or data that can tolerate being briefly out of date.
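A common way to bound how stale a local cache can get is to give each entry a time-to-live (TTL), so a value updated elsewhere is picked up within at most that interval, combined with explicit invalidation when the application itself knows a key changed. The class below is a minimal sketch; `LocalTTLCache` is a hypothetical name, and its injectable `clock` parameter exists only to make expiry easy to demonstrate and test.

```python
import time
from typing import Any, Callable, Hashable, Optional


class LocalTTLCache:
    """Per-process cache whose entries expire after ttl_seconds, bounding staleness."""

    def __init__(self, ttl_seconds: float, clock: Callable[[], float] = time.monotonic) -> None:
        self._ttl = ttl_seconds
        self._clock = clock
        self._entries: dict[Hashable, tuple[Any, float]] = {}

    def get(self, key: Hashable) -> Optional[Any]:
        entry = self._entries.get(key)
        if entry is None:
            return None
        value, stored_at = entry
        if self._clock() - stored_at >= self._ttl:
            # Too old: treat as a miss so the caller reloads fresh data.
            del self._entries[key]
            return None
        return value

    def put(self, key: Hashable, value: Any) -> None:
        self._entries[key] = (value, self._clock())

    def invalidate(self, key: Hashable) -> None:
        """Drop a key immediately, e.g. after this instance writes a new value."""
        self._entries.pop(key, None)


if __name__ == "__main__":
    now = [0.0]  # fake clock for the demo
    cache = LocalTTLCache(ttl_seconds=30, clock=lambda: now[0])
    cache.put("price:42", 19.99)
    print(cache.get("price:42"))  # 19.99
    now[0] = 31.0  # 31 seconds later
    print(cache.get("price:42"))  # None: expired, caller must reload
```

A shorter TTL narrows the staleness window at the cost of more reloads from the backing store.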

Use Cases for In-Memory Cache

In-memory cache excels in high-speed data retrieval scenarios like real-time analytics, session management, and distributed applications where low latency is critical. It supports large-scale, multi-user environments by storing frequently accessed data directly in RAM across servers, enhancing performance and scalability. Use cases include e-commerce platforms, gaming leaderboards, and financial trading systems requiring rapid stateful access.

Use Cases for Local Cache

Local cache is ideal for applications requiring ultra-fast data retrieval with minimal latency, such as user session management and real-time analytics on a single server. It excels in scenarios where data consistency across distributed systems is less critical, enabling immediate access to frequently used objects without network overhead. Use cases include desktop applications, mobile apps, and edge computing environments where quick, local access to cached data enhances performance and responsiveness.

Security Implications

An in-memory cache holds data in RAM, often on shared servers reachable over the network, so it is exposed to unauthorized access if authentication, access controls, and encryption in transit are not enforced, and sensitive values held in memory can be targeted by memory scraping. A local cache that persists to disk, such as a browser or file cache, is less exposed to attacks on volatile memory but carries persistence risks: data can leak through residual files or be retrieved by unauthorized users if disk encryption is weak. Effective security strategies balance these risks with robust authentication, encryption, and secure cache invalidation policies tailored to the chosen caching approach.
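One way to apply authentication to cached data is to store each value with a message authentication code, so a reader can reject entries that were modified by anyone without the secret key. The sketch below uses Python's standard `hmac` module and binds each tag to its cache key, so a valid entry cannot be copied under a different key. Key handling is simplified (the hard-coded secret is hypothetical; a real deployment would load it from a secrets manager), and this protects integrity only: values are not encrypted, so confidentiality still requires encryption at rest or in transit.

```python
import hashlib
import hmac

TAG_SIZE = 32  # bytes in an HMAC-SHA256 digest


def _tag(secret: bytes, cache_key: str, value: bytes) -> bytes:
    # Length-prefix the cache key so (key, value) pairs cannot be ambiguously re-split.
    key_bytes = cache_key.encode("utf-8")
    message = len(key_bytes).to_bytes(4, "big") + key_bytes + value
    return hmac.new(secret, message, hashlib.sha256).digest()


def seal(secret: bytes, cache_key: str, value: bytes) -> bytes:
    """Return tag + value, ready to store in the cache under cache_key."""
    return _tag(secret, cache_key, value) + value


def unseal(secret: bytes, cache_key: str, blob: bytes) -> bytes:
    """Check the tag in constant time and return the value, or raise on tampering."""
    tag, value = blob[:TAG_SIZE], blob[TAG_SIZE:]
    if not hmac.compare_digest(tag, _tag(secret, cache_key, value)):
        raise ValueError("cache entry failed integrity check")
    return value


if __name__ == "__main__":
    secret = b"example-secret"  # hypothetical; never hard-code real keys
    blob = seal(secret, "session:abc", b'{"user_id": 7}')
    print(unseal(secret, "session:abc", blob))  # b'{"user_id": 7}'
    try:
        unseal(secret, "session:abc", blob[:-1] + b"!")
    except ValueError as err:
        print(err)  # cache entry failed integrity check
```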

Choosing the Right Cache Solution

Choosing the right cache solution depends on factors such as response time requirements, data persistence, and scalability needs. In-memory cache offers ultra-fast data access by storing information directly in RAM, making it ideal for applications demanding low latency and high throughput. Local cache, stored closer to the client or application instance, reduces network overhead but may encounter consistency challenges in distributed environments.

In-Memory Cache Infographic

libterm.com
