Multithreading allows multiple threads to run concurrently within a single program, improving application performance and responsiveness. On multicore processors, threads can execute in parallel, making efficient use of CPU resources for complex, resource-intensive workloads. This article compares multithreading with polling and covers the benefits, trade-offs, and best practices of each.

Comparison Table

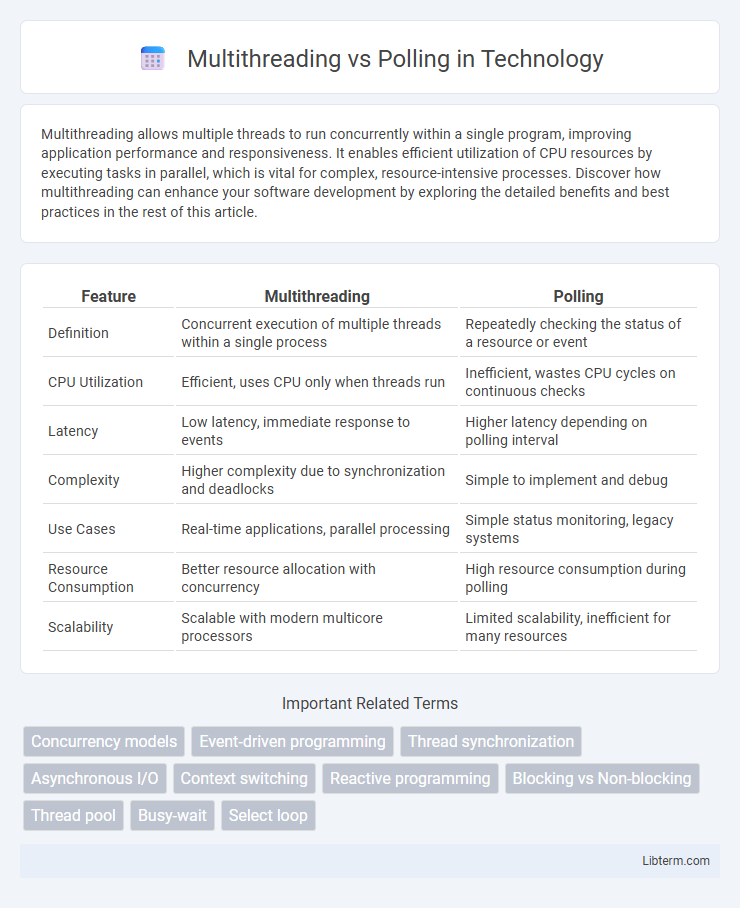

| Feature | Multithreading | Polling |
|---|---|---|
| Definition | Concurrent execution of multiple threads within a single process | Repeatedly checking the status of a resource or event |
| CPU Utilization | Efficient: threads waiting for work block and use no CPU | Inefficient: checks consume CPU cycles even when nothing has changed |
| Latency | Low: a blocked thread can be woken as soon as an event occurs | Higher: an event may go unnoticed for up to one polling interval |
| Complexity | Higher: requires synchronization; risk of race conditions and deadlocks | Low: simple to implement and debug |
| Use Cases | Real-time applications, parallel processing | Simple status monitoring, legacy systems |
| Resource Consumption | Better resource allocation through concurrency, though each thread needs its own stack | High CPU consumption when the polling interval is short |
| Scalability | Scales with modern multicore processors | Limited: cost grows with the number of resources polled |

Introduction to Multithreading and Polling

Multithreading enables concurrent execution of multiple threads within a single process, improving resource utilization and application responsiveness by allowing tasks to run in parallel. Polling involves repeatedly checking the status of a resource or condition at regular intervals, which can lead to inefficient CPU usage and delayed response times compared to event-driven approaches. Understanding these concepts is crucial for optimizing performance in real-time and multitasking applications.

Core Concepts: What is Multithreading?

Multithreading is a programming technique that allows multiple threads to execute concurrently within a single process. The threads share resources such as memory. This makes communication between them cheap, but it also means that access to shared data must be synchronized. On multicore processors, threads can run in parallel on separate cores, reducing idle time and enabling efficient multitasking. This makes multithreading a core technique for real-time applications, asynchronous operations, and high-throughput software systems.
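
As a minimal sketch in Python (the function name `count_in_parallel` is hypothetical), the following starts several threads that update one shared counter. Because the threads share the process's memory, each increment is guarded by a `threading.Lock`; without it, concurrent read-modify-write steps could lose updates. In CPython, the global interpreter lock (GIL) prevents threads from running Python bytecode in parallel. This example therefore illustrates shared-memory concurrency rather than a multicore speedup.

```python
import threading


def count_in_parallel(num_threads: int, increments_per_thread: int) -> int:
    """Run several threads that all update one shared counter (illustrative helper)."""
    counter = 0
    lock = threading.Lock()

    def worker() -> None:
        nonlocal counter
        for _ in range(increments_per_thread):
            with lock:  # make each read-modify-write of the shared counter atomic
                counter += 1

    threads = [threading.Thread(target=worker) for _ in range(num_threads)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()  # wait until every worker has finished
    return counter


if __name__ == "__main__":
    print(count_in_parallel(4, 10_000))
```

Calling `count_in_parallel(4, 10_000)` returns 40000, because the lock ensures that no increment is lost.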

Understanding Polling Mechanisms

Polling mechanisms repeatedly check the status of a resource or event at regular intervals. A thread that blocks until it is notified uses no CPU while it waits. A polling loop, by contrast, consumes CPU time even when no new data is available, and it only notices a change at its next check. This creates a trade-off: a short interval wastes CPU cycles, while a long interval increases latency. Polling is simple to implement, but these costs limit its scalability and responsiveness in real-time applications.
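
A minimal polling loop might look like the sketch below. It assumes a `threading.Event` is used purely as a thread-safe "ready" flag. The helper name `poll_until_set` and the 0.5 s / 0.05 s timings are illustrative. The loop wakes every `interval` seconds to check the flag, so most iterations find nothing new, and a change is only noticed at the next check.

```python
import threading
import time


def poll_until_set(flag: threading.Event, interval: float) -> int:
    """Poll a flag every `interval` seconds; return how many checks were needed (hypothetical helper)."""
    checks = 0
    while True:
        checks += 1
        if flag.is_set():  # status check: is the resource ready yet?
            return checks
        time.sleep(interval)  # nothing new; wait one interval and check again


if __name__ == "__main__":
    ready = threading.Event()
    # Simulate an external event that becomes ready after about 0.5 s.
    threading.Timer(0.5, ready.set).start()
    n = poll_until_set(ready, interval=0.05)
    print(f"checked {n} times")  # most of these checks found nothing new
```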

Key Differences Between Multithreading and Polling

Multithreading enables concurrent execution of multiple threads within a single process, improving resource utilization and responsiveness. Polling repeatedly checks a resource's status at intervals, which often leads to higher CPU usage and latency. Multithreading minimizes idle time: when one thread blocks waiting for I/O or an event, the operating system can switch to another runnable thread. Polling, by contrast, spends processor cycles on status queries that usually find nothing new. In performance-critical applications, multithreading generally offers better scalability and responsiveness than the simpler but less efficient polling technique.
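
In contrast to polling, a thread can instead block until it is notified. The sketch below uses a hypothetical helper, `wait_for_event`, and simulates 0.2 s of work. It calls `threading.Event.wait`, which suspends the calling thread without repeated status queries until another thread calls `set()` or the timeout expires.

```python
import threading
import time


def wait_for_event(timeout: float) -> tuple[bool, float]:
    """Block on an Event instead of polling it; return (was_set, seconds_waited)."""
    done = threading.Event()

    def producer() -> None:
        time.sleep(0.2)  # simulate work or I/O that finishes later
        done.set()       # wake every thread blocked in done.wait()

    threading.Thread(target=producer).start()
    start = time.monotonic()
    # The calling thread is suspended here until set() is called or the timeout expires.
    was_set = done.wait(timeout)
    return was_set, time.monotonic() - start
```

The waiting thread resumes when `set()` is called rather than at the next polling interval, and it performs no status checks in between.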

Performance Comparison: Multithreading vs Polling

Multithreading enhances performance by executing multiple threads concurrently. This reduces idle CPU time and improves resource utilization compared to polling, which repeatedly checks for events and wastes CPU cycles on checks that find nothing. Polling also adds latency, because an event is only noticed at the next check. With multithreading, a thread can block until an event occurs and be woken immediately, which results in faster response times and efficient multitasking. In high-demand applications, multithreading offers better scalability and throughput than polling, whose overhead grows as load increases.
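
The latency and CPU cost of polling can be estimated with a simple model. The model makes four assumptions: a polling interval $T$; events that arrive at random times, uniformly distributed relative to the polling schedule; $N$ resources polled independently; and negligible time per check. Under these assumptions, the added detection delay $d$ and the total check rate $R_{\text{checks}}$ are:

$$
d_{\max} = T, \qquad \mathbb{E}[d] = \frac{T}{2}, \qquad R_{\text{checks}} = \frac{N}{T}
$$

For example, with $T = 100$ ms the added delay averages 50 ms (at most 100 ms), and each resource costs 10 checks per second. Polling 1,000 resources every 10 ms means 100,000 checks per second, whether or not anything has changed. A thread that blocks and is woken on the event avoids both the interval-dependent delay and the idle checks, apart from scheduling overhead.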

Advantages of Multithreading in Application Development

Multithreading enhances application performance by allowing multiple threads to execute concurrently, improving responsiveness and resource utilization. It enables efficient CPU usage by running background tasks alongside the main thread, reducing idle time and increasing throughput. Multithreading can also simplify asynchronous operations such as overlapping I/O requests, leading to more scalable and responsive applications than polling-based approaches.
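
For I/O-bound background work, Python's `concurrent.futures.ThreadPoolExecutor` is one common way to apply this. In the sketch below, `fake_download` and `fetch_all` are hypothetical names, and `fake_download` sleeps instead of performing real network I/O. While one worker thread is waiting, the others proceed, so the waits overlap rather than accumulate.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def fake_download(item_id: int) -> str:
    """Hypothetical I/O-bound task: the sleep stands in for a network call."""
    time.sleep(0.1)
    return f"item-{item_id}"


def fetch_all(ids: list[int], workers: int = 4) -> list[str]:
    # While one thread sleeps in simulated I/O, the other workers keep running.
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(fake_download, ids))  # results keep input order
```

With four workers, `fetch_all([1, 2, 3, 4])` returns `['item-1', 'item-2', 'item-3', 'item-4']` after roughly 0.1 s of simulated waiting, instead of roughly 0.4 s if the calls ran sequentially. `pool.map` returns results in input order even though the tasks run concurrently.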

Limitations and Challenges of Polling

Polling can consume excessive CPU resources because it checks for events continuously, even when nothing has changed. It also introduces latency. Because the system relies on fixed intervals rather than immediate event notifications, an event can go unnoticed for up to one full interval, which undermines real-time responsiveness. Finally, polling scales poorly with many concurrent event sources: every resource must be checked on every cycle, which increases overhead and resource contention.
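
The scaling problem can be made concrete with a small simulation. This sketch is illustrative only: `poll_round_robin` is a hypothetical function, and readiness is modeled as a random chance `ready_prob` per check. Because it polls every resource on every tick, the number of checks is always `num_resources * ticks`, regardless of how many events actually occur.

```python
import random


def poll_round_robin(num_resources: int, ticks: int, ready_prob: float,
                     seed: int = 0) -> tuple[int, int]:
    """Simulate polling every resource on every tick; return (checks, events_found)."""
    rng = random.Random(seed)  # seeded so runs are reproducible
    checks = events = 0
    for _ in range(ticks):
        for _ in range(num_resources):
            checks += 1                    # one status query per resource per tick
            if rng.random() < ready_prob:  # the resource happened to be ready
                events += 1
    return checks, events
```

For instance, `poll_round_robin(1000, 100, 0.001)` performs exactly 100,000 checks. Only on the order of 100 of them (about 0.1%) are expected to find a ready resource.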

Use Cases: When to Use Multithreading vs Polling

Multithreading is ideal for applications requiring concurrent execution of multiple tasks, such as real-time data processing, keeping a user interface responsive, or parallel computations, where tasks can run independently without blocking each other. Polling suits scenarios involving periodic status checks, such as monitoring hardware sensors or waiting for external events that provide no notification mechanism. In these cases simplicity matters more than responsiveness. Use multithreading when minimizing latency and maximizing resource utilization are crucial; use polling when each check is cheap and event timing is predictable.
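
When polling is the right fit, a bounded loop with an explicit timeout keeps it predictable. The helper below is a hypothetical sketch, not a standard-library function. It calls a caller-supplied `predicate` (for example, a function that reads a sensor) every `interval` seconds and gives up after `timeout` seconds.

```python
import time
from typing import Callable


def poll_until(predicate: Callable[[], bool], interval: float, timeout: float) -> bool:
    """Check `predicate` every `interval` seconds until it is true or `timeout` expires.

    Returns True if the condition was observed, False on timeout (hypothetical helper).
    """
    deadline = time.monotonic() + timeout
    while True:
        if predicate():
            return True
        remaining = deadline - time.monotonic()
        if remaining <= 0:
            return False
        time.sleep(min(interval, remaining))  # never sleep past the deadline
```

A call might look like `poll_until(sensor_ready, interval=0.5, timeout=10.0)`, where `sensor_ready` is a hypothetical function returning a boolean. Using `time.monotonic()` rather than `time.time()` keeps the deadline correct even if the system clock is adjusted.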

Best Practices for Efficient Resource Management

Multithreading enables concurrent execution of tasks across multiple threads, improving CPU utilization and responsiveness for both I/O-bound and CPU-bound operations. Polling continuously checks for resource availability or events, which can lead to wasted CPU cycles and increased latency if not managed carefully. Best practices include:

- minimizing polling loops;
- coordinating threads with synchronization primitives such as locks, condition variables, and thread-safe queues;
- using asynchronous I/O or event-driven programming to optimize resource management and system performance.
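
One way to replace a polling loop with a synchronization primitive is a producer/consumer pair. In the sketch below, `run_pipeline` is a hypothetical name and squaring stands in for real work. The producer and consumer communicate through Python's thread-safe `queue.Queue`, which handles locking internally. The consumer blocks in `q.get()` instead of repeatedly checking whether work has arrived, and a sentinel object tells it when to stop. For many concurrent I/O operations, Python's `asyncio` offers an event-driven alternative.

```python
import queue
import threading

_STOP = object()  # sentinel telling the consumer to exit


def run_pipeline(items: list[int]) -> list[int]:
    """Hand work from a producer to a consumer thread over a thread-safe queue."""
    q: queue.Queue = queue.Queue(maxsize=8)  # bounded: the producer blocks if the consumer lags
    results: list[int] = []

    def consumer() -> None:
        while True:
            item = q.get()  # blocks until an item is available; no polling
            if item is _STOP:
                break
            results.append(item * item)  # stand-in for real processing

    worker = threading.Thread(target=consumer)
    worker.start()
    for item in items:
        q.put(item)  # blocks while the queue is full
    q.put(_STOP)
    worker.join()  # after join, reading `results` from this thread is safe
    return results
```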

Conclusion: Choosing the Right Approach for Your Needs

Multithreading offers efficient parallel execution and improved responsiveness for applications requiring concurrent tasks, while polling provides a simpler implementation suitable for low-frequency or less time-sensitive operations. The choice depends on application complexity, resource availability, and latency requirements. Multithreading is preferred for high-performance needs; polling suits straightforward designs where events are infrequent or no notification mechanism is available. Evaluating system constraints and task criticality helps ensure that the chosen approach aligns with development goals and runtime efficiency.

Multithreading Infographic

libterm.com