Masking techniques play a crucial role in data protection by selectively hiding or replacing the parts of a record that must not be exposed. Used well, masking gives precise control over what each system or user can see without disrupting the processes that depend on the data. Read on to see how masking compares with tokenization and when each approach fits.

Table of Comparison

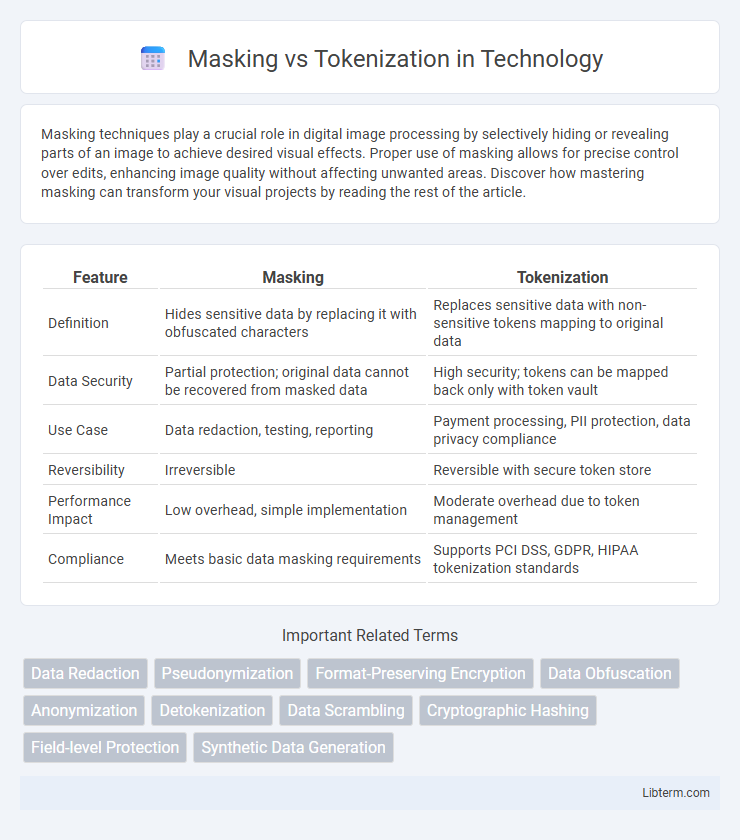

| Feature | Masking | Tokenization |
|---|---|---|
| Definition | Hides sensitive data by replacing it with obfuscated characters | Replaces sensitive data with non-sensitive tokens that map back to the original data |
| Data Security | Partial protection; some characters (e.g., the last four digits) may stay visible, but the original cannot be recovered from masked data | High security; tokens can be mapped back only through the token vault |
| Use Case | Data redaction, testing, reporting | Payment processing, PII protection, data privacy compliance |
| Reversibility | Irreversible | Reversible through the secure token vault |
| Performance Impact | Low overhead, simple implementation | Moderate overhead due to token vault management |
| Compliance | Helps meet GDPR and HIPAA requirements for data used in testing and reporting | Commonly used to reduce PCI DSS scope; also supports GDPR and HIPAA data protection efforts |

Introduction to Data Protection Techniques

Masking replaces sensitive data with obfuscated values to prevent exposure during processing or display, preserving the data format while concealing the actual content. Tokenization substitutes sensitive information with non-sensitive placeholders called tokens, which can be mapped back only through a secure token vault, so systems that handle tokens never need to store or process the original data. Both techniques reduce the risk of unauthorized access and help organizations comply with privacy regulations such as GDPR and HIPAA.

What is Masking?

Masking is a data protection technique that hides sensitive information by replacing it with characters or symbols, ensuring that the original data is unreadable and secure during processing or storage. Commonly used in data privacy and security frameworks, masking prevents unauthorized access while maintaining data format and usability for testing or analysis. Unlike tokenization, which substitutes data with unique tokens, masking obscures the data directly, often using methods like character substitution, shuffling, or nulling out parts of the data.
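The three methods named above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not a production masking tool: the function names `substitute`, `shuffle_column`, and `null_out` are hypothetical, and keeping the last four characters visible is an assumed default.

```python
import random
from typing import Optional


def substitute(value: str, visible: int = 4, mask_char: str = "*") -> str:
    """Character substitution: hide all but the last `visible` characters."""
    if visible < 0:
        raise ValueError("visible must be non-negative")
    # Values too short to keep a visible tail are hidden completely.
    if len(value) <= visible:
        return mask_char * len(value)
    hidden = len(value) - visible
    return mask_char * hidden + value[hidden:]


def shuffle_column(values: list[str], seed: Optional[int] = None) -> list[str]:
    """Shuffling: permute a column's values across rows.

    The real values stay in the dataset but are detached from their records;
    any single value may still land in its original row by chance.
    """
    shuffled = list(values)
    random.Random(seed).shuffle(shuffled)
    return shuffled


def null_out(value: str) -> None:
    """Nulling out: drop the value entirely."""
    return None


print(substitute("4111111111111111"))  # 12 asterisks followed by 1111
print(null_out("123-45-6789"))         # None
```

Shuffling is the weakest of the three, since the real values remain in the dataset; it mainly breaks the link between a value and its record.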

What is Tokenization?

Tokenization is the process of converting sensitive data into non-sensitive tokens that retain essential information for processing but lack exploitable value. Unlike masking, which obscures data by partially hiding it, tokenization replaces the original data with unique identifiers that are mapped back through a secure token vault. This method is widely used in payment processing and data security to protect credit card numbers, personal identification, and other confidential information while maintaining data usability.
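To make the vault concrete, here is a minimal in-memory sketch in Python. The `TokenVault` class, its `tok_` prefix, and the 128-bit random token length are assumptions for illustration; a production vault is typically a separate, hardened service with encrypted storage and access control.

```python
import secrets


class TokenVault:
    """Hypothetical in-memory token vault.

    The mappings are kept in plain dictionaries for brevity; a real vault
    would encrypt and access-control them.
    """

    def __init__(self) -> None:
        self._token_to_value: dict[str, str] = {}
        self._value_to_token: dict[str, str] = {}

    def tokenize(self, value: str) -> str:
        # Reuse the existing token so the same input always maps to the same token.
        if value in self._value_to_token:
            return self._value_to_token[value]
        # Random token: it has no mathematical relationship to the original value.
        token = "tok_" + secrets.token_hex(16)
        while token in self._token_to_value:  # guard against a very unlikely collision
            token = "tok_" + secrets.token_hex(16)
        self._token_to_value[token] = value
        self._value_to_token[value] = token
        return token

    def detokenize(self, token: str) -> str:
        # Only the vault can map a token back; unknown tokens raise KeyError.
        return self._token_to_value[token]


vault = TokenVault()
token = vault.tokenize("4111111111111111")
print(vault.detokenize(token))  # 4111111111111111
```

Because tokens come from `secrets` rather than from the card number itself, a stolen token reveals nothing without access to the vault's mapping.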

Key Differences Between Masking and Tokenization

Masking replaces sensitive data with a masked value that retains the original data's format, concealing confidential information while still allowing limited processing. Tokenization substitutes sensitive data with a unique, non-sensitive token that maps back to the original data in a secure token vault, so the actual data is not exposed during transaction processing. The key difference is reversibility: masking is an irreversible transformation suited to limited data use, whereas tokenization is reversible through the vault, which makes it suitable for secure data storage and for compliance with regulations like PCI DSS.
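The difference in reversibility can be shown with a short Python sketch. The `mask` and `tokenize` helpers and the plain-dictionary `vault` are hypothetical stand-ins, not a real library's API; the point is only that masking discards information while tokenization keeps it recoverable through the vault.

```python
import secrets


def mask(pan: str) -> str:
    # Keep only the last four digits; everything else is overwritten and lost.
    return "*" * (len(pan) - 4) + pan[-4:]


vault: dict[str, str] = {}  # hypothetical token -> original value mapping


def tokenize(pan: str) -> str:
    token = secrets.token_hex(8)
    vault[token] = pan  # the original survives, but only inside the vault
    return token


card_a = "4111111111111111"
card_b = "4242424242421111"  # a different card with the same last four digits

# Masking is lossy: both cards produce the identical masked value,
# so the original cannot be worked out from it.
print(mask(card_a) == mask(card_b))  # True

# Tokenization is reversible, but only by looking the token up in the vault.
token_a = tokenize(card_a)
print(vault[token_a] == card_a)  # True
```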

Use Cases for Masking

Masking is primarily used in data privacy scenarios to hide sensitive information, such as personally identifiable information (PII) or payment card details, in datasets used for development, testing, or analytics without exposing the original data. This technique supports compliance with regulations like GDPR and HIPAA by rendering sensitive data unreadable to unauthorized users. Tokenization, by contrast, replaces sensitive data with non-sensitive placeholders and is most commonly used in payment processing systems to minimize the risk of data breaches.
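A typical masking pass over records copied into a test environment might look like the Python sketch below. The field names, the rule of keeping the first character of an email's local part plus its domain, and the helper names `mask_email` and `mask_record` are all assumptions chosen for illustration.

```python
def mask_email(email: str) -> str:
    """Keep the first character of the local part and the full domain."""
    local, sep, domain = email.partition("@")
    if not sep or not domain:
        # Not a well-formed address: hide everything.
        return "*" * len(email)
    return local[:1] + "*" * max(len(local) - 1, 0) + "@" + domain


def mask_record(record: dict[str, str], fields: set[str]) -> dict[str, str]:
    """Return a masked copy of `record`; the original dict is left untouched."""
    masked = dict(record)
    for field in fields:
        if field not in masked:
            continue
        if field == "email":
            masked[field] = mask_email(masked[field])
        else:
            # Generic fallback: replace every character, preserving length.
            masked[field] = "*" * len(masked[field])
    return masked


customer = {"name": "Alice", "email": "alice@example.com", "plan": "pro"}
print(mask_record(customer, {"name", "email"}))
# {'name': '*****', 'email': 'a****@example.com', 'plan': 'pro'}
```

Keeping the email domain preserves a realistic shape for testing and analytics (for example, counting users per domain) while hiding the identifying part.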

Use Cases for Tokenization

Tokenization is widely used to protect sensitive information such as credit card numbers and personal identification details by replacing them with non-sensitive placeholders, often in a way that preserves the data's format for downstream processing. By minimizing exposure to the actual sensitive data, it enables secure storage and transmission and supports compliance with regulations like PCI DSS and GDPR. Unlike masking, tokenization keeps the original value recoverable through the token vault, so systems can run analytics and process transactions on tokens while only authorized processes retrieve the real data.
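When tokens must fit systems that expect a card-number format, a vault can issue format-preserving tokens. The Python sketch below assumes digit-only tokens of the same length that keep the last four digits; the class name, the 12-digit minimum, and the last-four rule are illustrative assumptions rather than a standard.

```python
import secrets


class FormatPreservingVault:
    """Hypothetical vault that issues digit-only tokens of the same length as the
    card number and keeps its last four digits visible."""

    def __init__(self) -> None:
        # Plain dictionaries for brevity; a real vault would protect this mapping.
        self._by_token: dict[str, str] = {}
        self._by_value: dict[str, str] = {}

    def tokenize(self, pan: str) -> str:
        if not pan.isdigit() or len(pan) < 12:
            raise ValueError("expected a card number of at least 12 digits")
        if pan in self._by_value:
            return self._by_value[pan]  # one stable token per card
        while True:
            # Random digits replace everything except the last four.
            random_part = "".join(secrets.choice("0123456789") for _ in range(len(pan) - 4))
            token = random_part + pan[-4:]
            # Retry on a collision or if the token happens to equal the real number.
            if token != pan and token not in self._by_token:
                break
        self._by_token[token] = pan
        self._by_value[pan] = token
        return token

    def detokenize(self, token: str) -> str:
        return self._by_token[token]


vault = FormatPreservingVault()
token = vault.tokenize("4111111111111111")
# A legacy system that validates "16 digits" or prints the last four keeps working.
print(len(token), token.isdigit(), token[-4:])  # 16 True 1111
print(vault.detokenize(token))                  # 4111111111111111
```

Production schemes typically also ensure that tokens cannot be mistaken for real card numbers; this sketch only guarantees that a token never reproduces the card it stands for.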

Security Implications of Each Approach

Masking obfuscates sensitive data by replacing it with a similar but non-sensitive value, reducing exposure risk in environments such as testing or analytics, while maintaining data format consistency. Tokenization replaces sensitive data with randomly generated tokens, storing the original information securely in a separate vault, thereby minimizing data breach impact as tokens have no exploitable value outside the securing system. Both methods enhance security, but tokenization offers stronger protection against data theft by isolating sensitive information, whereas masking primarily mitigates insider threats and unauthorized data access.

Compliance Considerations: Masking vs Tokenization

Masking obscures sensitive data by replacing it with fictitious characters while maintaining the original format; this helps meet GDPR and HIPAA requirements for data used outside production, at the cost of reduced usability. Tokenization replaces sensitive data with unique, non-sensitive tokens that preserve referential integrity, providing strong protection for payment card information under PCI DSS. Both methods support data security and regulatory compliance, but tokenization often provides more robust protection because the sensitive data is removed from every system except the token vault.
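Referential integrity here means that the same sensitive value always yields the same token, so tokenized datasets can still be joined. The Python sketch below assumes a single shared vault and two hypothetical tables, orders and refunds, keyed by card number; the `TokenVault` class is an illustrative stand-in.

```python
import secrets


class TokenVault:
    """Hypothetical minimal vault: one stable random token per distinct value."""

    def __init__(self) -> None:
        self._by_value: dict[str, str] = {}
        self._by_token: dict[str, str] = {}

    def tokenize(self, value: str) -> str:
        if value not in self._by_value:
            token = secrets.token_hex(12)
            while token in self._by_token:  # guard against a very unlikely collision
                token = secrets.token_hex(12)
            self._by_value[value] = token
            self._by_token[token] = value
        return self._by_value[value]


vault = TokenVault()

# Two hypothetical tables that both reference card numbers.
orders = [("A-1", "4111111111111111"), ("A-2", "4242424242424242")]
refunds = [("R-9", "4111111111111111")]

# Replace card numbers with tokens before the data leaves the secure zone.
tokenized_orders = [(oid, vault.tokenize(pan)) for oid, pan in orders]
tokenized_refunds = [(rid, vault.tokenize(pan)) for rid, pan in refunds]

# The same card always yields the same token, so the join still works.
refunded_orders = [
    (oid, rid)
    for oid, order_token in tokenized_orders
    for rid, refund_token in tokenized_refunds
    if order_token == refund_token
]
print(refunded_orders)  # [('A-1', 'R-9')]
```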

Choosing the Right Technique for Your Organization

Choosing between masking and tokenization depends on the type of sensitive data and the compliance requirements your organization faces. Masking obscures data for non-production environments, preserving the format while hiding actual values; tokenization replaces data with tokens that can be reversed only through the token vault, which makes it well suited to protecting payment card information under PCI DSS. Consider data usage scenarios, security goals, and system integration complexity when deciding: tokenization requires maintaining a token vault, whereas masking is often simpler to implement for testing purposes. Finally, review regulatory frameworks like GDPR and HIPAA to ensure your chosen method aligns with mandated data protection standards and your risk management strategy.

Future Trends in Data Protection Technologies

Masking and tokenization continue to evolve to meet growing data privacy demands, with trends pointing toward stronger cryptographic methods and AI-driven adaptive protection. Dynamic data masking, which applies masks at query time based on the requesting user, and decentralized token vaults are expected to improve real-time data security in cloud and hybrid environments. Blockchain-based token management and advances in homomorphic encryption, which allows computation on encrypted data, could further enable secure data access without compromising usability.
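Dynamic data masking applies the mask when data is read, according to who is asking, instead of rewriting the stored values; several database engines already offer it as a built-in feature. The Python sketch below imitates the idea in application code; the role names, the `Customer` type, and the SSN mask pattern are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Customer:
    name: str
    ssn: str  # stored in full; never rewritten by the masking logic


# Hypothetical policy: only these roles may see the unmasked value.
UNMASKED_ROLES = {"fraud_analyst"}


def read_ssn(customer: Customer, role: str) -> str:
    """Return the SSN as the given role is allowed to see it."""
    if role in UNMASKED_ROLES:
        return customer.ssn
    # Everyone else sees only the last four digits.
    return "***-**-" + customer.ssn[-4:]


alice = Customer(name="Alice", ssn="123-45-6789")
print(read_ssn(alice, "support_agent"))  # ***-**-6789
print(read_ssn(alice, "fraud_analyst"))  # 123-45-6789
```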

Masking Infographic

libterm.com