Orthogonal describes concepts, vectors, or signals that are perpendicular to or independent of each other, so that one has no component along another. In mathematics, engineering, and computer science, orthogonality simplifies analysis because components can be studied separately. This article compares orthogonal and orthonormal vectors and shows where each is used.

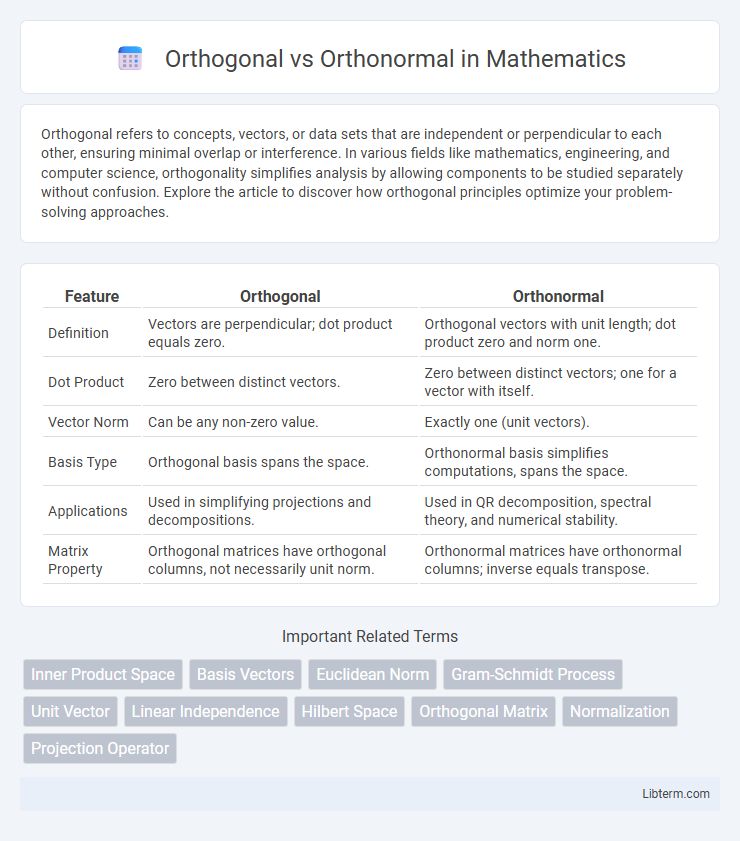

Table of Comparison

| Feature | Orthogonal | Orthonormal |
|---|---|---|
| Definition | Vectors are perpendicular; dot product equals zero. | Orthogonal vectors with unit length; dot product zero and norm one. |
| Dot Product | Zero between distinct vectors. | Zero between distinct vectors; one for a vector with itself. |
| Vector Norm | Can be any non-zero value. | Exactly one (unit vectors). |
| Basis Type | Orthogonal basis spans the space. | Orthonormal basis spans the space and simplifies computations. |
| Applications | Used in simplifying projections and decompositions. | Used in QR decomposition, spectral theory, and numerically stable algorithms. |
| Matrix Property | A matrix \( A \) whose columns are orthogonal but not unit length has no standard name; \( A^T A \) is diagonal but not the identity. | A square matrix with orthonormal columns is called an orthogonal matrix; its inverse equals its transpose. |

Introduction to Orthogonality and Orthonormality

Orthogonality refers to the condition where two vectors have a zero dot product, indicating they are perpendicular in vector space. Orthonormality extends this concept by requiring vectors to be both orthogonal and of unit length, ensuring each vector's magnitude is one. These properties are fundamental in linear algebra for simplifying computations in vector spaces, such as in coordinate transformations and decomposition methods.

Defining Orthogonal Vectors

Orthogonal vectors are vectors in an inner product space whose inner product (the dot product, in Euclidean space) equals zero, indicating they are perpendicular to each other. This condition defines orthogonality and means the projection of one vector onto the other is the zero vector. Unlike orthonormal vectors, orthogonal vectors do not necessarily have unit length.
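
The zero dot product test can be sketched in a few lines of plain Python. The helper names `dot`, `norm`, and `is_orthogonal` are illustrative, and the absolute tolerance `tol` is an assumption added to cope with floating-point rounding; for vectors of very large or very small magnitude a relative tolerance would be a better fit.

```python
import math

def dot(u, v):
    """Euclidean dot product of two equal-length sequences."""
    if len(u) != len(v):
        raise ValueError("vectors must have the same length")
    return sum(a * b for a, b in zip(u, v))

def norm(u):
    """Euclidean length of a vector."""
    return math.sqrt(dot(u, u))

def is_orthogonal(u, v, tol=1e-12):
    """True when the dot product is zero up to an absolute tolerance."""
    return abs(dot(u, v)) <= tol

u = (1.0, 2.0)
v = (-2.0, 1.0)
print(dot(u, v))            # 0.0
print(is_orthogonal(u, v))  # True
print(norm(u), norm(v))     # both equal sqrt(5), so the pair is not orthonormal
```

The pair is perpendicular even though neither vector has length one, which is exactly the case that separates orthogonal from orthonormal.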

Understanding Orthonormal Vectors

Orthonormal vectors are a set of vectors that are both orthogonal and of unit length, meaning each vector has magnitude one and their pairwise dot products equal zero. This property simplifies many mathematical operations like projections, as the vectors maintain mutual perpendicularity and normalized scale. Understanding orthonormal vectors is fundamental in linear algebra applications, including QR decomposition, eigenvalue problems, and defining basis sets in vector spaces.
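
Any orthogonal set of nonzero vectors can be turned into an orthonormal one by dividing each vector by its length. The following is a minimal sketch of that step; `normalize` and `is_orthonormal` are hypothetical helper names and the tolerance is an assumption.

```python
import math
from itertools import combinations

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def normalize(u):
    """Scale a nonzero vector to unit length."""
    length = math.sqrt(dot(u, u))
    if length == 0.0:
        raise ValueError("the zero vector cannot be normalized")
    return tuple(a / length for a in u)

def is_orthonormal(vectors, tol=1e-12):
    """Check unit length for every vector and zero dot product for every pair."""
    unit = all(abs(dot(v, v) - 1.0) <= tol for v in vectors)
    perpendicular = all(abs(dot(u, v)) <= tol for u, v in combinations(vectors, 2))
    return unit and perpendicular

orthogonal_set = [(3.0, 0.0), (0.0, 4.0)]
orthonormal_set = [normalize(v) for v in orthogonal_set]

print(is_orthonormal(orthogonal_set))   # False: lengths are 3 and 4
print(is_orthonormal(orthonormal_set))  # True
print(orthonormal_set)                  # [(1.0, 0.0), (0.0, 1.0)]
```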

Key Differences Between Orthogonal and Orthonormal

Orthogonal vectors are perpendicular with a zero dot product, but they do not necessarily have unit length, whereas orthonormal vectors are both perpendicular and normalized to unit length. Orthogonal sets simplify calculations in linear algebra because the cross terms between distinct vectors vanish. Orthonormal sets go one step further: no division by squared norms is needed, so the coefficient of a vector along each basis direction is a plain dot product. The key distinction is that orthonormal vectors have a magnitude of one, which makes them the usual choice for constructing bases in vector spaces.
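
The practical difference shows up when expanding a vector in a basis. As a sketch (function names are illustrative, not from any library): with an orthogonal basis each coefficient is the dot product divided by the squared length of the basis vector, while with an orthonormal basis the division disappears.

```python
def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def coords_orthogonal(x, basis):
    """Coefficients of x in an orthogonal basis: (x . b) / (b . b)."""
    return [dot(x, b) / dot(b, b) for b in basis]

def coords_orthonormal(x, basis):
    """Coefficients of x in an orthonormal basis: just x . q."""
    return [dot(x, q) for q in basis]

def combine(coeffs, basis):
    """Rebuild a vector as the sum of coefficient * basis vector."""
    dim = len(basis[0])
    return [sum(c * b[i] for c, b in zip(coeffs, basis)) for i in range(dim)]

x = (3.0, 4.0)

orthogonal_basis = [(1.0, 1.0), (1.0, -1.0)]   # perpendicular, length sqrt(2)
c = coords_orthogonal(x, orthogonal_basis)
print(c)                             # [3.5, -0.5]
print(combine(c, orthogonal_basis))  # [3.0, 4.0]

standard_basis = [(1.0, 0.0), (0.0, 1.0)]      # orthonormal
print(coords_orthonormal(x, standard_basis))   # [3.0, 4.0]
```

Applying `coords_orthonormal` to `orthogonal_basis` would give the wrong coefficients (7.0 and -1.0), which is a common source of bugs when a basis is assumed to be normalized but is not.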

Mathematical Properties of Orthogonal Sets

Orthogonal sets consist of vectors whose pairwise inner products equal zero, indicating perpendicularity in the vector space. Any orthogonal set of nonzero vectors is linearly independent, and the squared norm of a sum of orthogonal vectors equals the sum of their squared norms (the Pythagorean theorem). These properties simplify projections and basis transformations; for example, a real symmetric matrix can always be diagonalized using an orthogonal set of eigenvectors.
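
The linear independence of an orthogonal set of nonzero vectors follows from a short calculation. The symbols \( \mathbf{v}_1, \dots, \mathbf{v}_k \) and \( c_1, \dots, c_k \) are introduced here for illustration. Suppose some linear combination of the vectors vanishes:

\[ c_1 \mathbf{v}_1 + c_2 \mathbf{v}_2 + \cdots + c_k \mathbf{v}_k = \mathbf{0} \]

Taking the dot product of both sides with any one \( \mathbf{v}_j \) removes every term except the \( j \)-th, because \( \mathbf{v}_j \cdot \mathbf{v}_i = 0 \) for \( i \neq j \):

\[ \mathbf{v}_j \cdot \sum_{i=1}^{k} c_i \mathbf{v}_i = \sum_{i=1}^{k} c_i \, (\mathbf{v}_j \cdot \mathbf{v}_i) = c_j \, \|\mathbf{v}_j\|^2 = 0 \]

Since \( \mathbf{v}_j \neq \mathbf{0} \), its squared norm is positive, so \( c_j = 0 \). This holds for every \( j \), so only the trivial combination gives the zero vector.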

Mathematical Properties of Orthonormal Sets

Orthonormal sets consist of vectors that are both orthogonal and of unit length, satisfying the condition \( \mathbf{u}_i \cdot \mathbf{u}_j = \delta_{ij} \), where \( \delta_{ij} \) is the Kronecker delta (1 when \( i = j \), 0 otherwise). As a result, a change of coordinates between orthonormal bases preserves norms and inner products, and computations in inner product spaces become simpler. Orthonormal eigenvector bases are what diagonalize symmetric matrices, and orthonormal bases in Hilbert spaces give Fourier expansions whose coefficients are plain inner products.
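
Both statements can be checked numerically. The sketch below builds the matrix of all pairwise dot products (the Gram matrix, a name not used in the text above) for an illustrative orthonormal basis of the plane, then compares the length of a vector with the length of its coefficient list in that basis. Values are compared up to rounding, since the basis entries are irrational.

```python
import math

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def gram_matrix(vectors):
    """All pairwise dot products; the identity matrix for an orthonormal set."""
    return [[dot(u, v) for v in vectors] for u in vectors]

s = 1.0 / math.sqrt(2.0)
basis = [(s, s), (s, -s)]   # unit length and mutually perpendicular

for row in gram_matrix(basis):
    print([round(entry, 12) for entry in row])   # rows of the 2x2 identity

x = (3.0, 4.0)
coeffs = [dot(x, q) for q in basis]

print(math.sqrt(dot(x, x)))            # length of x
print(math.sqrt(dot(coeffs, coeffs)))  # length of its coefficients; agrees up to rounding
```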

Applications in Linear Algebra

Orthogonal vectors simplify computations in linear algebra: their dot products vanish, and mutually orthogonal nonzero vectors are automatically linearly independent, which helps when solving systems and building matrix decompositions. Orthonormal vectors also have unit length, which supports numerically stable methods such as QR factorization and spectral analysis. These properties enable efficient algorithms in computer graphics, signal processing, and machine learning for tasks such as dimensionality reduction and data reconstruction.
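
To make the QR factorization concrete, here is a minimal sketch using the modified Gram-Schmidt process in plain Python. It assumes the input columns are linearly independent and is meant for illustration only: production libraries typically use Householder reflections instead, which behave better numerically. The function name `gram_schmidt_qr` and the column-list representation are choices made for this example.

```python
import math

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def gram_schmidt_qr(columns, tol=1e-12):
    """Modified Gram-Schmidt on a list of column vectors.

    Returns (q_columns, r): q_columns are orthonormal, r is an
    upper-triangular matrix stored as a list of rows, and each input
    column j equals sum over i of r[i][j] * q_columns[i].
    """
    n = len(columns)
    q_columns = []
    r = [[0.0] * n for _ in range(n)]
    for j, a in enumerate(columns):
        w = list(a)
        for i, q in enumerate(q_columns):
            r[i][j] = dot(q, w)   # component of the remainder along q
            w = [wk - r[i][j] * qk for wk, qk in zip(w, q)]
        r[j][j] = math.sqrt(dot(w, w))
        if r[j][j] <= tol:
            raise ValueError("columns are linearly dependent (or nearly so)")
        q_columns.append([wk / r[j][j] for wk in w])
    return q_columns, r

columns = [[1.0, 1.0, 0.0], [1.0, 0.0, 1.0], [0.0, 1.0, 1.0]]
q_columns, r = gram_schmidt_qr(columns)

for q in q_columns:
    print([round(x, 6) for x in q])   # orthonormal columns of Q
for row in r:
    print([round(x, 6) for x in row]) # upper-triangular R
```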

Real-World Examples of Orthogonal vs Orthonormal

A familiar real-world example of orthogonal directions is the pair of North-South and East-West axes on a map: displacement along one axis can be measured independently of the other. Orthonormal vectors are not only perpendicular but also of unit length, which matters in computer graphics, where rotations are represented by orthonormal basis vectors so that objects are not stretched or skewed when rendered. In signal processing, orthogonal signals do not interfere with one another, and an orthonormal set additionally gives every basis signal unit energy.
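
The graphics case can be illustrated with a 2D rotation matrix, whose columns form an orthonormal pair. The sketch below, with illustrative helper names, rotates a vector, confirms its length is unchanged, and undoes the rotation using the transpose in place of a computed inverse.

```python
import math

def rotation_matrix(theta):
    """2x2 rotation by theta radians, stored as a list of rows."""
    c, s = math.cos(theta), math.sin(theta)
    return [[c, -s], [s, c]]

def transpose(m):
    return [list(row) for row in zip(*m)]

def matvec(m, v):
    """Matrix-vector product."""
    return [sum(a * b for a, b in zip(row, v)) for row in m]

R = rotation_matrix(math.radians(30.0))
v = [3.0, 4.0]

rotated = matvec(R, v)
restored = matvec(transpose(R), rotated)   # transpose acts as the inverse

print(math.hypot(*v), math.hypot(*rotated))  # lengths agree up to rounding
print(restored)                               # approximately [3.0, 4.0]
```

If the columns were merely orthogonal (say, one of them scaled by 2), the transform would stretch the vector and the transpose would no longer undo it.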

Importance in Machine Learning and Data Science

When feature vectors are mean-centered, orthogonality is the same as zero correlation, so orthogonal features reduce redundancy and multicollinearity in machine learning models. Without centering the two notions differ: features can be orthogonal yet correlated, or uncorrelated yet not orthogonal. Orthonormal vectors, being both orthogonal and unit-length, support efficient numerical computation and underpin algorithms such as PCA and SVD, whose output directions (principal components and singular vectors) form orthonormal sets. Orthonormal bases are therefore central to dimensionality reduction and feature extraction for high-dimensional data.
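
The relationship between orthogonality and correlation is easy to get wrong, so a small check helps. In this sketch each list holds one feature's values across samples (the data is made up for illustration), and `pearson` computes the correlation as the cosine of the angle between the mean-centered vectors.

```python
import math

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def centered(u):
    """Subtract the mean from every entry."""
    mean = sum(u) / len(u)
    return [a - mean for a in u]

def pearson(u, v):
    """Pearson correlation of two non-constant sequences."""
    cu, cv = centered(u), centered(v)
    return dot(cu, cv) / math.sqrt(dot(cu, cu) * dot(cv, cv))

# Orthogonal as raw vectors, yet perfectly anti-correlated.
a = [1.0, 1.0, 0.0, 0.0]
b = [0.0, 0.0, 1.0, 1.0]
print(dot(a, b), pearson(a, b))   # 0.0 -1.0

# Uncorrelated, yet not orthogonal as raw vectors.
c = [1.0, 2.0, 3.0]
d = [2.0, 1.0, 2.0]
print(dot(c, d), pearson(c, d))   # 10.0 and a correlation of zero up to rounding
```

Centering the features first makes the two tests coincide, which is why PCA implementations typically subtract the mean before computing components.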

Summary and Final Thoughts

Orthogonal vectors have a zero dot product, indicating they are perpendicular, while orthonormal vectors are not only orthogonal but also have a unit length, making them useful in simplifying computations in linear algebra and signal processing. Orthonormal sets provide a robust foundation for vector space representation, ensuring stability and efficiency in transformations, projections, and matrix decompositions such as QR factorization. Understanding the distinction between orthogonal and orthonormal vectors is crucial for applications in machine learning, computer graphics, and numerical methods where precise vector manipulation is essential.

libterm.com
