Frequentist inference relies on the long-run frequency properties of estimators to draw conclusions from data, emphasizing objective methods like hypothesis testing and confidence intervals. It interprets probabilities as the limit of relative frequencies in repeated experiments, providing a framework for making decisions under uncertainty.

Table of Comparison

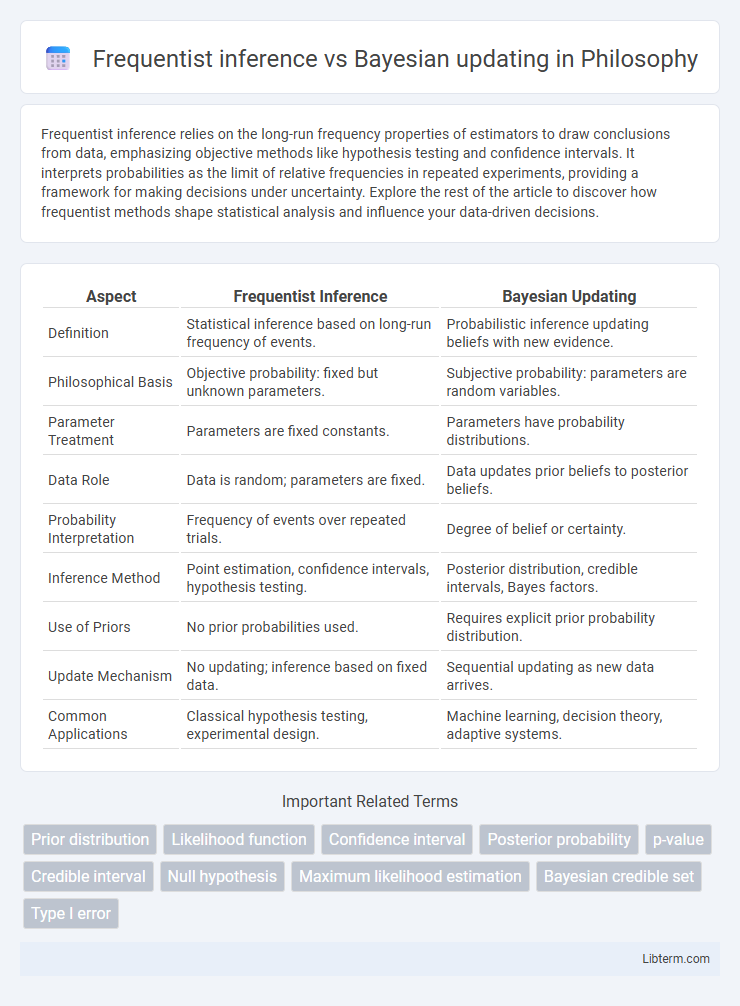

| Aspect | Frequentist Inference | Bayesian Updating |
|---|---|---|
| Definition | Statistical inference based on long-run frequency of events. | Probabilistic inference updating beliefs with new evidence. |
| Philosophical Basis | Objective probability: fixed but unknown parameters. | Subjective probability: parameters are random variables. |
| Parameter Treatment | Parameters are fixed constants. | Parameters have probability distributions. |
| Data Role | Data is random; parameters are fixed. | Data updates prior beliefs to posterior beliefs. |
| Probability Interpretation | Frequency of events over repeated trials. | Degree of belief or certainty. |
| Inference Method | Point estimation, confidence intervals, hypothesis testing. | Posterior distribution, credible intervals, Bayes factors. |
| Use of Priors | No prior probabilities used. | Requires explicit prior probability distribution. |
| Update Mechanism | No updating; inference based on fixed data. | Sequential updating as new data arrives. |
| Common Applications | Classical hypothesis testing, experimental design. | Machine learning, decision theory, adaptive systems. |

Introduction to Statistical Inference

Frequentist inference relies on long-run frequency properties of estimators, emphasizing hypothesis testing and confidence intervals based on fixed parameters. Bayesian updating incorporates prior knowledge through probability distributions, continuously refining beliefs with observed data via Bayes' theorem. Both are forms of statistical inference: each combines a probabilistic model with observed data to support decision-making under uncertainty.

Core Principles of Frequentist Inference

Frequentist inference relies on the principle of long-run frequency properties, interpreting parameters as fixed but unknown quantities, and bases conclusions on how the observed data, and statistics computed from them, would vary under repeated sampling. It uses estimators, confidence intervals, and hypothesis tests to control error rates without incorporating prior beliefs, emphasizing objective procedures. This framework assumes that probabilities represent the frequency of events in an infinite sequence of identical experiments.
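
The idea of controlling error rates can be made concrete with a small simulation. The sketch below is illustrative only and assumes a setup the article does not specify: normally distributed data with a known standard deviation, a two-sided z-test, and a 5% significance level. The function names `z_test_p_value` and `false_rejection_rate` are hypothetical. When the null hypothesis is true, the share of repeated experiments that reject it should settle near the chosen level.

```python
import random
from statistics import NormalDist, fmean


def z_test_p_value(sample, mu0, sigma):
    """Two-sided p-value for H0: mean == mu0, assuming sigma is known."""
    n = len(sample)
    z = (fmean(sample) - mu0) / (sigma / n ** 0.5)
    return 2 * (1 - NormalDist().cdf(abs(z)))


def false_rejection_rate(alpha=0.05, n=30, repeats=20_000, seed=0):
    """Fraction of repeated experiments that reject a null that is true."""
    rng = random.Random(seed)
    rejections = 0
    for _ in range(repeats):
        # Each experiment draws fresh data from the null model (mean 0, sd 1).
        sample = [rng.gauss(0.0, 1.0) for _ in range(n)]
        if z_test_p_value(sample, mu0=0.0, sigma=1.0) < alpha:
            rejections += 1
    return rejections / repeats


rate = false_rejection_rate()
print(f"share of true nulls rejected at alpha=0.05: {rate:.3f}")
```

The probability statement here is about the procedure across many hypothetical repetitions, not about any single data set or about the parameter.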

Fundamentals of Bayesian Updating

Bayesian updating fundamentally relies on Bayes' theorem, which combines prior beliefs with new evidence to produce an updated posterior probability reflecting the current state of knowledge. Unlike Frequentist inference, which depends on long-run frequency properties and p-values, Bayesian methods treat parameters as random variables and provide a coherent framework to incorporate uncertainty and prior information. This iterative process of updating probabilities enables dynamic learning and decision-making based on accumulating data.
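
One simple instance of this iterative process is the conjugate Beta-Binomial model, where updating reduces to adding counts. The sketch below is a minimal illustration rather than a general-purpose implementation. The class name `BetaBelief`, the uniform Beta(1, 1) prior, and the batch counts are all assumptions chosen for the example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class BetaBelief:
    """Beta(alpha, beta) belief about an unknown success probability."""
    alpha: float
    beta: float

    def update(self, successes, failures):
        # Conjugate update: the posterior is again a Beta distribution.
        return BetaBelief(self.alpha + successes, self.beta + failures)

    @property
    def mean(self):
        return self.alpha / (self.alpha + self.beta)


prior = BetaBelief(1.0, 1.0)        # uniform prior (an assumption)
batches = [(3, 7), (6, 4), (8, 2)]  # hypothetical (successes, failures) per batch

belief = prior
for successes, failures in batches:
    belief = belief.update(successes, failures)  # yesterday's posterior is today's prior
    print(f"after batch {successes, failures}: posterior mean = {belief.mean:.4f}")

# Updating batch by batch gives the same posterior as using all data at once.
all_at_once = prior.update(sum(s for s, _ in batches), sum(f for _, f in batches))
assert belief == all_at_once
```

In this model the order and grouping of the batches do not matter, which is why sequential and one-shot updating agree.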

Key Differences Between Frequentist and Bayesian Approaches

Frequentist inference relies on long-run frequency properties and treats parameters as fixed but unknown quantities, emphasizing hypothesis testing and p-values. Bayesian updating incorporates prior beliefs with observed data through Bayes' theorem to generate posterior distributions, treating parameters as random variables. Key differences include the interpretation of probability, the role of prior information, and the way uncertainty is quantified and updated.

Role of Probability in Each Paradigm

Frequentist inference interprets probability as the long-run frequency of events in repeated experiments, treating parameters as fixed but unknown quantities. Bayesian updating views probability as a measure of subjective belief or uncertainty about parameters, which are modeled as random variables with prior distributions updated through observed data. This fundamental difference in the role of probability guides statistical methods, with Frequentist methods relying on sampling distributions and Bayesian methods combining prior knowledge with evidence to produce posterior distributions.
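
In symbols, the contrast can be written with Bayes' theorem. The notation is introduced here for illustration and does not come from the article: $\theta$ stands for the parameter and $x$ for the observed data.

```latex
p(\theta \mid x) = \frac{p(x \mid \theta)\, p(\theta)}{p(x)},
\qquad
p(x) = \int p(x \mid \theta)\, p(\theta)\, d\theta
```

Frequentist methods work with the sampling distribution $p(x \mid \theta)$ for a fixed $\theta$, while Bayesian methods also specify the prior $p(\theta)$ and report the posterior $p(\theta \mid x)$.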

Handling Prior Information

Frequentist inference relies solely on observed data without incorporating prior beliefs, treating parameters as fixed but unknown quantities. Bayesian updating integrates prior information through a prior distribution, refining beliefs about parameters as new data emerges. This framework allows explicit quantification and updating of uncertainty, leveraging both historical knowledge and current evidence for decision-making.

Interpretation of Confidence Intervals vs Credible Intervals

Confidence intervals in Frequentist inference represent ranges that would contain the true parameter in a specified proportion of repeated samples, emphasizing long-run frequency properties without assigning probabilities to the parameter itself. Credible intervals in Bayesian updating provide a direct probabilistic statement about the parameter, indicating the range within which the parameter lies with a given probability based on the observed data and prior beliefs. This fundamental difference reflects the Frequentist focus on data repetition and the Bayesian emphasis on subjective probability and prior information integration.
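
The two kinds of interval can be computed side by side for the same data. The sketch below makes several assumptions for illustration: a binomial proportion with 42 successes in 100 trials, a normal-approximation (Wald) confidence interval, and a uniform Beta(1, 1) prior whose posterior is summarized by Monte Carlo draws. All function names are hypothetical.

```python
import random
from statistics import NormalDist


def wald_interval(successes, n, level=0.95):
    """Normal-approximation (Wald) confidence interval for a proportion."""
    p_hat = successes / n
    z = NormalDist().inv_cdf(0.5 + level / 2)
    half_width = z * (p_hat * (1 - p_hat) / n) ** 0.5
    return p_hat - half_width, p_hat + half_width


def beta_credible_interval(successes, n, level=0.95, a=1.0, b=1.0,
                           draws=100_000, seed=0):
    """Equal-tailed credible interval from draws of the Beta posterior."""
    rng = random.Random(seed)
    samples = sorted(rng.betavariate(a + successes, b + n - successes)
                     for _ in range(draws))
    tail = (1 - level) / 2
    return samples[int(tail * draws)], samples[int((1 - tail) * draws) - 1]


def wald_coverage(true_p=0.4, n=100, repeats=5_000, seed=1):
    """Share of repeated samples whose Wald interval contains true_p."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(repeats):
        successes = sum(rng.random() < true_p for _ in range(n))
        low, high = wald_interval(successes, n)
        hits += low <= true_p <= high
    return hits / repeats


ci = wald_interval(42, 100)
cred = beta_credible_interval(42, 100)
coverage = wald_coverage()
print(f"95% confidence interval: ({ci[0]:.3f}, {ci[1]:.3f})")
print(f"95% credible interval:   ({cred[0]:.3f}, {cred[1]:.3f})")
print(f"Wald coverage over repeated samples: {coverage:.3f}")
```

With a flat prior and a moderate sample size the two intervals are numerically close, yet they answer different questions: the coverage figure describes the procedure over repeated samples, while the credible interval describes the parameter given this one sample and the prior.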

Typical Applications and Use Cases

Frequentist inference is commonly applied in clinical trials, quality control, and A/B testing where fixed parameter estimation and hypothesis testing dominate decision-making. Bayesian updating excels in dynamic environments such as machine learning, adaptive control systems, and predictive modeling where incorporating prior knowledge and continuously updating beliefs with new data improves accuracy. Typical use cases for Bayesian methods include spam filtering, recommendation systems, and real-time decision making under uncertainty, contrasting with Frequentist techniques favored for regulatory settings and standardized experiments.

Advantages and Limitations of Each Method

Frequentist inference provides objective parameter estimation by relying on long-run frequency properties and does not require prior distributions, making it suitable for large sample sizes and well-defined experiments. However, it cannot formally incorporate prior knowledge, and because many of its guarantees hold only approximately in small samples, it can be unreliable with small data sets. Bayesian updating integrates prior beliefs with observed data to produce a posterior distribution, allowing for more flexible and intuitive uncertainty quantification. Its results can depend heavily on the choice of prior, especially when data are scarce, and computing the posterior can be intensive. Which method fits best depends on the context: the two differ significantly in how they handle prior information, in computational demands, and in the interpretability of results.
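
The dependence on the prior can be illustrated with a short calculation. The sketch below assumes a Beta prior on a success probability and compares two hypothetical priors on a small and a large data set with the same observed success rate of 70%. The prior parameters, data counts, and the helper `posterior_mean` are illustrative choices, not values from the article.

```python
def posterior_mean(successes, n, a, b):
    """Posterior mean under a Beta(a, b) prior after n Bernoulli trials."""
    return (a + successes) / (a + b + n)


priors = {"uniform Beta(1, 1)": (1, 1), "skeptical Beta(2, 20)": (2, 20)}
datasets = {"small": (7, 10), "large": (700, 1000)}  # (successes, trials)

spreads = {}
for label, (successes, n) in datasets.items():
    means = {name: posterior_mean(successes, n, a, b)
             for name, (a, b) in priors.items()}
    # Gap between the posterior means produced by the two priors.
    spreads[label] = max(means.values()) - min(means.values())
    for name, value in means.items():
        print(f"{label:>5} data, {name}: posterior mean = {value:.3f}")
    print(f"{label:>5} data: gap between priors = {spreads[label]:.3f}")
```

The gap between the two posteriors is large for ten observations and nearly vanishes for a thousand, while the frequentist point estimate (the sample proportion) is 0.7 in both cases.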

Choosing the Right Approach for Your Analysis

Frequentist inference relies on long-run frequencies and fixed parameters, making it ideal for hypothesis testing and confidence interval estimation when prior information is limited or unavailable. Bayesian updating incorporates prior beliefs with observed data to provide a dynamic probabilistic framework, suitable for complex models and iterative learning scenarios. Choosing the right approach depends on data availability, computational resources, and the need for incorporating prior knowledge in decision-making processes.


libterm.com
