Multicollinearity occurs when independent variables in a regression model are highly correlated, leading to unreliable coefficient estimates and inflated standard errors. This issue can obscure the true relationship between predictors and the dependent variable, complicating interpretation and, when the correlation pattern among predictors changes in new data, weakening predictive power. The rest of this article explains how to detect, address, and prevent multicollinearity in your analyses.

Table of Comparison

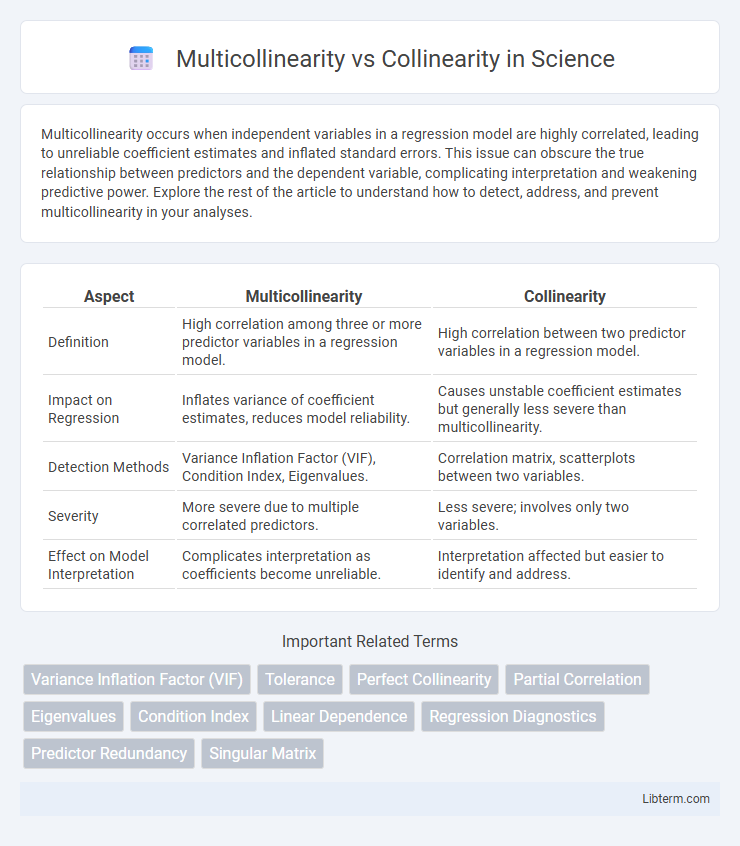

| Aspect | Multicollinearity | Collinearity |
|---|---|---|
| Definition | High correlation among three or more predictor variables in a regression model. | High correlation between two predictor variables in a regression model. |
| Impact on Regression | Inflates variance of coefficient estimates, reduces model reliability. | Causes unstable coefficient estimates but generally less severe than multicollinearity. |
| Detection Methods | Variance Inflation Factor (VIF), Condition Index, Eigenvalues. | Correlation matrix, scatterplots between two variables. |
| Severity | More severe due to multiple correlated predictors. | Less severe; involves only two variables. |
| Effect on Model Interpretation | Complicates interpretation as coefficients become unreliable. | Interpretation affected but easier to identify and address. |

Introduction to Collinearity and Multicollinearity

Collinearity occurs when two predictor variables in a regression model are highly correlated, causing redundancy and potential instability in coefficient estimates. Multicollinearity extends this concept to situations where three or more predictors exhibit high intercorrelations, complicating the accurate assessment of each variable's individual effect. Detecting both is essential for reliable statistical inference and is commonly done with variance inflation factors (VIF) and condition indices.

Definitions: Collinearity vs Multicollinearity

Collinearity refers to a linear relationship between two predictor variables in a regression model, where one variable can be linearly predicted from the other with a high degree of accuracy. Multicollinearity extends this concept to situations involving three or more predictor variables, where one variable is linearly dependent on a combination of others. Both collinearity and multicollinearity can cause issues in estimating regression coefficients, leading to increased standard errors and unreliable statistical inferences.
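In symbols, with x_1 to x_p denoting the predictors and a, b, c_0, c_k as generic constants (notation introduced here for illustration, not taken from the article), the two definitions read as follows; the approximation becomes an equality in the perfect case.

```latex
\begin{aligned}
\text{Collinearity:} \quad & x_2 \approx a + b\,x_1 \\
\text{Multicollinearity:} \quad & x_j \approx c_0 + \sum_{k \neq j} c_k\,x_k
\end{aligned}
```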

Causes of Collinearity in Data

Collinearity arises when two predictor variables in a regression model are highly correlated, while multicollinearity arises when several predictors are intercorrelated, which makes it hard to isolate each variable's effect. Common causes include inherent relationships between the variables themselves, variables constructed from one another (for example, a total entered alongside its components), and data collection methods that produce redundant information, such as sampling only a narrow region in which the predictors happen to move together. Identifying these causes, for instance overlapping constructs or poor variable selection, helps improve model stability and interpretability.

Understanding Multicollinearity in Regression Analysis

Multicollinearity occurs when predictor variables in a regression model are highly correlated with one another, making it difficult to isolate the individual effect of each variable on the dependent variable. This intercorrelation inflates the variance of coefficient estimates, leading to unreliable statistical inferences and reduced model interpretability. Detecting multicollinearity involves examining correlation matrices, Variance Inflation Factor (VIF) values (a common rule of thumb flags values above 10), and condition indices.

Key Differences Between Collinearity and Multicollinearity

Collinearity refers to a linear relationship between two predictor variables that makes them redundant in a regression model, whereas multicollinearity involves high correlations among three or more predictors simultaneously, which complicates the identification of individual variable effects. The key differences are scope (collinearity is a pairwise phenomenon, while multicollinearity spans several variables) and severity (multicollinearity usually leads to greater instability in coefficient estimates and model interpretation). Collinearity can be identified with a simple correlation matrix, but multicollinearity often cannot: a predictor can be a near-exact linear combination of several others without any single pairwise correlation being large, so detecting it requires variance inflation factor (VIF) analysis or condition indices.
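A small worked case shows why pairwise correlations are not enough. Assume, for illustration, two uncorrelated, unit-variance predictors x_1 and x_2 and a third predictor defined as their sum:

```latex
\begin{aligned}
& x_3 = x_1 + x_2, \qquad \operatorname{Var}(x_1) = \operatorname{Var}(x_2) = 1, \quad \operatorname{Cov}(x_1, x_2) = 0 \\
& \operatorname{Cov}(x_1, x_3) = \operatorname{Var}(x_1) + \operatorname{Cov}(x_1, x_2) = 1, \qquad \operatorname{Var}(x_3) = 2 \\
& \rho_{13} = \rho_{23} = \frac{1}{\sqrt{1 \cdot 2}} \approx 0.71, \qquad \rho_{12} = 0 \\
& R_3^2 = 1 \;\Rightarrow\; 1 - R_3^2 = 0, \text{ so } \mathrm{VIF}_3 = \frac{1}{1 - R_3^2} \text{ is unbounded}
\end{aligned}
```

No pairwise correlation exceeds about 0.71, yet x_3 is an exact linear combination of the other two predictors.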

Identifying Collinearity and Multicollinearity

Identifying collinearity involves detecting a high correlation between two predictor variables within a regression model, typically measured using correlation coefficients or scatterplots. Multicollinearity extends this concept, occurring when three or more predictors are highly interrelated, and is often assessed through variance inflation factors (VIF), condition indices, or eigenvalues of the correlation matrix. Early detection of collinearity and multicollinearity is crucial for maintaining model accuracy and interpretability and for avoiding inflated standard errors in coefficient estimates.

Impacts on Model Performance and Interpretation

Multicollinearity, high correlation among predictor variables in a regression model, inflates standard errors and makes coefficient estimates unreliable, which undermines model stability and significance testing. Collinearity refers to a perfect or near-perfect linear relationship between predictors. When it is perfect, the design matrix X is rank-deficient and XᵀX is singular, so the individual variable effects cannot be uniquely estimated; when it is near-perfect, XᵀX is close to singular and the estimates become highly sensitive to small changes in the data. Both multicollinearity and collinearity reduce interpretability by distorting the apparent influence of individual predictors, increase the variance of coefficient estimates, and complicate variable selection. Their effect on predictive accuracy is more limited: fitted values can remain accurate for new data with the same correlation structure, but predictions degrade when that structure changes.
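The link between correlated predictors and inflated standard errors can be made explicit. For ordinary least squares with an intercept and constant error variance σ², the sampling variance of the slope on predictor j factors as follows, where R_j² is the R² from regressing x_j on the other predictors:

```latex
\operatorname{Var}(\hat\beta_j)
  = \frac{\sigma^2}{\sum_{i=1}^{n} (x_{ij} - \bar{x}_j)^2} \cdot \frac{1}{1 - R_j^2}
  = \frac{\sigma^2}{\sum_{i=1}^{n} (x_{ij} - \bar{x}_j)^2} \cdot \mathrm{VIF}_j
```

The second factor is the VIF. With R_j² = 0.9, VIF_j = 10, so the standard error is √10 ≈ 3.16 times what it would be if x_j were uncorrelated with the other predictors; as R_j² approaches 1 (perfect collinearity), the variance grows without bound.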

Detection Techniques and Diagnostic Tools

Multicollinearity and collinearity both refer to high correlations among predictor variables, but multicollinearity involves multiple variables while collinearity typically refers to a near-perfect linear relationship between two variables. Detection techniques for multicollinearity include the Variance Inflation Factor (VIF), tolerance values (the reciprocal of VIF), and condition indices, which help identify problematic interdependencies among variables. Diagnostic tools such as correlation matrices, eigenvalues, and variance decomposition proportions help assess the severity and sources of collinearity in regression models.

Strategies to Address Collinearity and Multicollinearity

Strategies to address collinearity and multicollinearity start with variance inflation factor (VIF) analysis to identify problematic predictors, followed where needed by dimensionality reduction methods such as principal component analysis (PCA), which replaces correlated predictors with uncorrelated components. Ridge regression and lasso regression are regularization methods that shrink coefficient estimates and improve model stability when variables are correlated; lasso, however, tends to keep one predictor from a highly correlated group and drop the others, while ridge shrinks correlated coefficients toward each other. Feature selection approaches, including stepwise selection and elimination guided by domain knowledge, also reduce collinearity by retaining only the most informative variables.
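Ridge regression adds a penalty λ to the diagonal of XᵀX, which keeps the linear system well-conditioned even when predictors are correlated. The minimal pure-Python sketch below works on standardized predictors and a centered response, so the intercept is not penalized. The helper names, the data, and the penalty value 5.0 are hypothetical; in practice λ is tuned, for example by cross-validation, and scikit-learn provides `sklearn.linear_model.Ridge`, where the penalty is called `alpha`.

```python
from statistics import fmean, pstdev


def standardize(col):
    """Rescale a column to mean 0 and (population) standard deviation 1."""
    mu, sd = fmean(col), pstdev(col)
    return [(v - mu) / sd for v in col]


def solve(a, b):
    """Solve a x = b by Gaussian elimination with partial pivoting."""
    n = len(a)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[piv] = m[piv], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]  # ZeroDivisionError if singular
            m[r] = [x - f * y for x, y in zip(m[r], m[col])]
    x = [0.0] * n
    for i in reversed(range(n)):
        x[i] = (m[i][n] - sum(m[i][j] * x[j] for j in range(i + 1, n))) / m[i][i]
    return x


def ridge(columns, y, lam):
    """Ridge coefficients on standardized predictors: (X^T X + lam * I) beta = X^T y."""
    xs = [standardize(c) for c in columns]
    y_mean = fmean(y)
    yc = [v - y_mean for v in y]
    k = len(xs)
    xtx = [[sum(a * b for a, b in zip(xs[i], xs[j])) + (lam if i == j else 0.0)
            for j in range(k)] for i in range(k)]
    xty = [sum(a * b for a, b in zip(xs[i], yc)) for i in range(k)]
    return solve(xtx, xty)


# Hypothetical correlated predictors and response.
x1 = [1, 2, 3, 4, 5, 6, 7, 8]
x2 = [2, 1, 4, 3, 6, 5, 8, 7]
y = [3.0, 4.0, 8.0, 9.0, 13.0, 14.0, 18.0, 20.0]
print("OLS   (lam=0):", ridge([x1, x2], y, 0.0))
print("ridge (lam=5):", ridge([x1, x2], y, 5.0))
```

With `lam=0.0` the function returns ordinary least squares coefficients on the standardized scale; a positive `lam` shrinks the coefficient vector toward zero, trading a little bias for lower variance.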

Practical Examples and Case Studies

In regression analysis, multicollinearity occurs when predictor variables are highly correlated, which makes individual coefficients difficult to estimate; in real estate price models, for example, house size and number of rooms overlap substantially. Collinearity in its simplest form is a perfect linear relationship between two variables, as would occur in a marketing dataset if radio advertising spend were always set as a fixed fraction of TV spend, so that the two are perfectly correlated. In economic data, GDP growth and employment rates often move closely together, which can undermine the stability and interpretability of regression results.
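The TV and radio case can be reproduced with made-up numbers. If radio spend is always half of TV spend, the centered XᵀX matrix for the two predictors is singular, and very different coefficient pairs produce identical predictions. The spend figures and the helper name `centered` are hypothetical.

```python
from statistics import fmean

# Hypothetical weekly spend figures (made up): radio is always half of TV.
tv = [10.0, 20.0, 30.0, 40.0, 50.0]
radio = [0.5 * t for t in tv]


def centered(col):
    """Subtract the column mean from every value."""
    mu = fmean(col)
    return [v - mu for v in col]


a, b = centered(tv), centered(radio)
# Entries of X^T X for the centered two-predictor design matrix.
s_aa = sum(v * v for v in a)
s_bb = sum(v * v for v in b)
s_ab = sum(u * v for u, v in zip(a, b))
det = s_aa * s_bb - s_ab ** 2
print(det)  # prints 0.0: X^T X is singular

# Coefficients (2, 0) and (0, 4) give exactly the same fitted values.
pred_1 = [2.0 * t + 0.0 * r for t, r in zip(tv, radio)]
pred_2 = [0.0 * t + 4.0 * r for t, r in zip(tv, radio)]
print(pred_1 == pred_2)  # prints True
```

This is why exact collinearity makes the separate TV and radio effects unidentifiable even though the combined prediction is unaffected.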

Multicollinearity Infographic

libterm.com
