Gamma radiation is a highly penetrating form of electromagnetic radiation emitted during radioactive decay and other nuclear processes, and it poses significant health risks if exposure is uncontrolled. Because it penetrates deeply into most materials, protecting living tissue requires dense shielding such as lead or concrete. The rest of this article compares gamma radiation with infrared radiation, covering their properties, sources, applications, detection methods, and safety precautions.
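Shielding works because the intensity of a narrow gamma beam falls off exponentially with absorber thickness, I = I0·exp(−μx), where μ is the material's linear attenuation coefficient. The minimal Python sketch below expresses this through the half-value layer (HVL = ln 2 / μ), the thickness that halves the beam. The HVL of 1.0 cm in the example is a hypothetical round number, not a measured value for lead or concrete, and the model ignores scattered (buildup) radiation, so it is an idealization rather than a shielding-design tool.

```python
import math


def transmitted_fraction(thickness_cm: float, hvl_cm: float) -> float:
    """Fraction of a narrow gamma beam that passes through a slab.

    Uses the exponential attenuation law I/I0 = exp(-mu * x) with
    mu = ln(2) / HVL. Buildup from scattered photons is ignored.
    """
    if hvl_cm <= 0:
        raise ValueError("half-value layer must be positive")
    mu = math.log(2) / hvl_cm  # linear attenuation coefficient, 1/cm
    return math.exp(-mu * thickness_cm)


def thickness_for_fraction(target_fraction: float, hvl_cm: float) -> float:
    """Slab thickness needed to reduce the beam to target_fraction of I0."""
    if hvl_cm <= 0:
        raise ValueError("half-value layer must be positive")
    if not 0 < target_fraction <= 1:
        raise ValueError("target fraction must be in (0, 1]")
    mu = math.log(2) / hvl_cm
    return -math.log(target_fraction) / mu


if __name__ == "__main__":
    HVL_CM = 1.0  # hypothetical half-value layer, for illustration only
    for x in (0.0, 1.0, 2.0, 5.0):
        print(f"{x:.1f} cm -> {transmitted_fraction(x, HVL_CM):.4f} of I0")
    print(f"1% transmission needs {thickness_for_fraction(0.01, HVL_CM):.2f} cm")
```

Each additional half-value layer halves the transmitted intensity, so cutting a beam to 1% of its original intensity takes log2(100), or roughly 6.6, half-value layers regardless of the material.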

Table of Comparison

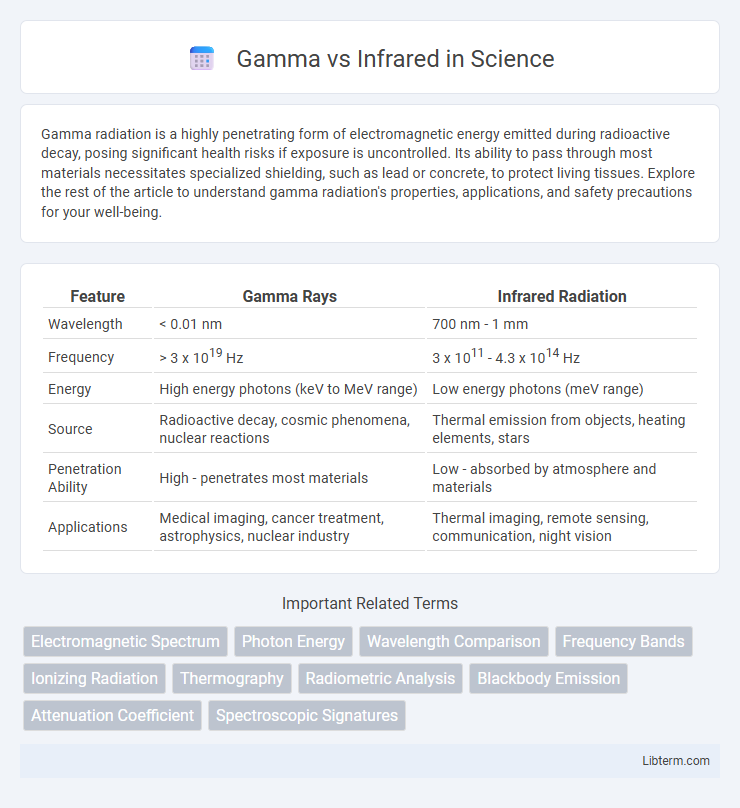

| Feature | Gamma Rays | Infrared Radiation |
|---|---|---|
| Wavelength | < 0.01 nm | 700 nm to 1 mm |
| Frequency | > 3 × 10^19 Hz | 3 × 10^11 to 4.3 × 10^14 Hz |
| Photon energy | High (keV to MeV range) | Low (about 1.2 meV to 1.8 eV) |
| Sources | Radioactive decay, cosmic phenomena, nuclear reactions | Thermal emission from objects, heating elements, stars |
| Penetration | High: penetrates most materials | Low: partly absorbed by the atmosphere and by most solid materials |
| Applications | Medical imaging, cancer treatment, astrophysics, nuclear industry | Thermal imaging, remote sensing, communication, night vision |

Introduction to Gamma and Infrared Radiation

Gamma radiation consists of high-energy electromagnetic waves emitted from atomic nuclei during radioactive decay, characterized by extremely short wavelengths and high frequencies. Infrared radiation, with longer wavelengths than visible light, originates from the thermal vibrations of atoms and molecules and plays a crucial role in heat transfer and remote sensing technologies. Gamma and infrared radiation occupy widely separated segments of the electromagnetic spectrum, and those positions shape their applications in medical imaging, astronomy, and communication systems.

Electromagnetic Spectrum Overview

Gamma rays occupy the highest-energy region of the electromagnetic spectrum, with frequencies above about 3 × 10^19 Hz and wavelengths shorter than 10 picometers (0.01 nm), which lets them penetrate most materials. Infrared radiation lies just beyond the red end of visible light, with frequencies from about 3 × 10^11 to 4.3 × 10^14 Hz and wavelengths from 700 nanometers to 1 millimeter; it is commonly associated with heat emission and thermal imaging. The vast difference in energy and wavelength between gamma rays and infrared radiation defines their distinct applications and interactions with matter.

Wavelength Differences: Gamma vs Infrared

Gamma rays have extremely short wavelengths, typically less than 0.01 nanometers, which makes them the highest-energy form of electromagnetic radiation. Infrared radiation has much longer wavelengths, from about 700 nanometers to 1 millimeter, placing it between visible light and microwave radiation in the electromagnetic spectrum. Because both frequency and photon energy are inversely proportional to wavelength, this difference means gamma rays have far higher frequencies and photon energies than infrared waves.
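The inverse relation between wavelength and photon energy can be checked numerically with f = c/λ and E = hc/λ. The Python sketch below, using the exact SI values of the speed of light, the Planck constant, and the electronvolt, evaluates both formulas at the wavelength boundaries quoted in the comparison table; the helper function names are illustrative.

```python
# Physical constants (exact SI values since the 2019 redefinition).
C = 299_792_458.0          # speed of light, m/s
H = 6.626_070_15e-34       # Planck constant, J*s
EV = 1.602_176_634e-19     # joules per electronvolt


def frequency_hz(wavelength_m: float) -> float:
    """Frequency of an electromagnetic wave: f = c / lambda."""
    return C / wavelength_m


def photon_energy_ev(wavelength_m: float) -> float:
    """Photon energy in electronvolts: E = h * c / lambda."""
    return H * C / wavelength_m / EV


if __name__ == "__main__":
    boundaries = {
        "gamma wavelength bound (0.01 nm)": 0.01e-9,
        "infrared short edge (700 nm)": 700e-9,
        "infrared long edge (1 mm)": 1e-3,
    }
    for label, lam in boundaries.items():
        print(f"{label}: f = {frequency_hz(lam):.3e} Hz, "
              f"E = {photon_energy_ev(lam):.4g} eV")
```

At these boundaries the photon energies come out to roughly 124 keV for 0.01 nm, 1.77 eV for 700 nm, and 1.24 meV for 1 mm, so a gamma photon at the edge of the range already carries tens of thousands of times more energy than the most energetic infrared photon.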

Sources of Gamma and Infrared Rays

Gamma rays originate from nuclear reactions, radioactive decay, and cosmic phenomena such as supernovae and neutron stars, all of which emit highly energetic photons with extremely short wavelengths. Infrared radiation is produced mainly as thermal radiation: objects at everyday temperatures, such as heated surfaces and living organisms, emit most of their radiant energy in the infrared, and much hotter bodies such as the Sun also emit a substantial share of their output there. Gamma sources are therefore mostly nuclear or astrophysical, while infrared sources are common in everyday environments.
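Why everyday objects radiate mainly in the infrared can be estimated with Wien's displacement law, λ_peak = b/T, which gives the peak emission wavelength of an ideal blackbody at absolute temperature T. The sketch below treats each object as an ideal blackbody, which is an approximation; the example temperatures are round illustrative values, and the 0.4 µm visible-light edge used for labeling is an approximate boundary.

```python
# Wien's displacement constant, m*K (CODATA 2018 value).
WIEN_B = 2.897_771_955e-3


def peak_wavelength_um(temperature_k: float) -> float:
    """Peak emission wavelength of an ideal blackbody, in micrometres."""
    if temperature_k <= 0:
        raise ValueError("temperature must be positive (kelvin)")
    return WIEN_B / temperature_k * 1e6


def band(wavelength_um: float) -> str:
    """Rough band label; infrared is taken as 0.7 um to 1 mm (1000 um)."""
    if wavelength_um < 0.4:  # approximate short edge of visible light
        return "ultraviolet or shorter"
    if wavelength_um < 0.7:
        return "visible"
    if wavelength_um <= 1000:
        return "infrared"
    return "microwave or longer"


if __name__ == "__main__":
    # Illustrative temperatures in kelvin (assumed round values).
    objects = [("room-temperature wall", 300), ("human skin", 305),
               ("electric stove element", 1000), ("Sun's surface", 5800)]
    for name, t in objects:
        lam = peak_wavelength_um(t)
        print(f"{name}: peak {lam:.2f} um ({band(lam)})")
```

For a surface near 300 K the peak falls near 9.7 µm, well inside the infrared band, while the Sun's peak lands in the visible range even though the Sun also emits strongly in the infrared.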

Applications in Science and Technology

Gamma rays penetrate dense materials, making them essential in medical imaging, cancer radiotherapy, and nuclear industry inspections. Infrared radiation is widely used in remote sensing, thermal imaging, and fiber optic communication systems because it reveals heat signatures and transmits data efficiently. Both forms of radiation play critical roles in scientific research, environmental monitoring, and technological development.

Biological Effects and Safety Concerns

Gamma rays, a form of high-energy electromagnetic radiation, penetrate biological tissues deeply, causing ionization that can damage DNA and cellular structures, leading to mutations or cancer. Infrared radiation, with longer wavelengths and lower energy, primarily induces thermal effects, raising tissue temperature without causing direct ionization but potentially leading to burns or heat stress with prolonged exposure. Safety concerns emphasize minimizing gamma exposure due to its high ionizing potential, while infrared precautions focus on avoiding excessive heat absorption to prevent tissue damage.
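The line between ionizing and non-ionizing radiation comes down to whether a single photon carries enough energy to knock an electron out of an atom or molecule. The sketch below compares photon energies against an assumed threshold of about 12.6 eV, roughly the first ionization energy of a water molecule; real thresholds depend on the target material, so a single cutoff is a simplification, and the sample wavelengths are illustrative.

```python
H_C_EV_NM = 1239.841984  # h*c expressed in eV*nm

# Assumed ionization threshold: roughly the first ionization energy of
# water (~12.6 eV). Real thresholds depend on the target molecule.
IONIZATION_THRESHOLD_EV = 12.6


def photon_energy_ev(wavelength_nm: float) -> float:
    """Photon energy E = h*c / lambda, with lambda in nanometres."""
    return H_C_EV_NM / wavelength_nm


def is_ionizing(wavelength_nm: float,
                threshold_ev: float = IONIZATION_THRESHOLD_EV) -> bool:
    """True if one photon carries at least the assumed ionization energy."""
    return photon_energy_ev(wavelength_nm) >= threshold_ev


if __name__ == "__main__":
    samples_nm = {
        "thermal infrared (10 um)": 10_000.0,
        "near infrared (700 nm)": 700.0,
        "gamma ray (0.001 nm)": 0.001,
    }
    for label, lam in samples_nm.items():
        e = photon_energy_ev(lam)
        print(f"{label}: {e:.3g} eV, ionizing={is_ionizing(lam)}")
```

Even the most energetic infrared photon, about 1.8 eV at 700 nm, falls far short of the threshold, which is why infrared effects on tissue are thermal rather than ionizing.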

Detection Methods for Gamma and Infrared

Gamma rays are detected with scintillation detectors, semiconductor detectors, and Geiger-Müller counters, which convert high-energy photons into measurable electronic signals. Infrared detection relies on thermal detectors such as bolometers and on photodetectors such as mercury cadmium telluride (MCT) or indium antimonide (InSb) sensors, which respond to heating or to infrared photon absorption. High-resolution semiconductor gamma detectors are often cooled cryogenically to improve energy resolution; infrared photodetectors such as MCT and InSb are usually cooled as well to suppress thermal noise, and infrared detector design emphasizes sensitivity and spectral response in the mid- and long-wave IR bands.
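Scintillation and semiconductor gamma detectors sort detected pulses into numbered channels, and turning a channel number into a photon energy requires calibration against known gamma lines. A common first approximation is a straight line through two reference peaks. The sketch below uses the well-known 661.7 keV line of cesium-137 and the 1332.5 keV line of cobalt-60; the channel positions are hypothetical values chosen for illustration, and real spectrometers often need more reference points and a nonlinear fit.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class LinearCalibration:
    """Energy calibration E = gain * channel + offset, in keV."""
    gain_kev_per_channel: float
    offset_kev: float

    def energy_kev(self, channel: float) -> float:
        return self.gain_kev_per_channel * channel + self.offset_kev


def two_point_calibration(ch1: float, e1_kev: float,
                          ch2: float, e2_kev: float) -> LinearCalibration:
    """Fit a straight line through two (channel, energy) reference peaks."""
    if ch1 == ch2:
        raise ValueError("reference peaks must sit in different channels")
    gain = (e2_kev - e1_kev) / (ch2 - ch1)
    offset = e1_kev - gain * ch1
    return LinearCalibration(gain, offset)


if __name__ == "__main__":
    # Reference energies are standard gamma lines (Cs-137, Co-60);
    # the channel numbers are hypothetical peak positions.
    cal = two_point_calibration(ch1=1323.0, e1_kev=661.7,
                                ch2=2664.0, e2_kev=1332.5)
    unknown_peak_channel = 2000.0  # hypothetical measured peak
    print(f"gain = {cal.gain_kev_per_channel:.4f} keV/channel")
    print(f"peak at channel {unknown_peak_channel:.0f} "
          f"-> {cal.energy_kev(unknown_peak_channel):.1f} keV")
```

A linear model keeps the example short; checking the fit against a third known line is a simple way to see whether the detector's response is linear enough for the energies of interest.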

Utilization in Medical Field

Gamma rays penetrate deeply into tissue, which makes them useful in cancer radiotherapy, where they are aimed to destroy malignant cells with precision. Infrared radiation is used extensively in medical diagnostics and therapy, for example in infrared thermography for detecting inflammation and in infrared lamps for pain relief and improved blood circulation. The two types of radiation offer distinct advantages in medical treatment and imaging: gamma rays suit high-energy applications, while infrared supports non-invasive monitoring and recovery.
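Because a gamma source loses activity through radioactive decay, its output falls steadily over time. The sketch below applies the decay law A(t) = A0 · 2^(−t/T½); the 5.27-year half-life is that of cobalt-60, a nuclide used in some gamma radiotherapy units, while the starting activity is a hypothetical normalized value of 1.

```python
import math

CO60_HALF_LIFE_YEARS = 5.27  # cobalt-60 half-life, rounded


def activity(initial_activity: float, elapsed_years: float,
             half_life_years: float = CO60_HALF_LIFE_YEARS) -> float:
    """Remaining activity after elapsed_years: A0 * 2**(-t / T_half)."""
    return initial_activity * 2.0 ** (-elapsed_years / half_life_years)


def years_until_fraction(fraction: float,
                         half_life_years: float = CO60_HALF_LIFE_YEARS) -> float:
    """Time for the activity to fall to the given fraction of A0."""
    if not 0 < fraction <= 1:
        raise ValueError("fraction must be in (0, 1]")
    return -half_life_years * math.log2(fraction)


if __name__ == "__main__":
    A0 = 1.0  # hypothetical normalized starting activity
    for t in (0, 1, 5.27, 10):
        print(f"after {t:>5} years: {activity(A0, t):.3f} of A0")
    print(f"down to 25%: {years_until_fraction(0.25):.2f} years")
```

The same two functions work for any gamma emitter by passing a different half_life_years value.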

Industrial and Communication Uses

Gamma rays, with their extremely high frequency and energy, are used in industry for non-destructive testing and sterilization because they can penetrate dense materials. Infrared radiation is widely used in communication technologies, including fiber optic systems and remote controls, where low-energy infrared light carries the data. In industry, infrared also serves thermal imaging and quality control, whereas gamma rays serve radiographic imaging and material analysis.

Future Trends in Gamma and Infrared Research

Future trends in gamma and infrared research emphasize advancements in sensor technology and data processing algorithms to enhance detection accuracy and range. Emerging applications include space exploration, medical imaging, and environmental monitoring, leveraging improved spectral resolution and real-time analysis capabilities. Integration of artificial intelligence and machine learning models is expected to drive breakthroughs in interpreting complex gamma and infrared signals for various scientific and industrial uses.

Gamma Infographic

libterm.com
