Tensors provide an efficient way to store and manipulate multi-dimensional data, which is essential for many machine learning and deep learning applications. Understanding how tensors work simplifies complex numerical computations in image processing, natural language processing, and scientific modeling. Explore the rest of the article to discover how tensors can transform your approach to data analysis and AI development.

Table of Comparison

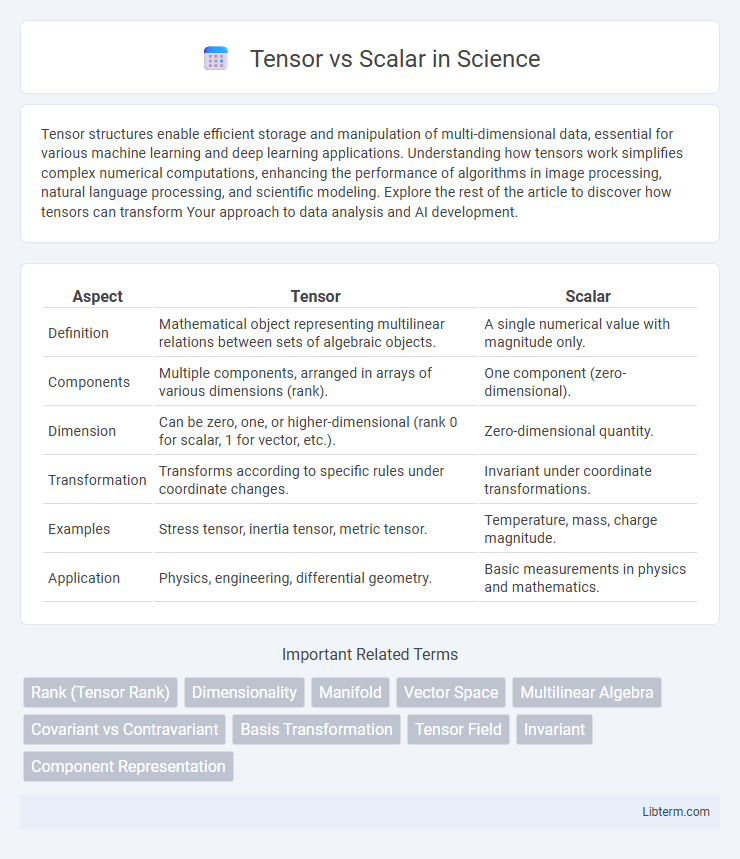

| Aspect | Tensor | Scalar |
|---|---|---|
| Definition | Mathematical object representing multilinear relations between sets of algebraic objects. | A single numerical value with magnitude only. |
| Components | Multiple components, arranged in arrays of various dimensions (rank). | One component (zero-dimensional). |
| Dimension | Can be zero, one, or higher-dimensional (rank 0 for scalar, 1 for vector, etc.). | Zero-dimensional quantity. |
| Transformation | Transforms according to specific rules under coordinate changes. | Invariant under coordinate transformations. |
| Examples | Stress tensor, inertia tensor, metric tensor. | Temperature, mass, charge magnitude. |
| Application | Physics, engineering, differential geometry. | Basic measurements in physics and mathematics. |

Understanding Scalars: Definition and Properties

Scalars are single-valued quantities characterized by magnitude alone and invariant under coordinate transformations, making them fundamental in mathematical and physical contexts. Defined as zero-rank tensors, scalars contrast with higher-rank tensors by lacking directionality, which simplifies operations such as addition and multiplication. Understanding scalar properties, including their behavior under linear transformations and their role in defining other tensor types, is essential for applications in physics, engineering, and computer science.
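The invariance described above can be checked numerically. The following is a minimal sketch, not a general proof: it uses a rotation of a 2D vector as a stand-in for a change of coordinates, and the helper `rotate` and the sample vector are hypothetical names chosen for illustration. The components of the vector change, while its length, a scalar, stays the same.

```python
import math

def rotate(vector, angle):
    """Rotate a 2D vector counterclockwise by `angle` radians (illustrative helper)."""
    x, y = vector
    c, s = math.cos(angle), math.sin(angle)
    return (c * x - s * y, s * x + c * y)

v = (3.0, 4.0)                   # sample vector, chosen for illustration
v_rot = rotate(v, math.pi / 2)   # components change under the rotation

length_before = math.hypot(*v)       # scalar computed from the original components
length_after = math.hypot(*v_rot)    # same scalar computed from the rotated components

print("components before:", v)
print("components after: ", v_rot)
print("length unchanged: ", math.isclose(length_before, length_after))
```

The components depend on the orientation chosen, but the length does not, which is the sense in which a scalar is invariant.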

What is a Tensor? Key Concepts Explained

A tensor is a mathematical object that generalizes scalars, vectors, and matrices to an arbitrary number of indices, which makes it essential for representing complex data in fields like physics and machine learning. Unlike scalars, which are zero-dimensional single values, and vectors, which are one-dimensional arrays, tensors can have any number of axes, capturing relationships and transformations within data spaces. Key concepts include tensor rank (order), meaning the number of indices needed to address one component, along with dimensions and components: a rank-2 tensor corresponds to a matrix, and higher-rank tensors enable advanced computations in neural networks and continuum mechanics.
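As a rough illustration of rank, the sketch below builds arrays of rank 0 through 3 with NumPy, which is an assumption here since the article does not prescribe a library. NumPy's `ndim` attribute counts axes, which matches what this article calls rank; it is unrelated to the linear-algebra notion of matrix rank.

```python
import numpy as np

scalar = np.array(5.0)                        # rank 0: a single value, shape ()
vector = np.array([1.0, 2.0, 3.0])            # rank 1: shape (3,)
matrix = np.array([[1.0, 2.0], [3.0, 4.0]])   # rank 2: shape (2, 2)
cube = np.zeros((2, 3, 4))                    # rank 3: shape (2, 3, 4)

for name, t in [("scalar", scalar), ("vector", vector),
                ("matrix", matrix), ("cube", cube)]:
    # ndim is the number of axes; shape lists the size along each axis
    print(f"{name}: rank={t.ndim}, shape={t.shape}")
```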

Scalars vs Tensors: Core Differences

Scalars represent single numerical values and have zero dimensions, making them the simplest form of data in mathematical operations. Tensors generalize scalars to multi-dimensional arrays, covering vectors, matrices, and the higher-dimensional data structures essential in fields like physics and machine learning. The core difference lies in rank: a scalar is a rank-0 tensor with exactly one component, whereas a general tensor can have any number of axes, enabling complex data representation and manipulation.

Mathematical Representation of Scalars and Tensors

Scalars are single numerical values represented mathematically as real numbers or 0th-order tensors, denoted simply by variables like \(a \in \mathbb{R}\). Tensors extend this concept: they are represented as multi-dimensional arrays or ordered sets of components that transform according to specific rules under coordinate changes. Vectors are 1st-order tensors and matrices are 2nd-order tensors, with the rank (order) giving the number of indices needed to label a component. The mathematical formulation of tensors involves multilinear maps or elements of tensor product spaces, providing a unified framework for scalars, vectors, and higher-order data structures.
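To make "transform according to specific rules" concrete, the standard transformation laws for a change of coordinates \(x \to x'\) can be written as follows. The symbols \(\phi\), \(v\), and \(T\) are introduced here for illustration and do not come from the article. Only contravariant (upper-index) components are shown, and repeated indices are summed over.

```latex
\[
\begin{aligned}
\text{rank 0 (scalar):} \quad & \phi'(x') = \phi(x) \\
\text{rank 1 (vector):} \quad & v'^{\,i} = \frac{\partial x'^{\,i}}{\partial x^{j}}\, v^{j} \\
\text{rank 2:} \quad & T'^{\,ij} = \frac{\partial x'^{\,i}}{\partial x^{k}}\,
                                   \frac{\partial x'^{\,j}}{\partial x^{l}}\, T^{kl}
\end{aligned}
\]
```

Each additional index contributes one more factor of the Jacobian \(\partial x'/\partial x\), while a scalar picks up none, which is why it is invariant.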

Real-World Applications of Scalars

Scalars, representing single numerical values without direction, are fundamental in real-world applications such as temperature measurement, speed calculation, and financial transactions where magnitude alone is essential. Unlike tensors, which handle multi-dimensional data and complex relationships, scalars simplify calculations in physics, engineering, and economics by providing straightforward quantifiable metrics. Practical uses include measuring scalar quantities like time, mass, and energy, enabling streamlined data processing and decision-making in everyday scenarios.

Where Are Tensors Used? From Physics to AI

Tensors are extensively used in physics to describe physical properties like stress, strain, and electromagnetic fields, providing a multi-dimensional generalization of scalars and vectors essential for representing complex phenomena. In artificial intelligence, tensors serve as the foundational data structures for neural networks, enabling efficient computation and manipulation of multidimensional data such as images, audio, and text. Machine learning frameworks like TensorFlow leverage tensors to optimize operations across CPUs and GPUs, facilitating scalable model training and inference.
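As a small illustration of how image data maps onto a tensor, the sketch below uses NumPy to build a placeholder batch of RGB images. The batch size, image size, and the channels-last layout `(batch, height, width, channels)` are assumptions chosen for the example; frameworks differ in their default layout.

```python
import numpy as np

# Hypothetical batch: 8 RGB images of 28x28 pixels, channels-last layout
batch = np.zeros((8, 28, 28, 3), dtype=np.float32)

print("rank:", batch.ndim)             # number of axes
print("shape:", batch.shape)           # (batch, height, width, channels)
print("total components:", batch.size)

# Indexing along the first axis selects one image, a rank-3 tensor
first_image = batch[0]
print("single image shape:", first_image.shape)
```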

Operations Involving Scalars and Tensors

Operations involving scalars and tensors include scalar multiplication, where each element of a tensor is multiplied by a scalar value, scaling the entire tensor. Element-wise addition or subtraction between a scalar and a tensor adds the scalar to, or subtracts it from, every element of the tensor, effectively broadcasting the scalar across the tensor's shape. These operations are fundamental in deep learning frameworks like TensorFlow and PyTorch, enabling efficient manipulation of multi-dimensional arrays for model computations.
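The operations described above can be sketched with NumPy, used here as an assumption in place of TensorFlow or PyTorch, which follow similar broadcasting conventions. The variable names and values are illustrative only.

```python
import numpy as np

tensor = np.array([[1.0, 2.0, 3.0],
                   [4.0, 5.0, 6.0]])   # rank-2 tensor, shape (2, 3)
scalar = 2.0

scaled = scalar * tensor     # scalar multiplication: every element is doubled
shifted = tensor + scalar    # broadcasting: the scalar is added to every element
reduced = tensor - scalar    # broadcasting: the scalar is subtracted from every element

print(scaled)
print(shifted)
print(reduced)
```

In each case the result keeps the shape of the original tensor, since the scalar is treated as if it were repeated at every position.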

Visualizing Scalars and Tensors

Visualizing scalars involves representing single numerical values often through color coding or intensity on a plot, enabling quick interpretation of data magnitude. Tensors, as multi-dimensional arrays, require more complex visualization techniques such as heatmaps, 3D plots, or tensor decomposition to reveal patterns across dimensions. Tools like Matplotlib, TensorBoard, and specialized libraries offer effective methods to illustrate tensor structures and scalar values in machine learning and scientific computing.

Importance of Tensors and Scalars in Machine Learning

Tensors and scalars are fundamental components in machine learning, where scalars represent single-valued quantities and tensors generalize this concept to multi-dimensional arrays crucial for handling complex data structures. Tensors enable efficient representation and manipulation of high-dimensional data such as images, audio, and video, facilitating powerful neural network computations. Understanding the distinction and efficient use of both scalars and tensors enhances model precision, scalability, and performance in machine learning applications.

Summary: Choosing Between Scalars and Tensors

Scalars represent single numerical values, making them ideal for simple calculations and situations requiring minimal data complexity. Tensors, as multidimensional arrays, efficiently handle complex data structures common in machine learning and physics applications. Selecting between scalars and tensors depends on the dimensionality and complexity of the data, with tensors offering enhanced flexibility for high-dimensional data processing.

Tensor Infographic

libterm.com