Algorithmic bias occurs when automated systems produce unfair or prejudiced outcomes due to flawed assumptions or data imbalances embedded in their design. This bias can affect decision-making across various sectors, including hiring, lending, and law enforcement, leading to unintended discrimination. The rest of this article compares algorithmic bias with filter bubbles and outlines the steps that can be taken to mitigate both.

Table of Comparison

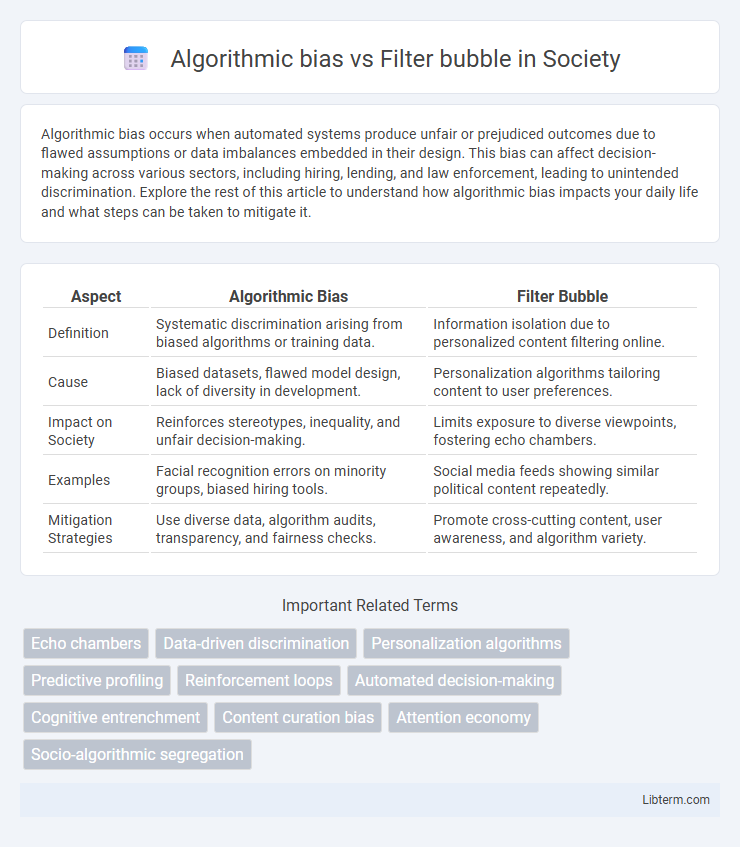

| Aspect | Algorithmic Bias | Filter Bubble |
|---|---|---|
| Definition | Systematic discrimination arising from biased algorithms or training data. | Information isolation due to personalized content filtering online. |
| Cause | Biased datasets, flawed model design, lack of diversity in development. | Personalization algorithms tailoring content to user preferences. |
| Impact on Society | Reinforces stereotypes, inequality, and unfair decision-making. | Limits exposure to diverse viewpoints, fostering echo chambers. |
| Examples | Facial recognition errors on minority groups, biased hiring tools. | Social media feeds showing similar political content repeatedly. |
| Mitigation Strategies | Use diverse data, algorithm audits, transparency, and fairness checks. | Promote cross-cutting content, user awareness, and algorithm variety. |

Understanding Algorithmic Bias

Algorithmic bias occurs when machine learning models produce systematically prejudiced outcomes due to skewed training data or flawed assumptions, impacting fairness and decision-making accuracy. Unlike filter bubbles that isolate users within echo chambers tailored by personalized content algorithms, algorithmic bias directly influences the integrity of automated systems across sectors such as hiring, lending, and law enforcement. Recognizing algorithmic bias involves examining dataset representation and model transparency to ensure equitable algorithmic behavior and mitigate unintended discriminatory effects.
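One simple way to examine dataset representation is to compare each group's share of the training data with the share you would expect to see. The sketch below is illustrative only: the function name `representation_gaps`, the group labels, and the 50/50 reference shares are all assumptions made for the example, not part of any particular library or standard.

```python
from collections import Counter


def representation_gaps(groups, reference_shares):
    """Return each group's share in the dataset minus its reference share.

    A positive value means the group is over-represented relative to the
    reference; a negative value means it is under-represented.
    """
    if not groups:
        raise ValueError("groups must not be empty")
    counts = Counter(groups)
    total = len(groups)
    return {
        group: counts.get(group, 0) / total - expected
        for group, expected in reference_shares.items()
    }


# Hypothetical data: group labels attached to 10 training records.
sample = ["a"] * 8 + ["b"] * 2
gaps = representation_gaps(sample, {"a": 0.5, "b": 0.5})
for group, gap in gaps.items():
    print(f"{group}: {gap:+.2f}")
```

A large gap does not prove that a model will behave unfairly, but it flags where the data may need rebalancing or closer review.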

What is a Filter Bubble?

A filter bubble is a personalized information environment created by algorithms that selectively present content based on a user's previous online behavior, interests, and preferences. This phenomenon limits exposure to diverse perspectives by continuously reinforcing existing beliefs and opinions, potentially leading to echo chambers. Unlike algorithmic bias, which refers to systematic errors in algorithms that produce unfair outcomes, a filter bubble specifically pertains to the isolating effect of personalized content curation on user experience.
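How narrow a feed has become can be estimated, roughly, by measuring how evenly its items are spread across topics. The sketch below uses Shannon entropy for this; the topic labels and the two sample feeds are hypothetical, and real platforms may measure diversity quite differently.

```python
import math
from collections import Counter


def topic_entropy(topics):
    """Shannon entropy, in bits, of the topic distribution in a feed.

    0.0 means every item shares one topic; higher values mean the feed
    is spread more evenly across more topics.
    """
    counts = Counter(topics)
    total = len(topics)
    shares = (n / total for n in counts.values())
    return sum(p * math.log2(1 / p) for p in shares)


# Hypothetical feeds: one dominated by a single topic, one evenly mixed.
narrow_feed = ["politics"] * 8
mixed_feed = ["politics", "sports", "science", "arts"] * 2

print(f"narrow feed: {topic_entropy(narrow_feed):.2f} bits")
print(f"mixed feed:  {topic_entropy(mixed_feed):.2f} bits")
```

Tracking a score like this over time for the same user would show whether personalization is steadily shrinking the range of content they see.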

Core Differences Between Algorithmic Bias and Filter Bubbles

Algorithmic bias refers to systematic and unfair discrimination embedded in data-driven algorithms that cause skewed or prejudiced outcomes, often reflecting societal inequalities. In contrast, filter bubbles arise from personalized content algorithms that isolate users within closed information environments, reinforcing existing beliefs and reducing exposure to diverse perspectives. The core difference lies in algorithmic bias producing unjust results affecting fairness, while filter bubbles primarily limit information variety, impacting knowledge diversity.

How Algorithms Shape Online Experiences

Algorithms shape online experiences by personalizing content based on user data. When the underlying data or ranking criteria are skewed, the result is algorithmic bias, in which certain perspectives or groups are unfairly prioritized or excluded. When personalization repeatedly narrows what a user sees, the result is a filter bubble that isolates users in echo chambers, reinforces existing beliefs, and limits diverse viewpoints. Understanding both mechanisms is crucial for developing more transparent and equitable digital platforms.

Causes of Algorithmic Bias

Algorithmic bias primarily arises from training data that reflects historical inequalities or societal prejudices, leading to skewed outputs in artificial intelligence systems. Incomplete or non-representative datasets, along with biased design choices made by developers, exacerbate these disparities. Unlike filter bubbles, which result from personalized content curation based on user behavior, algorithmic bias stems directly from flaws in data and algorithm development processes.
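To see how historical inequality can sit inside training data, one can compare the rate of favorable labels across groups before any model is trained. The following is a minimal sketch under stated assumptions: the records, the group names, and the helper names `positive_rates` and `rate_ratio` are invented for illustration.

```python
from collections import defaultdict


def positive_rates(records):
    """Share of favorable labels per group.

    records: iterable of (group, label) pairs, where label is 1 for a
    favorable outcome (for example, "hired") and 0 otherwise.
    """
    totals = defaultdict(int)
    positives = defaultdict(int)
    for group, label in records:
        totals[group] += 1
        positives[group] += label
    return {group: positives[group] / totals[group] for group in totals}


def rate_ratio(rates):
    """Lowest group rate divided by the highest; 1.0 means equal rates."""
    highest = max(rates.values())
    return min(rates.values()) / highest if highest > 0 else 1.0


# Hypothetical historical hiring records, not real data.
history = (
    [("group_x", 1)] * 6 + [("group_x", 0)] * 4
    + [("group_y", 1)] * 3 + [("group_y", 0)] * 7
)
rates = positive_rates(history)
print(rates)
print(f"ratio of lowest to highest rate: {rate_ratio(rates):.2f}")
```

A model trained to imitate labels like these is likely to reproduce the gap unless the imbalance is addressed, which is why this kind of check is usually run before training rather than after deployment.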

Consequences of Living in a Filter Bubble

Living in a filter bubble amplifies confirmation bias by exposing users predominantly to information that aligns with their existing beliefs, limiting diverse perspectives and critical thinking. This reduction in viewpoint diversity fuels political polarization and misinformation spread, as individuals become less likely to challenge or verify the content they encounter. Long-term consequences include diminished social cohesion and an increased risk of societal fragmentation due to entrenched ideological silos.

Real-World Examples: Bias vs Bubbles

Algorithmic bias manifests in real-world scenarios such as facial recognition systems that misidentify members of minority groups at higher rates, while filter bubbles are evident on social media platforms like Facebook, where users receive homogenized content that reinforces their existing beliefs. For example, an experimental résumé-screening tool developed at Amazon was reported in 2018 to favor male candidates, demonstrating how bias undermines fairness, whereas YouTube's recommendation system can trap users in content bubbles, limiting exposure to diverse perspectives. Both phenomena highlight the challenges of designing algorithms that promote equity and informational diversity.

The Role of Social Media Platforms

Social media platforms play a crucial role in both phenomena. Their personalized algorithms prioritize content based on user behavior, which can reinforce existing prejudices and amplify biased content within user networks. The same selective curation produces filter bubbles: isolated echo chambers that restrict users' access to contrasting viewpoints. Together, these dynamics intensify social polarization and hamper informed decision-making.

Combating Algorithmic Bias and Filter Bubbles

Combating algorithmic bias and filter bubbles requires transparent machine learning models and diverse data sets to reduce skewed outputs and echo chambers. Techniques such as fairness-aware algorithms and user-controlled personalization help mitigate biased recommendations and improve content diversity. Continuous monitoring and bias audits help developers detect skewed behavior, while digital literacy empowers users to recognize and challenge algorithmic distortions.
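One way to improve content diversity is to re-rank a personalized list so that no single topic dominates the top results. The sketch below shows a simple greedy version with a per-topic cap; the function name `rerank_with_topic_cap`, the item IDs, and the topic labels are hypothetical, and the cap stands in for a setting that user-controlled personalization might expose.

```python
def rerank_with_topic_cap(ranked_items, max_per_topic, k):
    """Pick up to k items from a relevance-ordered list with a topic cap.

    ranked_items: list of (item_id, topic) pairs, most relevant first.
    Items are taken in order, skipping any whose topic has already
    reached max_per_topic.
    """
    picked = []
    per_topic = {}
    for item_id, topic in ranked_items:
        if len(picked) >= k:
            break
        if per_topic.get(topic, 0) < max_per_topic:
            picked.append((item_id, topic))
            per_topic[topic] = per_topic.get(topic, 0) + 1
    return picked


# Hypothetical personalized ranking, most relevant first.
ranking = [
    ("p1", "politics"),
    ("p2", "politics"),
    ("p3", "politics"),
    ("p4", "sports"),
    ("p5", "science"),
    ("p6", "arts"),
]
feed = rerank_with_topic_cap(ranking, max_per_topic=1, k=3)
print(feed)
```

The trade-off is deliberate: a tighter cap gives up some predicted relevance in exchange for broader exposure, and the right balance depends on the platform and its users.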

Future Implications for Digital Society

Algorithmic bias threatens digital fairness by perpetuating stereotypes and unequal treatment in AI-driven systems, impacting social equity and trust. Filter bubbles narrow information exposure, reinforcing ideological silos that hinder diverse perspectives and democratic discourse. Addressing these issues is critical for developing transparent algorithms and inclusive platforms that promote a balanced, open digital society.

Algorithmic bias Infographic

libterm.com
