Scalability is a system's ability to handle growing workloads without losing performance or stability. It depends on an architecture that accommodates growth in users, data, and transactions while staying responsive. This article compares scalability with anti-affinity and explains how the two work together.

Table of Comparison

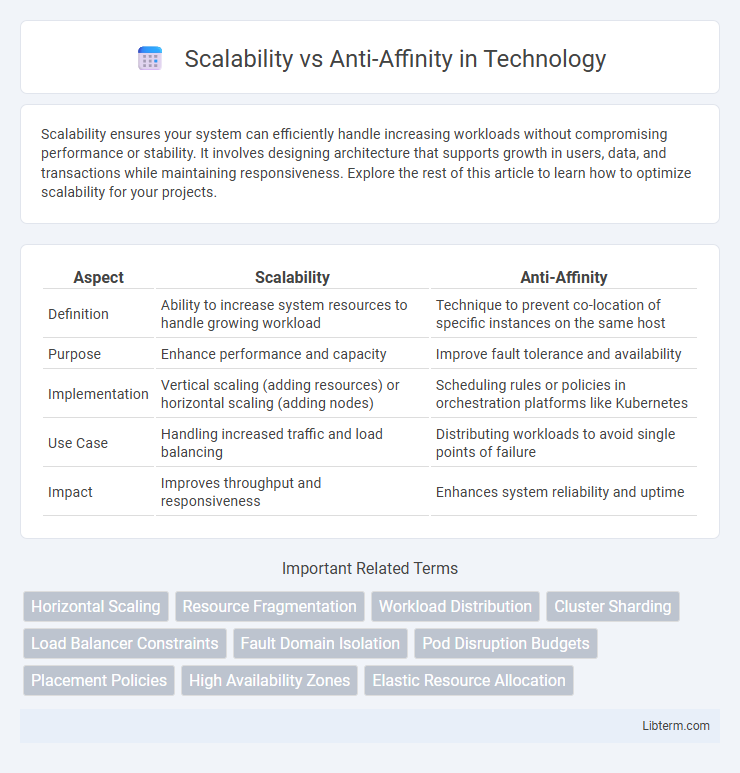

| Aspect | Scalability | Anti-Affinity |
|---|---|---|
| Definition | Ability to increase system resources to handle growing workload | Technique to prevent co-location of specific instances on the same host |
| Purpose | Enhance performance and capacity | Improve fault tolerance and availability |
| Implementation | Vertical scaling (adding resources) or horizontal scaling (adding nodes) | Scheduling rules or policies in orchestration platforms like Kubernetes |
| Use Case | Handling increased traffic and load balancing | Distributing workloads to avoid single points of failure |
| Impact | Improves throughput and responsiveness | Enhances system reliability and uptime |

Understanding Scalability in Distributed Systems

Scalability in distributed systems refers to the ability to efficiently handle increasing workloads by adding resources, such as servers or nodes, without compromising performance. Anti-affinity policies prevent co-locating specific instances of applications or services on the same physical host to avoid resource contention and single points of failure. Balancing scalability with anti-affinity ensures robust, fault-tolerant architectures that can grow seamlessly while maintaining high availability and resilience.

Defining Anti-Affinity and Its Importance

Anti-affinity is a scheduling policy that prevents specific workloads or containers from running on the same physical host or node, enhancing fault tolerance and resource distribution. This strategy mitigates risks of correlated failures and improves uptime by ensuring critical applications are distributed across multiple servers. Scalability benefits from anti-affinity by maintaining balanced resource allocation and avoiding bottlenecks caused by clustered deployments.
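As a rough illustration of what an anti-affinity policy rules out, the sketch below audits a list of placements and reports every node that hosts more than one instance of the same application. The function name, the `(app, node)` tuple format, and the node names are assumptions made for this example and do not come from any particular orchestrator.

```python
from collections import defaultdict


def anti_affinity_violations(placements: list[tuple[str, str]]) -> dict[str, list[str]]:
    """Map each app to the nodes that host more than one of its instances."""
    # Count instances per (app, node) pair.
    counts: dict[tuple[str, str], int] = defaultdict(int)
    for app, node in placements:
        counts[(app, node)] += 1

    # A pair with more than one instance breaks a per-node anti-affinity rule.
    violations: dict[str, list[str]] = defaultdict(list)
    for (app, node), instances in counts.items():
        if instances > 1:
            violations[app].append(node)
    return dict(violations)


# Hypothetical placement: two "web" instances share node-a.
placements = [
    ("web", "node-a"),
    ("web", "node-a"),
    ("web", "node-b"),
    ("db", "node-b"),
]
print(anti_affinity_violations(placements))  # {'web': ['node-a']}
```

Different applications sharing a node (here `web` and `db` on `node-b`) are not flagged, because the rule in this sketch only separates instances of the same application.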

Key Differences Between Scalability and Anti-Affinity

Scalability refers to a system's ability to handle increased load by adding resources like servers or instances, ensuring performance growth and resource optimization. Anti-affinity, on the other hand, is a scheduling policy used in distributed systems or Kubernetes clusters to keep certain workloads or pods on separate nodes for fault tolerance and to minimize risk of simultaneous failures. The key difference lies in scalability enhancing resource capacity, while anti-affinity manages workload distribution to improve reliability and avoid resource contention.

How Scalability Impacts Application Performance

Scalability improves application performance by letting a system absorb increasing workloads through resource expansion, either vertical or horizontal. Done well, it keeps response times consistent and prevents bottlenecks during traffic spikes. Anti-affinity rules complement this: by distributing instances across different nodes, they reduce single points of failure and improve fault tolerance, which helps keep application performance steady under load.
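For horizontal scaling, a back-of-the-envelope sizing calculation can make the idea concrete. The sketch below estimates how many replicas are needed for a given peak load; the function name, the 20% headroom default, and the traffic figures are illustrative assumptions, not measurements.

```python
import math


def replicas_needed(peak_rps: float, rps_per_replica: float, headroom: float = 0.2) -> int:
    """Replicas required to serve peak_rps while keeping `headroom` spare capacity."""
    if rps_per_replica <= 0 or not 0 <= headroom < 1:
        raise ValueError("rps_per_replica must be positive and headroom in [0, 1)")
    # Only a fraction of each replica's capacity is planned for use.
    usable_rps = rps_per_replica * (1 - headroom)
    return math.ceil(peak_rps / usable_rps)


# Hypothetical numbers: 1000 requests/s at peak, 150 requests/s per replica.
print(replicas_needed(1000, 150))  # 9
```

Real capacity rarely grows perfectly linearly with replica count, so a figure like this is a starting point to validate with load testing.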

Anti-Affinity Strategies for High Availability

Anti-affinity strategies enhance high availability by ensuring that instances of the same application or service are deployed on separate physical hosts or failure domains, reducing the risk of simultaneous downtime. This approach prevents resource contention and single points of failure by isolating workloads, which improves fault tolerance in distributed systems. Implementing anti-affinity rules in orchestration platforms like Kubernetes or OpenStack optimizes resource allocation and maintains service continuity during scaling operations.
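The effect of spreading across failure domains can be sketched with a small model: distribute replicas round-robin over zones, then ask how many survive if the busiest zone goes down. The zone names and helper functions are hypothetical, and a real scheduler weighs many more factors than this.

```python
from collections import Counter
from itertools import cycle


def spread_across_zones(replicas: int, zones: list[str]) -> Counter:
    """Assign replicas to zones in round-robin order."""
    zone_cycle = cycle(zones)
    return Counter(next(zone_cycle) for _ in range(replicas))


def worst_case_survivors(placement: Counter) -> int:
    """Replicas left running if the most heavily loaded zone fails."""
    return sum(placement.values()) - max(placement.values())


spread = spread_across_zones(6, ["zone-a", "zone-b", "zone-c"])
packed = Counter({"zone-a": 6})  # same replica count, all in one zone

print(worst_case_survivors(spread))  # 4
print(worst_case_survivors(packed))  # 0
```

With the same six replicas, the spread placement keeps four running after a zone outage, while the packed placement loses all of them.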

Balancing Scalability with Anti-Affinity Rules

Balancing scalability with anti-affinity rules means distributing workloads across multiple nodes to prevent resource contention and ensure high availability while still letting the system scale efficiently. Anti-affinity policies keep certain workloads from sharing hardware, which improves fault tolerance but limits deployment density: under a strict one-replica-per-node rule, the replica count cannot exceed the number of eligible nodes, and additional replicas stay unscheduled until capacity is added. Optimizing this balance requires deliberate resource allocation and dynamic orchestration tools like Kubernetes to maintain performance without sacrificing resilience.
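The trade-off between strict and relaxed rules can be shown with a toy scheduler. This is a simplified sketch, not how any real scheduler is implemented: the `schedule` function and its `required` flag are assumptions for illustration, with `required=True` standing in for a hard rule and `required=False` for a soft preference.

```python
def schedule(replicas: int, node_names: list[str], required: bool) -> tuple[dict[str, int], int]:
    """Place replicas of one app; return (replicas per node, unschedulable count)."""
    counts = {name: 0 for name in node_names}
    pending = 0
    for _ in range(replicas):
        # Both modes favor the node holding the fewest replicas of this app.
        target = min(counts, key=counts.get)
        if required and counts[target] > 0:
            # Hard rule: every node already hosts a replica, so this one waits.
            pending += 1
        else:
            counts[target] += 1
    return counts, pending


nodes = ["n1", "n2", "n3"]
print(schedule(5, nodes, required=True))   # ({'n1': 1, 'n2': 1, 'n3': 1}, 2)
print(schedule(5, nodes, required=False))  # ({'n1': 2, 'n2': 2, 'n3': 1}, 0)
```

The hard rule guarantees one replica per node but leaves two replicas unscheduled on a three-node cluster; the soft rule places all five at the cost of some co-location.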

Common Challenges in Implementing Scalability

Scaling a system often runs into challenges such as resource contention and uneven workload distribution, which anti-affinity rules aim to address by preventing co-location of critical services on the same node. Managing pod placement policies in Kubernetes or similar orchestration platforms requires careful tuning to balance scalability with fault tolerance and performance. Misconfigured anti-affinity cuts both ways: rules that are too loose let replicas pile onto a few nodes, causing overload and reduced availability, while rules that are too strict can leave new replicas unschedulable, undermining the benefits of horizontal scaling.

Best Practices for Anti-Affinity Configuration

To keep anti-affinity compatible with scaling, define pod anti-affinity rules consistently, selecting pods by application label and choosing a topology key that matches the failure domain you care about, such as the node (`kubernetes.io/hostname`) or the zone (`topology.kubernetes.io/zone`). Use weighted (preferred) pod anti-affinity where a hard requirement would be too strict: the scheduler then favors spreading but can still place pods as the cluster grows. Monitor cluster resource usage regularly and adjust anti-affinity settings to maintain high availability and efficient workload distribution.
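As a sketch of what a weighted rule looks like in Kubernetes, the snippet below builds the `affinity` section of a pod spec as a Python dictionary and prints it as JSON. The field names follow the Kubernetes pod spec; the helper function, the `app=web` label, and the chosen weight are assumptions for illustration.

```python
import json


def pod_anti_affinity(app_label: str, topology_key: str, weight: int) -> dict:
    """Build a soft (preferred) podAntiAffinity stanza for pods labelled app=<app_label>."""
    # Kubernetes accepts weights from 1 to 100 for preferred terms.
    if not 1 <= weight <= 100:
        raise ValueError("weight must be between 1 and 100")
    return {
        "podAntiAffinity": {
            "preferredDuringSchedulingIgnoredDuringExecution": [
                {
                    "weight": weight,
                    "podAffinityTerm": {
                        # Pods matching this selector repel each other.
                        "labelSelector": {"matchLabels": {"app": app_label}},
                        # The domain within which co-location is discouraged.
                        "topologyKey": topology_key,
                    },
                }
            ]
        }
    }


# Hypothetical workload: prefer spreading "web" pods across zones.
affinity = pod_anti_affinity("web", "topology.kubernetes.io/zone", 100)
print(json.dumps({"affinity": affinity}, indent=2))
```

The printed structure belongs under `spec.template.spec` in a Deployment. Switching the topology key to `kubernetes.io/hostname` would discourage co-location per node instead of per zone.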

Real-World Use Cases: Scalability vs Anti-Affinity

Scalability ensures systems handle increased loads by adding resources, vital for applications like e-commerce platforms during high traffic. Anti-affinity policies prevent critical services from running on the same physical host, reducing risks of failure in multi-tenant environments such as cloud-hosted databases. Combining scalability with anti-affinity enhances resilience and performance in real-world deployments like distributed microservices and container orchestration.

Choosing the Right Approach for Your Infrastructure

Scalability strategies adjust resources to match demand, while anti-affinity rules prevent co-location of critical components to enhance fault tolerance. The right emphasis depends on infrastructure goals: prioritize scalability to handle variable demand, and use anti-affinity to reduce the risk of simultaneous failures. Combining both improves resilience and performance in distributed systems by balancing load and ensuring redundancy.

libterm.com
