Load balancing distributes network traffic or computing tasks across multiple servers or resources to optimize performance and avoid overload. Efficient load balancing minimizes downtime and keeps applications responsive under varying loads.

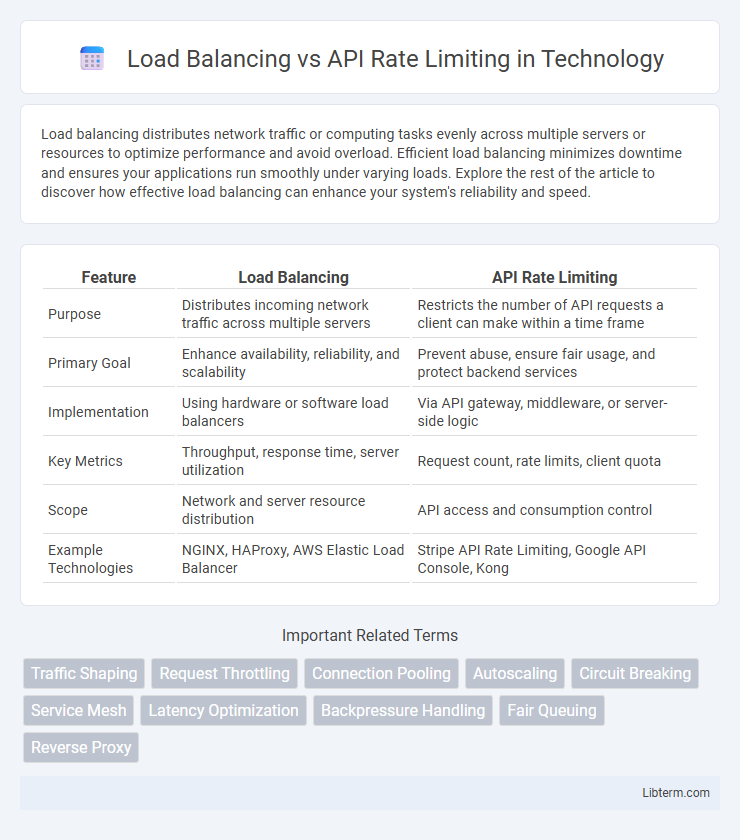

Table of Comparison

| Feature | Load Balancing | API Rate Limiting |
|---|---|---|
| Purpose | Distributes incoming network traffic across multiple servers | Restricts the number of API requests a client can make within a time frame |
| Primary Goal | Enhance availability, reliability, and scalability | Prevent abuse, ensure fair usage, and protect backend services |
| Implementation | Using hardware or software load balancers | Via API gateway, middleware, or server-side logic |
| Key Metrics | Throughput, response time, server utilization | Request count, rate limits, client quota |
| Scope | Network and server resource distribution | API access and consumption control |
| Example Technologies | NGINX, HAProxy, AWS Elastic Load Balancer | Stripe API Rate Limiting, Google API Console, Kong |

Introduction to Load Balancing and API Rate Limiting

Load balancing distributes incoming network traffic across multiple servers to ensure reliability and optimize resource use, improving application performance and availability. API rate limiting controls the number of requests a client can make within a specific time frame, protecting backend services from overload and ensuring fair usage. Both techniques enhance system stability but address different aspects of traffic management and resource protection.

Defining Load Balancing: Role and Importance

Load balancing evenly distributes incoming network traffic across multiple servers to ensure no single server becomes overwhelmed, enhancing system reliability and performance. It plays a critical role in maintaining high availability by preventing server overload and enabling fault tolerance within distributed systems. Efficient load balancing optimizes resource utilization, reduces latency, and supports scalable application deployment in cloud and data center environments.

Understanding API Rate Limiting: Concepts and Use Cases

API rate limiting controls the number of requests a client can make to an API within a specified timeframe to prevent abuse and ensure fair resource allocation. Common use cases include protecting backend services from overload, maintaining service availability during traffic spikes, and enforcing subscription or access tiers for different users. Limits are typically keyed by IP address, user token, or API key, with thresholds set high enough that legitimate users are not affected while abusive traffic is still cut off.
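As a rough illustration of keying limits by client, the sketch below counts requests per identifier in fixed time windows. The class name `FixedWindowLimiter`, its parameters, and the in-memory dictionary are assumptions made for this example; a real deployment would more likely keep counters in a shared store and expire old windows.

```python
import time
from collections import defaultdict


class FixedWindowLimiter:
    """Hypothetical per-client limiter: at most `limit` requests per window."""

    def __init__(self, limit, window_seconds, clock=time.time):
        self.limit = limit
        self.window_seconds = window_seconds
        self._clock = clock  # injectable so the limiter can be tested without sleeping
        # (client_key, window_index) -> requests seen in that window.
        # Old windows are never evicted here; this is a sketch, not production code.
        self._counts = defaultdict(int)

    def allow(self, client_key):
        """Return True if this client may make another request right now."""
        window_index = int(self._clock() // self.window_seconds)
        bucket = (client_key, window_index)
        if self._counts[bucket] >= self.limit:
            return False
        self._counts[bucket] += 1
        return True
```

The `client_key` can be whatever identifies the caller: an IP address, a user token, or an API key. Each key gets its own counter, so one noisy client does not consume another client's quota.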

Key Differences Between Load Balancing and Rate Limiting

Load balancing distributes incoming network traffic across multiple servers to optimize resource use, maximize throughput, and minimize response time, enhancing system availability and reliability. API rate limiting controls the number of API requests a user or client can make within a specified time frame to protect the system from abuse, prevent overload, and ensure fair usage. The key difference is purpose: load balancing focuses on traffic distribution and fault tolerance, while rate limiting emphasizes access control and abuse prevention.

Common Algorithms for Load Balancing

Common algorithms for load balancing include Round Robin, which distributes requests sequentially across servers; Least Connections, which directs traffic to the server with the fewest active connections; and IP Hash, which assigns requests based on the client's IP address so that a given client keeps reaching the same server (session persistence) as long as the server pool does not change. Round Robin and Least Connections aim to spread work across servers, while IP Hash trades some evenness for stickiness. Understanding these algorithms helps distinguish load balancing, which manages where requests go, from API rate limiting, which controls how often requests are accepted.
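The three selection strategies can be sketched in a few lines each. This is a minimal illustration rather than how any particular load balancer implements them; the class names and the server labels in the usage note are made up for the example.

```python
import hashlib
import itertools


class RoundRobinBalancer:
    """Hands out servers in a fixed rotating order."""

    def __init__(self, servers):
        self._cycle = itertools.cycle(list(servers))

    def pick(self):
        return next(self._cycle)


class LeastConnectionsBalancer:
    """Picks the server that currently has the fewest active connections."""

    def __init__(self, servers):
        self.active = {server: 0 for server in servers}

    def acquire(self):
        # min() returns the first minimum, so ties go to the earliest-listed server.
        server = min(self.active, key=self.active.get)
        self.active[server] += 1
        return server

    def release(self, server):
        # Call when a connection to `server` closes.
        self.active[server] -= 1


class IPHashBalancer:
    """Maps a client IP to a server; the same IP always maps to the same server."""

    def __init__(self, servers):
        self.servers = list(servers)

    def pick(self, client_ip):
        # A stable hash is used because Python's built-in hash() of strings
        # varies between processes.
        digest = hashlib.sha256(client_ip.encode("utf-8")).digest()
        index = int.from_bytes(digest[:8], "big") % len(self.servers)
        return self.servers[index]
```

For example, `RoundRobinBalancer(["a", "b", "c"])` yields `a`, `b`, `c`, `a` on four successive `pick()` calls. Note that the modulo-based IP hash remaps most clients whenever the server list changes size.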

Popular Strategies for API Rate Limiting

Popular strategies for API rate limiting include the token bucket, fixed window, sliding window, and leaky bucket algorithms, each designed to cap the number of API requests within a specified timeframe. A token bucket refills at a steady rate up to a fixed capacity and each request spends a token, so short bursts up to the bucket's capacity are allowed while the long-run average rate stays bounded. A fixed window counts requests within discrete time intervals and resets the count at each boundary. Sliding window and leaky bucket smooth this out by tracking requests over a rolling time period or by processing requests at a fixed rate, which makes them effective for fluctuating traffic patterns.
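To make two of these strategies concrete, here is a minimal single-process sketch of a token bucket and a sliding-window log. The class names, parameters, and injectable `clock` argument are assumptions for illustration; the sketch ignores thread safety and distributed state.

```python
import time
from collections import deque


class TokenBucket:
    """Refills `rate` tokens per second up to `capacity`; each request spends tokens."""

    def __init__(self, rate, capacity, clock=time.monotonic):
        self.rate = rate
        self.capacity = capacity
        self.tokens = float(capacity)  # start full, so an initial burst is allowed
        self._clock = clock
        self._last = clock()

    def allow(self, cost=1.0):
        now = self._clock()
        # Add the tokens earned since the last call, capped at capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self._last) * self.rate)
        self._last = now
        if self.tokens >= cost:
            self.tokens -= cost
            return True
        return False


class SlidingWindowLog:
    """Allows at most `limit` requests in any rolling `window_seconds` period."""

    def __init__(self, limit, window_seconds, clock=time.monotonic):
        self.limit = limit
        self.window_seconds = window_seconds
        self._clock = clock
        self._stamps = deque()  # timestamps of accepted requests, oldest first

    def allow(self):
        now = self._clock()
        # Drop timestamps that have fallen out of the rolling window.
        while self._stamps and self._stamps[0] <= now - self.window_seconds:
            self._stamps.popleft()
        if len(self._stamps) >= self.limit:
            return False
        self._stamps.append(now)
        return True
```

The token bucket stores only two numbers per client, whereas the log keeps one timestamp per accepted request. That extra memory is the cost of the log's exact rolling-window behavior.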

Benefits of Implementing Load Balancing

Implementing load balancing enhances system reliability by distributing incoming traffic evenly across multiple servers, preventing any single server from becoming a bottleneck. It improves application responsiveness and uptime, ensuring seamless user experiences during traffic surges or hardware failures. Load balancing also supports scalability, allowing infrastructure to grow efficiently while maintaining optimal performance levels.

Advantages and Challenges of API Rate Limiting

API rate limiting enhances security by preventing abuse and ensuring fair resource distribution, which reduces server overload and maintains service availability. Challenges include accurately defining limits to avoid restricting legitimate user access and managing the complexity of enforcing limits across distributed systems. Effective rate limiting requires continuous monitoring and adjustment to balance performance and user experience while protecting APIs from malicious activities.

Choosing Between Load Balancing and Rate Limiting for Your Application

Load balancing improves application performance by distributing incoming traffic across multiple servers, enhancing scalability and fault tolerance. API rate limiting protects backend systems from overload and abuse by restricting the number of requests a client can make within a specific timeframe. The two are complementary rather than mutually exclusive, so the choice depends on which problem your application faces: prioritize load balancing when the main concern is handling high traffic volumes smoothly, and add rate limiting when you need to safeguard resources and ensure fair usage.

Best Practices for Combining Load Balancing and Rate Limiting

Implementing load balancing alongside API rate limiting ensures efficient distribution of client requests while preventing system overload and abuse. Best practices include configuring rate limits at the API gateway level with awareness of load balancer policies, employing consistent hashing to maintain session affinity, and monitoring traffic patterns to dynamically adjust limits and optimize resource utilization. Combining these techniques helps maintain high availability, protect backend services, and enhance user experience through balanced traffic management and fair usage enforcement.
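The consistent hashing mentioned above can be sketched as a hash ring with virtual nodes. This is an illustrative toy, not the scheme used by any specific load balancer; `HashRing`, `replicas`, and the key format are assumptions made for the example.

```python
import bisect
import hashlib


def _hash(value):
    """Stable 64-bit hash of a string (the built-in hash() varies per process)."""
    return int.from_bytes(hashlib.sha256(value.encode("utf-8")).digest()[:8], "big")


class HashRing:
    """Hypothetical consistent-hash ring mapping session keys to servers."""

    def __init__(self, servers, replicas=100):
        # Each server is placed on the ring at `replicas` pseudo-random points
        # so that load is spread more evenly between servers.
        self._ring = sorted(
            (_hash(f"{server}#{i}"), server)
            for server in servers
            for i in range(replicas)
        )
        self._points = [point for point, _ in self._ring]

    def pick(self, session_key):
        if not self._ring:
            raise ValueError("no servers configured")
        # Walk clockwise to the first point after the key's hash, wrapping around.
        index = bisect.bisect_right(self._points, _hash(session_key)) % len(self._ring)
        return self._ring[index][1]
```

Unlike a plain modulo hash, removing a server from the ring only reassigns the sessions that lived on that server. Sessions on the remaining servers keep their affinity, which also keeps any per-server rate-limit state relevant.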

Load Balancing Infographic

libterm.com