Queuing is a fundamental process in computer science and everyday life for managing tasks in an organized, first-in, first-out manner. It optimizes resource allocation and ensures fair access to shared services, enhancing system performance and user experience. Discover how effective queuing strategies can improve your operations by exploring the rest of this article.

Table of Comparison

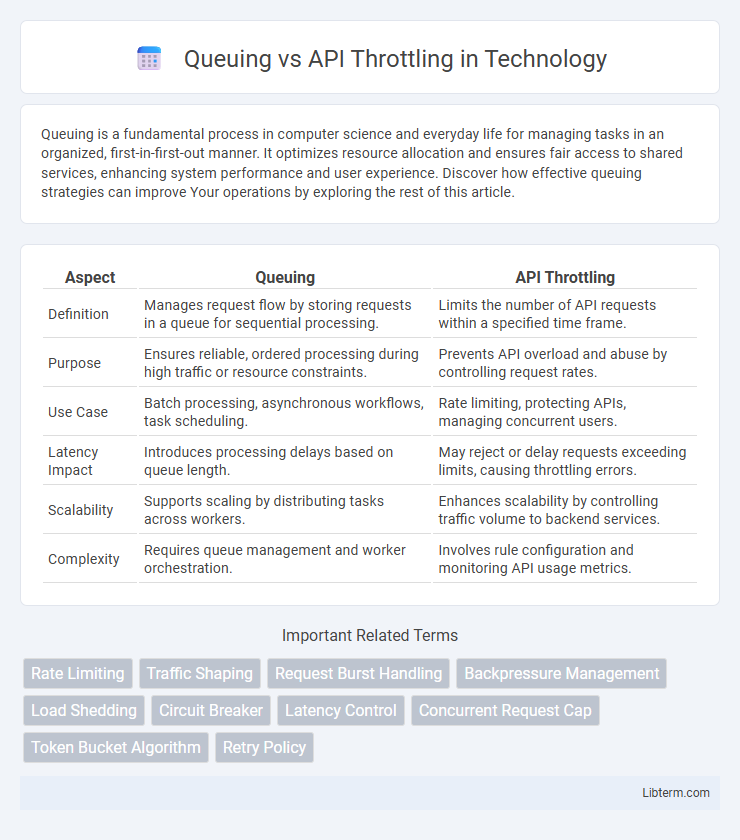

| Aspect | Queuing | API Throttling |
|---|---|---|
| Definition | Manages request flow by storing requests in a queue for sequential processing. | Limits the number of API requests within a specified time frame. |
| Purpose | Ensures reliable, ordered processing during high traffic or resource constraints. | Prevents API overload and abuse by controlling request rates. |
| Use Case | Batch processing, asynchronous workflows, task scheduling. | Rate limiting, protecting APIs, managing concurrent users. |
| Latency Impact | Introduces processing delays based on queue length. | May reject or delay requests exceeding limits, causing throttling errors. |
| Scalability | Supports scaling by distributing tasks across workers. | Enhances scalability by controlling traffic volume to backend services. |
| Complexity | Requires queue management and worker orchestration. | Involves rule configuration and monitoring API usage metrics. |

Introduction to Queuing and API Throttling

Queuing manages the orderly processing of tasks by placing requests in a waiting line to be executed sequentially, ensuring system stability under heavy load. API throttling controls the rate of incoming API calls by limiting the number of requests a client can make within a specified time frame, preventing server overload and abuse. Both techniques optimize resource usage and enhance application performance by managing traffic flow effectively.

Understanding API Throttling

API throttling controls the rate of incoming requests by limiting the number of calls a client can make within a defined time window, ensuring server stability and preventing abuse. Unlike queuing, which holds and processes requests sequentially, throttling rejects or delays excess requests immediately once limits are exceeded. Implementing API throttling improves system reliability, enhances performance, and enforces fair resource usage among users.
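
As a rough illustration of limiting calls per client within a time window, the sketch below uses a fixed-window counter. This is only one of several possible throttling strategies, and the class name `FixedWindowThrottle`, its parameters, and the injectable `clock` are assumptions made for this example, not part of any particular API gateway.

```python
import time
from collections import defaultdict


class FixedWindowThrottle:
    """Allow at most `limit` calls per client in each `window_seconds` window."""

    def __init__(self, limit, window_seconds, clock=time.monotonic):
        self.limit = limit
        self.window_seconds = window_seconds
        self.clock = clock  # injectable so the example runs without sleeping
        # client_id -> (window index, calls counted in that window)
        self._windows = defaultdict(lambda: (-1, 0))

    def allow(self, client_id):
        """Return True if the call is within the limit, False if it is rejected."""
        window = int(self.clock() // self.window_seconds)
        seen_window, count = self._windows[client_id]
        if seen_window != window:
            count = 0  # a new window has started, so the counter resets
        if count >= self.limit:
            self._windows[client_id] = (window, count)
            return False
        self._windows[client_id] = (window, count + 1)
        return True


# Demo with a hand-controlled clock (hypothetical values).
now = [0.0]
throttle = FixedWindowThrottle(limit=3, window_seconds=60, clock=lambda: now[0])
print([throttle.allow("client-a") for _ in range(5)])  # [True, True, True, False, False]
now[0] = 61.0  # move into the next window
print(throttle.allow("client-a"))  # True
```

In an HTTP API, a rejected call would typically be answered with status 429 (Too Many Requests) so that the client knows to retry later.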

What is Queuing in API Management?

Queuing in API management refers to the process of temporarily holding incoming API requests in a queue when the system experiences high traffic or limited resource availability, ensuring controlled and orderly processing. This method helps prevent server overload and maintains consistent performance by managing request bursts efficiently. By prioritizing and scheduling requests, queuing enhances reliability and user experience during peak demand periods.
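
A minimal sketch of this idea, using Python's standard `queue` and `threading` modules, might look as follows. The `handle` function, the queue size of 100, and the request names are placeholders chosen for illustration; a real deployment would more likely use a message broker and several workers.

```python
import queue
import threading

# Bounded queue so a burst cannot grow memory without limit (size is an assumption).
request_queue = queue.Queue(maxsize=100)
processed = []


def handle(request):
    """Hypothetical handler; a real one would call the backend service."""
    processed.append(request)


def worker():
    """Take requests off the queue one at a time, in arrival order."""
    while True:
        request = request_queue.get()
        if request is None:  # sentinel value: stop the worker
            request_queue.task_done()
            break
        handle(request)
        request_queue.task_done()


def submit(request):
    """Enqueue a request; return False if the queue is already full."""
    try:
        request_queue.put_nowait(request)
        return True
    except queue.Full:
        return False


thread = threading.Thread(target=worker, daemon=True)
thread.start()
for i in range(5):
    submit(f"request-{i}")
request_queue.join()  # wait until everything queued so far has been handled
submit(None)          # ask the worker to stop
thread.join()
print(processed)
```

With a single worker the requests are handled strictly in arrival order; adding more workers raises throughput but no longer guarantees that order.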

Key Differences Between Queuing and API Throttling

Queuing manages request processing by placing incoming API calls in a queue to be handled sequentially, ensuring system stability during high traffic. API throttling limits the number of requests a client can make within a specified time frame, preventing abuse and conserving resources. While queuing focuses on orderly processing under load, throttling enforces rate limits to control usage and protect backend services from overload.

Benefits of Queuing for API Requests

Queuing for API requests provides controlled request processing by organizing incoming traffic into manageable sequences, which prevents overload and ensures system stability. This method improves resource utilization and response reliability by smoothing bursts of high traffic and reducing latency spikes. Efficient queuing mechanisms enable predictable throughput, leading to enhanced user experience and robust backend performance.

Advantages of API Throttling

API throttling offers precise control over the rate of incoming requests, preventing server overload and keeping performance consistent during traffic spikes. Because excess requests are rejected or delayed at the point of entry rather than accumulating in a backlog, accepted requests do not wait behind a long queue, which keeps their latency low and backend resource use predictable. The trade-off is that clients over the limit receive throttling errors and must retry later.

When to Use Queuing vs Throttling

Use queuing to absorb workload spikes and process requests systematically without overwhelming system resources; it suits scenarios that require ordered task execution or can tolerate delayed processing. API throttling is best suited for enforcing rate limits, protecting APIs from excessive usage, and maintaining service availability by controlling the number of requests per user or client within a given time frame. Choose queuing to handle asynchronous workflows and throttling to prevent abuse and enforce fair usage policies.

Common Use Cases for Each Approach

Queuing is ideal for handling high volumes of asynchronous tasks such as order processing, email notifications, or batch data imports where requests can be delayed without immediate user feedback. API throttling is commonly used to protect services from abuse in real-time scenarios like rate-limited login attempts, public API access, or preventing server overload during peak traffic periods. Each approach optimizes resource allocation by either buffering requests for later execution or limiting request rates to ensure system stability and fair usage.

Performance Impacts: Queuing vs Throttling

Queuing protects the backend by processing high volumes of requests in order, which reduces server overload and downtime, but each request waits longer as the queue grows. API throttling keeps the server responsive by capping the rate of incoming requests, but clients that exceed the limit are delayed or rejected under heavy load. Queuing therefore trades latency for completeness, while throttling trades completeness for responsiveness and may degrade the experience of rate-limited users during peak traffic.
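
The comparison table's point that queuing delay depends on queue length can be made concrete with a back-of-the-envelope estimate. The function below is a simplification that assumes every worker drains the queue at a steady rate; the function name and the numbers are illustrative only.

```python
def estimated_wait_seconds(queue_depth, workers, requests_per_second_per_worker):
    """Rough time until a newly queued request starts processing."""
    drain_rate = workers * requests_per_second_per_worker
    if drain_rate <= 0:
        raise ValueError("drain rate must be positive")
    return queue_depth / drain_rate


# 200 requests waiting, 4 workers, each handling about 10 requests per second.
print(estimated_wait_seconds(200, 4, 10))  # 5.0
```

Doubling the number of workers in this example halves the estimated wait, which is why queuing is usually paired with worker scaling.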

Best Practices for Managing API Load

Effective management of API load involves implementing queuing mechanisms to handle request bursts by temporarily storing and processing requests sequentially. API throttling enforces limits on the number of requests a client can make within a specified time frame, preventing server overload and ensuring fair resource allocation. Combining queuing with adaptive throttling policies optimizes performance, reduces latency, and maintains system stability under high traffic conditions.
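
One way to picture the combination is a gateway that applies a rate limit first, queues requests that exceed it, and rejects only when the queue is full. The sketch below uses a fixed-rate token bucket rather than an adaptive policy, and the `TokenBucket` and `Gateway` classes, their parameters, and the return strings are all assumptions made for this example.

```python
import time
from collections import deque


class TokenBucket:
    """Refill `rate` tokens per second, up to `capacity` (the burst size)."""

    def __init__(self, rate, capacity, clock=time.monotonic):
        self.rate = rate
        self.capacity = capacity
        self.clock = clock  # injectable so the demo does not need to sleep
        self.tokens = float(capacity)
        self.updated = clock()

    def try_acquire(self):
        now = self.clock()
        elapsed = now - self.updated
        self.tokens = min(self.capacity, self.tokens + elapsed * self.rate)
        self.updated = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False


class Gateway:
    """Throttle first, queue the overflow, reject only when the queue is full."""

    def __init__(self, bucket, max_queue):
        self.bucket = bucket
        self.backlog = deque()
        self.max_queue = max_queue

    def submit(self, request):
        # New requests may not jump ahead of requests that are already waiting.
        if not self.backlog and self.bucket.try_acquire():
            return "processed"
        if len(self.backlog) < self.max_queue:
            self.backlog.append(request)
            return "queued"
        return "rejected"

    def drain(self):
        """Process waiting requests in FIFO order while tokens are available."""
        done = []
        while self.backlog and self.bucket.try_acquire():
            done.append(self.backlog.popleft())
        return done


now = [0.0]
gateway = Gateway(TokenBucket(rate=1, capacity=2, clock=lambda: now[0]), max_queue=2)
print([gateway.submit(i) for i in range(5)])
# ['processed', 'processed', 'queued', 'queued', 'rejected']
now[0] = 2.0  # two seconds later, two tokens have been refilled
print(gateway.drain())  # [2, 3]
```

The queue length here bounds how long a request can wait, while the bucket bounds how fast the backend is hit; tuning the two together is where an adaptive policy would come in.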

Queuing Infographic

libterm.com
