Clustering is a powerful technique in data analysis that groups similar data points into distinct clusters based on shared characteristics, enhancing pattern recognition and decision-making. It helps identify natural groupings within large datasets without prior knowledge of labels, making it essential for customer segmentation, image analysis, and anomaly detection. Explore the rest of the article to uncover how clustering can optimize Your data-driven strategies and improve insights.

Table of Comparison

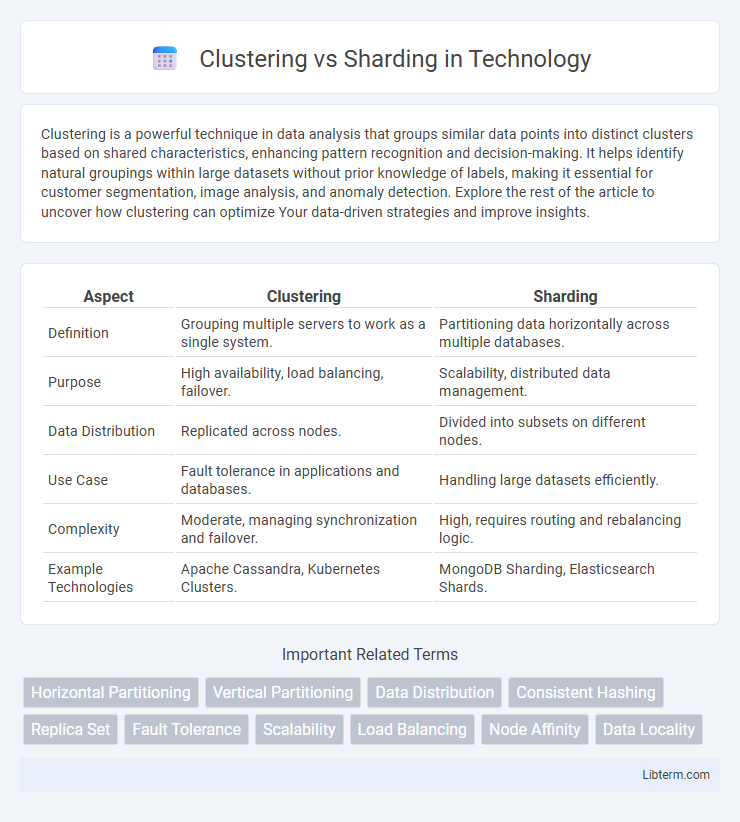

| Aspect | Clustering | Sharding |

|---|---|---|

| Definition | Grouping multiple servers to work as a single system. | Partitioning data horizontally across multiple databases. |

| Purpose | High availability, load balancing, failover. | Scalability, distributed data management. |

| Data Distribution | Replicated across nodes. | Divided into subsets on different nodes. |

| Use Case | Fault tolerance in applications and databases. | Handling large datasets efficiently. |

| Complexity | Moderate, managing synchronization and failover. | High, requires routing and rebalancing logic. |

| Example Technologies | Apache Cassandra, Kubernetes Clusters. | MongoDB Sharding, Elasticsearch Shards. |

Introduction to Clustering and Sharding

Clustering involves grouping multiple servers to work together as a single system, improving load balancing, fault tolerance, and scalability for databases or applications. Sharding splits a database horizontally by distributing data across multiple servers, enabling efficient management of large datasets and enhancing query performance. Both techniques address scalability but differ in their approach: clustering emphasizes resource pooling while sharding focuses on data partitioning.

Core Concepts: What is Clustering?

Clustering is a method of connecting multiple servers or nodes to work together as a single system, enhancing reliability and load balancing. It enables distributed data processing and high availability by allowing failover in case one node fails. Clustering ensures data redundancy and improves system scalability by managing resources across multiple servers.

Core Concepts: What is Sharding?

Sharding is a database architecture technique that horizontally partitions data across multiple servers, enabling efficient handling of large datasets by distributing workloads and improving performance. Each shard contains a subset of the total data, often based on a key such as user ID or geographic location, allowing queries to be directed to specific shards rather than the entire database. This approach enhances scalability and fault isolation, making it ideal for applications with high volume and real-time data processing requirements.

Key Differences Between Clustering and Sharding

Clustering involves grouping multiple servers to work as a single system for improved availability and load balancing, while sharding partitions a database into smaller, more manageable pieces called shards, each distributed across different servers to enhance scalability and performance. Clustering ensures data redundancy and fault tolerance by replicating data across nodes, whereas sharding divides data horizontally to optimize query efficiency and handle large data volumes. Key differences include clustering's focus on high availability versus sharding's emphasis on distributing data for scalability and reduced latency.

Use Cases for Clustering

Clustering is ideal for use cases requiring high availability and fault tolerance, such as in enterprise databases, web servers, and real-time applications where continuous uptime is critical. It enables multiple servers to work together, distributing workloads and providing seamless failover in the event of hardware or software failures. Common scenarios include load balancing for large-scale e-commerce platforms, managing distributed transaction processing, and supporting critical financial systems that demand consistency and reliability.

Use Cases for Sharding

Sharding is effectively used in large-scale databases where horizontal scaling is necessary to manage massive volumes of data and high transaction loads, such as in social media platforms, e-commerce applications, and financial services. It distributes data across multiple servers, enhancing read/write performance and ensuring better availability by isolating failures to individual shards. Sharding is ideal for scenarios requiring partitioning of data sets based on keys like user ID or geographic location to optimize query efficiency and reduce bottlenecks.

Performance Comparison: Clustering vs Sharding

Clustering improves performance by distributing workloads across multiple nodes, enhancing fault tolerance and load balancing within a single database system. Sharding boosts performance by horizontally partitioning data into smaller, manageable pieces across multiple servers, allowing parallel query processing and reduced latency for large datasets. While clustering excels in high availability and scaling read/write operations within tightly coupled nodes, sharding is more effective for massive data growth requiring independent scaling of data partitions.

Scalability Factors: Clustering vs Sharding

Clustering improves scalability by allowing multiple servers to work together as a single system, enhancing fault tolerance and load balancing for transactional consistency. Sharding scales databases horizontally by dividing data into smaller, manageable pieces distributed across multiple servers, drastically improving write and query performance for large datasets. Scalability in clustering is typically limited by shared resources, whereas sharding offers near-linear scalability by adding more shards independently.

Maintenance and Management Considerations

Clustering centralizes management by linking multiple servers to operate as a single unit, simplifying updates and monitoring but requiring more complex synchronization. Sharding distributes data across multiple independent nodes, enhancing scalability at the cost of increased maintenance efforts to handle data partitioning and cross-shard queries. Effective maintenance in clustering involves managing shared resources and failover processes, while sharding demands careful shard allocation and balancing to prevent data hotspots.

Choosing the Right Approach for Your Needs

Clustering enhances database availability and load balancing by grouping multiple servers to work as a single system, ideal for handling high traffic with real-time data consistency. Sharding distributes data across multiple nodes based on a specific key, optimizing performance by enabling horizontal scaling and managing large datasets more efficiently. Selecting clustering suits applications prioritizing fault tolerance and seamless failover, while sharding fits those requiring scalability and fast query responses on massive, partitionable data.

Clustering Infographic

libterm.com

libterm.com