Affinity signifies a natural connection or attraction between people, ideas, or things. In computing, and especially in container orchestration platforms such as Kubernetes, the term describes rules that place related workloads together, while anti-affinity describes rules that keep them apart. This article compares the two, explains where each helps, and covers the trade-offs of using them.

Table of Comparison

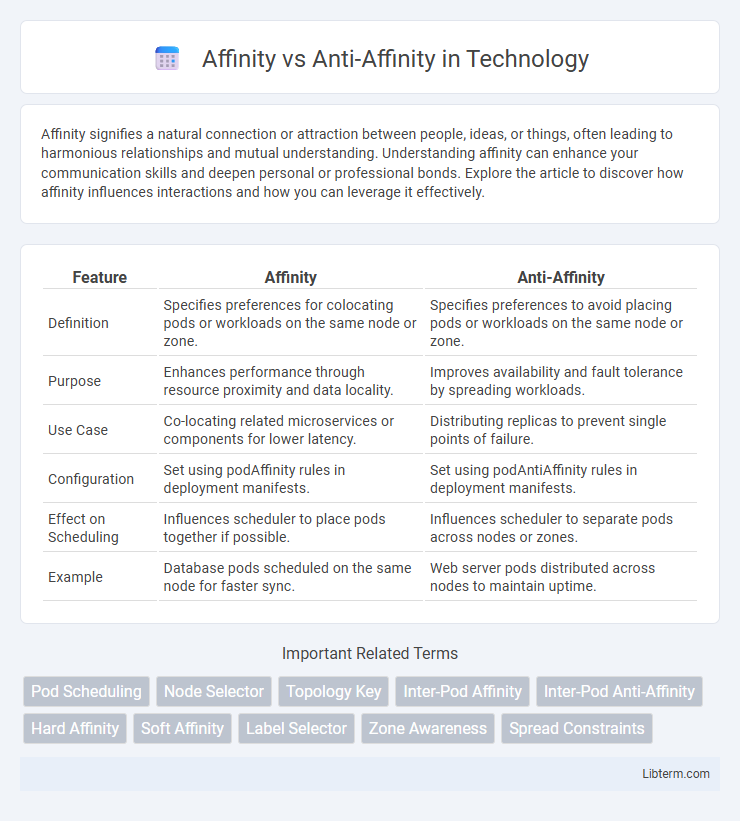

| Feature | Affinity | Anti-Affinity |
|---|---|---|
| Definition | Rules (required or preferred) that place pods or workloads in the same topology domain, such as a node or zone, as other matching pods. | Rules (required or preferred) that keep pods or workloads out of topology domains, such as a node or zone, that already run matching pods. |
| Purpose | Improves performance through resource proximity and data locality. | Improves availability and fault tolerance by spreading workloads. |
| Use Case | Co-locating related microservices or components for lower latency. | Distributing replicas to prevent single points of failure. |
| Configuration | Set with nodeAffinity (node labels) or podAffinity (labels of other pods) under affinity in the pod template of a deployment manifest. | Set with podAntiAffinity under affinity in the pod template of a deployment manifest. |
| Effect on Scheduling | Attracts pods to the same domain: mandatory for required rules, best-effort for preferred rules. | Repels pods from the same domain: mandatory for required rules, best-effort for preferred rules. |
| Example | Web server pods scheduled on the same node as the cache pods they query. | Web server pods distributed across nodes to maintain uptime. |

Introduction to Affinity and Anti-Affinity

Affinity and anti-affinity are key concepts in workload scheduling that influence pod placement in container orchestration platforms such as Kubernetes. Affinity rules direct certain pods to be scheduled together, either on the same node or within the same topology domain (for example, a zone), to optimize performance and resource sharing. Anti-affinity rules spread pods apart to improve fault tolerance and reduce resource contention. Both kinds of rules use labels and label selectors to define which pods to group or separate. Each rule can be either required (a hard constraint) or preferred (a weighted hint), which enables more efficient and reliable cluster management.
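The label-selector matching that both kinds of rules depend on can be sketched in Python. This is a simplified model of Kubernetes LabelSelector semantics: every matchLabels pair and every matchExpressions entry must hold. It is not the scheduler's actual code. The helper names selector_matches and expression_holds, and the example labels, are hypothetical.

```python
from typing import Dict

Labels = Dict[str, str]

def expression_holds(labels: Labels, expr: dict) -> bool:
    """Evaluate one matchExpressions entry against a pod's labels."""
    key, op = expr["key"], expr["operator"]
    values = expr.get("values", [])
    if op == "In":
        return key in labels and labels[key] in values
    if op == "NotIn":
        # A pod without the key also satisfies NotIn.
        return key not in labels or labels[key] not in values
    if op == "Exists":
        return key in labels
    if op == "DoesNotExist":
        return key not in labels
    raise ValueError(f"unsupported operator: {op}")

def selector_matches(labels: Labels, selector: dict) -> bool:
    """All matchLabels pairs AND all matchExpressions must hold (an empty selector matches everything)."""
    if any(labels.get(k) != v for k, v in selector.get("matchLabels", {}).items()):
        return False
    return all(expression_holds(labels, e) for e in selector.get("matchExpressions", []))

web_selector = {
    "matchLabels": {"app": "web"},
    "matchExpressions": [{"key": "tier", "operator": "In", "values": ["frontend", "edge"]}],
}
print(selector_matches({"app": "web", "tier": "frontend"}, web_selector))  # True
print(selector_matches({"app": "web", "tier": "backend"}, web_selector))   # False
```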

Understanding Affinity: Key Concepts

In computing, affinity refers to constraining where tasks or processes run, typically keeping them on particular or shared physical or logical resources to improve performance and reduce latency. Key concepts include CPU affinity and node affinity. CPU affinity binds a process or thread to specific CPU cores so that it keeps benefiting from warm caches. Node affinity restricts workloads to cluster nodes whose labels match given criteria, for example nodes with SSDs or GPUs. Understanding affinity enables better workload placement by exploiting proximity and resource locality, which improves overall system efficiency.
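CPU affinity can be demonstrated with Python's standard library on Linux. There, os.sched_getaffinity and os.sched_setaffinity wrap the corresponding system calls. The sketch below assumes a Linux host. The pinned_to context manager is a hypothetical helper: it pins the calling process to one CPU and then restores the original mask.

```python
import os
from contextlib import contextmanager
from typing import Iterator, Set

@contextmanager
def pinned_to(cpus: Set[int]) -> Iterator[None]:
    """Temporarily restrict the calling process (pid 0) to `cpus`, then restore
    the previous CPU mask. os.sched_setaffinity is only available on some
    platforms, notably Linux."""
    original = os.sched_getaffinity(0)
    os.sched_setaffinity(0, cpus)
    try:
        yield
    finally:
        os.sched_setaffinity(0, original)

if hasattr(os, "sched_setaffinity"):
    first_cpu = min(os.sched_getaffinity(0))  # lowest-numbered CPU this process may use
    with pinned_to({first_cpu}):
        print(os.sched_getaffinity(0))        # a one-element set containing first_cpu
else:
    print("CPU affinity is not supported on this platform")
```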

Exploring Anti-Affinity: Key Concepts

Anti-affinity rules in Kubernetes improve workload reliability by keeping a pod out of any node or other topology domain that already runs pods matching a label selector. This reduces the risk of correlated failures. Required anti-affinity rules prohibit such co-location outright, while preferred rules only discourage it. Both are commonly used to achieve high availability and fault tolerance in distributed systems. The topology key (for example, kubernetes.io/hostname for individual nodes or topology.kubernetes.io/zone for zones) sets how wide the separation is. This gives precise control over how pods are distributed across cluster resources, improving resilience.
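To make the effect of the topology key concrete, the following Python sketch models how a required anti-affinity rule filters candidate nodes. It is a simplified illustration, not kube-scheduler's implementation. It supports only matchLabels selectors, ignores namespaces and resource requests, and assumes every node carries the topology label. The node names and the node_feasible helper are hypothetical.

```python
from typing import Dict, List, Tuple

Labels = Dict[str, str]

def matches(labels: Labels, match_labels: Labels) -> bool:
    # Simplified selector: every matchLabels pair must be present on the pod.
    return all(labels.get(k) == v for k, v in match_labels.items())

def node_feasible(candidate: str, nodes: Dict[str, Labels],
                  running: List[Tuple[Labels, str]], anti_terms: List[dict]) -> bool:
    """False if `candidate` shares a topology domain with a running pod
    matched by any required anti-affinity term."""
    for term in anti_terms:
        key = term["topologyKey"]
        domain = nodes[candidate][key]
        for pod_labels, node in running:
            if nodes[node][key] == domain and matches(pod_labels, term["labelSelector"]["matchLabels"]):
                return False
    return True

nodes = {
    "node-a": {"kubernetes.io/hostname": "node-a", "topology.kubernetes.io/zone": "zone-1"},
    "node-b": {"kubernetes.io/hostname": "node-b", "topology.kubernetes.io/zone": "zone-1"},
    "node-c": {"kubernetes.io/hostname": "node-c", "topology.kubernetes.io/zone": "zone-2"},
}
running = [({"app": "web"}, "node-a")]  # one web pod already on node-a
per_host = [{"labelSelector": {"matchLabels": {"app": "web"}}, "topologyKey": "kubernetes.io/hostname"}]
per_zone = [{"labelSelector": {"matchLabels": {"app": "web"}}, "topologyKey": "topology.kubernetes.io/zone"}]

print([n for n in nodes if node_feasible(n, nodes, running, per_host)])  # ['node-b', 'node-c']
print([n for n in nodes if node_feasible(n, nodes, running, per_zone)])  # ['node-c']
```

Widening the topology key from hostname to zone removes node-b as well, because node-b shares zone-1 with the existing pod.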

Why Affinity Matters in Distributed Systems

Affinity in distributed systems ensures that related tasks or processes are co-located on the same node or within close proximity, improving data locality and reducing network latency for faster execution. Anti-affinity policies prevent resource contention and single points of failure by distributing workloads across different nodes, enhancing fault tolerance and availability. Understanding affinity is crucial for optimizing system performance, resource utilization, and reliability in complex cluster environments.

Benefits of Using Anti-Affinity Rules

Anti-affinity rules improve application availability by distributing critical workloads across distinct nodes, so that a single hardware failure disrupts only part of the service. In container orchestration environments such as Kubernetes, they also improve fault tolerance and load balancing by preventing resource contention and single points of failure. This deliberate separation spreads load more evenly and increases overall system resilience.
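The availability benefit can be quantified with simple arithmetic: the worst-case impact of a single node failure equals the largest number of replicas that any one node carries. The sketch below compares a packed placement and a spread placement of three hypothetical replicas. worst_single_node_loss is an illustrative helper, not part of any Kubernetes tool.

```python
from collections import Counter
from typing import Dict

def worst_single_node_loss(placement: Dict[str, str]) -> int:
    """Maximum number of replicas lost if any one node fails.
    `placement` maps replica name -> node name (hypothetical example data)."""
    per_node = Counter(placement.values())
    return max(per_node.values(), default=0)

packed = {"web-0": "node-a", "web-1": "node-a", "web-2": "node-a"}
spread = {"web-0": "node-a", "web-1": "node-b", "web-2": "node-c"}
print(worst_single_node_loss(packed))  # 3: one failure takes down every replica
print(worst_single_node_loss(spread))  # 1: two of three replicas survive
```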

Use Cases for Affinity in Cloud Environments

Affinity in cloud environments is essential for optimizing performance by co-locating related workloads, such as microservices that require low-latency communication or applications with shared data dependencies. It improves resource utilization by grouping interdependent containers or virtual machines on the same host or zone, enhancing throughput and reducing network overhead. Use cases include stateful applications, big data processing clusters, and tightly coupled distributed systems where proximity accelerates synchronization and data sharing.
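For a data-processing workload, zone-level co-location is often expressed as a soft rule so that pods still schedule when the preferred zone is full. The sketch below builds the affinity fragment of a pod spec as a Python dictionary and prints it as JSON, a format kubectl accepts alongside YAML. The fragment belongs under spec (or spec.template.spec in a Deployment). The label app=datastore is a hypothetical example. The field names follow the Kubernetes pod spec, and weight must be between 1 and 100.

```python
import json

def zone_affinity_fragment(app_label: str, weight: int = 100) -> dict:
    """Pod-spec `affinity` fragment that prefers (but does not require) scheduling
    in the same zone as pods labelled app=<app_label>."""
    if not 1 <= weight <= 100:
        raise ValueError("weight must be in the range 1-100")
    return {
        "affinity": {
            "podAffinity": {
                "preferredDuringSchedulingIgnoredDuringExecution": [
                    {
                        "weight": weight,
                        "podAffinityTerm": {
                            "labelSelector": {"matchLabels": {"app": app_label}},
                            "topologyKey": "topology.kubernetes.io/zone",
                        },
                    }
                ]
            }
        }
    }

print(json.dumps(zone_affinity_fragment("datastore"), indent=2))
```

Lowering weight makes this term count less relative to any other preferred terms on the same pod.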

Real-World Applications of Anti-Affinity

Anti-affinity rules in cloud computing improve application availability by ensuring that specific virtual machines or containers are not co-located on the same physical host. Real-world applications include deploying microservices that need isolation so they do not fail together. Another is running replicated stateful workloads, such as databases, so that a single hardware fault cannot take down every replica. Enterprises also use anti-affinity to meet compliance requirements, strengthen security by separating sensitive components, and improve fault tolerance in distributed systems.
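In Kubernetes, the IgnoredDuringExecution rule variants are not re-enforced after a pod starts. A periodic audit of where replicas actually run can therefore complement them. The sketch takes a hypothetical pod-to-host mapping and reports hosts that carry more than one replica of the same workload. In practice, the mapping could come from the NODE column of kubectl get pods -o wide or from a hypervisor inventory. The helper name colocated_replicas and the postgres replica names are illustrative.

```python
from collections import defaultdict
from typing import Dict, List

def colocated_replicas(assignments: Dict[str, str]) -> Dict[str, List[str]]:
    """Given pod -> host assignments for one replicated workload, return the hosts
    running more than one replica (i.e. violations of host-level anti-affinity)."""
    by_host: Dict[str, List[str]] = defaultdict(list)
    for pod, host in sorted(assignments.items()):
        by_host[host].append(pod)
    return {host: pods for host, pods in by_host.items() if len(pods) > 1}

db = {"postgres-0": "host-1", "postgres-1": "host-2", "postgres-2": "host-1"}
print(colocated_replicas(db))  # {'host-1': ['postgres-0', 'postgres-2']}
```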

Challenges When Implementing Affinity and Anti-Affinity

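Implementing affinity and anti-affinity rules in Kubernetes adds scheduling overhead and can fragment resources across nodes. The Kubernetes documentation notes that inter-pod affinity and anti-affinity require substantial processing, which can significantly slow scheduling in large clusters. It advises against using them in clusters larger than several hundred nodes. Affinity constraints may concentrate pods on a few nodes, risking resource exhaustion and reducing fault tolerance. Required anti-affinity rules improve workload distribution, but they leave pods unschedulable (Pending) when the cluster lacks enough nodes or topology domains that satisfy them. For example, required host-level anti-affinity cannot place more replicas than there are eligible nodes.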
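The unschedulable-pod problem follows from a simple counting argument: with required host-level anti-affinity, each replica needs a node of its own. The greedy Python sketch below places hypothetical web-N replicas and reports which ones would remain Pending. It is a simplified model that ignores CPU and memory requests, taints, and every other scheduler plugin. place_with_host_anti_affinity is a hypothetical helper.

```python
from typing import Dict, List, Tuple

def place_with_host_anti_affinity(replicas: int, nodes: List[str]) -> Tuple[Dict[str, str], List[str]]:
    """Each replica needs a node that does not already run a replica of the
    same workload; replicas with no such node stay pending."""
    placed: Dict[str, str] = {}
    pending: List[str] = []
    free = list(nodes)  # nodes not yet hosting a replica
    for i in range(replicas):
        name = f"web-{i}"
        if free:
            placed[name] = free.pop(0)
        else:
            pending.append(name)  # no node satisfies the required rule
    return placed, pending

placed, pending = place_with_host_anti_affinity(5, ["node-a", "node-b", "node-c"])
print(placed)   # {'web-0': 'node-a', 'web-1': 'node-b', 'web-2': 'node-c'}
print(pending)  # ['web-3', 'web-4']
```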

Best Practices for Managing Affinity and Anti-Affinity

Managing affinity and anti-affinity well starts with clear workload placement policies that balance availability and performance. Use affinity rules to co-locate related pods or services for more efficient communication. Apply anti-affinity to separate critical workloads across nodes or zones so that they neither contend for resources nor share a failure domain. Prefer soft (preferredDuringSchedulingIgnoredDuringExecution) rules unless strict placement is essential, so that pods remain schedulable when the cluster is short on capacity. Regularly monitor and adjust these rules based on actual resource usage and failure domains to maintain resilience and avoid hotspots in cluster environments.
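Soft rules behave differently from required ones: a preferred anti-affinity term lowers a node's score instead of removing the node from consideration. Replicas therefore still schedule when there are fewer nodes than replicas. The Python sketch below uses a deliberately simplified scoring model rather than kube-scheduler's actual normalized scoring. Each node is penalized by weight for every matching replica it already runs, and ties are broken by node name. The names pick_node and schedule are hypothetical.

```python
from typing import Dict, List

def pick_node(nodes: List[str], replicas_on: Dict[str, int], weight: int = 100) -> str:
    """Simplified scoring for one preferred host-level anti-affinity term:
    penalize each node by `weight` per matching replica it already runs.
    Every node stays feasible, so the pod is never left pending."""
    # max() returns the first best node in sorted order, breaking ties by name.
    return max(sorted(nodes), key=lambda n: -weight * replicas_on.get(n, 0))

def schedule(replicas: int, nodes: List[str]) -> Dict[str, int]:
    counts: Dict[str, int] = {}
    for _ in range(replicas):
        node = pick_node(nodes, counts)
        counts[node] = counts.get(node, 0) + 1
    return counts

print(schedule(5, ["node-a", "node-b", "node-c"]))  # {'node-a': 2, 'node-b': 2, 'node-c': 1}
```

Under the required rule, the same five replicas on three nodes would leave two pods pending. The soft rule places all five while still spreading them as evenly as it can.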

Affinity vs Anti-Affinity: Choosing the Right Strategy

Affinity and anti-affinity are complementary workload placement strategies that affect application performance and reliability in distributed systems. Affinity directs related workloads to run on the same node or in close proximity to reduce communication latency and share resources. Anti-affinity enforces separation to prevent resource contention and improve fault tolerance. The right choice depends on how interdependent the workloads are, how much fault isolation they need, and how the system must scale. The two can also be combined in a single workload.
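The two strategies are often combined in one workload. The sketch below is modelled on the web-server-and-cache pattern in the Kubernetes documentation. It builds a hypothetical Deployment in which podAffinity requires each web pod to land on a node that already runs a pod labelled app=cache. At the same time, podAntiAffinity requires the web pods to land on different nodes. The names, labels, and image are placeholders. The script prints JSON, which kubectl apply accepts.

```python
import json

def web_deployment(replicas: int = 3) -> dict:
    """Hypothetical Deployment: affinity keeps each web pod on a node running a
    cache pod (app=cache); anti-affinity keeps web pods on separate nodes."""
    host = "kubernetes.io/hostname"
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": "web"},
        "spec": {
            "replicas": replicas,
            "selector": {"matchLabels": {"app": "web"}},
            "template": {
                "metadata": {"labels": {"app": "web"}},
                "spec": {
                    "affinity": {
                        "podAffinity": {
                            "requiredDuringSchedulingIgnoredDuringExecution": [
                                {"labelSelector": {"matchLabels": {"app": "cache"}}, "topologyKey": host}
                            ]
                        },
                        "podAntiAffinity": {
                            "requiredDuringSchedulingIgnoredDuringExecution": [
                                {"labelSelector": {"matchLabels": {"app": "web"}}, "topologyKey": host}
                            ]
                        },
                    },
                    "containers": [{"name": "web", "image": "nginx:1.25"}],  # placeholder image
                },
            },
        },
    }

print(json.dumps(web_deployment(), indent=2))
```

Suppose the script is saved as web_deployment.py (a hypothetical name). It could then be applied with python web_deployment.py | kubectl apply -f -. Because both rules are required, the workload can run at most as many web replicas as there are nodes hosting a cache pod.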

libterm.com