Streaming processes data continuously as it arrives, while batching collects data over a period and processes it in scheduled groups. The two models trade latency against throughput and operational simplicity. This article compares them by processing model, use cases, performance, and limitations.

Table of Comparison

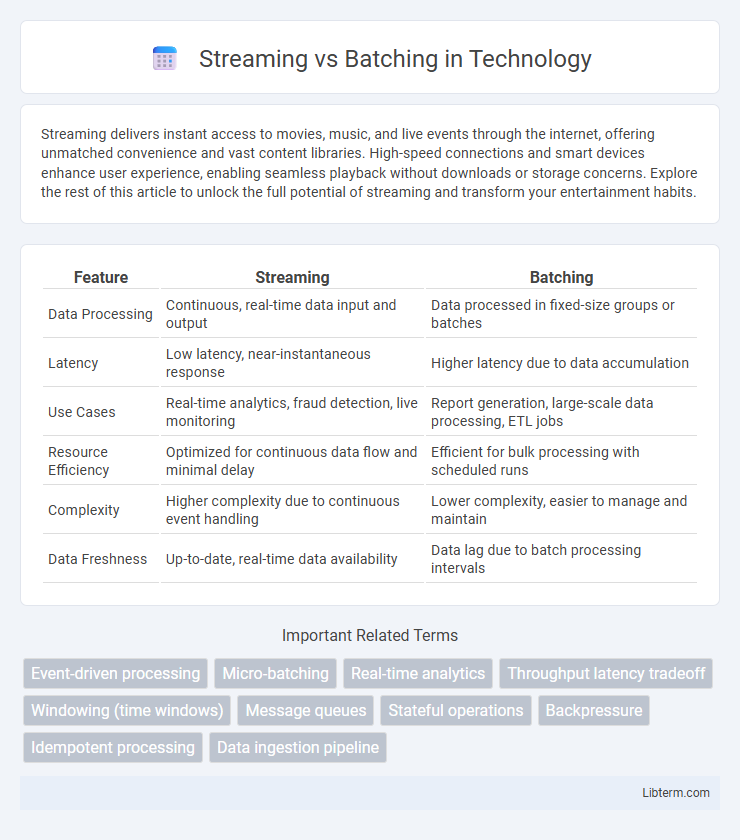

| Feature | Streaming | Batching |
|---|---|---|
| Data Processing | Continuous, real-time data input and output | Data processed in fixed-size groups or batches |
| Latency | Low latency, near-instantaneous response | Higher latency due to data accumulation |
| Use Cases | Real-time analytics, fraud detection, live monitoring | Report generation, large-scale data processing, ETL jobs |
| Resource Efficiency | Optimized for continuous data flow and minimal delay | Efficient for bulk processing with scheduled runs |
| Complexity | Higher complexity due to continuous event handling | Lower complexity, easier to manage and maintain |
| Data Freshness | Up-to-date, real-time data availability | Data lag due to batch processing intervals |

Introduction to Streaming and Batching

Streaming ingests and processes data continuously as it arrives, enabling immediate analysis and response. Batching collects data over a period and processes it in large groups, which uses resources efficiently when latency is less critical. The choice between the two depends on data velocity, latency requirements, and how the system needs to scale.

Core Concepts Defined: Streaming vs Batching

Streaming handles each record as it arrives, so results are updated incrementally and decisions can be made with low latency. This matters for applications that need instant updates, such as fraud detection or live analytics. Batching collects data until a defined period has passed or a defined volume has accumulated, then processes it as a single job, which is efficient for large volumes, complex computations, and historical analysis. In short, streaming is event-driven and incremental, while batching is bulk, scheduled, and tolerant of higher latency.
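The difference between the two models can be illustrated with a minimal sketch that computes an average both ways. The function names `batch_mean` and `streaming_mean` and the sample readings are assumptions made for this illustration, not part of any particular framework.

```python
from typing import Iterable, Iterator, List


def batch_mean(values: List[float]) -> float:
    """Batch style: wait for the whole dataset, then compute once."""
    return sum(values) / len(values)


def streaming_mean(values: Iterable[float]) -> Iterator[float]:
    """Streaming style: emit an updated mean after every event."""
    count = 0
    total = 0.0
    for value in values:
        count += 1
        total += value
        yield total / count


# Hypothetical sample data for illustration only.
readings = [10.0, 20.0, 30.0, 40.0]

batch_result = batch_mean(readings)              # one answer at the end
stream_results = list(streaming_mean(readings))  # one answer per event
```

Both approaches end at the same final value, but the streaming version has a usable intermediate result after every event and keeps only a count and a running total in memory.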

Key Differences in Data Processing Models

Streaming handles continuous, incremental data flows and produces results while the data is still arriving, which is why it is used for applications like fraud detection and live analytics. Batching stores data over a period and processes the accumulated volume in one scheduled run, which suits tasks like report generation and complex analytics. The key difference is the trade-off each model makes: streaming minimizes latency, while batching is optimized for throughput and resource efficiency.

Advantages of Streaming

Streaming gives businesses that deal with fast-changing information immediate insights and faster decision-making. Because records are processed continuously instead of waiting for the next batch run, latency is lower and the system can respond to events quickly, which is crucial for applications like fraud detection and live analytics. Streaming systems are also designed for unbounded datasets: they process data incrementally and do not need the full dataset to be available before work starts.

Benefits of Batching

Batching improves system efficiency by processing data in groups, which spreads fixed per-operation overhead (such as a network round trip or a transaction commit) across many records. In data-intensive applications, these bulk operations can yield higher throughput than handling records one at a time. Batching also simplifies error handling and recovery, because a failed batch can be retried as a unit instead of tracking failures for individual records.
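A small sketch shows how grouping reduces per-operation overhead. The `chunked` helper and the `CountingSink` class are hypothetical names introduced for this example; the sink only counts calls and stands in for any destination where each write has a fixed cost.

```python
from itertools import islice
from typing import Iterable, Iterator, List, TypeVar

T = TypeVar("T")


def chunked(items: Iterable[T], size: int) -> Iterator[List[T]]:
    """Yield consecutive lists of at most `size` items."""
    if size < 1:
        raise ValueError("size must be at least 1")
    iterator = iter(items)
    while True:
        batch = list(islice(iterator, size))
        if not batch:
            return
        yield batch


class CountingSink:
    """Hypothetical sink that records how many write calls it receives."""

    def __init__(self) -> None:
        self.calls = 0
        self.records = 0

    def write(self, batch: List[int]) -> None:
        self.calls += 1
        self.records += len(batch)


records = list(range(10))

# One write call per record.
per_record = CountingSink()
for record in records:
    per_record.write([record])

# One write call per group of up to four records.
batched = CountingSink()
for batch in chunked(records, 4):
    batched.write(batch)
```

Both sinks receive all ten records, but the batched sink is called three times instead of ten. If each call carries a fixed cost, that cost is paid far less often.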

Use Cases Suited for Streaming

Streaming excels at real-time analytics, fraud detection, and IoT device monitoring, where data must be processed immediately. It suits environments with continuous data flows, such as social media feeds, financial transactions, and live sensor data. Use cases that demand low latency and near-instant insights benefit most from streaming.
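As a rough illustration of a streaming check, the sketch below flags a transaction amount as soon as it arrives if it is far above the average of the most recent amounts. The function name `flag_spikes`, the window of three events, and the factor of 3.0 are arbitrary assumptions chosen for the example, not a recommended fraud rule.

```python
from collections import deque
from typing import Deque, Iterable, Iterator


def flag_spikes(
    amounts: Iterable[float], window: int = 3, factor: float = 3.0
) -> Iterator[float]:
    """Yield each amount that exceeds `factor` times the recent average."""
    recent: Deque[float] = deque(maxlen=window)
    for amount in amounts:
        # Only judge an event once a full window of history exists.
        if len(recent) == window and amount > factor * (sum(recent) / window):
            yield amount
        recent.append(amount)


# Hypothetical transaction amounts in arrival order.
flagged = list(flag_spikes([20.0, 25.0, 30.0, 400.0, 22.0]))
```

The check runs per event and keeps only a small fixed-size window in memory, so a decision is available before the next event arrives.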

Ideal Applications for Batching

Batching is ideal for processing large volumes of data where latency is not critical, such as end-of-day reporting, billing systems, and data warehousing. It accumulates data over a period before processing, which optimizes resource use and throughput in scenarios like payroll processing and ETL (extract, transform, load) workflows. It suits applications that need high throughput and can tolerate results that only become available after the next scheduled run.
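A batch ETL job can be sketched in a few lines. The CSV content, the column names, and the in-memory `warehouse` dictionary are all stand-ins invented for this example. A real job would read from and write to actual storage.

```python
import csv
import io
from collections import defaultdict
from typing import Dict, List

# Hypothetical accumulated input for one batch run.
RAW_CSV = """customer,amount_cents
alice,1200
bob,450
alice,300
"""


def extract(text: str) -> List[Dict[str, str]]:
    """Read all accumulated rows at once."""
    return list(csv.DictReader(io.StringIO(text)))


def transform(rows: List[Dict[str, str]]) -> Dict[str, int]:
    """Aggregate the total billed amount per customer."""
    totals: Dict[str, int] = defaultdict(int)
    for row in rows:
        totals[row["customer"]] += int(row["amount_cents"])
    return dict(totals)


def load(totals: Dict[str, int], target: Dict[str, int]) -> None:
    """Write the aggregated result to the target store in one step."""
    target.update(totals)


warehouse: Dict[str, int] = {}
load(transform(extract(RAW_CSV)), warehouse)
```

Each stage sees the complete dataset for the period, which keeps the logic simple. The cost is that no totals exist until the whole run has finished.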

Performance Considerations and Scalability

Streaming delivers the low-latency responses that time-sensitive applications need, while batching handles large volumes of data in chunks, which optimizes throughput but delays results until the next run. Streaming performance depends heavily on event ingestion rates and on how efficiently state is managed, whereas batching benefits from better resource utilization and lower overhead per operation. The scalability challenge for streaming is keeping processing speed consistent under fluctuating data rates. Batching scales well by adding computational resources and using parallel processing frameworks.
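The processing delay that batching introduces can be estimated with simple arithmetic. The sketch below assumes batch runs start at fixed multiples of an interval and ignores the runtime of the job itself. The function name `batch_wait` and the sample arrival times are made up for illustration.

```python
import math
from typing import List


def batch_wait(arrival: float, interval: float) -> float:
    """Seconds a record waits until the next scheduled batch run starts."""
    next_run = math.ceil(arrival / interval) * interval
    return next_run - arrival


# Hypothetical arrival times in seconds, with a batch run every 60 seconds.
arrivals: List[float] = [15.0, 30.0, 45.0]
waits = [batch_wait(t, 60.0) for t in arrivals]
average_wait = sum(waits) / len(waits)
```

For these evenly spread arrivals the average wait is half the batch interval. Shortening the interval reduces the delay but means more runs, each paying its own fixed overhead.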

Challenges and Limitations

Streaming faces challenges such as sustaining high throughput at low latency, ensuring fault tolerance, and handling event-time processing, where events can arrive out of order or late. Batching suffers from latency because of its fixed processing windows, which delay data availability and limit real-time insights. Both approaches require scalable infrastructure, but streaming demands more sophisticated state management and error recovery to keep data flowing continuously.
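The event-time difficulty can be made concrete with a simplified sketch of tumbling windows and a watermark. This is a toy model under stated assumptions, not how any specific engine implements it: the watermark is taken as the largest event time seen so far minus `allowed_lateness`, and an event is dropped if its window ended at or before the watermark. All names and values are hypothetical.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple

Event = Tuple[int, str]  # (event_time, payload)


def tumbling_counts(
    events: Iterable[Event], window_size: int, allowed_lateness: int
) -> Tuple[Dict[int, int], List[Event]]:
    """Count events per window start; collect events that arrive too late."""
    counts: Dict[int, int] = defaultdict(int)
    dropped: List[Event] = []
    watermark = float("-inf")
    for event_time, payload in events:
        # Simplified watermark: trails the newest event time seen so far.
        watermark = max(watermark, event_time - allowed_lateness)
        window_start = (event_time // window_size) * window_size
        window_end = window_start + window_size
        if window_end <= watermark:
            dropped.append((event_time, payload))  # window already closed
        else:
            counts[window_start] += 1
    return dict(counts), dropped


# Events listed in arrival order; event times are deliberately out of order.
arrived = [(1, "a"), (4, "b"), (12, "c"), (3, "d"), (25, "e"), (8, "f")]
counts, dropped = tumbling_counts(arrived, window_size=10, allowed_lateness=5)
```

The event at time 3 arrives out of order but is still counted, because its window has not yet closed. The event at time 8 arrives after the watermark has passed its window and is dropped. A batch job over the same stored data would not face this choice, since all events are present before processing begins.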

Choosing the Right Approach: Factors to Consider

Choosing between streaming and batching depends on data volume, latency requirements, and how much system complexity you can accept. Streaming suits real-time analytics and continuous data flows where low-latency insights matter. Batching suits large volumes of data with looser timing needs, where it optimizes resource use and keeps failure recovery simple. Weigh data freshness, scalability demands, and infrastructure costs to determine the most effective approach for your data processing objectives.
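The data freshness factor can be turned into a simple first check. The sketch below is a hypothetical heuristic, not an established rule: it assumes the worst-case staleness of a batch pipeline is roughly one scheduling interval plus the runtime of the job, and it ignores cost and complexity entirely.

```python
def suggest_approach(
    max_staleness_s: float,
    shortest_batch_interval_s: float,
    batch_runtime_s: float,
) -> str:
    """Suggest 'batching' only if batch runs can meet the freshness target."""
    # Worst case: a record arrives just after a run starts, waits a full
    # interval for the next run, and then waits for that run to finish.
    worst_case_staleness = shortest_batch_interval_s + batch_runtime_s
    if worst_case_staleness <= max_staleness_s:
        return "batching"
    return "streaming"


# Hypothetical scenarios: a daily report versus a one-second fraud check.
daily_report = suggest_approach(86_400, 3_600, 600)
fraud_check = suggest_approach(1, 60, 5)
```

A result of "batching" only means batching is feasible on freshness grounds. The other factors named above still need to be weighed separately.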

libterm.com