Serverless computing lets you run applications without provisioning or managing servers, with automatic scaling and lower operational overhead. It can also be cost-efficient, because you are charged only for actual usage rather than for pre-allocated resources. The rest of this article compares serverless and containerized architectures to show where serverless can improve your development workflow.

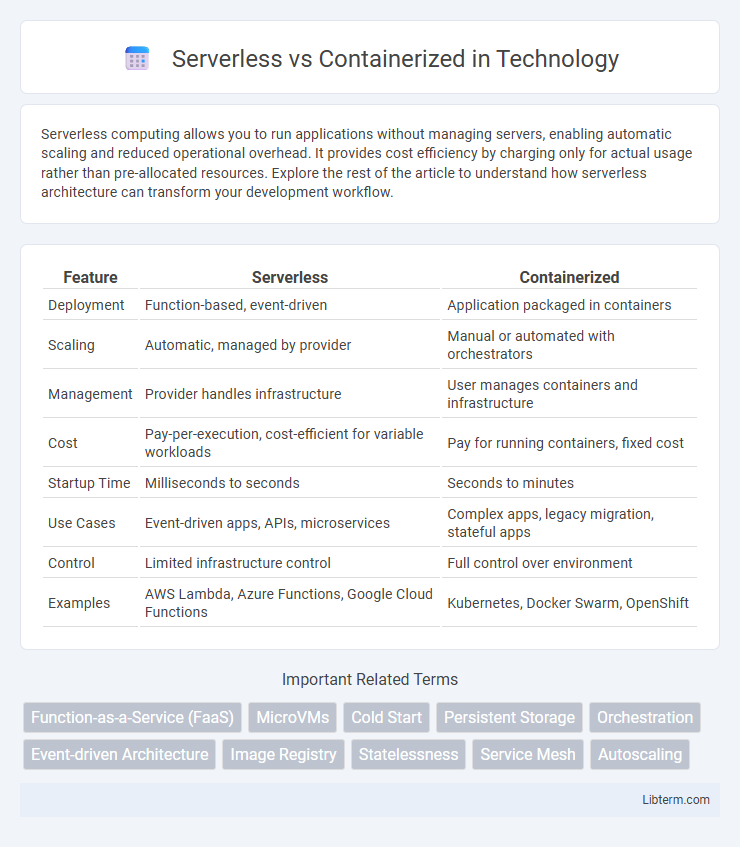

Comparison Table

| Feature | Serverless | Containerized |
|---|---|---|
| Deployment | Function-based, event-driven | Application packaged in containers |
| Scaling | Automatic, managed by provider | Manual, or automated with orchestrators |
| Management | Provider handles infrastructure | User manages containers and infrastructure |
| Cost | Pay-per-execution; cost-efficient for variable workloads | Pay for provisioned capacity while containers run, whether busy or idle |
| Startup Time | Milliseconds to seconds | Seconds to minutes |
| Use Cases | Event-driven apps, APIs, microservices | Complex apps, legacy migration, stateful apps |
| Control | Limited infrastructure control | Full control over environment |
| Examples | AWS Lambda, Azure Functions, Google Cloud Functions | Kubernetes, Docker Swarm, OpenShift |

Introduction to Serverless and Containerized Architectures

Serverless architecture lets developers build and run applications without managing infrastructure. The platform scales automatically with demand and bills only for actual usage, which makes it a good fit for event-driven workloads. Containerized architecture packages an application and its dependencies into portable, lightweight units that run consistently across computing environments. Orchestration tools such as Kubernetes then simplify deploying and managing those units, which suits microservices. Both architectures improve agility and scalability, but they differ in operational control: serverless abstracts infrastructure management away, while containers offer more customization and environment consistency.

Key Differences Between Serverless and Containers

Serverless computing abstracts server management and scales resources automatically with demand, while containerized applications run consistently across environments with explicitly allocated resources. Containers give you more control over the runtime environment and dependencies, which aids portability and customization; serverless prioritizes operational simplicity and event-driven execution. Their cost models also differ: serverless charges for execution time and the resources each invocation uses, whereas containers incur the cost of their allocated resources regardless of utilization.

Performance Comparison: Serverless vs Containerized

Serverless architectures scale out automatically in response to events, so they can absorb bursts of traffic without requests queuing behind a fixed pool of servers. The trade-off is the cold start. When no warm instance is available, the platform must initialize a new execution environment, and that adds latency an already-running container does not incur. Containerized deployments deliver more consistent performance with dedicated resource allocation, allowing CPU and memory to be tuned for steady or predictable workloads. The right choice depends on workload patterns: serverless excels with spiky traffic, containers with sustained, high-throughput applications.
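
The cold-start trade-off can be illustrated with a small local simulation. It assumes a platform that reuses an execution environment across consecutive invocations, as AWS Lambda does for warm starts. `handler`, `_expensive_init`, and the 50 ms delay are hypothetical stand-ins for real initialization work such as creating SDK clients or loading configuration.

```python
import time

# Module-level state persists between invocations that reuse the same
# execution environment (warm starts). It is reset only when the platform
# creates a new environment (cold start).
_resources = None
_init_count = 0


def _expensive_init():
    """Hypothetical stand-in for creating SDK clients or loading config."""
    global _init_count
    _init_count += 1
    time.sleep(0.05)  # simulated initialization cost
    return {"ready": True}


def handler(event, context=None):
    global _resources
    cold_start = _resources is None
    if cold_start:
        # Paid once per execution environment, not once per request.
        _resources = _expensive_init()
    return {"cold_start": cold_start, "init_count": _init_count}
```

Only the first call pays the initialization cost, and later calls in the same environment skip it. This is why serverless guidance commonly recommends doing expensive setup once, outside the per-request path.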

Scalability and Resource Management

Serverless architectures automatically scale based on demand, eliminating the need for manual resource allocation and efficiently handling unpredictable workloads with fine-grained billing. Containerized environments provide granular control over resource management, allowing users to customize scaling policies and optimize performance for consistent workloads using orchestrators like Kubernetes. Both approaches offer robust scalability, but serverless excels in dynamic, event-driven applications, while containerization is suited for complex, stateful services requiring persistent infrastructure management.
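
On the container side, the Kubernetes Horizontal Pod Autoscaler documents its core rule as `desiredReplicas = ceil(currentReplicas * currentMetricValue / desiredMetricValue)`. It skips scaling while the ratio stays within a tolerance, which defaults to 0.1. The function below is a simplified sketch of that rule. It deliberately omits stabilization windows, pod readiness, and missing-metric handling, and its `min_replicas` and `max_replicas` defaults are arbitrary.

```python
import math


def desired_replicas(current_replicas: int,
                     current_metric: float,
                     target_metric: float,
                     min_replicas: int = 1,
                     max_replicas: int = 10,
                     tolerance: float = 0.1) -> int:
    """Simplified sketch of the Kubernetes HPA scaling rule:
    desired = ceil(current_replicas * current_metric / target_metric).
    Omits stabilization windows, pod readiness, and missing metrics."""
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        # Close enough to the target: keep the current replica count.
        desired = current_replicas
    else:
        desired = math.ceil(current_replicas * ratio)
    # Clamp to the configured bounds.
    return max(min_replicas, min(max_replicas, desired))
```

For example, 4 replicas averaging 90% CPU against a 50% target yield `ceil(4 * 1.8) = 8` replicas. The orchestrator applies this scaling policy, but you choose the metric, the target, and the bounds.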

Cost Efficiency: Serverless vs Containerized Solutions

Serverless solutions can be cost-efficient because they charge strictly for actual usage, with no spending on idle capacity or server maintenance. Containerized solutions incur costs for allocated compute whether or not it is utilized, which can raise baseline expenses and requires capacity planning. Enterprises with variable workloads tend to benefit from serverless architectures. For predictable, steady workloads, containerized deployments may be more cost-effective: once utilization is consistently high, paying a flat rate for provisioned capacity can cost less than paying per request.
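
The break-even reasoning can be made concrete with a simple cost model. This is a sketch under stated assumptions. It assumes serverless pricing made of a per-request fee plus a per-GB-second compute fee, and container pricing as a flat hourly rate per always-on instance. All rates are hypothetical placeholders, not any provider's actual prices.

```python
from dataclasses import dataclass


@dataclass
class ServerlessPricing:
    # Hypothetical placeholder rates; substitute your provider's published prices.
    per_million_requests: float
    per_gb_second: float


@dataclass
class ContainerPricing:
    # Hypothetical placeholder rate for one always-on container instance.
    per_instance_hour: float


HOURS_PER_MONTH = 730.0  # average hours in a month (8,760 / 12)


def serverless_cost_per_request(avg_duration_s: float, memory_gb: float,
                                p: ServerlessPricing) -> float:
    """Request fee plus compute fee (duration x memory x GB-second rate)."""
    return (p.per_million_requests / 1_000_000
            + avg_duration_s * memory_gb * p.per_gb_second)


def serverless_monthly_cost(requests: int, avg_duration_s: float,
                            memory_gb: float, p: ServerlessPricing) -> float:
    # Scales linearly with traffic; zero requests means zero cost.
    return requests * serverless_cost_per_request(avg_duration_s, memory_gb, p)


def container_monthly_cost(instances: int, p: ContainerPricing) -> float:
    # Billed for every provisioned hour, whether the containers are busy or idle.
    return instances * HOURS_PER_MONTH * p.per_instance_hour


def break_even_requests(avg_duration_s: float, memory_gb: float,
                        p: ServerlessPricing, container_cost: float) -> float:
    """Monthly request volume at which both options cost the same."""
    return container_cost / serverless_cost_per_request(avg_duration_s, memory_gb, p)
```

Suppose the placeholder rates are $1.00 per million requests, $0.00001 per GB-second, and $0.05 per instance-hour. For a 100 ms, 1 GB function, the break-even point against two always-on instances is then 36.5 million requests per month, and above that volume the containers are cheaper. The model ignores free tiers, data transfer, and the spare headroom containers usually need, so treat it as a starting point for your own numbers.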

Deployment and Development Workflow

Serverless deployments abstract infrastructure management, so developers can focus on writing functions that scale automatically with demand. This shortens iteration cycles and reduces operational overhead. Containerized deployments typically run on orchestration platforms such as Kubernetes. These platforms offer fine-grained control over application environments, but they add setup and maintenance work to the development workflow. Serverless environments are optimized for rapid deployment of event-driven code. Containerized solutions, by contrast, keep environments consistent across development, testing, and production, which makes issues easier to reproduce and debug.
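
To illustrate how little deployment scaffolding a function needs, here is a minimal Python handler in the shape AWS Lambda expects (`lambda_handler(event, context)`). It handles an HTTP request arriving through an API Gateway proxy integration. The greeting logic and the `name` query parameter are hypothetical. A containerized equivalent would typically also need a web server, a Dockerfile, and orchestration manifests.

```python
import json


def lambda_handler(event, context):
    """Handle an HTTP request delivered via an API Gateway proxy integration.
    The greeting logic and 'name' parameter are hypothetical examples."""
    # queryStringParameters is None when the request has no query string.
    params = event.get("queryStringParameters") or {}
    name = params.get("name", "world")
    # Proxy integrations expect statusCode, optional headers, and a string body.
    return {
        "statusCode": 200,
        "headers": {"Content-Type": "application/json"},
        "body": json.dumps({"message": f"Hello, {name}!"}),
    }
```

Because the handler is a plain function, you can unit-test it locally by passing a dictionary shaped like the event, for example `lambda_handler({"queryStringParameters": {"name": "Ada"}}, None)`.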

Security Considerations in Serverless and Containerized Architectures

Serverless architectures shrink the attack surface you are responsible for by abstracting the infrastructure, which reduces patching and configuration work. However, they require strict IAM policies to prevent unauthorized function invocation. Containerized environments give more control over runtime security through tools such as Kubernetes RBAC, image scanning, and network segmentation, but they demand consistent vulnerability management and host security practices. Both models need robust monitoring and encrypted communication to mitigate the risks of multi-tenant environments and dynamic scaling.
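
Least privilege for function invocation can be expressed as an IAM policy that grants `lambda:InvokeFunction` on a single function ARN rather than on `*`. The sketch below builds such a policy document in Python and adds a deliberately simple wildcard check. The account ID, region, and function name in `ARN` are placeholders, and `has_wildcards` is a hypothetical helper, not an AWS tool.

```python
import json


def invoke_only_policy(function_arn: str) -> dict:
    """Identity-based IAM policy allowing invocation of exactly one function."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Action": "lambda:InvokeFunction",
                "Resource": function_arn,
            }
        ],
    }


def has_wildcards(policy: dict) -> bool:
    """Hypothetical lint: flag Allow statements whose Action or Resource
    contains '*'. A quick check, not a substitute for a full policy review."""
    for stmt in policy["Statement"]:
        if stmt.get("Effect") != "Allow":
            continue
        for key in ("Action", "Resource"):
            values = stmt.get(key, [])
            if isinstance(values, str):  # IAM allows a string or a list
                values = [values]
            if any("*" in v for v in values):
                return True
    return False


# Placeholder ARN: hypothetical region, account ID, and function name.
ARN = "arn:aws:lambda:us-east-1:123456789012:function:orders-api"

if __name__ == "__main__":
    print(json.dumps(invoke_only_policy(ARN), indent=2))
```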

Use Cases Best Suited for Serverless

Serverless computing excels in event-driven applications, real-time data processing, and rapid development of microservices where automatic scaling and cost efficiency are crucial. Ideal use cases include APIs, chatbots, IoT backends, and lightweight ETL jobs requiring minimal infrastructure management. This model reduces operational complexity and is best suited for unpredictable workloads with variable traffic patterns.
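
A lightweight ETL step fits this model well. The sketch below assumes an AWS Lambda function triggered by an SQS queue with partial batch responses (`ReportBatchItemFailures`) enabled, so that only malformed messages return to the queue for retry. The reading schema (`device_id`, `temp_f`) and the `transform` helper are hypothetical.

```python
import json


def transform(records):
    """Parse hypothetical IoT readings ({"device_id": ..., "temp_f": ...}) and
    convert Fahrenheit to Celsius. Returns (rows, failed_message_ids)."""
    rows, failed = [], []
    for record in records:
        try:
            reading = json.loads(record["body"])
            rows.append({
                "device_id": reading["device_id"],
                "temp_c": round((reading["temp_f"] - 32) * 5 / 9, 2),
            })
        except (ValueError, KeyError, TypeError):
            # Malformed JSON, missing fields, or wrong types: mark as failed.
            failed.append(record["messageId"])
    return rows, failed


def lambda_handler(event, context):
    rows, failed = transform(event.get("Records", []))
    # A real job would write `rows` to a datastore here (omitted).
    # With ReportBatchItemFailures enabled, only the listed messages are retried.
    return {"batchItemFailures": [{"itemIdentifier": mid} for mid in failed]}
```

Keeping the transformation in a separate pure function makes it testable without any cloud resources.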

Use Cases Best Suited for Containerized Architectures

Containerized applications excel where environments must stay consistent across development, testing, and production, for example in microservices architectures where isolation and scalability are critical. They are well suited to stateful applications, long-running processes, and workloads that need fine-grained control over infrastructure and dependencies. Enterprises that rely heavily on DevOps pipelines benefit from containerization because it integrates with orchestration tools such as Kubernetes, which provide efficient resource management and rolling updates.
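
Long-running containerized processes should shut down cleanly when the orchestrator stops them. Kubernetes sends `SIGTERM` first and follows with `SIGKILL` if the process has not exited by the end of the grace period. The following is a minimal sketch of a worker loop that honors `SIGTERM`. `Worker` and `process_one_job` are hypothetical names standing in for real work.

```python
import signal
import threading


class Worker:
    """Long-running loop that stops cleanly when asked (e.g. on SIGTERM)."""

    def __init__(self, poll_interval: float = 0.5):
        self.poll_interval = poll_interval
        self.stop_event = threading.Event()
        self.jobs_done = 0

    def request_stop(self, signum=None, frame=None):
        # Signature matches what signal.signal passes to a handler.
        self.stop_event.set()

    def process_one_job(self):
        # Hypothetical placeholder for real work (queue poll, batch step, ...).
        self.jobs_done += 1

    def run(self) -> int:
        while not self.stop_event.is_set():
            self.process_one_job()
            # Wait between jobs, but wake immediately if a stop is requested.
            self.stop_event.wait(self.poll_interval)
        # Flush state and close connections here before exiting.
        return self.jobs_done


if __name__ == "__main__":
    worker = Worker()
    # Signal handlers must be installed from the main thread.
    signal.signal(signal.SIGTERM, worker.request_stop)
    worker.run()
```

Using an `Event` for both the sleep and the stop flag means the loop reacts to `SIGTERM` right away instead of finishing a full sleep interval first.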

Choosing the Right Architecture for Your Application

Serverless architecture enables automatic scaling and reduced infrastructure management, ideal for event-driven applications with variable workloads. Containerized solutions offer more control and consistency across environments, making them suitable for complex, microservices-based systems requiring custom configurations. Evaluating factors such as application complexity, scalability needs, and operational overhead is essential to choose between serverless and containerized architectures effectively.

Serverless Infographic

libterm.com
