On-premise AI solutions host AI infrastructure within an organization's own facilities, giving the business direct control over its data and reducing the exposure that comes with sending it to third-party cloud services. These systems can be customized to specific organizational needs, and they avoid the round trip to a remote cloud, which lowers latency for time-sensitive processing. The sections below compare on-premise AI with edge AI across deployment, latency, privacy, scalability, and typical use cases.

Table of Comparison

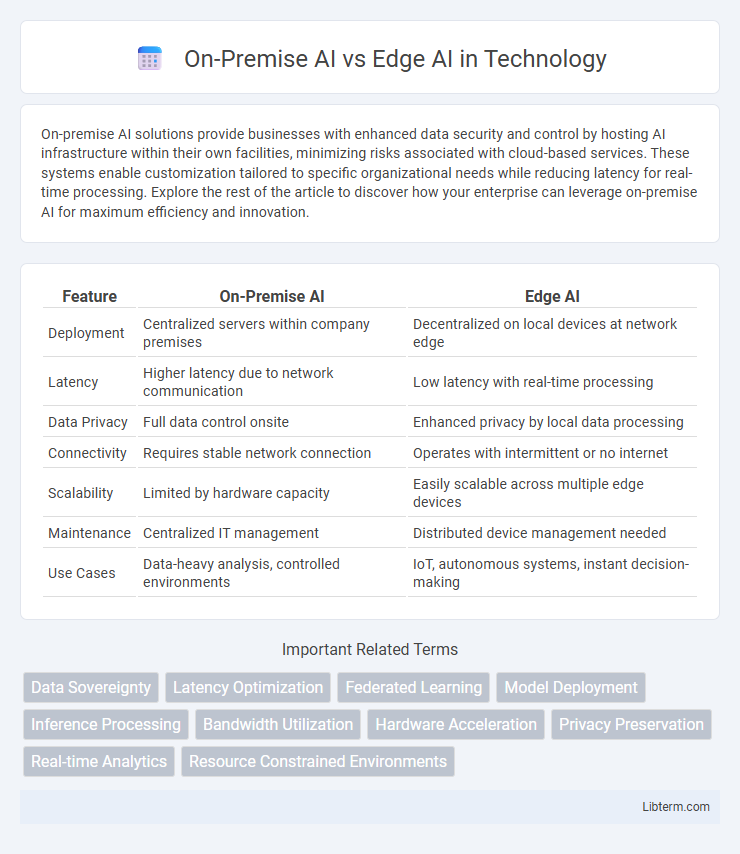

| Feature | On-Premise AI | Edge AI |
|---|---|---|
| Deployment | Centralized servers within company premises | Decentralized on local devices at network edge |
| Latency | Higher latency due to network communication | Low latency with real-time processing |
| Data Privacy | Full data control onsite | Enhanced privacy by local data processing |
| Connectivity | Requires stable network connection | Operates with intermittent or no internet |
| Scalability | Limited by hardware capacity | Easily scalable across multiple edge devices |
| Maintenance | Centralized IT management | Distributed device management needed |
| Use Cases | Data-heavy analysis, controlled environments | IoT, autonomous systems, instant decision-making |

Introduction to On-Premise AI and Edge AI

On-Premise AI involves deploying artificial intelligence models and data processing within an organization's local infrastructure, giving it greater control over data security, latency, and compliance. Edge AI decentralizes computation by executing AI algorithms directly on devices at the network edge, such as IoT sensors or smartphones, enabling real-time analytics and reducing dependency on cloud connectivity. Each approach suits different operational demands, and choosing between them means trading off latency, bandwidth, privacy, and scalability.

Core Definitions: What Sets On-Premise AI Apart from Edge AI?

On-Premise AI operates within a centralized data center or local servers, enabling robust data security, control, and integration with existing IT infrastructure. Edge AI processes data locally on devices or edge nodes near the data source, reducing latency and bandwidth use while supporting real-time decision-making in distributed environments. The fundamental distinction lies in deployment location: On-Premise AI is centralized, suited for heavy computational tasks, whereas Edge AI emphasizes decentralized, low-latency processing at or near the device level.

Architecture and Deployment Differences

On-Premise AI relies on centralized data centers within an organization, providing robust processing power and strict data control, while Edge AI deploys intelligence directly on devices at the network's edge, enabling real-time processing with reduced latency. On-Premise AI architectures typically involve high-performance servers, extensive storage, and complex networking infrastructure, whereas Edge AI integrates lightweight models into edge devices like sensors, IoT devices, or gateways for decentralized computation. Deployment of On-Premise AI requires maintaining dedicated facilities and managing data security internally, contrasting with Edge AI's model of distributed deployment prioritizing immediate data processing and minimal cloud dependency.

Key Advantages of On-Premise AI

On-Premise AI offers enhanced data security by keeping sensitive information within the local infrastructure, reducing exposure to external threats. Because data never leaves the internal network, latency is lower than with cloud services and processing continues without internet connectivity. Requests still cross the local network to reach the servers, however, so latency is typically higher than with on-device edge inference. Organizations also gain greater control over hardware customization and can more easily meet strict regulatory requirements.

Key Advantages of Edge AI

Edge AI processes data locally on devices such as sensors or smartphones, enabling real-time decision-making with minimal latency, crucial for applications like autonomous vehicles and industrial automation. It enhances data privacy and security by limiting the need to transmit sensitive information to centralized servers, reducing exposure to cyber threats. Edge AI also reduces bandwidth usage and operational costs by minimizing reliance on cloud infrastructure, ensuring more efficient and scalable deployment in distributed environments.

Challenges Faced by On-Premise AI Solutions

On-premise AI solutions face significant challenges, including high infrastructure costs, complex maintenance requirements, and limited scalability compared to cloud-based alternatives. Data security also becomes the organization's own responsibility, requiring robust internal protection against breaches and unauthorized access. Furthermore, because data must travel from its source to central servers before it is processed, on-premise AI can fall short in latency-sensitive applications.

Challenges Faced by Edge AI Implementations

Edge AI implementations face significant challenges, chief among them the limited computational power and storage capacity of edge devices, which restrict how complex a model can run locally. Unreliable network connectivity and the need to process data in real time further complicate maintaining consistent performance and reliability. Security and data privacy risks also arise because data is handled across many distributed devices rather than in one controlled location.
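The storage constraint can be made concrete with a rough estimate of how much memory a model's weights occupy at different numeric precisions. The sketch below is illustrative only: the parameter count and the device budget are hypothetical values, the function names are invented for this example, and the estimate covers weights alone, ignoring activations and runtime overhead.

```python
# Bytes needed to store one parameter at common numeric precisions.
BYTES_PER_PARAM = {"float32": 4, "float16": 2, "int8": 1}


def weight_footprint_mb(num_params: int, dtype: str) -> float:
    """Approximate size of the model weights in megabytes (10**6 bytes)."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e6


def fits_on_device(num_params: int, dtype: str, device_budget_mb: float) -> bool:
    """True if the weights alone fit within the device's memory budget."""
    return weight_footprint_mb(num_params, dtype) <= device_budget_mb


if __name__ == "__main__":
    params = 25_000_000  # hypothetical model size (assumption)
    budget_mb = 64.0     # hypothetical edge-device budget (assumption)
    for dtype in BYTES_PER_PARAM:
        size = weight_footprint_mb(params, dtype)
        ok = fits_on_device(params, dtype, budget_mb)
        print(f"{dtype}: {size:.0f} MB, fits={ok}")
```

Under these assumed numbers, the full-precision weights exceed the budget while the reduced-precision variants fit, which is one reason edge deployments often rely on smaller or quantized models.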

Security and Data Privacy Considerations

On-Premise AI offers robust security by keeping sensitive data within internal networks, minimizing exposure to external threats and supporting compliance with strict data privacy regulations such as GDPR and HIPAA. Edge AI processes data locally on devices, which lowers the risk of data breaches during transmission, but it requires strong endpoint security to protect distributed nodes from cyberattacks. Both architectures demand tailored encryption, access controls, and continuous monitoring to safeguard data integrity and privacy.

Use Cases: When to Choose On-Premise vs Edge AI

On-premise AI is ideal for industries requiring stringent data privacy and regulatory compliance, such as healthcare and finance, where sensitive data must remain on internal servers. Edge AI excels in real-time decision-making scenarios like autonomous vehicles, smart manufacturing, and IoT devices, where low latency and immediate processing at the data source are critical. Choosing between on-premise and edge AI depends on factors like data sensitivity, latency requirements, infrastructure costs, and the need for real-time analytics.
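The factors above can be sketched as a simple rule-based helper. This is a minimal illustration rather than a definitive decision procedure: the `Workload` fields, the 50 ms latency threshold, and the "hybrid" outcome for workloads that need both are assumptions made for this example, and infrastructure cost is left out for brevity.

```python
from dataclasses import dataclass


@dataclass
class Workload:
    """Hypothetical description of an AI workload (all fields are assumptions)."""
    data_must_stay_on_internal_servers: bool  # e.g. regulated health or finance data
    max_latency_ms: float                     # tightest acceptable response time
    reliable_network_to_servers: bool         # can devices always reach central servers?


# Illustrative cutoff only; a real threshold depends on the application.
EDGE_LATENCY_THRESHOLD_MS = 50.0


def suggest_deployment(w: Workload) -> str:
    """Return 'on-premise', 'edge', or 'hybrid' based on simple rules."""
    needs_edge = (
        w.max_latency_ms < EDGE_LATENCY_THRESHOLD_MS
        or not w.reliable_network_to_servers
    )
    if needs_edge and w.data_must_stay_on_internal_servers:
        return "hybrid"      # local inference plus central storage and governance
    if needs_edge:
        return "edge"
    return "on-premise"


if __name__ == "__main__":
    print(suggest_deployment(Workload(True, 500.0, True)))   # regulated batch analysis
    print(suggest_deployment(Workload(False, 10.0, True)))   # fast on-device control
    print(suggest_deployment(Workload(True, 10.0, False)))   # sensitive and time-critical
```

The helper only chooses between the two approaches discussed here, so workloads with neither constraint default to on-premise.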

Future Trends in On-Premise and Edge AI

Future trends in on-premise AI emphasize enhanced data security, increased computational power, and real-time processing capabilities within localized environments to meet enterprise demands. Edge AI is rapidly advancing with improved hardware efficiency, AI model optimization for low-latency inference, and seamless integration with 5G networks, enabling smarter IoT devices and autonomous systems. The convergence of on-premise and edge AI solutions suggests a hybrid future where distributed intelligence balances privacy, speed, and scalability across industries.

libterm.com