Cloud servers offer scalable, reliable, and flexible hosting that adapts to your business needs without the fixed capacity limits of traditional physical hardware. They provide centrally managed security, straightforward resource management, and pay-as-you-go pricing that can lower operating costs. Edge nodes complement them by processing data close to where it is generated.

Table of Comparison

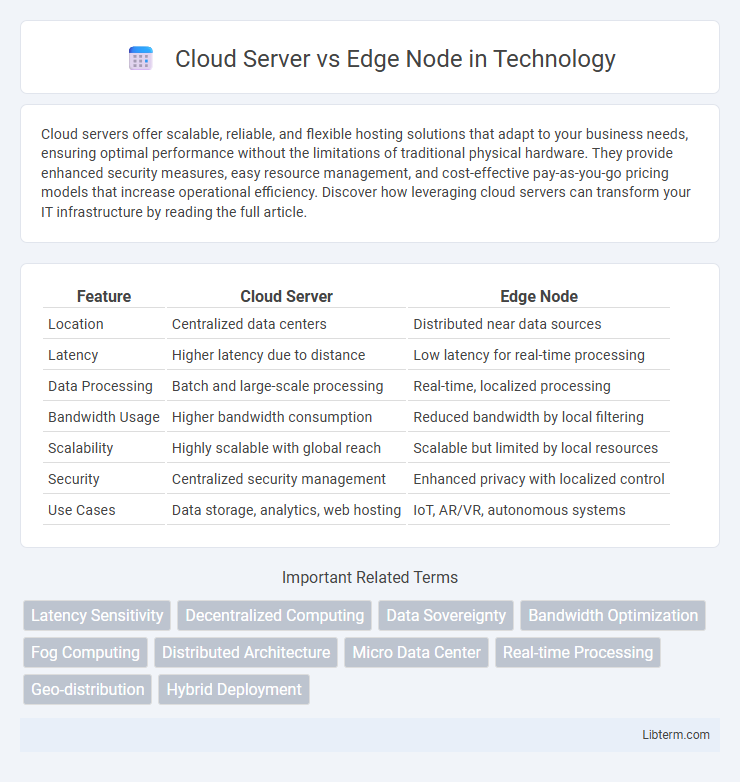

| Feature | Cloud Server | Edge Node |
|---|---|---|
| Location | Centralized data centers | Distributed near data sources |
| Latency | Higher latency due to distance | Low latency for real-time processing |
| Data Processing | Batch and large-scale processing | Real-time, localized processing |
| Bandwidth Usage | Higher bandwidth consumption | Reduced bandwidth by local filtering |
| Scalability | Highly scalable with global reach | Scalable but limited by local resources |
| Security | Centralized security management | Enhanced privacy with localized control |
| Use Cases | Data storage, analytics, web hosting | IoT, AR/VR, autonomous systems |

Introduction to Cloud Servers and Edge Nodes

Cloud servers are centralized, virtualized computing resources hosted in data centers, providing scalable and flexible infrastructure for diverse applications and large-scale data processing. Edge nodes are decentralized computing points located closer to end users, enabling real-time data processing and reduced latency by handling tasks at the network's edge. This distinction highlights cloud servers' strength in heavy-duty computation and storage, while edge nodes optimize responsiveness and bandwidth efficiency for distributed environments.

Key Features of Cloud Servers

Cloud servers provide scalable virtualized resources hosted in centralized data centers, offering high availability, robust security protocols, and flexible configurations to accommodate diverse workloads. They support rapid deployment, automated backup, and seamless integration with cloud services like AI, databases, and analytics tools. Their pay-as-you-go pricing model and global accessibility make cloud servers ideal for enterprises seeking cost-efficient, reliable, and scalable infrastructure.

Core Functions of Edge Nodes

Edge nodes perform critical functions such as real-time data processing, local analytics, and latency reduction by bringing computation closer to data sources. Unlike cloud servers, edge nodes enhance responsiveness and bandwidth efficiency by handling tasks like IoT data aggregation, event filtering, and immediate decision-making at the network edge. These core capabilities enable edge nodes to support applications requiring low-latency processing, such as autonomous vehicles, smart grids, and augmented reality.
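The event filtering and data aggregation described above can be illustrated with a minimal Python sketch. The function name `filter_and_aggregate`, the reading format, the threshold, and the window size are all hypothetical choices made for illustration; a real edge node would adapt them to its own sensors and protocols.

```python
def filter_and_aggregate(readings, threshold, window):
    """Summarize raw samples locally and keep only out-of-range events.

    readings:  list of numeric sensor samples (hypothetical input format)
    threshold: samples strictly above this value are reported as events
    window:    number of consecutive samples folded into one average
    """
    summaries = []
    events = []
    for start in range(0, len(readings), window):
        chunk = readings[start:start + window]
        # One average per window replaces the raw samples sent upstream.
        summaries.append(sum(chunk) / len(chunk))
        # Record (index, value) for every sample above the threshold.
        events.extend(
            (start + offset, value)
            for offset, value in enumerate(chunk)
            if value > threshold
        )
    return {"summaries": summaries, "events": events}


# Eight raw samples shrink to two averages plus one flagged event.
readings = [20, 22, 21, 37, 20, 20, 22, 22]
payload = filter_and_aggregate(readings, threshold=30, window=4)
```

In this sketch only `payload` would be forwarded to the cloud, which is how local filtering can reduce bandwidth use while still surfacing the unusual reading immediately.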

Architectural Differences: Cloud vs Edge

Cloud servers run in centralized data centers with vast computational resources, offering scalable storage and processing capabilities through virtualized infrastructure. Edge nodes operate closer to data sources, providing localized computing power and low-latency processing by distributing workloads across many geographically dispersed devices. The architectural difference is that the cloud aggregates resources centrally, while the edge handles data in a decentralized, real-time fashion to improve responsiveness.

Performance and Latency Comparison

Cloud servers deliver robust computational power and storage capacity centralized in data centers, which may introduce higher latency due to the physical distance between users and servers. Edge nodes process data closer to the source, significantly reducing latency and improving real-time performance by minimizing data travel time and network congestion. Consequently, edge nodes excel in applications requiring immediate responsiveness, while cloud servers are optimal for intensive processing tasks needing extensive resources.
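To make the effect of physical distance concrete, the sketch below estimates the round-trip propagation delay over optical fiber, where signals travel at roughly 200,000 km/s (about two-thirds of the speed of light in a vacuum). The distances are hypothetical, and the result is only a lower bound: real latency also includes routing, queuing, and processing time.

```python
# Approximate signal speed in optical fiber, about two-thirds of c.
FIBER_SPEED_KM_PER_S = 200_000


def round_trip_propagation_ms(distance_km):
    """Lower bound on round-trip time from propagation delay alone."""
    return 2 * distance_km * 1000 / FIBER_SPEED_KM_PER_S


# Hypothetical distances: a remote cloud region vs. a nearby edge node.
cloud_rtt_ms = round_trip_propagation_ms(2000)
edge_rtt_ms = round_trip_propagation_ms(20)

print(f"cloud: {cloud_rtt_ms:.1f} ms, edge: {edge_rtt_ms:.1f} ms")
```

Under these assumed distances the propagation delay alone differs by a factor of 100, which is why distance matters most for applications with tight response-time budgets.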

Security Implications in Cloud and Edge Computing

Cloud servers centralize data storage in large-scale data centers, which increases exposure to cyber threats such as DDoS attacks and data breaches, but they benefit from robust, continuously updated security frameworks. Edge nodes process data closer to the source, which reduces the amount of data exposed in transit, yet they face challenges in physical security and device-level vulnerabilities. Implementing end-to-end encryption and rigorous access controls in both environments is essential to mitigate the risks inherent in each architecture.

Scalability: Cloud Servers vs Edge Nodes

Cloud servers offer extensive scalability by leveraging vast centralized data centers with dynamic resource allocation, enabling rapid expansion and high-volume processing tailored to fluctuating demands. Edge nodes provide localized scalability by distributing computational power closer to end-users, reducing latency and bandwidth usage, but typically support more limited resource scaling compared to centralized cloud servers. Combining cloud servers with edge nodes creates a hybrid architecture that maximizes scalability through centralized resource abundance and edge-level responsiveness.

Cost Considerations and ROI Analysis

Cloud servers offer scalable resources billed as usage-based operational expenses, making them cost-efficient for fluctuating workloads, but data-intensive applications may pay for that in higher latency and data transfer costs. Edge nodes reduce data transmission expenses and latency by processing information closer to the source, which can enhance real-time performance and lower bandwidth usage, but they require upfront investment in distributed hardware. ROI analysis must account for workload patterns, data sensitivity, geographic distribution, and long-term maintenance to determine whether centralizing on cloud infrastructure or deploying edge nodes delivers better cost efficiency and value.
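One simple way to frame the trade-off between upfront edge hardware and recurring cloud charges is a break-even calculation. The sketch below is a deliberately simplified model: the function name `break_even_months` and all cost figures are hypothetical, and it ignores factors the paragraph above mentions, such as maintenance and data sensitivity.

```python
import math


def break_even_months(upfront_edge_cost, monthly_cloud_cost, monthly_edge_cost):
    """Months until cumulative edge spending is no higher than cloud spending.

    Returns None when the edge deployment never pays for itself.
    """
    monthly_saving = monthly_cloud_cost - monthly_edge_cost
    if monthly_saving <= 0:
        return None
    return math.ceil(upfront_edge_cost / monthly_saving)


# Hypothetical figures: 12,000 upfront, 1,500/month cloud vs. 500/month edge.
months = break_even_months(12_000, 1_500, 500)
print(months)  # 12
```

If the monthly saving is small or negative, the upfront investment never pays back under this model, which would favor staying on cloud infrastructure.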

Use Cases for Cloud Servers and Edge Nodes

Cloud servers excel in handling large-scale data processing, centralized storage, and hosting web applications, making them ideal for enterprises requiring robust computing power and scalable resources. Edge nodes are essential for real-time data processing, low-latency applications, and Internet of Things (IoT) deployments where data is generated at the network edge, such as autonomous vehicles and smart city sensors. Use cases for cloud servers include big data analytics, disaster recovery, and SaaS platforms, while edge nodes are preferred for video streaming optimization, augmented reality, and industrial automation.

Choosing Between Cloud Server and Edge Node: Decision Factors

Choosing between a cloud server and an edge node depends on factors such as latency requirements, data processing needs, and network bandwidth availability. Cloud servers offer scalable resources and centralized management, ideal for applications that tolerate higher latency and require extensive data storage. Edge nodes excel in delivering low-latency computing and real-time data processing closer to end-users, making them suitable for IoT, augmented reality, and mission-critical applications.
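The decision factors above can be expressed as a small rule-of-thumb helper. This is only an illustrative sketch: the function name, the parameters, and the 20 ms cutoff are assumptions rather than established thresholds, and a real decision would weigh many more variables.

```python
def suggest_deployment(latency_budget_ms, needs_large_storage,
                       bandwidth_constrained, edge_latency_cutoff_ms=20):
    """Return "edge", "cloud", or "hybrid" from three coarse inputs.

    edge_latency_cutoff_ms is a hypothetical threshold below which
    the workload is treated as latency-sensitive.
    """
    wants_edge = (
        latency_budget_ms < edge_latency_cutoff_ms or bandwidth_constrained
    )
    if wants_edge and needs_large_storage:
        # Low latency at the edge, bulk storage in the cloud.
        return "hybrid"
    if wants_edge:
        return "edge"
    return "cloud"


print(suggest_deployment(10, False, False))   # edge
print(suggest_deployment(200, True, False))   # cloud
print(suggest_deployment(10, True, False))    # hybrid
```

The "hybrid" outcome reflects the combined architecture described in the scalability section, where edge nodes handle time-critical work and cloud servers handle storage and heavy processing.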

Cloud Server Infographic

libterm.com
