Tokenization is a data security technique that replaces sensitive values, such as payment card numbers or personal identifiers, with non-sensitive substitutes called tokens. It is often compared with end-to-end encryption (E2EE), which protects data by encrypting it so that only the communicating endpoints can read it. The rest of this article explains how each method works, where each fits, and how to choose between them for your applications.

Comparison Table

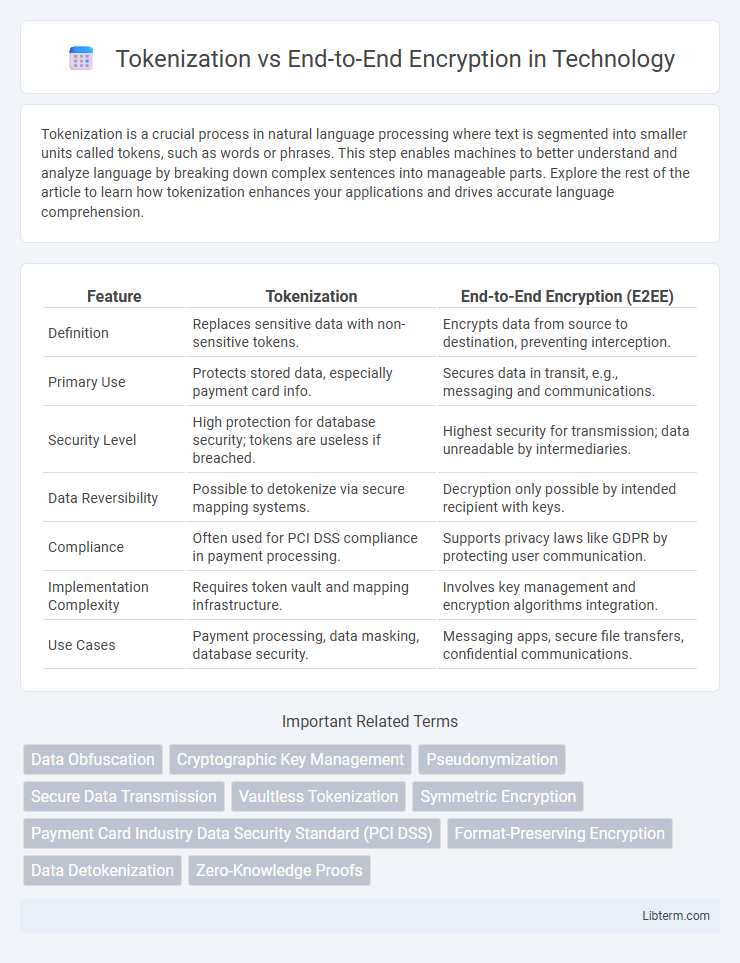

| Feature | Tokenization | End-to-End Encryption (E2EE) |
|---|---|---|
| Definition | Replaces sensitive data with non-sensitive tokens. | Encrypts data at the source so that only the intended recipient can decrypt it, preventing interception. |
| Primary Use | Protects stored data, especially payment card information. | Secures data in transit, e.g., messaging and other communications. |
| Security Level | Strong protection for stored data; stolen tokens are useless without access to the token vault. | Strong protection in transit; intermediaries, including the service provider, see only ciphertext. |
| Data Reversibility | Tokens can be detokenized only through the secure mapping system (token vault). | Only the intended recipient, who holds the decryption key, can decrypt the data. |
| Compliance | Often used to reduce PCI DSS scope in payment processing. | Supports privacy regulations such as GDPR by protecting user communications. |
| Implementation Complexity | Typically requires a token vault and mapping infrastructure. | Requires key management and integration of encryption algorithms. |
| Use Cases | Payment processing, data masking, database security. | Messaging apps, secure file transfers, confidential communications. |

Introduction to Data Security Methods

Tokenization replaces sensitive data with substitute values (tokens) that can stand in for the original in everyday processing without exposing it. End-to-End Encryption (E2EE) secures data by encrypting it at the source and decrypting it only at the destination, so intermediaries cannot read it. Both methods strengthen a data security framework: tokenization mainly reduces how widely sensitive data is stored and exposed, while E2EE protects data in transit.

What is Tokenization?

Tokenization is a data security process that replaces sensitive information, such as credit card numbers or personal identifiers, with unique, non-sensitive tokens that have no exploitable value outside the specific system. These tokens are stored and used in place of the original data, significantly reducing the risk of data breaches during transactions or data storage. Unlike end-to-end encryption, which secures data in transit, tokenization minimizes exposure by ensuring that sensitive data is never stored or transmitted beyond secure environments.

What is End-to-End Encryption?

End-to-end encryption (E2EE) ensures that data is encrypted on the sender's device and only decrypted on the recipient's device, preventing intermediaries from accessing the information. Unlike tokenization, which replaces sensitive data with non-sensitive tokens for secure storage and processing, E2EE secures communication channels by encrypting messages throughout transmission. This method is widely used in messaging apps, financial transactions, and data privacy solutions to maintain confidentiality and integrity.

Key Differences Between Tokenization and End-to-End Encryption

Tokenization replaces sensitive data with non-sensitive placeholders called tokens, which cannot be mapped back to the original values without access to the tokenization system; this keeps the real data isolated in one place. End-to-end encryption (E2EE) encrypts data on the sender's side so that only the intended recipient can decrypt it, and no intermediary can read it in transit. The key difference is that tokenization protects data at rest by substituting it, while E2EE protects data in transit by encrypting it between the endpoints.

How Tokenization Works in Practice

Tokenization replaces sensitive data with unique identification symbols called tokens that have no exploitable meaning or value outside their specific context. In practice, the system generates a random or pseudo-random token and records its mapping to the original data, which is stored securely in a centralized token vault. This reduces breach risk because sensitive information, such as credit card numbers or personal identifiers, is not exposed to the systems that process transactions; used alongside encryption, it narrows exposure further than encryption alone.
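The vault-based flow described above can be sketched in Python. This is a minimal illustration under stated assumptions: the `TokenVault` class, its method names, and the `tok_` prefix are hypothetical, and the in-memory dictionaries stand in for what would in practice be a hardened, access-controlled vault service. Tokens come from `secrets.token_urlsafe`, so they are random and have no mathematical relationship to the original value.

```python
import secrets


class TokenVault:
    """Hypothetical in-memory stand-in for a token vault.

    A real vault is a hardened, access-controlled, audited service,
    not a pair of Python dicts.
    """

    def __init__(self) -> None:
        self._token_to_value: dict[str, str] = {}
        self._value_to_token: dict[str, str] = {}

    def _new_token(self) -> str:
        # Pure randomness: nothing about the original value can be derived from it
        return "tok_" + secrets.token_urlsafe(16)

    def tokenize(self, value: str) -> str:
        # Design choice in this sketch: the same value always maps to one token
        if value in self._value_to_token:
            return self._value_to_token[value]
        token = self._new_token()
        while token in self._token_to_value:  # regenerate on the (unlikely) collision
            token = self._new_token()
        self._token_to_value[token] = value
        self._value_to_token[value] = token
        return token

    def detokenize(self, token: str) -> str:
        # Only code with access to the vault can recover the original value
        try:
            return self._token_to_value[token]
        except KeyError:
            raise KeyError("unknown token") from None


if __name__ == "__main__":
    vault = TokenVault()
    token = vault.tokenize("4111 1111 1111 1111")
    # Downstream systems (order history, analytics) store only `token`.
    print(token.startswith("tok_"))                          # True
    print(vault.detokenize(token))                           # 4111 1111 1111 1111
    print(vault.tokenize("4111 1111 1111 1111") == token)    # True
```

Returning the existing token for a repeated value lets a merchant recognize a returning card; some systems instead issue single-use tokens, which trades that convenience for less linkability.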

How End-to-End Encryption Functions

End-to-end encryption (E2EE) works by encrypting data on the sender's device and decrypting it only on the recipient's device, so intermediaries such as servers or service providers never see the plaintext. The keys are held only by the communicating parties: asymmetric algorithms such as RSA or elliptic-curve cryptography are typically used to exchange or agree on a shared key, and efficient symmetric encryption then protects the messages themselves. Because encryption and decryption happen only at the endpoints, E2EE protects the confidentiality of data in transit and, when authenticated encryption is used, its integrity. Tokenization differs here: it replaces sensitive data with surrogate tokens, but the original data remains accessible to authorized systems inside the secure environment that holds the token vault.
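A minimal sketch of the hybrid pattern described above (key agreement, then symmetric encryption), in Python using only the standard library. Assumptions: it swaps in finite-field Diffie-Hellman with deliberately tiny toy parameters (the Mersenne prime 2**127 - 1, base 3) instead of RSA or elliptic curves, and an HMAC-SHA256 keystream with encrypt-then-MAC instead of a standard cipher such as AES-GCM or ChaCha20-Poly1305. All function names are hypothetical. It illustrates the flow only and is not secure for real use: the parameters are far too small, and an unauthenticated key exchange is open to man-in-the-middle attacks unless users verify each other's public keys.

```python
import hashlib
import hmac
import secrets

# TOY parameters for illustration only: far too small and unvetted for real use.
P = 2**127 - 1  # Mersenne prime modulus
G = 3           # base


def dh_keypair() -> tuple[int, int]:
    """Return (private, public) for one endpoint; only `public` is ever sent."""
    private = secrets.randbelow(P - 2) + 1
    return private, pow(G, private, P)


def derive_keys(private: int, peer_public: int) -> tuple[bytes, bytes]:
    """Both endpoints compute the same shared secret, then split it into two keys."""
    shared = pow(peer_public, private, P).to_bytes(16, "big")
    enc_key = hashlib.sha256(b"enc" + shared).digest()
    mac_key = hashlib.sha256(b"mac" + shared).digest()
    return enc_key, mac_key


def _keystream(key: bytes, nonce: bytes, length: int) -> bytes:
    # HMAC-SHA256 in counter mode used as a keystream generator
    out = bytearray()
    counter = 0
    while len(out) < length:
        out += hmac.new(key, nonce + counter.to_bytes(8, "big"), hashlib.sha256).digest()
        counter += 1
    return bytes(out[:length])


def encrypt(keys: tuple[bytes, bytes], plaintext: bytes) -> bytes:
    """Encrypt-then-MAC: returns nonce || ciphertext || tag."""
    enc_key, mac_key = keys
    nonce = secrets.token_bytes(16)
    stream = _keystream(enc_key, nonce, len(plaintext))
    ciphertext = bytes(a ^ b for a, b in zip(plaintext, stream))
    tag = hmac.new(mac_key, nonce + ciphertext, hashlib.sha256).digest()
    return nonce + ciphertext + tag


def decrypt(keys: tuple[bytes, bytes], message: bytes) -> bytes:
    """Verify the tag first; reject anything modified in transit."""
    enc_key, mac_key = keys
    if len(message) < 48:
        raise ValueError("message too short")
    nonce, ciphertext, tag = message[:16], message[16:-32], message[-32:]
    expected = hmac.new(mac_key, nonce + ciphertext, hashlib.sha256).digest()
    if not hmac.compare_digest(tag, expected):
        raise ValueError("message was modified in transit")
    stream = _keystream(enc_key, nonce, len(ciphertext))
    return bytes(a ^ b for a, b in zip(ciphertext, stream))


if __name__ == "__main__":
    alice_priv, alice_pub = dh_keypair()
    bob_priv, bob_pub = dh_keypair()
    # Only the public values cross the network; a relay server never sees the keys.
    alice_keys = derive_keys(alice_priv, bob_pub)
    bob_keys = derive_keys(bob_priv, alice_pub)
    sealed = encrypt(alice_keys, b"meet at noon")
    print(decrypt(bob_keys, sealed))  # b'meet at noon'
```

The server relaying `sealed` holds neither private value, so it can store and forward the message but cannot read or silently alter it, which is the property that distinguishes E2EE from encryption that terminates at the server.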

Use Cases for Tokenization

Tokenization is widely used in payment processing to replace sensitive card information with non-sensitive tokens, reducing the risk of data breaches. E-commerce platforms use tokenization to keep customer payment details on file without storing the actual account numbers, which enables seamless repeat purchases. It is also applied in healthcare, where replacing personal identifiers with tokens helps organizations protect patient information and meet data privacy requirements such as those of HIPAA.
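For the e-commerce case, it is common for a payment token to keep the card number's format so that existing systems can store it unchanged and a checkout page can still show "card ending in 1111". The sketch below is a minimal illustration built on a hypothetical helper, `format_preserving_token`: it keeps the length and last four digits, fills the rest with random digits, and, as a design choice in this sketch, rejects any candidate that passes the Luhn checksum so the token cannot be mistaken for a valid card number. It only shows the token's shape; the mapping back to the real number would still have to live in a token vault.

```python
import secrets


def luhn_valid(number: str) -> bool:
    """Standard Luhn (mod 10) checksum used by payment card numbers."""
    total = 0
    for i, ch in enumerate(reversed(number)):
        d = int(ch)
        if i % 2 == 1:  # double every second digit, counting from the right
            d *= 2
            if d > 9:
                d -= 9
        total += d
    return total % 10 == 0


def format_preserving_token(pan: str) -> str:
    """Hypothetical helper: same length, last four digits kept, random digits elsewhere.

    Candidates are regenerated until one fails the Luhn check, so the token
    is rejected by validators that expect a real card number.
    """
    digits = pan.replace(" ", "")
    while True:
        body = "".join(secrets.choice("0123456789") for _ in range(len(digits) - 4))
        token = body + digits[-4:]
        if not luhn_valid(token):
            return token


if __name__ == "__main__":
    token = format_preserving_token("4111 1111 1111 1111")
    print(token[-4:])                     # 1111
    print(len(token), luhn_valid(token))  # 16 False
```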

Use Cases for End-to-End Encryption

End-to-end encryption (E2EE) is essential for securing sensitive communications in messaging apps, financial transactions, and healthcare data exchanges by ensuring that only the intended recipients can access the information. Unlike tokenization, which replaces sensitive data with tokens for secure storage and processing, E2EE protects data throughout transmission, making it ideal for real-time, private communication scenarios. Use cases such as WhatsApp messages, secure email services, and confidential telehealth consultations demonstrate how E2EE maintains data confidentiality and integrity against interception or unauthorized access.

Pros and Cons: Tokenization vs End-to-End Encryption

Tokenization replaces sensitive data with unique tokens, reducing breach risk because systems store tokens instead of the actual information; however, it requires secure management of the token vault and can add complexity to systems. End-to-end encryption protects data from sender to recipient, preventing unauthorized access while it travels, but it can add processing overhead and complicates key management. Tokenization excels at limiting the exposure of stored sensitive data, while encryption provides broad data confidentiality but demands robust key protection strategies.

Choosing the Right Solution for Your Business

Tokenization keeps actual sensitive data out of most systems by substituting tokens, while end-to-end encryption protects information from unauthorized access during transmission. Businesses handling payment processing or other compliance-heavy data benefit from tokenization's ability to reduce PCI DSS scope, whereas organizations that need confidential communication favor end-to-end encryption for strong data privacy. The right choice depends on your specific security needs, regulatory environment, and operational workflow, balancing usability and protection.

Tokenization Infographic

libterm.com