An ETL pipeline efficiently extracts data from various sources, transforms it to meet business requirements, and loads it into a target database or data warehouse for analysis. This process ensures data consistency, quality, and accessibility, enabling informed decision-making and streamlined operations. Discover how your organization can leverage ETL pipelines to optimize data workflows by reading the full article.

Table of Comparison

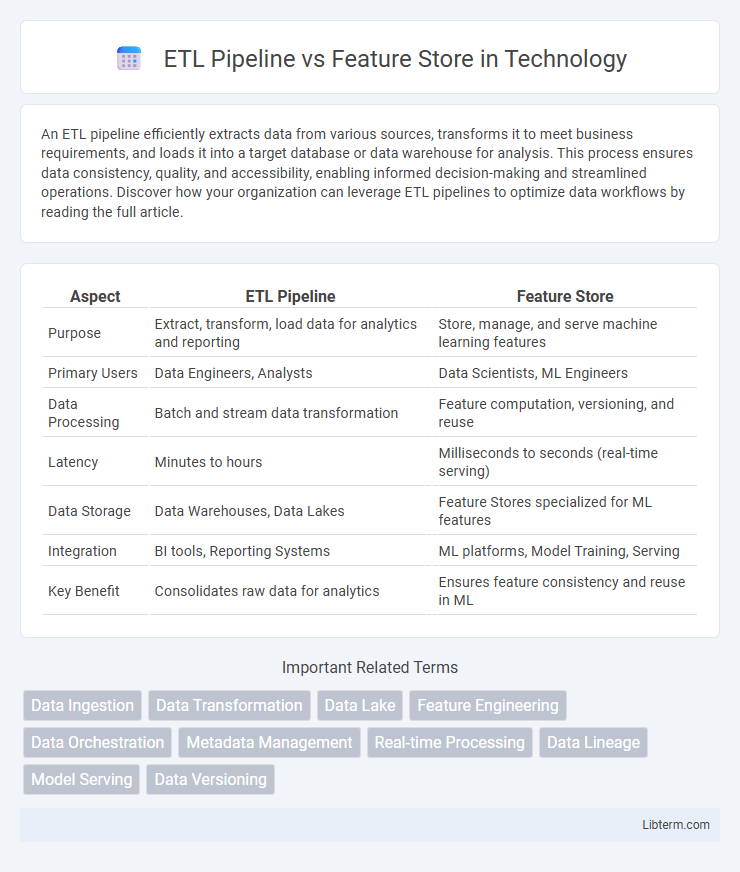

| Aspect | ETL Pipeline | Feature Store |

|---|---|---|

| Purpose | Extract, transform, load data for analytics and reporting | Store, manage, and serve machine learning features |

| Primary Users | Data Engineers, Analysts | Data Scientists, ML Engineers |

| Data Processing | Batch and stream data transformation | Feature computation, versioning, and reuse |

| Latency | Minutes to hours | Milliseconds to seconds (real-time serving) |

| Data Storage | Data Warehouses, Data Lakes | Feature Stores specialized for ML features |

| Integration | BI tools, Reporting Systems | ML platforms, Model Training, Serving |

| Key Benefit | Consolidates raw data for analytics | Ensures feature consistency and reuse in ML |

Introduction to ETL Pipelines and Feature Stores

ETL pipelines automate the Extract, Transform, and Load process, enabling seamless data integration from multiple sources into data warehouses or lakes while ensuring data quality and consistency. Feature stores centralize the management, storage, and sharing of machine learning features, streamlining feature engineering and promoting reuse across models. Both ETL pipelines and feature stores drive efficient data workflows but serve distinct roles in data ingestion versus feature lifecycle management.

Definitions: What Is an ETL Pipeline?

An ETL pipeline is a data processing framework that Extracts raw data from various sources, Transforms it into a suitable format by cleansing and aggregating, and Loads it into a target system such as a data warehouse or database. It enables organizations to consolidate disparate data streams for analytics, reporting, and machine learning workflows. Unlike feature stores, which focus on storing reusable, precomputed features for machine learning models, ETL pipelines primarily address data integration and preparation across multiple domains.

Definitions: What Is a Feature Store?

A feature store is a centralized repository designed to efficiently store, manage, and serve machine learning features for training and inference. Unlike ETL pipelines, which primarily extract, transform, and load raw data, a feature store focuses on creating reusable, versioned, and consistent feature sets that improve model accuracy and deployment speed. Feature stores enable data scientists and engineers to avoid feature duplication while ensuring real-time and batch feature availability across the entire ML lifecycle.

Core Components of ETL Pipelines

ETL pipelines comprise core components such as data extraction, transformation, and loading, which enable the systematic collection, cleaning, and integration of raw data from multiple sources into a target data warehouse. These pipelines emphasize data preprocessing tasks like filtering, aggregating, and schema mapping essential for preparing datasets for analytics and machine learning applications. In contrast to feature stores, ETL pipelines primarily focus on bulk data processing and batch workflows rather than real-time feature retrieval and versioning capabilities.

Core Components of Feature Stores

Feature stores centralize feature management through components such as the feature registry, which documents feature definitions and metadata, and the online and offline stores that enable real-time and batch access to features, respectively. They also include a transformation engine to ensure consistent feature engineering across training and inference, and monitoring systems to track feature quality and drift, enhancing model reliability. In contrast, ETL pipelines primarily focus on extracting, transforming, and loading raw data without specialized mechanisms for feature reuse, consistency, or serving.

Key Similarities Between ETL Pipelines and Feature Stores

ETL pipelines and feature stores both facilitate the transformation and management of data for advanced analytics and machine learning models by ensuring data cleanliness, consistency, and availability. They automate the process of extracting raw data, applying necessary transformations, and loading the resulting datasets into storage systems optimized for analysis or real-time use. Both systems emphasize efficient data versioning, lineage tracking, and scalability to support continuous model training and deployment workflows.

Major Differences: ETL Pipeline vs Feature Store

ETL pipelines primarily extract, transform, and load raw data from disparate sources into data warehouses for analytics and reporting, focusing on bulk data processing workflows. Feature Stores centralize and manage engineered features for machine learning models, ensuring consistent feature calculation, storage, and real-time retrieval across training and serving environments. The major difference lies in ETL pipelines handling general data integration tasks, while Feature Stores specialize in optimized feature management to accelerate ML model development and deployment.

Use Cases: When to Use ETL Pipelines

ETL pipelines are essential for data integration tasks requiring extraction, transformation, and loading of raw data into centralized data warehouses for comprehensive analytics and reporting. They excel in use cases involving large-scale batch processing, historical data consolidation, and preparing data for traditional business intelligence workloads. ETL pipelines are ideal when the goal is to create clean, structured datasets from diverse sources for broad organizational access rather than serving real-time machine learning feature retrieval.

Use Cases: When to Use Feature Stores

Feature stores excel in machine learning workflows by centralizing, storing, and serving curated features for model training and real-time inference, ensuring consistency and reducing redundancy across teams. Unlike traditional ETL pipelines designed primarily for data extraction, transformation, and loading into data warehouses, feature stores streamline feature management, versioning, and online access, supporting rapid experimentation and deployment. Use feature stores when the priority is to maintain feature reproducibility, enable feature sharing across multiple models, and support low-latency feature retrieval for scalable ML applications.

Choosing the Right Solution: Factors to Consider

Choosing the right solution between an ETL pipeline and a feature store depends on the specific needs of data processing and machine learning workflows. ETL pipelines excel at transforming and loading large volumes of raw data into data warehouses, optimizing data quality and consistency for analytics. Feature stores are specialized for managing, serving, and reusing machine learning features, improving model accuracy and development speed by ensuring feature consistency across training and inference stages.

ETL Pipeline Infographic

libterm.com

libterm.com