Grid computing harnesses multiple interconnected computers to perform complex tasks that would be impractical on a single machine. By distributing workloads across many systems, it enables large-scale data processing and resource sharing, which can improve performance and reduce costs. The rest of this article compares grid computing with fog computing.

Table of Comparison

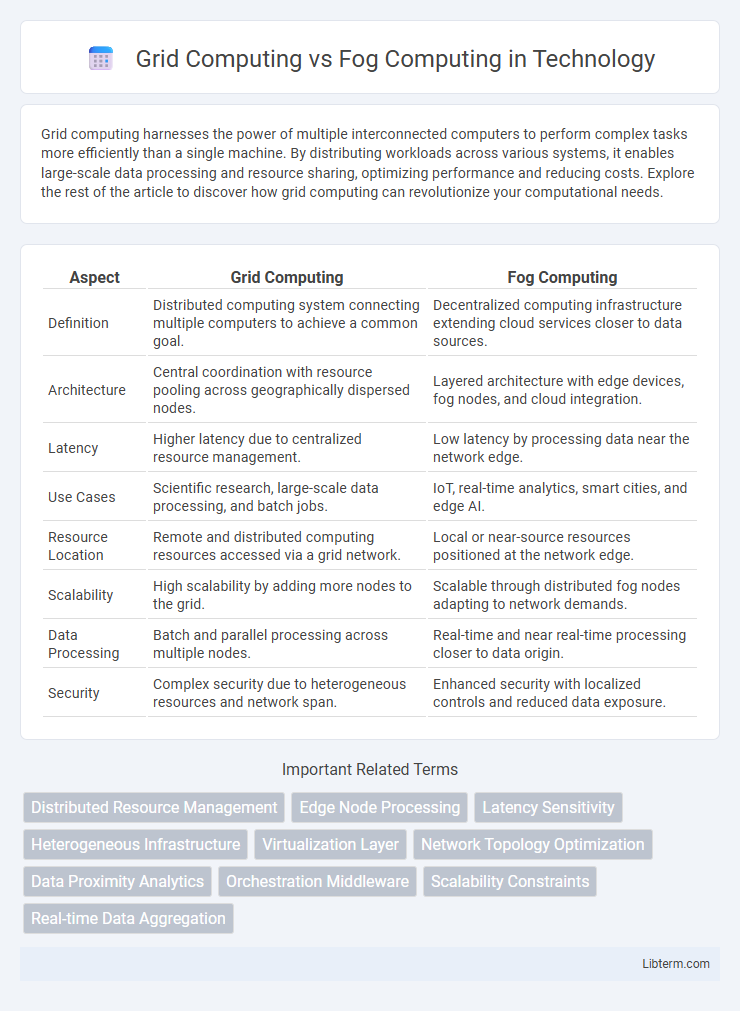

| Aspect | Grid Computing | Fog Computing |
|---|---|---|
| Definition | Distributed computing system connecting multiple computers to achieve a common goal. | Decentralized computing infrastructure extending cloud services closer to data sources. |
| Architecture | Central coordination with resource pooling across geographically dispersed nodes. | Layered architecture with edge devices, fog nodes, and cloud integration. |
| Latency | Higher latency due to centralized resource management. | Low latency by processing data near the network edge. |
| Use Cases | Scientific research, large-scale data processing, and batch jobs. | IoT, real-time analytics, smart cities, and edge AI. |
| Resource Location | Remote and distributed computing resources accessed via a grid network. | Local or near-source resources positioned at the network edge. |
| Scalability | High scalability by adding more nodes to the grid. | Scalable through distributed fog nodes adapting to network demands. |
| Data Processing | Batch and parallel processing across multiple nodes. | Real-time and near real-time processing closer to data origin. |
| Security | Complex security due to heterogeneous resources and network span. | Enhanced security with localized controls and reduced data exposure. |

Introduction to Grid Computing and Fog Computing

Grid computing leverages distributed computing resources across multiple locations to process large-scale tasks by sharing processing power, storage, and data, optimizing resource utilization and scalability. Fog computing extends cloud capabilities by placing computing, storage, and networking services closer to the data sources or end devices, thus reducing latency and improving real-time processing for Internet of Things (IoT) applications. Both paradigms enhance computational efficiency but serve different needs: grid computing emphasizes resource pooling for complex computations, while fog computing prioritizes low-latency processing near the edge of the network.

Key Concepts: Understanding Grid Computing

Grid computing harnesses a distributed network of heterogeneous resources to perform complex computational tasks by dividing workloads across multiple interconnected nodes. It emphasizes resource sharing, parallel processing, and scalability, which lets it handle large-scale scientific and engineering applications efficiently. Unlike fog computing, which processes data close to the network edge, grid computing relies on centralized resource management to coordinate processing power over wide-area networks.
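The idea of dividing a workload across nodes and combining the partial results can be sketched in a few lines of Python. This is a minimal illustration, not a real grid deployment: threads in one process stand in for grid nodes, and the names `split_into_chunks`, `node_task`, and `run_on_grid` are hypothetical.

```python
from concurrent.futures import ThreadPoolExecutor


def split_into_chunks(items, n_chunks):
    """Split a list into n_chunks contiguous pieces of near-equal size."""
    size, extra = divmod(len(items), n_chunks)
    chunks, start = [], 0
    for i in range(n_chunks):
        # The first `extra` chunks each take one additional item.
        end = start + size + (1 if i < extra else 0)
        chunks.append(items[start:end])
        start = end
    return chunks


def node_task(chunk):
    """Work done by one hypothetical grid node: sum of squares of its chunk."""
    return sum(x * x for x in chunk)


def run_on_grid(items, n_nodes=4):
    """Scatter chunks to worker 'nodes', then gather and combine the partial results."""
    chunks = split_into_chunks(items, n_nodes)
    # Assumption: threads stand in for remote nodes reached over a network.
    with ThreadPoolExecutor(max_workers=n_nodes) as pool:
        partials = list(pool.map(node_task, chunks))
    return sum(partials)


if __name__ == "__main__":
    data = list(range(1, 101))
    print(run_on_grid(data))  # sum of squares of 1..100
```

In a real grid the chunks would travel over a wide-area network to machines in different organizations, so transfer time and scheduling delays would add to the cost of each chunk.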

Key Concepts: Understanding Fog Computing

Fog computing extends cloud capabilities by bringing computation, storage, and networking closer to data sources, reducing latency and enhancing real-time processing for IoT devices. Unlike grid computing, which relies on a distributed network of heterogeneous resources to perform large-scale tasks, fog computing targets localized data handling on edge nodes such as gateways and routers. This proximity supports efficient data filtering, analytics, and decision-making at the network edge, which is essential for time-sensitive applications.
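Local filtering and summarizing at a fog node might look like the following sketch. It assumes a gateway that receives a batch of numeric sensor readings; the function name `fog_node_process`, the threshold, and the sample values are all made up for illustration.

```python
from statistics import mean


def fog_node_process(readings, threshold):
    """Filter and summarize raw readings locally at a hypothetical fog node.

    Returns a compact summary intended for the cloud, plus the readings
    that exceeded the threshold and need an immediate local response.
    """
    alerts = [r for r in readings if r > threshold]
    summary = {
        "count": len(readings),
        "mean": mean(readings) if readings else None,
        "max": max(readings) if readings else None,
        "alerts": len(alerts),
    }
    return summary, alerts


if __name__ == "__main__":
    temps = [21.0, 21.5, 22.0, 35.0, 21.5]  # made-up sensor values
    summary, alerts = fog_node_process(temps, threshold=30.0)
    print(summary)  # small record forwarded upstream
    print(alerts)   # handled locally, without a round trip to the cloud
```

Only the summary needs to leave the local network, which is where the bandwidth and latency savings described above come from.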

Architecture Comparison: Grid vs Fog Computing

Grid computing architecture relies on a centralized resource management system that integrates distributed computing resources across multiple locations to perform large-scale tasks. Fog computing architecture decentralizes processing by deploying nodes closer to the data source, enabling real-time data processing and low-latency communication at the network edge. Where grid computing coordinates its pooled resources centrally, fog computing uses a multi-layered structure of edge devices, fog nodes, and the cloud, which supports IoT devices with better scalability, mobility, and location-awareness.
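To make the centralized resource management described for grids more concrete, here is a small sketch of a central scheduler that assigns each job to the least-loaded node. It is one simple greedy policy chosen for illustration, not how any particular grid middleware works; the `schedule` function, the job costs, and the node names are assumptions.

```python
import heapq


def schedule(jobs, node_names):
    """Greedy central scheduler: give each job to the currently least-loaded node.

    jobs is a list of (job_id, estimated_cost) pairs. Returns the job
    assignment per node and the total load per node.
    """
    heap = [(0.0, name) for name in node_names]  # (current load, node name)
    heapq.heapify(heap)
    assignment = {name: [] for name in node_names}
    # Place the largest jobs first so the loads end up more even.
    for job_id, cost in sorted(jobs, key=lambda j: -j[1]):
        load, name = heapq.heappop(heap)
        assignment[name].append(job_id)
        heapq.heappush(heap, (load + cost, name))
    loads = {name: load for load, name in heap}
    return assignment, loads


if __name__ == "__main__":
    jobs = [("a", 4), ("b", 3), ("c", 2), ("d", 1)]  # hypothetical job costs
    assignment, loads = schedule(jobs, ["n1", "n2"])
    print(assignment)
    print(loads)
```

Every placement decision passes through one component here, which is the property that separates this model from fog deployments where nodes decide locally.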

Performance and Scalability Differences

Grid computing offers high performance through resource sharing across distributed networks, enabling parallel processing of large tasks, but scalability can be hindered by network latency and centralized management constraints. Fog computing enhances real-time performance by processing data closer to the source at the network edge, reducing latency significantly and supporting dynamic scaling to accommodate fluctuating workloads in IoT environments. Scalability in fog computing benefits from decentralized architecture, allowing seamless integration of edge devices without overwhelming central servers, unlike grid computing where resource allocation depends heavily on fixed infrastructure.

Latency and Real-Time Processing Capabilities

Grid computing distributes large-scale tasks across geographically dispersed resources, often resulting in higher latency due to centralized data processing and long-distance communication. Fog computing processes data closer to the edge, significantly reducing latency and enhancing real-time processing capabilities by localizing computation near data sources. This proximity enables fog computing to handle time-sensitive applications more efficiently compared to grid computing's traditional batch-oriented approach.
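The effect of distance on latency can be estimated with a rough model: round-trip propagation time plus processing time plus any queueing delay. The sketch below assumes signals travel at about 200 km per millisecond in optical fiber (roughly two thirds of the speed of light in vacuum); the distances, processing times, and queue delay are made-up values, and `round_trip_ms` is a hypothetical helper.

```python
# Approximate signal speed in optical fiber, in kilometers per millisecond.
SPEED_IN_FIBER_KM_PER_MS = 200.0


def round_trip_ms(distance_km, processing_ms, queue_ms=0.0):
    """Estimate response time as propagation (both directions) + processing + queueing."""
    propagation_ms = 2 * distance_km / SPEED_IN_FIBER_KM_PER_MS
    return propagation_ms + processing_ms + queue_ms


if __name__ == "__main__":
    # Assumed scenario: a fog node 1 km away versus a grid site 3000 km away
    # whose batch scheduler adds 50 ms of queueing before the job starts.
    fog = round_trip_ms(distance_km=1, processing_ms=5)
    grid = round_trip_ms(distance_km=3000, processing_ms=5, queue_ms=50)
    print(f"fog:  {fog:.2f} ms")
    print(f"grid: {grid:.2f} ms")
```

The model ignores routing hops, congestion, and serialization time, all of which widen the gap further in practice.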

Security and Privacy Considerations

Grid computing distributes large-scale tasks across multiple interconnected nodes, and the heterogeneity and geographic dispersion of those resources increase exposure to unauthorized access and data breaches. Fog computing processes data closer to the source at the network edge, which minimizes how much sensitive information is exposed during transmission. Both paradigms require robust authentication, encryption, and access control mechanisms. Fog computing can improve data confidentiality and integrity through localized processing and real-time threat detection, although each fog node must itself be secured.

Practical Applications and Use Cases

Grid computing excels in large-scale scientific research projects like climate modeling and genomic analysis by harnessing distributed computing resources across multiple locations to process vast datasets efficiently. Fog computing is ideal for real-time IoT applications such as smart cities, autonomous vehicles, and industrial automation, where low latency and localized data processing at the network edge are critical. Both paradigms optimize resource utilization, but fog computing's proximity to data sources enhances responsiveness for time-sensitive tasks, while grid computing supports extensive computational workloads over a wide geographic area.

Advantages and Limitations: Grid vs Fog

Grid computing offers high computational power by pooling distributed resources, ideal for large-scale scientific tasks, but faces challenges with latency and real-time processing. Fog computing excels in reducing latency by processing data closer to the source, enabling real-time analytics and IoT integration, yet it may have limited scalability and resource availability compared to grid computing. Grid's centralized coordination contrasts with fog's decentralized approach, impacting flexibility, security management, and resource allocation efficiency.
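The trade-off above can be expressed as a simple placement rule: send a task to the fog when it is small enough to fit there, and to the grid when it needs more compute than a fog node offers and can tolerate the delay. The sketch below is one possible rule under stated assumptions; `choose_tier` and both default thresholds are hypothetical and would need tuning for a real deployment.

```python
def choose_tier(deadline_ms, cpu_seconds,
                fog_capacity_cpu_seconds=2.0, grid_min_latency_ms=100.0):
    """Pick 'fog', 'grid', or 'infeasible' for a task.

    Assumptions (illustrative only): a fog node can run tasks needing up to
    fog_capacity_cpu_seconds of CPU time, and the grid cannot respond faster
    than grid_min_latency_ms.
    """
    fits_on_fog = cpu_seconds <= fog_capacity_cpu_seconds
    if fits_on_fog:
        # Small tasks stay near the data source regardless of deadline.
        return "fog"
    if deadline_ms >= grid_min_latency_ms:
        # Too large for the fog, but the deadline tolerates grid latency.
        return "grid"
    # Too large for the fog and too urgent for the grid.
    return "infeasible"


if __name__ == "__main__":
    print(choose_tier(deadline_ms=20, cpu_seconds=0.5))       # small, urgent task
    print(choose_tier(deadline_ms=60000, cpu_seconds=3600))   # large batch job
    print(choose_tier(deadline_ms=20, cpu_seconds=3600))      # large and urgent
```

The third case shows the gap neither paradigm covers on its own: a task that is both compute-heavy and time-critical.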

Future Trends in Grid and Fog Computing

Future trends in grid computing emphasize enhanced resource sharing through decentralized cloud integration and improved virtualization technologies, enabling more efficient distributed processing for large-scale scientific and industrial applications. Fog computing is advancing with edge AI deployment, real-time data analytics, and increased security protocols to support the growing Internet of Things (IoT) ecosystem and latency-sensitive services. Converging trends indicate a hybrid approach leveraging grid computing's extensive resource pools and fog computing's proximity-driven low-latency processing for smarter, scalable, and more resilient distributed systems.


libterm.com
