Sparse data refers to datasets with a high proportion of missing, zero, or irrelevant values, posing unique challenges for analysis and modeling. Effective handling of sparse data requires specialized techniques such as dimensionality reduction, imputation, or advanced algorithms designed to extract meaningful patterns despite the limited information. Discover how mastering sparse data can improve your data-driven decisions by exploring the rest of this article.

Table of Comparison

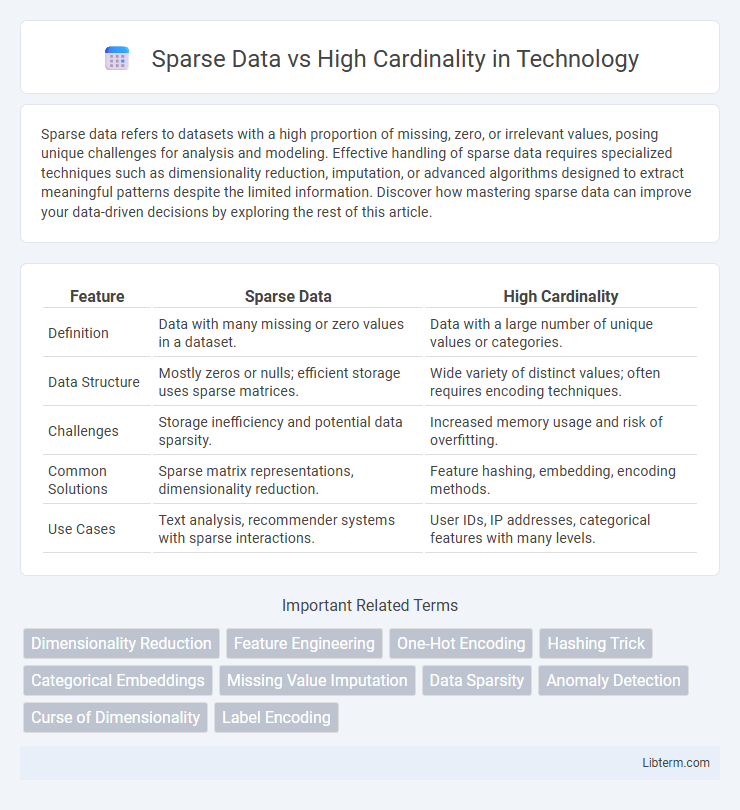

| Feature | Sparse Data | High Cardinality |

|---|---|---|

| Definition | Data with many missing or zero values in a dataset. | Data with a large number of unique values or categories. |

| Data Structure | Mostly zeros or nulls; efficient storage uses sparse matrices. | Wide variety of distinct values; often requires encoding techniques. |

| Challenges | Storage inefficiency and potential data sparsity. | Increased memory usage and risk of overfitting. |

| Common Solutions | Sparse matrix representations, dimensionality reduction. | Feature hashing, embedding, encoding methods. |

| Use Cases | Text analysis, recommender systems with sparse interactions. | User IDs, IP addresses, categorical features with many levels. |

Understanding Sparse Data: Definition and Examples

Sparse data refers to datasets where a large proportion of elements are zero or missing, resulting in many empty or insignificant values that can hinder analysis and modeling. Common examples include text data represented by word occurrence matrices, user-item interaction logs in recommendation systems, and sensor readings with intermittent activity. Understanding sparse data is crucial for selecting appropriate storage methods and algorithms, such as sparse matrix representations or dimensionality reduction techniques, to efficiently process and extract meaningful insights.

Defining High Cardinality: Key Concepts

High cardinality refers to attributes or columns in a dataset that contain a large number of unique values relative to the total number of observations, often posing challenges for data processing and analysis. This characteristic is commonly found in features such as user IDs, transaction IDs, or timestamps, where each value is distinct and rarely repeated. Managing high cardinality requires advanced techniques like encoding methods or dimensionality reduction to improve model performance and computational efficiency.

Core Differences Between Sparse Data and High Cardinality

Sparse data refers to datasets with a large number of missing or zero values, while high cardinality denotes features with a vast number of unique values or categories. The core difference lies in sparsity measuring the distribution density of data points, whereas cardinality concerns the diversity of categorical values within a feature. Handling sparse data often involves techniques like dimensionality reduction, and managing high cardinality requires strategies such as encoding or feature hashing to improve model performance.

Real-World Applications of Sparse Data

Sparse data frequently appears in real-world applications such as recommendation systems, natural language processing, and bioinformatics, where datasets contain many zero or missing values. In these contexts, algorithms like matrix factorization and sparsity-aware models efficiently handle sparse input to improve prediction accuracy and computational performance. Addressing sparseness enables better representation of underlying patterns in user-item interactions, textual data, or genetic sequences, facilitating scalable and robust analytics.

Use Cases Where High Cardinality Matters

High cardinality plays a crucial role in use cases such as fraud detection, recommendation systems, and customer segmentation where unique identifiers or attributes number in the millions or billions. In these scenarios, models must efficiently handle vast, discrete values without losing predictive power or encountering scalability issues. Managing high cardinality effectively enhances precision in differentiation and improves the overall performance of machine learning algorithms in complex, data-rich environments.

Challenges in Handling Sparse Data

Sparse data presents significant challenges such as increased computational complexity and reduced model accuracy due to the abundance of missing or zero values. High dimensionality in sparse datasets often leads to overfitting and difficulties in feature extraction, complicating machine learning model training and interpretation. Efficient storage and processing techniques like sparse matrix representations and dimensionality reduction are essential to mitigate these issues and enhance algorithm performance.

Impact of High Cardinality on Data Processing

High cardinality significantly impacts data processing by increasing computational complexity and memory usage, leading to slower query performance and higher storage costs. Sparse data combined with high cardinality can cause inefficiencies in indexing and hinder the effectiveness of machine learning algorithms due to the proliferation of unique values. Efficient handling of high cardinality is crucial for scalable database management and accurate predictive modeling.

Techniques for Managing Sparse Data

Techniques for managing sparse data include dimensionality reduction methods such as Principal Component Analysis (PCA) and feature selection to eliminate irrelevant variables. Matrix factorization approaches like Singular Value Decomposition (SVD) and embedding methods transform sparse input into dense representations that improve model performance. Regularization techniques such as L1 and L2 penalties prevent overfitting by shrinking less important feature weights in sparse datasets.

Best Practices for Handling High Cardinality

High cardinality in datasets, characterized by numerous unique values within a feature, requires careful encoding strategies like target encoding or feature hashing to reduce dimensionality without losing predictive power. Leveraging techniques such as dimensionality reduction, clustering similar categories, or combining rare levels into an "other" group helps prevent overfitting and improves model interpretability. Efficient handling of high cardinality features enhances performance in machine learning tasks, especially in large-scale data environments.

Choosing the Right Approach: Sparse Data vs High Cardinality

Choosing the right approach depends on understanding the key differences between sparse data and high cardinality challenges in datasets. Sparse data involves a large number of zero or null values, requiring techniques like dimensionality reduction or specialized matrix factorization to enhance model efficiency. High cardinality features, with numerous unique categorical values, benefit from encoding methods such as target encoding or feature hashing to prevent overfitting and improve predictive performance.

Sparse Data Infographic

libterm.com

libterm.com