Caching stores frequently accessed data temporarily to speed up retrieval and improve system performance across websites and applications. Effective caching strategies reduce server load and latency, enhancing user experience and resource efficiency. The rest of this article compares caching with API throttling and explains when to use each.

Table of Comparison

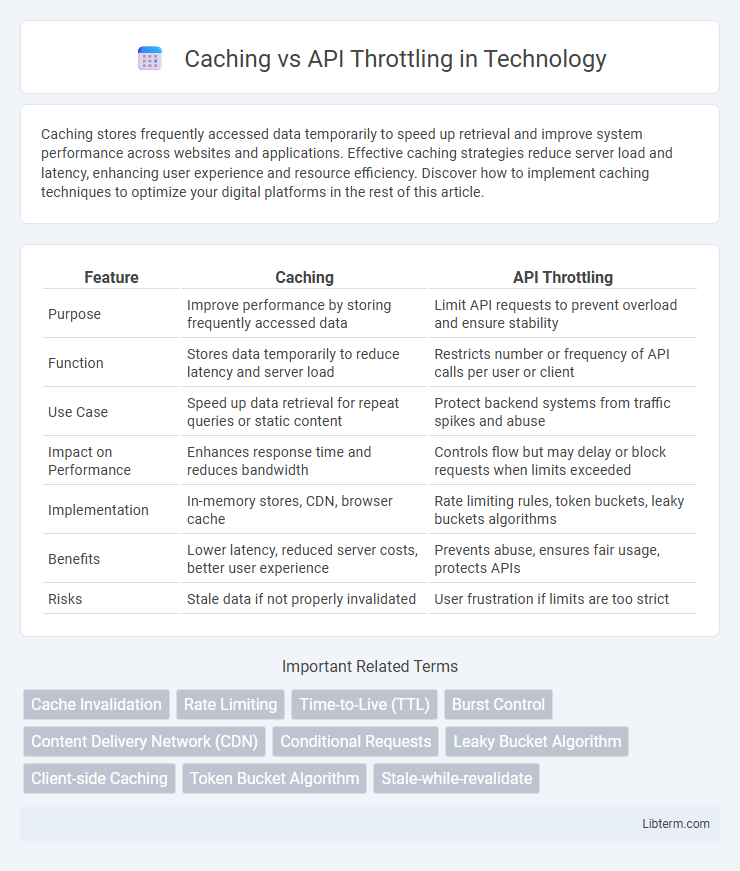

| Feature | Caching | API Throttling |
|---|---|---|
| Purpose | Improve performance by storing frequently accessed data | Limit API requests to prevent overload and ensure stability |
| Function | Stores data temporarily to reduce latency and server load | Restricts the number or frequency of API calls per user or client |
| Use Case | Speed up data retrieval for repeat queries or static content | Protect backend systems from traffic spikes and abuse |
| Impact on Performance | Improves response time and reduces bandwidth | Controls flow but may delay or block requests when limits are exceeded |
| Implementation | In-memory stores, CDN, browser cache | Rate-limiting rules, token bucket and leaky bucket algorithms |
| Benefits | Lower latency, reduced server costs, better user experience | Prevents abuse, ensures fair usage, protects APIs |
| Risks | Stale data if not properly invalidated | User frustration if limits are too strict |

Understanding Caching: Key Concepts

Caching stores frequently accessed data temporarily to reduce server load and improve response time by serving repeated requests from a faster storage layer. Key concepts include cache expiration (how long an entry stays valid), invalidation (removing or refreshing an entry when the underlying data changes), and cache hit ratio (the share of requests served from the cache). Expiration and invalidation govern how fresh the cached data is, while the hit ratio measures how effective the cache is. Proper caching strategies improve API performance by minimizing redundant processing and network latency, whereas API throttling limits request rates to prevent overload.
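
The three concepts can be illustrated with a minimal in-memory sketch. The class name `TTLCache`, its method names, and the injectable `clock` parameter are assumptions made for this example; they do not correspond to any particular library.

```python
import time
from typing import Any, Callable, Dict, Optional, Tuple


class TTLCache:
    """Illustrative in-memory cache with expiration, invalidation, and hit counters."""

    def __init__(self, ttl_seconds: float, clock: Callable[[], float] = time.monotonic):
        self.ttl = ttl_seconds
        self.clock = clock  # injectable so expiry can be exercised without sleeping
        self._store: Dict[str, Tuple[float, Any]] = {}  # key -> (expires_at, value)
        self.hits = 0
        self.misses = 0

    def get(self, key: str) -> Optional[Any]:
        entry = self._store.get(key)
        if entry is not None:
            expires_at, value = entry
            if self.clock() < expires_at:
                self.hits += 1
                return value
            del self._store[key]  # expiration: the entry outlived its TTL
        self.misses += 1
        return None

    def set(self, key: str, value: Any) -> None:
        self._store[key] = (self.clock() + self.ttl, value)

    def invalidate(self, key: str) -> None:
        # Invalidation: drop the entry as soon as the source data changes.
        self._store.pop(key, None)

    def hit_ratio(self) -> float:
        total = self.hits + self.misses
        return self.hits / total if total else 0.0
```

A caller would typically try `get` first, fall back to the backend on `None`, and then `set` the result. A low `hit_ratio()` suggests the TTL is too short or the data is rarely reused.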

What is API Throttling? An Overview

API throttling is a technique used to control the number of API requests a client can make within a specified time window, preventing server overload and ensuring fair resource distribution. It helps maintain API performance and availability by limiting excessive or abusive traffic, typically through rate limits defined by the API provider. Unlike caching, which stores responses to reduce request frequency, throttling manages real-time request flow to protect backend systems.
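
A fixed-window counter is one simple way to enforce "N requests per client per time window". The sketch below is an assumption-laden illustration rather than a production limiter: `FixedWindowLimiter`, `allow`, and the `clock` parameter are hypothetical names, and state is kept in process memory only.

```python
import time
from typing import Callable, Dict, Tuple


class FixedWindowLimiter:
    """Allow at most `limit` requests per client in each fixed time window."""

    def __init__(self, limit: int, window_seconds: float,
                 clock: Callable[[], float] = time.monotonic):
        self.limit = limit
        self.window_seconds = window_seconds
        self.clock = clock  # injectable for deterministic testing
        self._counters: Dict[str, Tuple[int, int]] = {}  # client -> (window index, count)

    def allow(self, client_id: str) -> bool:
        window = int(self.clock() // self.window_seconds)
        seen_window, count = self._counters.get(client_id, (window, 0))
        if seen_window != window:
            count = 0  # a new window has started, so the counter resets
        if count >= self.limit:
            return False  # over the limit: the caller should reject or delay
        self._counters[client_id] = (window, count + 1)
        return True
```

An HTTP API would usually translate a `False` result into a 429 Too Many Requests response. Fixed windows permit short bursts at window boundaries, which is one reason sliding-window and bucket-based schemes are also used.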

Use Cases: When to Use Caching

Caching is highly effective for optimizing performance in scenarios where data is frequently requested but changes infrequently, such as content delivery networks (CDNs), e-commerce product pages, or user session data. It reduces server load, decreases latency, and improves response times for read-heavy operations by storing and reusing data locally or in-memory. Use caching in applications requiring fast access to static or semi-static data, like image serving, configuration settings, and database query results.
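
For read-heavy lookups such as configuration settings, Python's standard-library `functools.lru_cache` is often enough. In this sketch, `load_setting` is a hypothetical stand-in for a slow database or configuration-service call.

```python
from functools import lru_cache


@lru_cache(maxsize=256)
def load_setting(name: str) -> str:
    """Hypothetical slow lookup; the body runs only on a cache miss."""
    # A real implementation would query a database or config service here.
    return f"value-for-{name}"
```

Repeated calls with the same argument return the memoized result, `load_setting.cache_info()` reports hits and misses, and `load_setting.cache_clear()` serves as a coarse invalidation step when settings change.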

Use Cases: When API Throttling is Essential

API throttling is essential in use cases where controlling the rate of incoming requests prevents server overload and ensures fair resource distribution among users. It is crucial for maintaining performance and reliability in high-traffic applications such as social media platforms, financial services, and public APIs with rate limits imposed by third parties. Throttling also protects backend systems from abuse and mitigates denial-of-service attacks, preserving service availability and stability.

Benefits of Implementing Caching

Implementing caching improves application performance by storing frequently accessed data closer to the user, reducing server load and decreasing latency. It enhances scalability by minimizing repetitive API calls, leading to cost savings on bandwidth and computational resources. Caching can also increase availability by serving previously cached (possibly stale) data during backend outages, ensuring a smoother user experience.
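
The availability benefit can be sketched as a "serve the last good value on error" pattern. The function name `fetch_with_stale_fallback` and the choice of `ConnectionError` as the outage signal are assumptions for illustration; a real system would also bound how old a fallback value may be.

```python
from typing import Any, Callable, Dict


def fetch_with_stale_fallback(key: str,
                              fetch: Callable[[str], Any],
                              last_good: Dict[str, Any]) -> Any:
    """Return fresh data when the backend responds, else the last cached value."""
    try:
        value = fetch(key)
    except ConnectionError:
        if key in last_good:
            return last_good[key]  # possibly stale, but better than an error page
        raise  # nothing cached for this key, so the outage is visible
    last_good[key] = value  # remember the latest successful response
    return value
```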

Advantages of API Throttling

API throttling protects application performance by controlling the rate of incoming requests, preventing server overload and maintaining stability. It improves security by mitigating denial-of-service (DoS) attacks and abuse, ensuring fair resource allocation among users. This mechanism supports efficient resource management, keeps latency stable for requests that stay within the limit, and provides predictable API behavior under high traffic conditions.
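
The token bucket algorithm is a common way to get this predictable behavior while still allowing short bursts. The following is a minimal single-process sketch; the class name, parameter names, and injectable `clock` are assumptions for this example.

```python
import time
from typing import Callable


class TokenBucket:
    """Token bucket: bursts up to `capacity`, sustained rate of `refill_per_second`."""

    def __init__(self, capacity: float, refill_per_second: float,
                 clock: Callable[[], float] = time.monotonic):
        self.capacity = capacity
        self.refill_per_second = refill_per_second
        self.clock = clock  # injectable for deterministic testing
        self.tokens = capacity  # start full so an initial burst is allowed
        self.last_refill = clock()

    def allow(self, cost: float = 1.0) -> bool:
        now = self.clock()
        elapsed = now - self.last_refill
        # Add tokens for the elapsed time, never exceeding the bucket capacity.
        self.tokens = min(self.capacity, self.tokens + elapsed * self.refill_per_second)
        self.last_refill = now
        if self.tokens >= cost:
            self.tokens -= cost
            return True
        return False
```

With `capacity=2` and `refill_per_second=1`, a client can send two requests immediately and then roughly one per second afterwards.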

Core Differences: Caching vs API Throttling

Caching stores frequently accessed data temporarily to reduce server load and decrease response times, improving application performance. API throttling limits the number of API requests within a specified time frame to prevent server overload and ensure fair usage among consumers. While caching optimizes data retrieval efficiency, API throttling focuses on controlling traffic to maintain system stability and prevent abuse.

Performance Impact: Caching vs Throttling

Caching significantly improves performance by storing frequently accessed data locally, reducing server load and response times. API throttling, however, limits the number of requests to avoid overloading the server but can introduce delays and reduce throughput under high demand. Combining caching with throttling balances performance gains with controlled resource usage, ensuring efficient API responsiveness.
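
One way to combine the two is to check the cache first and apply the rate limit only to requests that actually reach the backend. This is a design assumption of the sketch, since many real APIs count every request against the quota. All names here (`make_handler`, `RateLimited`, `fetch`) are hypothetical.

```python
import time
from typing import Any, Callable, Dict, Tuple


class RateLimited(Exception):
    """Raised when a client exceeds its backend request quota."""


def make_handler(fetch: Callable[[str], Any], ttl_seconds: float, limit: int,
                 window_seconds: float,
                 clock: Callable[[], float] = time.monotonic) -> Callable[[str, str], Any]:
    cache: Dict[str, Tuple[float, Any]] = {}    # key -> (expires_at, value)
    counters: Dict[str, Tuple[int, int]] = {}   # client -> (window index, count)

    def handle(client_id: str, key: str) -> Any:
        now = clock()
        entry = cache.get(key)
        if entry is not None and now < entry[0]:
            return entry[1]  # cache hit: no backend call, no quota consumed
        window = int(now // window_seconds)
        seen_window, count = counters.get(client_id, (window, 0))
        if seen_window != window:
            count = 0  # new window, reset this client's counter
        if count >= limit:
            raise RateLimited(f"client {client_id} exceeded {limit} backend calls")
        counters[client_id] = (window, count + 1)
        value = fetch(key)  # cache miss within quota: go to the backend
        cache[key] = (now + ttl_seconds, value)
        return value

    return handle
```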

Security Implications of Both Strategies

Caching can reduce exposure to attacks such as DDoS by absorbing repeated requests before they reach the backend, although it is a performance measure rather than a security control, and cached responses that contain sensitive data must themselves be protected. API throttling enforces rate limits to prevent abuse and brute-force attacks, ensuring fair usage and protecting the system from overload. Both strategies complement each other by balancing performance optimization with safeguarding sensitive data and maintaining service availability.

Choosing the Right Approach for Your Application

Choosing between caching and API throttling depends on your application's specific performance needs and user experience goals. Caching efficiently reduces server load and latency by storing frequently accessed data, ideal for static or infrequently changing content, while API throttling controls the rate of client requests to protect backend resources and ensure fair usage. Evaluating factors such as data volatility, request patterns, and scalability requirements guides the decision to implement a strategy that balances responsiveness with system stability.


libterm.com
