Computational complexity studies the resources required for algorithms to solve problems, focusing on time and space efficiency. Exploring complexity classes like P, NP, and NP-complete helps determine the feasibility of problem-solving in practical scenarios. Discover how understanding computational complexity can optimize Your approach by reading the rest of this article.

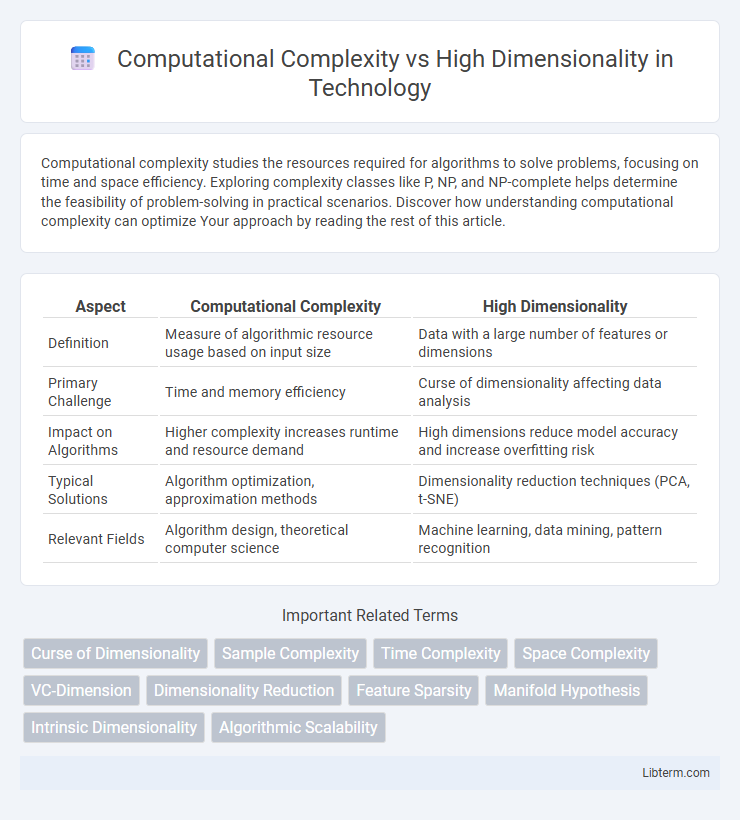

Table of Comparison

| Aspect | Computational Complexity | High Dimensionality |

|---|---|---|

| Definition | Measure of algorithmic resource usage based on input size | Data with a large number of features or dimensions |

| Primary Challenge | Time and memory efficiency | Curse of dimensionality affecting data analysis |

| Impact on Algorithms | Higher complexity increases runtime and resource demand | High dimensions reduce model accuracy and increase overfitting risk |

| Typical Solutions | Algorithm optimization, approximation methods | Dimensionality reduction techniques (PCA, t-SNE) |

| Relevant Fields | Algorithm design, theoretical computer science | Machine learning, data mining, pattern recognition |

Understanding Computational Complexity

Understanding computational complexity involves analyzing the resources required by an algorithm as input size expands, notably time and memory usage. High dimensionality exacerbates computational complexity by increasing the search space exponentially, leading to the "curse of dimensionality" that complicates problem-solving. Optimization techniques and dimensionality reduction methods like PCA are crucial to managing computational complexity in high-dimensional datasets.

Defining High Dimensionality

High dimensionality refers to datasets or feature spaces characterized by a large number of variables or dimensions, often resulting in complex geometric structures that challenge conventional analysis methods. As dimensionality increases, computational complexity grows exponentially, making tasks like optimization, classification, and clustering more resource-intensive and prone to overfitting. Addressing high dimensionality involves dimensionality reduction techniques and efficient algorithms to mitigate the curse of dimensionality while preserving data integrity.

The Interplay Between Complexity and Dimensionality

Computational complexity increases exponentially with high dimensionality due to the curse of dimensionality, where the number of computations grows rapidly as dimensions expand. High-dimensional datasets demand advanced algorithms that can efficiently navigate and reduce dimensional space while maintaining accuracy. The interplay between complexity and dimensionality challenges optimization and scalability in machine learning models and data analysis tasks.

Curse of Dimensionality: Challenges and Implications

The curse of dimensionality significantly impacts computational complexity by exponentially increasing the volume of the feature space, leading to sparse data distribution and degraded algorithm performance. High-dimensional datasets require exponentially more computational resources for tasks such as clustering, classification, and optimization, resulting in slower processing times and reduced accuracy. Strategies to mitigate these challenges include dimensionality reduction techniques like principal component analysis (PCA) and manifold learning, which help to preserve meaningful structures while alleviating computational burdens.

Algorithmic Efficiency in High-Dimensional Spaces

Algorithmic efficiency in high-dimensional spaces is critical due to the exponential growth of computational complexity known as the "curse of dimensionality." Techniques such as dimensionality reduction, sparse representations, and approximate nearest neighbor search significantly reduce runtime and memory requirements. Optimizing algorithms for high-dimensional data often involves balancing accuracy with computational cost to maintain scalable performance in machine learning and data analysis tasks.

Dimensionality Reduction Techniques and Their Impact

Dimensionality reduction techniques such as Principal Component Analysis (PCA), t-Distributed Stochastic Neighbor Embedding (t-SNE), and Autoencoders significantly mitigate computational complexity by transforming high-dimensional data into lower-dimensional spaces while preserving essential structures. These methods reduce memory usage and processing time, enabling scalable analysis of large datasets in fields like genomics, image recognition, and natural language processing. Effective dimensionality reduction improves model performance by alleviating the curse of dimensionality, enhancing generalization, and reducing overfitting.

Scalability of Computational Methods

Computational complexity significantly impacts the scalability of algorithms when dealing with high-dimensional data sets. As dimensionality increases, the computational cost often grows exponentially, challenging the feasibility of traditional methods like exhaustive search or brute-force algorithms. Efficient computational techniques such as dimensionality reduction, approximation algorithms, and parallel processing are crucial to manage scalability while maintaining accuracy in high-dimensional spaces.

Trade-Offs Between Accuracy and Computational Cost

High dimensionality in data exponentially increases computational complexity, often leading to longer processing times and greater memory usage. Reducing dimensions through techniques like Principal Component Analysis can lower computational cost but may sacrifice model accuracy and interpretability. Balancing this trade-off requires careful selection of feature reduction methods to maintain essential data characteristics while optimizing resource efficiency.

Real-World Applications in High-Dimensional Data Analysis

Real-world applications in high-dimensional data analysis frequently encounter steep computational complexity due to the exponential growth of data points and features, known as the curse of dimensionality. Techniques such as dimensionality reduction, sparse representation, and efficient indexing algorithms substantially mitigate computational burdens while preserving critical information for tasks like image recognition, genomics, and natural language processing. Optimizing these algorithms to handle scalability and noise robustness remains a focal challenge in leveraging high-dimensional datasets for actionable insights.

Future Directions in Managing Complexity and Dimensionality

Future directions in managing computational complexity and high dimensionality center on developing advanced dimensionality reduction techniques that preserve essential data features while minimizing processing overhead. Integrating machine learning algorithms with adaptive sampling and representation learning promises scalable solutions for complex, high-dimensional datasets. Emphasis on quantum computing and parallel processing architectures also aims to significantly accelerate computations in high-dimensional problem spaces.

Computational Complexity Infographic

libterm.com

libterm.com