Efficient data pipelines streamline the process of collecting, processing, and transferring data across various systems, ensuring timely and accurate insights. Implementing robust data pipelines can significantly enhance your organization's decision-making and operational efficiency. Explore the rest of the article to learn how to build and optimize your data pipeline effectively.

Table of Comparison

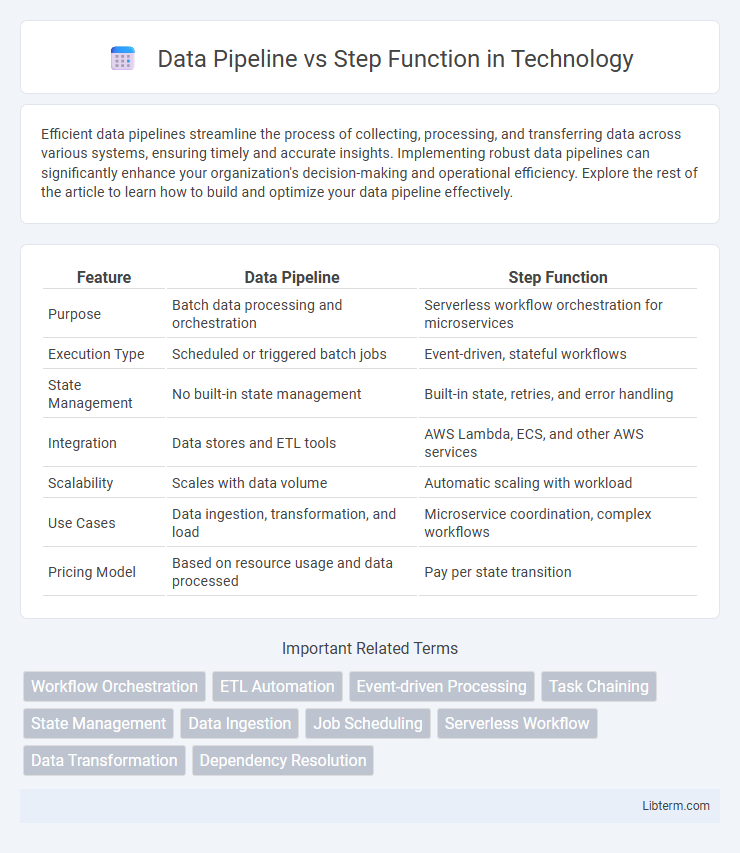

| Feature | Data Pipeline | Step Function |

|---|---|---|

| Purpose | Batch data processing and orchestration | Serverless workflow orchestration for microservices |

| Execution Type | Scheduled or triggered batch jobs | Event-driven, stateful workflows |

| State Management | No built-in state management | Built-in state, retries, and error handling |

| Integration | Data stores and ETL tools | AWS Lambda, ECS, and other AWS services |

| Scalability | Scales with data volume | Automatic scaling with workload |

| Use Cases | Data ingestion, transformation, and load | Microservice coordination, complex workflows |

| Pricing Model | Based on resource usage and data processed | Pay per state transition |

Introduction to Data Pipelines and Step Functions

Data pipelines automate the extraction, transformation, and loading (ETL) of data to enable seamless data flow across different sources and destinations, ensuring real-time or batch processing efficiency. AWS Step Functions coordinate distributed applications using workflows that integrate AWS services, making it easier to build scalable and fault-tolerant processes. Data pipelines primarily focus on moving and transforming data, while step functions orchestrate complex workflows and state machines for application logic execution.

Core Concepts: What is a Data Pipeline?

A data pipeline is a series of automated processes that extract, transform, and load (ETL) data from various sources into a destination for analysis or storage. It ensures the continuous flow and processing of data, enabling real-time or batch data integration across systems. Core components include data ingestion, data processing, and data storage, optimized for scalability and reliability.

Core Concepts: What are Step Functions?

Step Functions are AWS services designed to coordinate components of distributed applications and microservices as workflows. They enable the orchestration of multiple AWS services into serverless workflows using state machines, which simplify complex task sequences with built-in error handling, retries, and parallel execution. Unlike data pipelines focused on data movement and transformation, Step Functions emphasize process orchestration and state management in application workflows.

Key Differences Between Data Pipelines and Step Functions

Data Pipelines primarily manage and automate the process of moving and transforming large volumes of data across various storage and processing services, optimizing data flow for analytics and machine learning use cases. Step Functions orchestrate complex workflows by coordinating multiple AWS services through state machines, allowing for error handling, retries, and branching logic within application processes. Key differences include Data Pipelines' focus on data-centric tasks and scheduling, whereas Step Functions emphasize application orchestration, workflow state management, and integration with diverse AWS service APIs.

Use Cases: When to Use Data Pipelines

Data Pipelines are ideal for orchestrating large-scale data processing workflows involving ETL (Extract, Transform, Load) tasks, batch processing, and data integration from various sources into data lakes or warehouses. They excel in scenarios requiring continuous data ingestion, transformation, and loading for analytics and machine learning pipelines. Step Functions are better suited for managing complex application workflows with conditional branching, human approvals, and event-driven microservices orchestration.

Use Cases: When to Use Step Functions

Step Functions excel in orchestrating complex workflows involving multiple AWS services, making them ideal for use cases requiring state management, error handling, and sequential or parallel task execution. They are preferred when building serverless applications that need visual workflow monitoring, retry capabilities, and clear step transitions, such as order processing, data processing pipelines with conditional branching, and microservices orchestration. Use Step Functions over simple ETL pipelines when application logic demands dynamic decision-making and integration of diverse service operations within a reliable, scalable state machine.

Performance and Scalability Considerations

Data Pipeline offers highly optimized batch processing capabilities ideal for large-scale ETL workloads, delivering consistent performance through parallel data processing and fault-tolerant retries. Step Functions excel in orchestrating complex workflows with fine-grained state management, providing scalable, event-driven execution that dynamically adapts to workload variability. Performance in Data Pipeline is enhanced by efficient data movement and transformation, while Step Functions scale by coordinating distributed services, enabling seamless integration with AWS Lambda for serverless compute elasticity.

Integration and Compatibility with Other Tools

Data Pipeline offers seamless integration with AWS storage solutions like S3, Redshift, and DynamoDB, enabling efficient data movement and transformation tasks within the AWS ecosystem. Step Functions support orchestration of complex workflows by coordinating various AWS services, including Lambda, ECS, and Batch, allowing for flexible automation of distributed applications. Both tools provide robust compatibility with popular cloud and on-premises systems through API and SDK integrations, facilitating scalable and reliable data processing architectures.

Cost and Maintenance Implications

Data pipelines often incur higher ongoing costs due to complex integrations and frequent data transformations requiring robust infrastructure and continuous monitoring. AWS Step Functions offer cost-effective orchestration by charging primarily based on state transitions, reducing expenses for workflow coordination and simplifying maintenance with built-in error handling and retries. Choosing Step Functions can lower operational overhead and streamline updates compared to maintaining custom-coded data pipelines, especially in scalable cloud environments.

Choosing the Right Solution: Data Pipeline vs Step Function

Choosing the right solution between AWS Data Pipeline and AWS Step Functions depends on the complexity and orchestration needs of your workflows. Data Pipeline excels in managing data-driven workflows with built-in support for scheduling and retry mechanisms, ideal for batch processing and ETL tasks. Step Functions offer more granular control over stateful, event-driven workflows with visual monitoring, making them suitable for complex application orchestration and microservices coordination.

Data Pipeline Infographic

libterm.com

libterm.com