Caching improves system performance by temporarily storing frequently accessed data, which reduces retrieval time and server load. Effective caching strategies can speed up websites and improve user experience by minimizing latency. This article explains how caching and memoization work, how they differ, and how each can benefit your applications and systems.

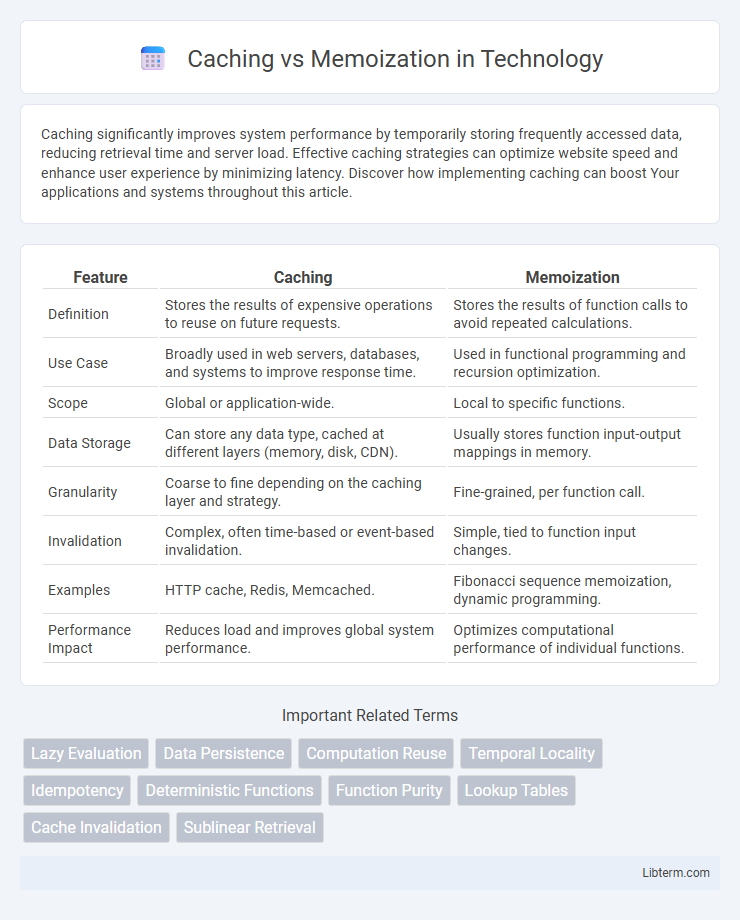

Table of Comparison

| Feature | Caching | Memoization |
|---|---|---|
| Definition | Stores the results of expensive operations to reuse on future requests. | Stores the results of function calls to avoid repeated calculations. |
| Use Case | Broadly used in web servers, databases, and systems to improve response time. | Used in functional programming and recursion optimization. |
| Scope | Global or application-wide. | Local to specific functions. |
| Data Storage | Can store any data type, cached at different layers (memory, disk, CDN). | Usually stores function input-output mappings in memory. |
| Granularity | Coarse to fine depending on the caching layer and strategy. | Fine-grained, per function call. |
| Invalidation | Complex, often time-based or event-based invalidation. | Simple, tied to function input changes. |
| Examples | HTTP cache, Redis, Memcached. | Fibonacci sequence memoization, dynamic programming. |
| Performance Impact | Reduces load and improves global system performance. | Optimizes computational performance of individual functions. |

Introduction to Caching and Memoization

Caching stores the results of expensive function calls or data retrievals to speed up subsequent access by reusing the previously computed output. Memoization is a specific type of caching applied to function calls where the function result is saved based on its input parameters to avoid redundant computations. Both techniques improve performance by reducing latency and computational overhead in software applications.

Defining Caching: Core Concepts

Caching stores frequently accessed data in temporary storage to reduce retrieval time and improve system performance. It involves maintaining copies of data or computational results for quick access, minimizing expensive or time-consuming operations such as database queries or API calls. Key aspects include cache size, eviction policies, and data freshness, which govern how data is stored, replaced, and validated within the cache.
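The three aspects named above (cache size, eviction policy, and data freshness) can be illustrated with a small in-memory cache. This is a minimal sketch rather than a production implementation: the class name `TTLLRUCache` and its parameters are assumptions made for illustration, eviction is least-recently-used, and freshness is enforced with a fixed time-to-live per entry.

```python
import time
from collections import OrderedDict


class TTLLRUCache:
    """Illustrative cache with a size limit, LRU eviction, and per-entry TTL."""

    def __init__(self, max_size=128, ttl_seconds=60.0, clock=time.monotonic):
        self.max_size = max_size        # cache size: maximum number of entries
        self.ttl_seconds = ttl_seconds  # data freshness: lifetime of each entry
        self.clock = clock              # injectable so expiry can be tested without sleeping
        self._entries = OrderedDict()   # key -> (expires_at, value), oldest use first

    def get(self, key, default=None):
        entry = self._entries.get(key)
        if entry is None:
            return default
        expires_at, value = entry
        if self.clock() >= expires_at:
            # The entry is stale, so drop it and report a miss.
            del self._entries[key]
            return default
        self._entries.move_to_end(key)  # mark as most recently used
        return value

    def put(self, key, value):
        self._entries[key] = (self.clock() + self.ttl_seconds, value)
        self._entries.move_to_end(key)
        while len(self._entries) > self.max_size:
            # Eviction policy: remove the least recently used entry.
            self._entries.popitem(last=False)


cache = TTLLRUCache(max_size=2, ttl_seconds=30)
cache.put("a", 1)
cache.put("b", 2)
cache.get("a")     # "a" becomes the most recently used entry
cache.put("c", 3)  # the cache is full, so "b" is evicted
```

Other eviction policies (for example least-frequently-used or first-in-first-out) would change only the bookkeeping in `get` and `put`.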

Understanding Memoization: An Overview

Memoization is a technique used in computing to optimize performance by storing the results of expensive function calls and reusing the cached results when the same inputs occur again. Unlike general caching which can store a variety of data types and is often managed independently, memoization specifically targets function output based on input parameters for deterministic functions. This approach significantly reduces computational overhead in recursive algorithms and dynamic programming by avoiding redundant calculations.
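The idea can be sketched as a small decorator that keeps a lookup table keyed by the function's arguments. The names `memoize` and `slow_square` are hypothetical, and the sketch assumes the wrapped function is deterministic and takes only hashable positional arguments.

```python
import functools


def memoize(func):
    """Cache results of a deterministic function, keyed by its positional arguments."""
    results = {}

    @functools.wraps(func)
    def wrapper(*args):
        if args not in results:
            # First time these inputs are seen: compute and remember the result.
            results[args] = func(*args)
        return results[args]

    wrapper.cache = results  # exposed only so this sketch can be inspected
    return wrapper


calls = {"count": 0}  # counts how often the underlying function really runs


@memoize
def slow_square(n):
    calls["count"] += 1
    return n * n


slow_square(4)  # computed
slow_square(4)  # served from the lookup table; the function body does not run again
```

After these two calls `calls["count"]` is 1, because the second call is answered from the table.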

Key Differences Between Caching and Memoization

Caching stores data from expensive operations to speed up future access across multiple requests or sessions, whereas memoization specifically caches the results of pure function calls during the program's execution to avoid redundant calculations. Caching involves managing storage with policies like expiration and size limits, while memoization uses a function's input as a key in an in-memory lookup table, often without eviction unless a size limit is configured. Memoization is a subset of caching focused on function optimization, making it highly specific and transparent to callers compared to the broader application-level strategy of caching.

Use Cases for Caching

Caching improves application performance by storing frequently accessed data, such as database query results, API responses, or rendered web pages, to reduce latency and server load. It is particularly effective in scenarios involving repetitive data retrieval, high read-to-write ratio workloads, and distributed systems requiring shared state management. Use cases include content delivery networks (CDNs), web application acceleration, and session storage in scalable architectures.

Practical Applications of Memoization

Memoization is widely used in dynamic programming to optimize recursive algorithms by storing previously computed results, significantly reducing time complexity in tasks like Fibonacci sequence calculation and combinatorial problems. In web applications, memoizing functions that wrap API calls or database queries can reduce redundant processing and response times, although results that change over time are usually better served by a cache with expiration. In computer graphics and artificial intelligence, memoization accelerates computations such as pathfinding algorithms and rendering calculations by avoiding repeated evaluations.
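As a brief illustration of the dynamic programming case, the sketch below memoizes two recursive functions with `functools.lru_cache` from the Python standard library. The function `grid_paths` is a hypothetical example of a combinatorial problem, chosen here for illustration.

```python
from functools import lru_cache


@lru_cache(maxsize=None)
def fib(n):
    """n-th Fibonacci number, with fib(0) = 0 and fib(1) = 1."""
    if n < 2:
        return n
    return fib(n - 1) + fib(n - 2)


@lru_cache(maxsize=None)
def grid_paths(rows, cols):
    """Number of right/down paths from one corner of a rows x cols grid of cells to the opposite corner."""
    if rows == 1 or cols == 1:
        return 1
    return grid_paths(rows - 1, cols) + grid_paths(rows, cols - 1)


print(fib(50))             # 12586269025
print(grid_paths(10, 10))  # 48620
```

Without memoization the naive recursive `fib` makes a number of calls that grows exponentially with `n`; with it, each value of `n` is computed once.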

Performance Impacts: Caching vs Memoization

Caching stores precomputed results of expensive operations across multiple requests or sessions, significantly reducing latency and server load for repeated data retrievals. Memoization specifically optimizes recursive or function-based computations by storing return values for identical inputs within a single execution context, improving runtime by avoiding redundant calculations. The performance impact depends on the size and volatility of the data involved: caching excels in data-heavy or I/O-bound scenarios, while memoization provides the largest speedup for CPU-bound, deterministic function calls.

Best Practices for Implementing Caching

Implementing caching effectively requires identifying frequently accessed data and setting appropriate expiration policies to balance freshness and performance. Use cache invalidation strategies such as time-to-live (TTL) and event-triggered refresh to maintain data accuracy while minimizing redundant computations. Employ distributed caching solutions like Redis or Memcached for scalable applications, ensuring thread safety and consistent cache key design to optimize retrieval speed and resource utilization.

Best Practices for Memoization Techniques

Memoization best practices emphasize using pure functions to ensure consistent cache results and leveraging immutable data to avoid side effects. Effective memoization involves setting appropriate cache size limits and cache eviction policies to balance memory usage and performance gains. Utilizing built-in memoization libraries or frameworks optimized for specific languages enhances maintainability and execution speed.
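In Python, for instance, the standard library's `functools.lru_cache` covers several of these points: it is a built-in memoization decorator, `maxsize` bounds memory use with least-recently-used eviction, and it requires hashable arguments, which encourages immutable inputs such as tuples. The function `total_price` below is a hypothetical pure function used only for illustration.

```python
from functools import lru_cache


@lru_cache(maxsize=2)  # keep at most two results; the least recently used is evicted
def total_price(prices_cents, tax_percent):
    """Pure function of immutable inputs: a tuple of prices in cents and a tax rate in percent."""
    return sum(prices_cents) * (100 + tax_percent) // 100


total_price((1000, 2000), 10)  # miss: computed and stored
total_price((1000, 2000), 10)  # hit: returned from the cache
total_price((500,), 10)        # miss
total_price((700,), 10)        # miss; evicts the entry for (1000, 2000)

info = total_price.cache_info()
print(info.hits, info.misses, info.currsize)  # 1 3 2
```

Passing a list instead of a tuple raises `TypeError` because lists are not hashable, so mutable inputs cannot end up as cache keys by accident.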

Choosing the Right Approach: When to Use Caching or Memoization

Caching is ideal for storing data that is expensive to retrieve and remains valid across multiple requests or sessions, such as API responses or database queries. Memoization suits scenarios involving pure functions with deterministic outputs, optimizing performance by saving results of expensive computations within the same execution context. Selecting between caching and memoization depends on factors like data volatility, lifespan, and scope of reuse to achieve efficient resource management and response times.


libterm.com
