Access time determines how quickly a device can retrieve data from memory, and it significantly affects overall system performance. Faster access reduces latency, which enables smoother multitasking and more responsive applications. This article compares access time with miss rate and explains how the two interact.

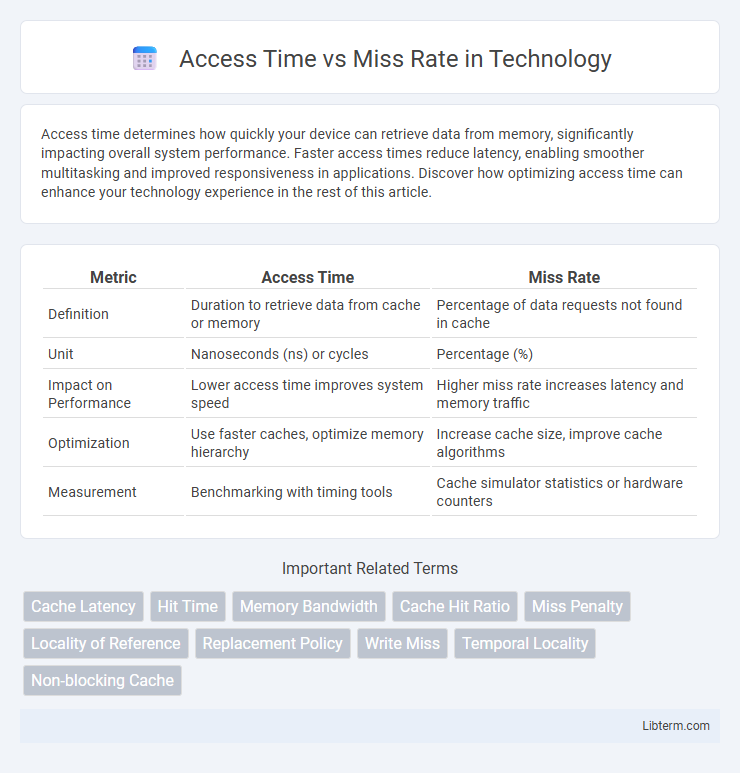

Table of Comparison

| Metric | Access Time | Miss Rate |
|---|---|---|
| Definition | Duration to retrieve data from cache or memory | Percentage of data requests not found in cache |
| Unit | Nanoseconds (ns) or cycles | Percentage (%) |
| Impact on Performance | Lower access time improves system speed | Higher miss rate increases latency and memory traffic |
| Optimization | Use faster caches, optimize memory hierarchy | Increase cache size, improve cache algorithms |
| Measurement | Benchmarking with timing tools | Cache simulator statistics or hardware counters |

Introduction to Access Time and Miss Rate

Access time measures the duration a system takes to retrieve data from a memory hierarchy, impacting overall performance and responsiveness. Miss rate quantifies the frequency at which requested data is not found in the cache, causing delays due to additional memory accesses. Balancing low access time with minimal miss rate is crucial for optimizing system efficiency and throughput.

Defining Access Time in Computing

Access time in computing refers to the duration required for a system to retrieve data from memory or storage after a request is made. It encompasses the delay between initiating a data read or write operation and the moment the data becomes available for use by the processor. Reducing access time is crucial for enhancing system performance, as it directly impacts the efficiency of cache memory and overall computational speed.

Understanding Miss Rate in Memory Systems

Miss rate in memory systems refers to the percentage of memory access attempts that result in a miss, meaning the requested data is not found in the cache and must be fetched from slower main memory. A higher miss rate increases average access time, as fetching data from main memory incurs significant latency compared to accessing cache. Optimizing cache size, block size, and replacement policies directly influences the miss rate, balancing faster access times with efficient memory utilization.
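To make the definition concrete, here is a minimal sketch of a direct-mapped cache simulator that counts misses over an address trace. The function name `direct_mapped_miss_rate`, the trace, and the cache dimensions are all hypothetical choices for illustration; a real cache also has to handle writes, valid bits, and associativity.

```python
def direct_mapped_miss_rate(addresses, num_lines, block_size):
    """Return the miss rate (0.0 to 1.0) of a simple direct-mapped cache.

    addresses  -- iterable of byte addresses, in access order
    num_lines  -- number of cache lines (hypothetical parameter)
    block_size -- bytes per cache line (hypothetical parameter)
    """
    tags = [None] * num_lines  # tag held by each line; None means empty
    misses = 0
    total = 0
    for addr in addresses:
        block = addr // block_size   # memory block containing this byte
        index = block % num_lines    # cache line the block maps to
        tag = block // num_lines     # distinguishes blocks sharing a line
        if tags[index] != tag:       # miss: fetch the block into the line
            misses += 1
            tags[index] = tag
        total += 1
    return misses / total if total else 0.0


# Illustrative trace: a sequential walk over 4096 bytes, 4 bytes at a time.
trace = list(range(0, 4096, 4))
small_blocks = direct_mapped_miss_rate(trace, num_lines=64, block_size=16)
large_blocks = direct_mapped_miss_rate(trace, num_lines=64, block_size=64)
print(f"16-byte blocks: {small_blocks:.2%}")  # 25.00%
print(f"64-byte blocks: {large_blocks:.2%}")  # 6.25%
```

For this purely sequential trace, larger blocks cut the miss rate because each fetch brings in more of the data that is about to be used. Traces with less spatial locality would not benefit in the same way.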

Relationship Between Access Time and Miss Rate

Access time and miss rate exhibit a trade-off in cache memory systems: reducing the miss rate often increases access time because it calls for larger or more complex caches. Larger caches lower the miss rate by storing more data, but they typically take longer to access because a lookup has to drive larger arrays and longer wires and, with higher associativity, compare more tags. Optimizing cache design requires balancing access time and miss rate to achieve the best overall system performance.
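The trade-off can be stated as a break-even condition using the standard average memory access time (AMAT) formula. The symbols are assumptions introduced here: $t$ is the hit time, $m$ the miss rate, and $P$ the miss penalty, taken to be the same for both caches.

$$
\text{AMAT} = t + m \cdot P
$$

A larger cache (subscript $L$) beats a smaller one (subscript $S$) only when its extra hit time is smaller than the miss cycles it saves:

$$
t_L + m_L P < t_S + m_S P \iff t_L - t_S < (m_S - m_L)\,P
$$

As an illustrative case with made-up numbers, if $P = 100$ cycles and the miss rate falls from 5% to 3%, the saving is $(0.05 - 0.03) \cdot 100 = 2$ cycles per access. A hit time that grows by 1 cycle is then worthwhile, while one that grows by 3 cycles is not.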

Impact of Access Time on System Performance

Access time directly affects system performance by determining how quickly data can be retrieved from memory, with lower access times enabling faster processing and reduced latency. A shorter access time minimizes CPU stalling, boosting overall throughput and efficiency in executing instructions. High access times, even with a low miss rate, can degrade performance by increasing wait times, making it essential to balance both access time and miss rate for optimal system operation.

Effects of Miss Rate on Processing Speed

High miss rates in cache memory significantly degrade processing speed because every miss forces an access to main memory, which is substantially slower than the cache. Each miss triggers a memory fetch that can stall the pipeline and reduce instruction throughput. Optimizing cache architecture to lower the miss rate therefore improves processing speed by cutting the number of these slow fetches.
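One common way to quantify this effect is to add memory stall cycles to a processor's base cycles per instruction (CPI). The sketch below assumes a simple in-order model in which every miss stalls the pipeline for the full penalty; the function name and all numbers are hypothetical.

```python
def effective_cpi(base_cpi, accesses_per_instr, miss_rate, miss_penalty):
    """CPI including memory stalls, under a simple blocking-cache model.

    stall cycles per instruction =
        memory accesses per instruction * miss rate * miss penalty (cycles)
    """
    stall_cycles = accesses_per_instr * miss_rate * miss_penalty
    return base_cpi + stall_cycles


# Illustrative values: base CPI of 1.0, 1.5 memory accesses per instruction,
# and a 100-cycle miss penalty.
low_miss = effective_cpi(1.0, 1.5, miss_rate=0.02, miss_penalty=100)
high_miss = effective_cpi(1.0, 1.5, miss_rate=0.05, miss_penalty=100)
print(f"2% miss rate: CPI = {low_miss:.2f}")   # 4.00
print(f"5% miss rate: CPI = {high_miss:.2f}")  # 8.50
```

Under these assumptions, raising the miss rate from 2% to 5% more than doubles the CPI. Out-of-order execution and non-blocking caches can hide part of the penalty, so real processors usually fare better than this model suggests.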

Techniques to Reduce Access Time

Techniques to reduce access time in memory systems include multi-level caches, in which small, fast caches keep frequently accessed data close to the processor while larger, slower levels back them up. Prefetching loads data into the cache before it is requested, which hides memory latency when the prediction is correct. Using fast memory technologies, such as SRAM for caches, and tuning DRAM-based main memory further reduces access delay, though each choice should be weighed against its effect on miss rate.
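The benefit of a second cache level can be estimated by treating the L2 lookup as the L1 miss penalty. This is a minimal sketch with hypothetical function names and made-up latencies; `l2_local_miss_rate` is assumed to be the fraction of L2 accesses that miss, not the fraction of all memory accesses.

```python
def amat_single_level(hit_time, miss_rate, mem_penalty):
    """Average memory access time with one cache in front of main memory."""
    return hit_time + miss_rate * mem_penalty


def amat_two_level(l1_hit, l1_miss_rate, l2_hit, l2_local_miss_rate, mem_penalty):
    """Average memory access time with an L2 cache behind L1."""
    # An L1 miss costs an L2 lookup, plus a memory access if L2 also misses.
    l1_miss_penalty = l2_hit + l2_local_miss_rate * mem_penalty
    return l1_hit + l1_miss_rate * l1_miss_penalty


# Illustrative latencies in cycles.
without_l2 = amat_single_level(hit_time=1, miss_rate=0.05, mem_penalty=100)
with_l2 = amat_two_level(l1_hit=1, l1_miss_rate=0.05,
                         l2_hit=10, l2_local_miss_rate=0.2, mem_penalty=100)
print(f"L1 only:   {without_l2:.2f} cycles")  # 6.00
print(f"L1 and L2: {with_l2:.2f} cycles")     # 2.50
```

With these numbers the L1 hit time is unchanged, yet the average access time drops because most L1 misses are served in 10 cycles instead of 100.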

Strategies to Lower Miss Rate

Cache replacement policies such as Least Recently Used (LRU) and First-In-First-Out (FIFO) decide which block to evict when the cache is full. A policy that matches the workload's access pattern keeps useful data resident and lowers the miss rate, which in turn reduces average access time. Increasing cache size and using multi-level cache hierarchies raise hit rates by keeping more frequently accessed data close to the processor. Prefetching predicts which data will be needed and loads it early, which can significantly decrease cache misses when the predictions are accurate.
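The difference between the two policies can be seen on a small trace. The following sketch simulates a fully associative cache of block IDs under each policy; the function names, the trace, and the capacity are hypothetical and chosen so that one block is reused often.

```python
from collections import OrderedDict, deque


def lru_miss_rate(trace, capacity):
    """Miss rate of a fully associative cache with LRU replacement."""
    cache = OrderedDict()  # keys ordered from least to most recently used
    misses = 0
    for block in trace:
        if block in cache:
            cache.move_to_end(block)       # refresh recency on a hit
        else:
            misses += 1
            if len(cache) >= capacity:
                cache.popitem(last=False)  # evict the least recently used
            cache[block] = True
    return misses / len(trace) if trace else 0.0


def fifo_miss_rate(trace, capacity):
    """Miss rate of a fully associative cache with FIFO replacement."""
    queue = deque()   # blocks in arrival order, oldest on the left
    resident = set()  # fast membership test for cached blocks
    misses = 0
    for block in trace:
        if block not in resident:          # hits do not change FIFO order
            misses += 1
            if len(queue) >= capacity:
                resident.discard(queue.popleft())  # evict the oldest arrival
            queue.append(block)
            resident.add(block)
    return misses / len(trace) if trace else 0.0


# Illustrative trace: block 1 is reused between accesses to new blocks.
trace = [1, 2, 3, 1, 4, 1, 5, 1, 6, 1]
print(f"LRU:  {lru_miss_rate(trace, capacity=3):.0%}")   # 60%
print(f"FIFO: {fifo_miss_rate(trace, capacity=3):.0%}")  # 70%
```

LRU wins here because it keeps the frequently reused block resident, whereas FIFO evicts it once it becomes the oldest arrival. That ranking is not guaranteed: on other access patterns FIFO can match or beat LRU.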

Access Time vs Miss Rate: Comparative Analysis

Access time and miss rate are critical metrics in evaluating cache memory performance, where lower access time improves processor speed while a reduced miss rate minimizes costly main memory accesses. A comparative analysis reveals that optimizing for lower miss rate often leads to increased access time due to larger or more complex cache architectures, creating a trade-off that impacts overall system efficiency. Balancing these parameters involves selecting cache size, associativity, and block size to achieve an optimal point that minimizes average memory access time (AMAT).
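Choosing a design point can be sketched as picking the configuration with the lowest AMAT, computed as hit time plus miss rate times miss penalty. The candidate configurations, their hit times and miss rates, and the 100-cycle penalty below are invented for illustration; real figures would come from simulation or hardware counters.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CacheConfig:
    name: str
    hit_time: float   # cycles to serve a hit
    miss_rate: float  # fraction of accesses that miss, 0.0 to 1.0


def amat(config, miss_penalty):
    """Average memory access time in cycles for one cache configuration."""
    return config.hit_time + config.miss_rate * miss_penalty


# Hypothetical candidates: larger, more associative caches miss less often
# but take longer to access.
candidates = [
    CacheConfig("16 KiB direct-mapped", hit_time=1, miss_rate=0.08),
    CacheConfig("32 KiB 4-way", hit_time=2, miss_rate=0.04),
    CacheConfig("128 KiB 8-way", hit_time=4, miss_rate=0.03),
]
MISS_PENALTY = 100  # cycles, assumed equal for all candidates

for config in candidates:
    print(f"{config.name}: AMAT = {amat(config, MISS_PENALTY):.2f} cycles")

best = min(candidates, key=lambda c: amat(c, MISS_PENALTY))
print(f"Lowest AMAT: {best.name}")  # 32 KiB 4-way
```

With these numbers the middle configuration wins: the smallest cache misses too often, and the largest spends more on hit time than it saves in misses.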

Best Practices for Optimizing Memory Efficiency

Minimizing access time while reducing miss rate is crucial for optimizing memory efficiency in computer systems. Techniques such as larger caches, multi-level caching, and prefetching help keep data retrieval fast while limiting costly cache misses. Using adaptive replacement policies and tuning block size further improves cache performance by raising hit rates and lowering latency.

libterm.com
