Data drift occurs when the statistical properties of the input data change over time, leading to a decline in model performance. Monitoring and addressing data drift is crucial for maintaining the accuracy and relevance of machine learning models in dynamic environments. Explore the rest of the article to learn effective strategies for detecting and mitigating data drift in your systems.

Table of Comparison

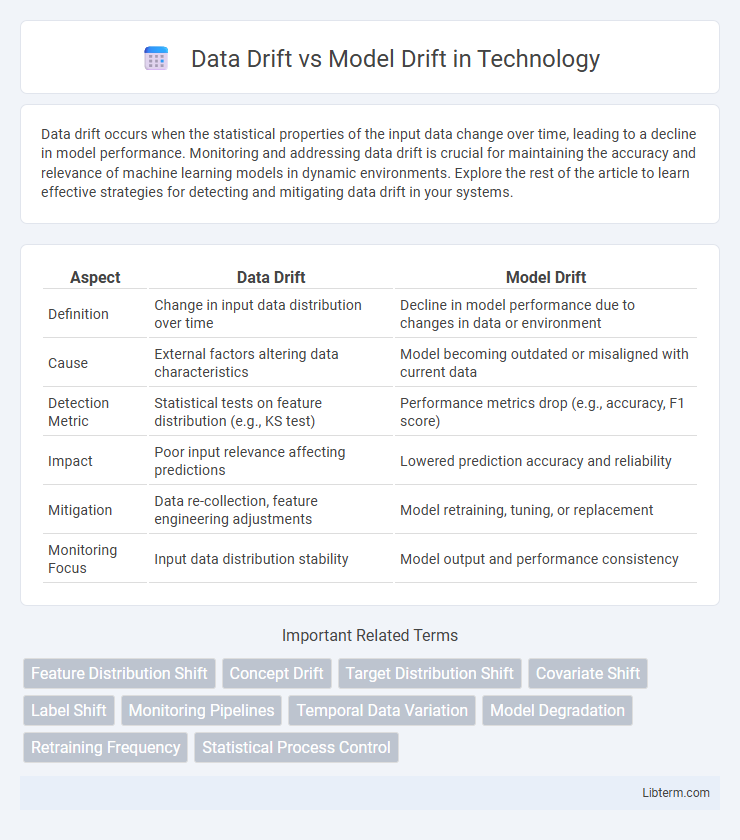

| Aspect | Data Drift | Model Drift |

|---|---|---|

| Definition | Change in input data distribution over time | Decline in model performance due to changes in data or environment |

| Cause | External factors altering data characteristics | Model becoming outdated or misaligned with current data |

| Detection Metric | Statistical tests on feature distribution (e.g., KS test) | Performance metrics drop (e.g., accuracy, F1 score) |

| Impact | Poor input relevance affecting predictions | Lowered prediction accuracy and reliability |

| Mitigation | Data re-collection, feature engineering adjustments | Model retraining, tuning, or replacement |

| Monitoring Focus | Input data distribution stability | Model output and performance consistency |

Understanding Data Drift and Model Drift

Data drift refers to changes in the input data distribution that a machine learning model processes, leading to potential inaccuracies in predictions when the model encounters data that differs from its training set. Model drift occurs when the performance of a predictive model degrades over time due to evolving patterns in data or external factors, requiring periodic retraining or adjustment. Understanding data drift involves monitoring feature distributions and detecting shifts, while understanding model drift requires evaluating model outputs, accuracy metrics, and adapting models to maintain reliability.

Key Differences Between Data Drift and Model Drift

Data drift refers to changes in the input data distribution over time that can degrade model performance, while model drift involves a decline in the model's predictive accuracy due to factors like outdated model parameters or concept drift. Data drift is primarily detected through monitoring shifts in feature statistics and input data patterns, whereas model drift requires evaluation of model outputs, performance metrics, and error rates on new data. Understanding these differences helps in designing effective monitoring strategies to maintain model reliability in production environments.

Causes of Data Drift

Data drift occurs when the statistical properties of input data change over time, often due to evolving user behavior, seasonal trends, or changes in data collection processes. These shifts can cause the model to misinterpret inputs, leading to performance degradation. Unlike model drift, which stems from changes in model parameters or structure, data drift primarily originates from external factors influencing the input data distribution.

Causes of Model Drift

Model drift occurs when the predictive performance of a machine learning model degrades over time due to changes in the underlying data patterns or relationships. Key causes of model drift include evolving real-world phenomena, shifts in user behavior, and the introduction of new, unseen data features that the model was not originally trained on. Unlike data drift, which relates to changes in input data distribution, model drift specifically reflects a loss of model accuracy and relevance because of these dynamic environmental factors.

Impact of Data Drift on Model Performance

Data drift refers to changes in input data distribution over time, significantly impacting model accuracy and reliability. As data patterns shift, models trained on historical data may produce biased or incorrect predictions, leading to degraded performance. Continuous monitoring and recalibration are essential to mitigate the adverse effects of data drift on machine learning models.

Impact of Model Drift on Predictive Accuracy

Model drift significantly degrades predictive accuracy by causing a divergence between the model's learned patterns and the evolving underlying data distribution. Unlike data drift, which reflects changes in input features, model drift directly affects the model's internal parameters and decision boundaries, leading to increased prediction errors over time. Continuous monitoring and retraining are essential to mitigate model drift and maintain robust, reliable predictions in dynamic environments.

Techniques for Detecting Data Drift

Techniques for detecting data drift include statistical tests such as the Kolmogorov-Smirnov test and the Population Stability Index (PSI), which compare the distribution of incoming data against baseline training data. Machine learning-based methods like drift detection using KL divergence or Wasserstein distance provide quantifiable metrics on distribution changes over time. Monitoring feature-level statistics and applying real-time alert systems ensures early identification of shifts impacting model performance.

Methods for Identifying Model Drift

Methods for identifying model drift primarily involve monitoring performance metrics such as accuracy, precision, recall, and AUC over time to detect deviations from expected values. Statistical tests like the Kolmogorov-Smirnov test or the Population Stability Index (PSI) evaluate distributional changes in input features and model predictions, signaling potential drift. Advanced techniques include leveraging explainability methods such as SHAP values to identify shifts in feature importance and using drift detection algorithms like ADWIN or Page-Hinkley to flag significant changes in model behavior.

Strategies to Mitigate Drift in Machine Learning

To mitigate data drift and model drift in machine learning, continuous monitoring of data distribution and model performance is crucial using tools like data validation pipelines and performance dashboards. Implementing retraining schedules based on drift detection metrics such as Population Stability Index (PSI) or Kullback-Leibler divergence helps maintain model accuracy over time. Leveraging adaptive learning techniques and feedback loops that incorporate new labeled data ensures models stay robust against evolving input patterns and changing real-world conditions.

Best Practices for Continuous Drift Monitoring

Continuous drift monitoring involves tracking data drift by comparing incoming data distributions against the training data baseline, using statistical tests like Kolmogorov-Smirnov or Population Stability Index (PSI). Model drift detection requires monitoring performance metrics such as accuracy, precision, or recall over time to identify declines indicating the model's degradation. Implementing automated alerts, retraining triggers, and integrating feature importance analysis ensures responsive and effective management of both data and model drift in production environments.

Data Drift Infographic

libterm.com

libterm.com