Classical computing relies on binary digits (bits), each representing either 0 or 1, and processes them with logical operations to carry out calculations and data processing. It forms the foundation of nearly all modern computers and supports a wide range of applications, from simple arithmetic to advanced simulations. The rest of this article explains how classical computing works, how it compares with quantum computing, and what that means for today's technology.

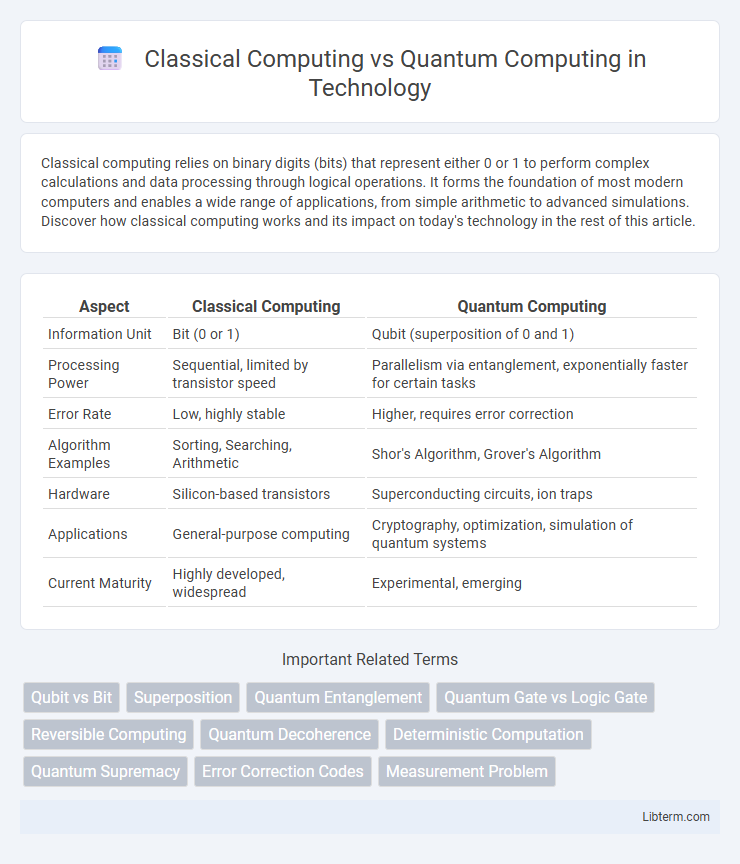

Table of Comparison

| Aspect | Classical Computing | Quantum Computing |
|---|---|---|
| Information Unit | Bit (0 or 1) | Qubit (superposition of 0 and 1) |
| Processing Power | Deterministic logic, sequential or parallel; limited by transistor speed and count | Superposition, entanglement, and interference; large speedups known only for certain tasks |
| Error Rate | Low, highly stable | Higher, requires error correction |
| Algorithm Examples | Sorting, Searching, Arithmetic | Shor's Algorithm, Grover's Algorithm |
| Hardware | Silicon-based transistors | Superconducting circuits, ion traps |
| Applications | General-purpose computing | Cryptography, optimization, simulation of quantum systems |
| Current Maturity | Highly developed, widespread | Experimental, emerging |

Introduction to Classical vs Quantum Computing

Classical computing relies on binary bits that are each either 0 or 1, using transistors to perform logical operations sequentially or in parallel. Quantum computing uses quantum bits, or qubits, which can exist in a superposition of 0 and 1 and can be entangled with one another, so a register of qubits is described by amplitudes over many basis states at once. This fundamental difference in how information is represented and processed opens new possibilities for certain optimization, cryptography, and simulation problems that are impractical for classical machines.

Fundamental Principles of Classical Computing

Classical computing operates on binary bits represented by 0s and 1s, utilizing logic gates such as AND, OR, and NOT to perform deterministic computation. The fundamental principle revolves around Boolean algebra, enabling sequential processing and data manipulation through transistor-based circuits in classical processors. This approach relies on classical physics and electrical engineering, contrasting with quantum computing's use of qubits and quantum phenomena like superposition and entanglement.
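As a rough illustration of how Boolean gates compose into arithmetic, the sketch below builds a ripple-carry adder from AND, OR, and NOT only. The function names (`half_adder`, `full_adder`, `add_bits`) and the 4-bit default width are assumptions chosen for this example, not part of any standard library.

```python
def AND(a: int, b: int) -> int:
    return a & b

def OR(a: int, b: int) -> int:
    return a | b

def NOT(a: int) -> int:
    return 1 - a

def XOR(a: int, b: int) -> int:
    # XOR expressed with the three basic gates: (a OR b) AND NOT (a AND b)
    return AND(OR(a, b), NOT(AND(a, b)))

def half_adder(a: int, b: int) -> tuple[int, int]:
    """Add two bits; return (sum_bit, carry_bit)."""
    return XOR(a, b), AND(a, b)

def full_adder(a: int, b: int, carry_in: int) -> tuple[int, int]:
    """Add two bits plus an incoming carry; return (sum_bit, carry_out)."""
    s1, c1 = half_adder(a, b)
    s2, c2 = half_adder(s1, carry_in)
    return s2, OR(c1, c2)

def add_bits(x: int, y: int, width: int = 4) -> tuple[int, int]:
    """Ripple-carry addition of two non-negative integers.

    Returns (result modulo 2**width, final carry bit).
    """
    carry = 0
    result = 0
    for i in range(width):
        a = (x >> i) & 1  # i-th bit of x
        b = (y >> i) & 1  # i-th bit of y
        s, carry = full_adder(a, b, carry)
        result |= s << i
    return result, carry

if __name__ == "__main__":
    print(add_bits(5, 6))  # fits in 4 bits, so the carry is 0
    print(add_bits(9, 8))  # overflows 4 bits, so the carry is 1
```

Every step is deterministic: the same inputs always produce the same output bits, which is the property the paragraph above describes.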

Core Concepts of Quantum Computing

Quantum computing operates on qubits that exploit superposition and entanglement, so the state of a register is described by complex probability amplitudes rather than by a single definite string of 0s and 1s. Quantum gates manipulate qubits through unitary transformations, and algorithms like Shor's and Grover's arrange these transformations so that amplitudes for wrong answers interfere destructively while amplitudes for correct answers are reinforced. These core concepts underpin quantum speedup and potential breakthroughs in cryptography, optimization, and material simulation, distinguishing the fundamental architecture from classical computing's deterministic logic circuits.
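To make superposition and interference concrete, here is a minimal sketch that simulates a single qubit classically as a pair of complex amplitudes and applies the Hadamard gate to it. The helper names (`apply_gate`, `probabilities`) are assumptions for illustration; real quantum software frameworks expose their own APIs.

```python
import math

# A single-qubit state is a pair of amplitudes (alpha, beta) for |0> and |1>.
ZERO = (1 + 0j, 0 + 0j)

# Hadamard gate as a 2x2 unitary matrix.
INV_SQRT2 = 1 / math.sqrt(2)
H = ((INV_SQRT2, INV_SQRT2),
     (INV_SQRT2, -INV_SQRT2))

def apply_gate(gate, state):
    """Multiply a 2x2 gate matrix by a 2-component state vector."""
    a, b = state
    return (gate[0][0] * a + gate[0][1] * b,
            gate[1][0] * a + gate[1][1] * b)

def probabilities(state):
    """Measurement probabilities are the squared magnitudes of the amplitudes."""
    return tuple(abs(amp) ** 2 for amp in state)

# One Hadamard puts |0> into an equal superposition of |0> and |1>.
plus = apply_gate(H, ZERO)

# A second Hadamard makes the |1> amplitudes cancel (interference),
# returning the qubit to |0>.
back = apply_gate(H, plus)

if __name__ == "__main__":
    print(probabilities(plus))
    print(probabilities(back))
```

The second application is the interesting part: the two paths leading to |1> carry opposite signs and cancel, which has no counterpart in classical probabilistic bits.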

Key Differences Between Classical and Quantum Computing

Classical computing processes information using binary bits represented as 0s or 1s, while quantum computing utilizes quantum bits or qubits, which can exist in superposition states of 0 and 1 simultaneously. Classical computers rely on deterministic logic gates, whereas quantum computers leverage phenomena like entanglement and quantum interference, which for certain problems allow algorithms that scale far better than the best known classical methods. The advantage is problem-specific rather than general: quantum computing is expected to excel at simulating molecular structures, some optimization problems, and breaking certain cryptographic schemes, while offering no known benefit for most everyday workloads.

Processing Power: Bits vs Qubits

Classical computing relies on bits as the fundamental unit of information, each representing either 0 or 1, so a register of n bits holds exactly one of its 2^n possible values at any moment. Quantum computing utilizes qubits that exploit superposition and entanglement, so a register of n qubits is described by 2^n amplitudes, although a measurement still returns only a single n-bit result. Algorithms that exploit this structure enable quantum computers to solve specific complex problems, such as factoring large numbers or simulating molecules, far more efficiently than the best known classical approaches.
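The growth in state size can be made tangible with a little arithmetic. The sketch below counts how many amplitudes a classical simulator would need to store for n qubits; the figure of 16 bytes per amplitude is an assumption (two 64-bit floats per complex number), and the function names are hypothetical.

```python
def statevector_size(n_qubits: int) -> int:
    """Number of complex amplitudes describing an n-qubit register."""
    return 2 ** n_qubits

def statevector_bytes(n_qubits: int, bytes_per_amplitude: int = 16) -> int:
    """Memory needed to store the full state vector classically.

    bytes_per_amplitude=16 assumes double-precision complex numbers.
    """
    return statevector_size(n_qubits) * bytes_per_amplitude

if __name__ == "__main__":
    for n in (10, 20, 30, 40, 50):
        gib = statevector_bytes(n) / 2 ** 30
        print(f"{n} qubits: {statevector_size(n)} amplitudes, {gib:g} GiB")
```

Each added qubit doubles the storage, which is why brute-force classical simulation becomes impractical at a few dozen qubits. This does not mean n qubits store 2^n readable values: only one outcome is obtained per measurement.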

Computational Speed and Efficiency Comparison

Classical computers can run operations sequentially or in parallel, but for some problems the number of steps required by the best known classical algorithms grows very quickly as the input size increases. Quantum algorithms use superposition, entanglement, and interference to reduce that step count for specific tasks: Shor's algorithm factors integers in polynomial time, a superpolynomial improvement over the best known classical methods, while Grover's algorithm searches an unstructured database of N items in roughly √N steps, a quadratic improvement. These gains matter most for tasks such as cryptanalysis and certain search and optimization problems; for many other workloads no quantum advantage is known.
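For unstructured search, Grover's algorithm is known to need on the order of √N oracle calls, compared with about N/2 lookups on average for a classical scan. The sketch below simulates Grover's amplitude updates classically for small N to show the success probability after the usual number of iterations. The function names are assumptions for illustration, and the simulation itself takes time proportional to N per step, so it demonstrates the mathematics rather than any speedup.

```python
import math

def optimal_iterations(n_items: int) -> int:
    """Usual iteration count for one marked item: floor(pi/4 * sqrt(N))."""
    return math.floor(math.pi / 4 * math.sqrt(n_items))

def grover_success_probability(n_items: int, marked: int, iterations: int) -> float:
    """Probability of measuring the marked index after the given iterations."""
    # Start in the uniform superposition over all basis states.
    amps = [1 / math.sqrt(n_items)] * n_items
    for _ in range(iterations):
        # Oracle: flip the sign of the marked item's amplitude.
        amps[marked] = -amps[marked]
        # Diffusion: reflect every amplitude about the mean amplitude.
        mean = sum(amps) / n_items
        amps = [2 * mean - a for a in amps]
    return amps[marked] ** 2

if __name__ == "__main__":
    for n in (8, 64, 1024):
        k = optimal_iterations(n)
        p = grover_success_probability(n, marked=3, iterations=k)
        print(f"N={n}: {k} Grover iterations vs ~{n // 2} classical lookups, "
              f"success probability {p:.3f}")
```

With zero iterations the success probability is just 1/N, the same as a random guess; the oracle and diffusion steps together rotate amplitude toward the marked item.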

Real-World Applications: Classical vs Quantum

Classical computing excels in everyday applications such as data processing, web browsing, and financial transactions due to its reliability and well-established algorithms. Quantum computing shows promise in real-world scenarios like cryptography, drug discovery, and optimization problems by leveraging qubits and quantum superposition for complex computations. Industries such as pharmaceuticals and logistics are beginning to explore quantum algorithms to solve challenges beyond classical capabilities.

Limitations and Challenges of Quantum Computing

Quantum computing faces key limitations, including qubit decoherence, high error rates, and scalability challenges, all of which hinder stable and reliable computation. Many platforms, such as superconducting circuits, require extremely low temperatures, and all current approaches need careful isolation from the environment and sophisticated error-correction techniques to maintain qubit coherence and operational fidelity. These constraints pose significant barriers to practical implementation and wide-scale adoption compared to classical computing's well-established infrastructure and stability.
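The basic idea behind error correction, trading extra physical resources for a lower logical error rate, can be illustrated with a classical analogy: store each bit three times and decode by majority vote. The sketch below computes the exact failure probability of that scheme. It is only an analogy under the assumption of independent bit flips; quantum error correction cannot copy qubits and must also handle phase errors, so real quantum codes are considerably more involved.

```python
from itertools import product

def majority(bits) -> int:
    """Decode three copies of a bit by majority vote."""
    return 1 if sum(bits) >= 2 else 0

def logical_error_rate(p: float) -> float:
    """Exact probability that a 3-copy majority vote decodes wrongly.

    Each copy is assumed to flip independently with probability p.
    """
    total = 0.0
    for flips in product((0, 1), repeat=3):  # 1 means "this copy flipped"
        prob = 1.0
        for f in flips:
            prob *= p if f else (1 - p)
        if majority(flips) == 1:  # two or more flips corrupt the vote
            total += prob
    return total

if __name__ == "__main__":
    for p in (0.2, 0.1, 0.01):
        print(f"physical error {p}: logical error {logical_error_rate(p):.6f}")
```

The result equals 3p²(1 − p) + p³, which is smaller than p whenever p is below 0.5. That threshold behavior is why reducing physical error rates matters so much: redundancy only helps once the underlying components are good enough.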

Future Prospects in Computing Technology

Quantum computing promises large speedups for specific problems by harnessing quantum bits (qubits) capable of superposition and entanglement, which could enable breakthroughs in cryptography, optimization, and complex simulations that are out of reach for classical computing. Classical computing will continue to dominate general-purpose tasks due to its maturity, reliability, and well-established infrastructure, but faces physical limits on further transistor miniaturization and the performance gains that came with it. Integration of quantum algorithms with classical architectures is expected to create hybrid systems, in which a classical computer handles control and general processing while a quantum processor accelerates the subproblems suited to it.

Conclusion: Which Computing Paradigm Leads the Future?

Quantum computing promises substantial speedups for specific complex problems in areas such as cryptography, optimization, and molecular modeling, where it could surpass the capabilities of classical computing. Classical computing remains essential for general-purpose tasks due to its reliability, scalability, and vast existing infrastructure. Integrating quantum processors with classical systems likely represents the future computing paradigm, leveraging the strengths of both technologies.
